stat341 / CM 361: Difference between revisions

m (Conversion script moved page Stat341 / CM 361 to stat341 / CM 361: Converting page titles to lowercase) |

|||

| (234 intermediate revisions by 16 users not shown) | |||

| Line 9: | Line 9: | ||

===[[Generating Random Numbers]] - May 12, 2009=== | ===[[Generating Random Numbers]] - May 12, 2009=== | ||

Generating random numbers in a computational setting presents challenges. A good way to generate random numbers in computational statistics involves analyzing various distributions using computational methods. As a result, the probability distribution of each possible number appears to be uniform (pseudo-random). Outside a computational setting, presenting a uniform distribution is fairly easy (for example, rolling a fair die repetitively to produce a series of random numbers from 1 to 6). | Generating random numbers in a computational setting presents challenges. A good way to generate random numbers in computational statistics involves analyzing various distributions using computational methods. <s>As a result, the probability distribution of each possible number appears to be uniform</s> (pseudo-random). Outside a computational setting, presenting a uniform distribution is fairly easy (for example, rolling a fair die repetitively to produce a series of random numbers from 1 to 6). | ||

We begin by considering the simplest case: the uniform distribution. | We begin by considering the simplest case: the uniform distribution. | ||

| Line 76: | Line 76: | ||

'''Proof''': | '''Proof''': | ||

Recall that, if ''f'' is the pdf corresponding to F, then, | Recall that, if ''f'' is the pdf corresponding to F where f is defined as 0 outside of its domain, then, | ||

:<math>F(x) = P(X \leq x) = \int_{-\infty}^x f(x)</math> | :<math>F(x) = P(X \leq x) = \int_{-\infty}^x f(x)</math> | ||

| Line 113: | Line 113: | ||

:<math> F^{-1}(x) = \frac{-\log(1-y)}{\theta} = \frac{-\log(u)}{\theta} </math> | :<math> F^{-1}(x) = \frac{-\log(1-y)}{\theta} = \frac{-\log(u)}{\theta} </math> | ||

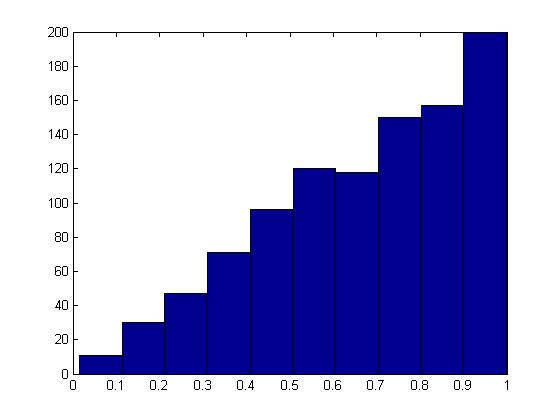

Now we can generate our random sample <math> | Now we can generate our random sample <math>u_1\dots u_n</math> from <math>F(x)</math> by: | ||

:<math>1)\ u_i \sim Unif[0, 1]</math> | :<math>1)\ u_i \sim Unif[0, 1]</math> | ||

| Line 126: | Line 126: | ||

x=-log(1-u)/1; | x=-log(1-u)/1; | ||

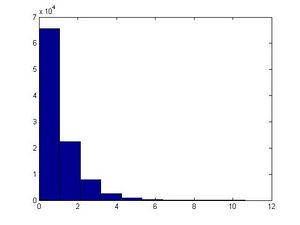

hist(x) | hist(x) | ||

[[Image:HistRandNum.jpg|center|300px|"Histogram showing the expected exponential distribution" ]] | |||
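The inverse transform steps above can also be sketched in Python (used here instead of Matlab purely for illustration). The function name `sample_exponential` and its parameters are assumptions of this sketch, not part of the original notes; it applies <math>x = -\log(1-u)/\theta</math> to <math>u \sim Unif[0,1)</math>.

```python
import math
import random


def sample_exponential(theta, n, rng=None):
    """Draw n samples from Exp(theta) (rate theta) by the inverse transform method."""
    rng = rng or random.Random()
    # rng.random() lies in [0, 1), so 1 - u lies in (0, 1] and the log is finite.
    return [-math.log(1.0 - rng.random()) / theta for _ in range(n)]


if __name__ == "__main__":
    xs = sample_exponential(theta=1.0, n=10000, rng=random.Random(0))
    # The sample mean should be close to the theoretical mean 1/theta.
    print(sum(xs) / len(xs))
```

Using <math>1-u</math> rather than <math>u</math> inside the logarithm avoids evaluating <math>\log(0)</math>, since the generator can return exactly 0 but never exactly 1.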

The major problem with this approach is that we have to find <math>F^{-1}</math> and for many distributions it is too difficult (or impossible) to find the inverse of <math>F(x)</math>. Further, for some distributions it is not even possible to find <math>F(x)</math> (i.e. a closed form expression for the distribution function, or otherwise; even if the closed form expression exists, it's usually difficult to find <math>F^{-1}</math>). | The major problem with this approach is that we have to find <math>F^{-1}</math> and for many distributions it is too difficult (or impossible) to find the inverse of <math>F(x)</math>. Further, for some distributions it is not even possible to find <math>F(x)</math> (i.e. a closed form expression for the distribution function, or otherwise; even if the closed form expression exists, it's usually difficult to find <math>F^{-1}</math>). | ||

| Line 200: | Line 199: | ||

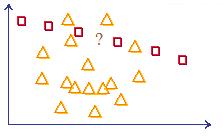

====Acceptance/Rejection Method==== | ====Acceptance/Rejection Method==== | ||

[[Image:edit.JPG|thumb|left|500px|Ali: Some statements are incorrect, inaccurate or misleading. Acceptance-Rejection Method needs to be motivated in more details. ]]<br /><br /><br /><br /><br /><br /> | |||

Suppose we wish to sample from a target distribution <math>f(x)</math> that is difficult to sample from directly. | |||

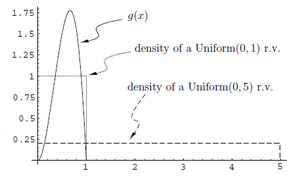

Let <math>g(x)</math> be a distribution that is easy to sample from and satisfies the condition: <br /><br /> | |||

<math>\forall x: f(x) \leq c \cdot g(x)\ </math>, where <math> c \in \Re^+</math> | |||

<br /><br /> | |||

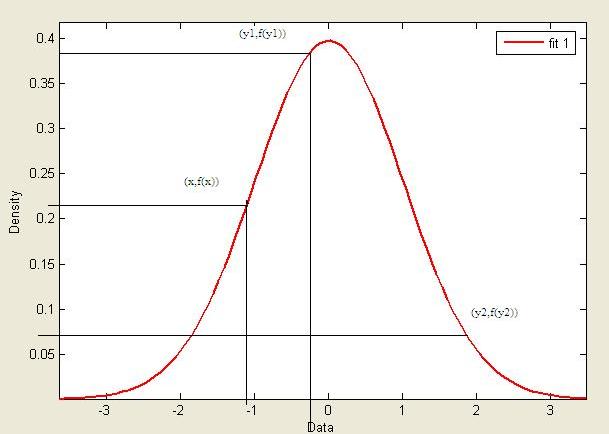

[[Image:fxcgx.JPG|thumb|right|300px|"Graph of the pdf of <math>f(x)</math> (target distribution) and <math> c g(x)</math> (proposal distribution)"]] | |||

Since <math>c \cdot g(x) \geq f(x)</math> for all x, it is possible to obtain samples that follow f(x) by rejecting a proportion of the samples drawn from g(x).<br /><br /> | |||

This proportion depends on how different f(x) and g(x) are and may vary at different values of x. | |||

That is, if <math> f(x) \approx g(x) \text { at } x = x_1 \text { and } f(x) \ll g(x) \text { at } x = x_2 </math>, we will need to reject more samples drawn at <math> \,x_2 </math> than at <math> \,x_1 </math>. | |||

Overall, it can be shown that by accepting samples drawn from g(x) with probability <math> \frac {f(x)}{c \cdot g(x)} </math>, we obtain samples that follow f(x). | |||

<br /><br /> | |||

Consider the example in the graph,<br /> | |||

A sample y = 7 drawn from <math>g(x)</math> lies where <math>f(x)</math> and <math>cg(x)</math> coincide, so it is accepted with probability 1. | |||

A sample y = 9 drawn from <math>g(x)</math> lies where <math>f(x)</math> is far below <math>cg(x)</math>, so it is accepted with a low probability. | |||

'''Proof''' | '''Proof''' | ||

[[Image:edit.JPG|thumb|left|500px|Ali: Proof of what? . ]]<br /><br /><br /><br /><br /><br /> | |||

Show that if points are sampled according to the Acceptance/Rejection method then they follow the target distribution.<br /><br /> | |||

<math> P(X=x|accept) = \frac{P(accept|X=x)P(X=x)}{P(accept)}</math><br /> | |||

<math> | by Bayes' theorem | ||

<math> | <br /><br /> | ||

<math>\begin{align} &P(accept|X=x) = \frac{f(x)}{c \cdot g(x)}\\ &Pr(X=x) = g(x)\frac{}{} \end{align}</math><br /><br />by hypothesis.<br /><br /> | |||

< | |||

Therefore, | Then,<br /> | ||

<math> | <math>\begin{align} P(accept) &= \int^{}_x P(accept|X=x)P(X=x) dx \\ | ||

&= \int^{}_x \frac{f(x)}{c \cdot g(x)} g(x) dx\\ | |||

&= \frac{1}{c} \int^{}_x f(x) dx\\ | |||

&= \frac{1}{c} \end{align} </math> | |||

<br /><br /> | |||

Therefore,<br /> | |||

<math> P(X=x|accept) = \frac{\frac{f(x)}{c\ \cdot g(x)}g(x)}{\frac{1}{c}} = f(x) </math> as required. | |||
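The method and the result <math>P(accept) = 1/c</math> can be checked numerically with a small Python sketch. The target <math>f(x) = 6x(1-x)</math> on [0,1], the uniform proposal <math>g(x) = 1</math> and the constant <math>c = 1.5</math> are choices made here for illustration only; they do not come from the lecture.

```python
import random


def target_pdf(x):
    """Illustrative target density f(x) = 6x(1-x) on [0, 1]; its maximum is 1.5."""
    return 6.0 * x * (1.0 - x)


def accept_reject(n, c=1.5, rng=None):
    """Return n accepted samples and the number of proposals that were needed."""
    rng = rng or random.Random()
    samples, proposals = [], 0
    while len(samples) < n:
        y = rng.random()          # y ~ g, with g = Unif(0,1) so g(y) = 1
        u = rng.random()          # u ~ Unif(0,1)
        proposals += 1
        if u <= target_pdf(y) / (c * 1.0):   # accept with probability f(y) / (c g(y))
            samples.append(y)
    return samples, proposals


if __name__ == "__main__":
    xs, tried = accept_reject(5000, rng=random.Random(0))
    print(sum(xs) / len(xs), len(xs) / tried)  # mean near 0.5, acceptance rate near 1/c
```

The closer <math>c \cdot g</math> hugs <math>f</math>, the smaller <math>c</math> can be and the fewer proposals are wasted.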

'''Procedure (Continuous Case)''' | '''Procedure (Continuous Case)''' | ||

| Line 323: | Line 339: | ||

: <math> \Sigma_{i=1}^t X_i \sim~ Gamma (t, \lambda) </math> | : <math> \Sigma_{i=1}^t X_i \sim~ Gamma (t, \lambda) </math> | ||

Using this property, we can use the Inverse Transform Method to generate samples from an exponential distribution with appropriate variables, and use these to generate a sample following a Gamma distribution. | |||
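A minimal Python sketch of this idea follows, assuming <math>t</math> is a positive integer and <math>\lambda</math> is the rate of each exponential (so the mean of the sum is <math>t/\lambda</math>). The function name `sample_gamma_int` is hypothetical.

```python
import math
import random


def sample_gamma_int(t, lam, rng):
    """One draw from Gamma(t, lam), t a positive integer, as a sum of t Exp(lam) draws."""
    # Each term is an inverse-transform exponential sample: -log(1 - u) / lam.
    return sum(-math.log(1.0 - rng.random()) / lam for _ in range(t))


if __name__ == "__main__":
    rng = random.Random(0)
    draws = [sample_gamma_int(3, 2.0, rng) for _ in range(10000)]
    print(sum(draws) / len(draws))  # should be close to t / lam
```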

| Line 381: | Line 397: | ||

It can also be shown that the joint distribution of r and θ is given by, | It can also be shown that the joint distribution of r and θ is given by, | ||

:<math>\begin{matrix} f(r,\theta) = \frac{1}{2}e^{-\frac{d}{2}} | :<math>\begin{matrix} f(r,\theta) = \frac{1}{2}e^{-\frac{d}{2}}\times\frac{1}{2\pi},\quad d = r^2 \end{matrix} </math> | ||

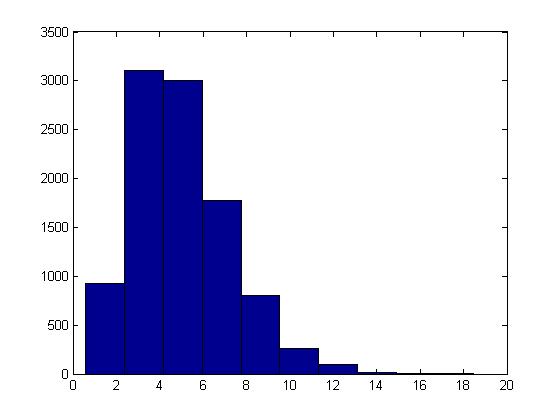

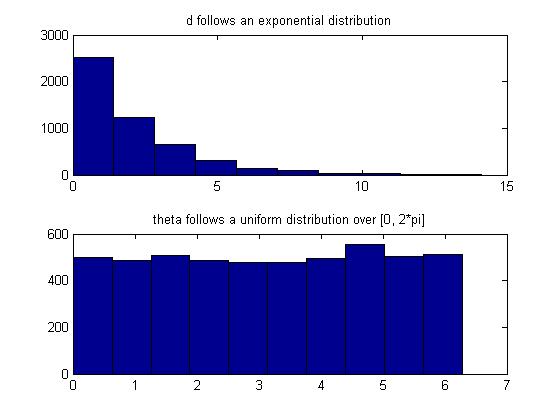

Note that <math> \begin{matrix}f(r,\theta)\end{matrix}</math> consists of two density functions, Exponential and Uniform, so assuming that r and <math>\theta</math> are independent | Note that <math> \begin{matrix}f(r,\theta)\end{matrix}</math> consists of two density functions, Exponential and Uniform, so assuming that r and <math>\theta</math> are independent | ||

<math> \begin{matrix} \Rightarrow d \sim~ Exp(1/2), \theta \sim~ Unif[0,2\pi] \end{matrix} </math> | <math> \begin{matrix} \Rightarrow d \sim~ Exp(1/2), \theta \sim~ Unif[0,2\pi] \end{matrix} </math> | ||
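The decomposition above suggests the following Python sketch of the Box-Muller method: draw <math>d \sim Exp(1/2)</math> by inverse transform, draw <math>\theta \sim Unif[0,2\pi]</math>, and convert from polar to Cartesian coordinates. The function name `box_muller_pair` is an assumption of this sketch.

```python
import math
import random


def box_muller_pair(rng):
    """Return two independent standard normal draws (x, y)."""
    d = -2.0 * math.log(1.0 - rng.random())   # d = r^2 ~ Exp(1/2), i.e. mean 2
    theta = 2.0 * math.pi * rng.random()      # theta ~ Unif[0, 2*pi)
    r = math.sqrt(d)
    return r * math.cos(theta), r * math.sin(theta)


if __name__ == "__main__":
    rng = random.Random(0)
    xs = [box_muller_pair(rng)[0] for _ in range(10000)]
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / (len(xs) - 1)
    print(mean, var)  # should be close to 0 and 1
```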

| Line 428: | Line 444: | ||

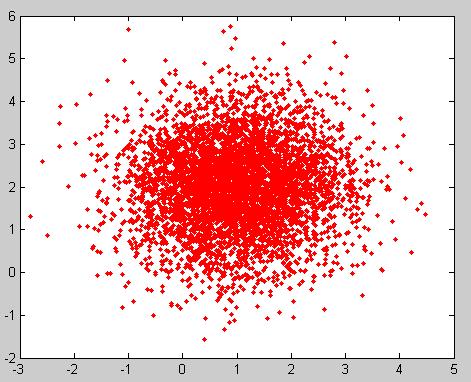

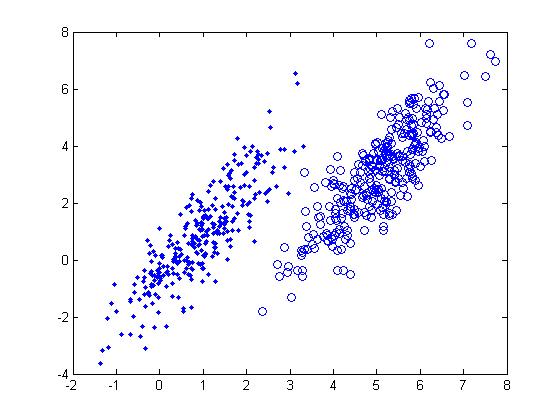

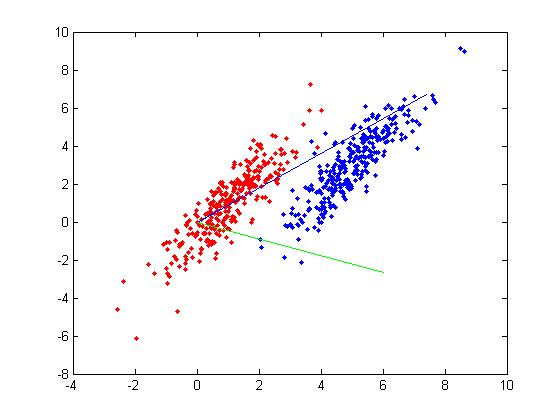

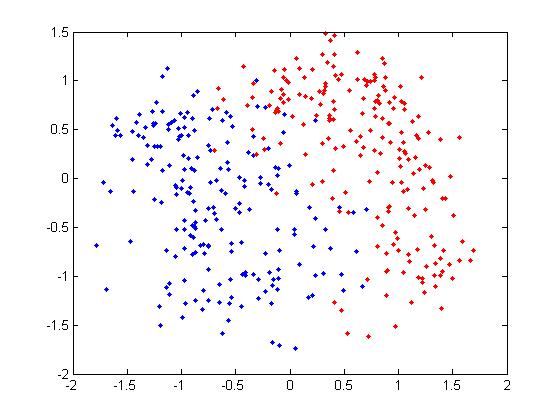

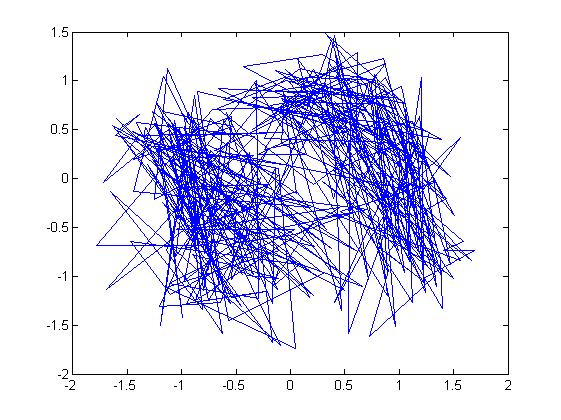

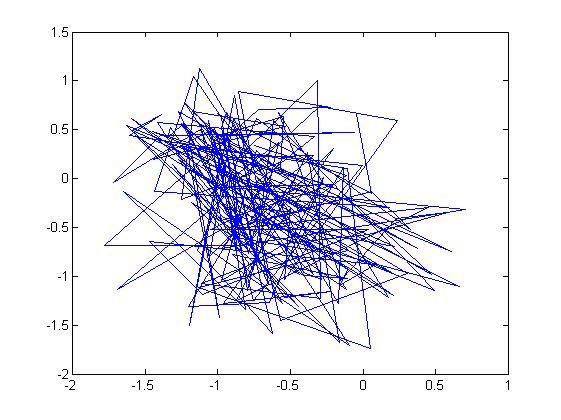

[[File:BothMay19.jpg]] | [[File:BothMay19.jpg]] | ||

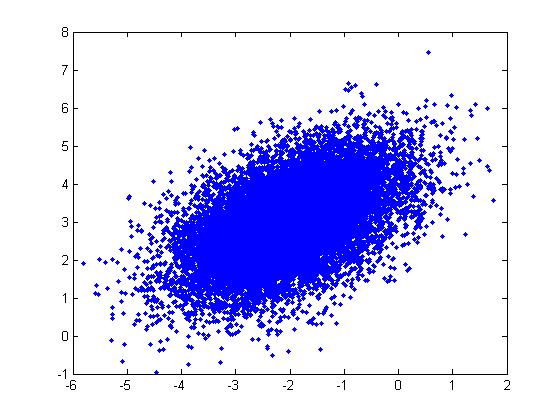

=====Useful Properties (Single and Multivariate)===== | =====Useful Properties (Single and Multivariate Normal)===== | ||

Box-Muller | The Box-Muller method as described above samples only from the standard normal distribution. However, both singlevariate and multivariate normal distributions have properties that allow us to use samples generated by the Box-Muller method to sample any normal distribution in general. | ||

| Line 456: | Line 472: | ||

[[File:MultiVariateMay19.jpg]] | [[File:MultiVariateMay19.jpg]] | ||

Note: In the example above, we | Note: In the example above, we generated the square root of <math>\Sigma</math> using the Cholesky decomposition, | ||

ss = chol(sigma); | ss = chol(sigma); | ||

| Line 467: | Line 483: | ||

===[[Bayesian and Frequentist Schools of Thought]] - May 21, 2009=== | ===[[Bayesian and Frequentist Schools of Thought]] - May 21, 2009=== | ||

In this lecture we will continue to discuss sampling from specific distributions, introduce '''Monte Carlo Integration''', and also talk about the differences between the Bayesian and Frequentist views on probability, along with references to '''Bayesian Inference'''. | |||

== | ====Binomial Distribution==== | ||

A Binomial distribution <math>X \sim~ Bin(n,p) </math> is the sum of <math>n</math> independent Bernoulli trials, each with probability of success <math>p</math> <math>(0 \leq p \leq 1)</math>. For each trial we generate an independent uniform random variable: <math>U_1, \ldots, U_n \sim~ Unif(0,1)</math>. Then X is the number of times that <math>U_i \leq p</math>. In this case if n is large enough, by the central limit theorem, the Normal distribution can be used to approximate a Binomial distribution. | |||

Sampling from Binomial distribution in Matlab is done using the following code: | |||

n=3; | |||

p=0.5; | |||

trials=1000; | |||

X=sum((rand(trials,n))'<=p); | |||

hist(X) | |||

Where the histogram is a Binomial distribution, and for higher <math>n</math>, it would resemble a Normal distribution. | |||
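The same construction can be sketched in Python: count how many of <math>n</math> uniform draws fall at or below <math>p</math>. The function name `sample_binomial` is hypothetical and chosen only for this illustration.

```python
import random


def sample_binomial(n, p, rng):
    """One draw from Bin(n, p) as the number of uniforms U_i with U_i <= p."""
    return sum(1 for _ in range(n) if rng.random() <= p)


if __name__ == "__main__":
    rng = random.Random(0)
    draws = [sample_binomial(3, 0.5, rng) for _ in range(1000)]
    # Tabulate the relative frequency of each outcome 0..3.
    print([draws.count(k) / len(draws) for k in range(4)])
```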

==== | ====Monte Carlo Integration==== | ||

Monte Carlo Integration is a numerical method of approximating the evaluation of integrals by simulation. In this course we will mainly look at three methods for approximating integrals: | |||

# Basic Monte Carlo Integration | |||

# Importance Sampling | |||

# Markov Chain Monte Carlo (MCMC) | |||

====Bayesian VS Frequentists==== | |||

Bayesian | |||

Over the history of statistics, two major schools of thought have emerged, and they continue to disagree about the correct view of probability. These are known as the Bayesian and Frequentist schools of thought, and each holds a different philosophical view of what defines probability. Below are some fundamental differences between them: | |||

'''Frequentist''' | |||

*Probability is '''objective''' and refers to the limit of an event's relative frequency in a large number of trials. For example, a coin with a 50% probability of heads will turn up heads 50% of the time. | |||

*Parameters are all fixed and unknown constants. | |||

*Any statistical procedure is interpreted only in terms of limiting frequencies. For example, a 95% C.I. procedure for a given parameter will produce intervals that contain the true value of the parameter 95% of the time. | |||

'''Bayesian''' | |||

*Probability is '''subjective''' and can be applied to single events based on degree of confidence or belief. For example, a Bayesian can refer to tomorrow's weather as having a 50% chance of rain, whereas this would not make sense to a Frequentist because tomorrow is just one unique event, and cannot be referred to as a relative frequency in a large number of trials. | |||

*Parameters are random variables that have a given distribution, and probability statements can be made about them. | |||

*There is a probability distribution over the parameters, and point estimates are usually obtained by taking either the mode or the mean of that distribution. | |||

====Bayesian Inference==== | |||

==== | '''Example''': | ||

If we have a screen that only displays single digits from 0 to 9, and this screen is split into a 4x5 matrix of pixels, then all together the 20 pixels that make up the screen can be referred to as <math>\vec{X}</math>, which is our data, and the parameter of the data for this case, which we will refer to as <math> \theta </math>, would be a discrete random variable that can take on the values of 0 to 9. In this example, a Bayesian would be interested in finding <math> Pr(\theta=a|\vec{X}=\vec{x})</math>, whereas a Frequentist would be more interested in finding <math> Pr(\vec{X}=\vec{x}|\theta=a)</math> | |||

=====Bayes' Rule===== | |||

:<math>f(\theta|X) = \frac{f(X | \theta)\, f(\theta)}{f(X)}.</math> | |||

Note: In this case <math>f (\theta|X)</math> is referred to as '''posterior''', <math>f (X | \theta)</math> as '''likelihood''', <math>f (\theta)</math> as '''prior''', and <math>f (X)</math> as the '''marginal''', where <math>\theta</math> is the parameter and <math>X</math> is the observed variable. | |||

:<math> | |||

'''Procedure in Bayesian Inference''' | |||

*First choose a probability distribution as the prior, which represents our beliefs about the parameters. | |||

*Then choose a probability distribution for the likelihood, which represents our beliefs about the data. | |||

*Lastly compute the posterior, which represents an update of our beliefs about the parameters after having observed the data. | |||

As mentioned before, for a Bayesian, finding point estimates usually involves finding the mode or the mean of the parameter's distribution. | |||

''' | '''Methods''' | ||

*Mode: <math>\theta = \arg\max_{\theta} f(\theta|X) \gets</math> value of <math>\theta</math> that maximizes <math>f(\theta|X)</math> | |||

*Mean: <math> \bar\theta = \int^{}_\theta \theta \cdot f(\theta|X)d\theta</math> | |||

If it is the case that <math>\theta</math> is high-dimensional, and we are only interested in one of the components of <math>\theta</math>, for example, we want <math>\theta_1</math> from <math> \vec{\theta}=(\theta_1,\dots,\theta_n)</math>, then we would have to calculate the integral: <math>\int^{} \int^{} \dots \int^{}f(\theta|X)d\theta_2d\theta_3 \dots d\theta_n </math> | |||

This sort of calculation is usually very difficult or not feasible to compute, and thus we would need to do it by simulation. | |||

'''Note''': | |||

#<math>f(X)=\int^{}_\theta f(X | \theta)f(\theta) d\theta</math> is not a function of <math>\theta</math>, and is called the '''Normalization Factor''' | |||

#Therefore, since <math>f(X)</math> does not depend on <math>\theta</math>, the posterior is proportional to the likelihood times the prior: <math>f(\theta|X)\propto f(X | \theta)f(\theta)</math> | |||

==[[Monte Carlo Integration]] - May 26, 2009== | |||

Today's lecture completes the discussion on the Frequentists and Bayesian schools of thought and introduces '''Basic Monte Carlo Integration'''.<br><br> | |||

====Frequentist vs Bayesian Example - Estimating Parameters==== | |||

Estimating parameters of a univariate Gaussian: | |||

Frequentist: <math>f(x|\theta)=\frac{1}{\sqrt{2\pi}\sigma}e^{-\frac{1}{2}(\frac{x-\mu}{\sigma})^2}</math><br> | |||

Bayesian: <math>f(\theta|x)=\frac{f(x|\theta)f(\theta)}{f(x)}</math> | |||

== | =====Frequentist Approach===== | ||

Let <math>X^N</math> denote <math>(x_1, x_2, ..., x_N)</math>. Using the Maximum Likelihood Estimation approach for estimating parameters we get:<br> | |||

:<math>L(X^N; \theta) = \prod_{i=1}^N \frac{1}{\sqrt{2\pi}\sigma}e^{-\frac{1}{2}(\frac{x_i- \mu} {\sigma})^2}</math> | |||

:<math>l(X^N; \theta) = \sum_{i=1}^N \left[ -\frac{1}{2}\log (2\pi) - \log(\sigma) - \frac{1}{2} \left(\frac{x_i- \mu}{\sigma}\right)^2 \right] </math> | |||

:<math>\frac{dl}{d\mu} = \frac{1}{\sigma^2}\displaystyle\sum_{i=1}^N(x_i-\mu)</math> | |||

Setting <math>\frac{dl}{d\mu} = 0</math> we get | |||

:<math>\displaystyle\sum_{i=1}^Nx_i = \displaystyle\sum_{i=1}^N\mu</math> | |||

:<math>\displaystyle\sum_{i=1}^Nx_i = N\mu \rightarrow \mu = \frac{1}{N}\displaystyle\sum_{i=1}^Nx_i</math><br> | |||

==== | =====Bayesian Approach===== | ||

Assuming <math>\sigma</math> is known, so that <math>\theta = \mu</math>, and the prior on <math>\mu</math> is Gaussian: | |||

:<math>P(\theta) = \frac{1}{\sqrt{2\pi}\tau}e^{-\frac{1}{2}(\frac{\mu-\mu_0}{\tau})^2}</math> | |||

:<math>\ | :<math>f(\theta|x) \propto \left[\prod_{i=1}^N \frac{1}{\sqrt{2\pi}\sigma}e^{-\frac{1}{2}(\frac{x_i-\mu}{\sigma})^2}\right] \frac{1}{\sqrt{2\pi}\tau}e^{-\frac{1}{2}(\frac{\mu-\mu_0}{\tau})^2}</math> | ||

By completing the square in <math>\mu</math> we conclude that the posterior is Gaussian as well: | |||

:<math>f(\theta|x)=\frac{1}{\sqrt{2\pi}\tilde{\sigma}}e^{-\frac{1}{2}(\frac{\mu-\tilde{\mu}}{\tilde{\sigma}})^2}</math> | |||

Where | |||

:<math>\tilde{\mu} = \frac{\frac{N}{\sigma^2}}{{\frac{N}{\sigma^2}}+\frac{1}{\tau^2}}\bar{x} + \frac{\frac{1}{\tau^2}}{{\frac{N}{\sigma^2}}+\frac{1}{\tau^2}}\mu_0</math> | |||

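The posterior mean <math>\tilde{\mu}</math> is a weighted average of the sample mean <math>\bar{x}</math> and the prior mean <math>\mu_0</math>, so in general it differs from the MLE. | |||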

Note that <math>\displaystyle\lim_{N\to\infty}\tilde{\mu} = \bar{x}</math>. Also note that when <math>N = 0</math> we get <math>\tilde{\mu} = \mu_0</math>. | |||
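The formula for <math>\tilde{\mu}</math> and its two limiting cases can be checked with a short Python sketch. The function name `posterior_mean` and its argument names are assumptions made here; <math>\sigma</math> is treated as known.

```python
def posterior_mean(xs, sigma, mu0, tau):
    """Posterior mean of mu for Gaussian data (known sigma) and a N(mu0, tau^2) prior."""
    n = len(xs)
    if n == 0:
        return mu0                      # no data: the posterior mean is the prior mean
    xbar = sum(xs) / n
    w_data = n / sigma ** 2             # weight N / sigma^2 on the sample mean
    w_prior = 1.0 / tau ** 2            # weight 1 / tau^2 on the prior mean
    return (w_data * xbar + w_prior * mu0) / (w_data + w_prior)


if __name__ == "__main__":
    print(posterior_mean([1.0, 2.0, 3.0], sigma=1.0, mu0=0.0, tau=1.0))
```

With three observations averaging 2, unit variances and prior mean 0, the weights are 3 and 1, so the result is pulled from 2 toward 0.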

====Basic Monte Carlo Integration==== | |||

Although it is almost impossible to find a precise definition of "Monte Carlo Method", the method is widely used and has numerous descriptions in articles and monographs. As an interesting fact, the term '''Monte Carlo''' is claimed to have been first used by Ulam and von Neumann as a Los Alamos code word for the stochastic simulations they applied to building better atomic bombs. ''Stochastic simulation'' refers to a random process in which its future evolution is described by probability distributions (counterpart to a deterministic process), and these simulation methods are known as ''Monte Carlo methods''. [Stochastic process, Wikipedia]. The following example (external link) illustrates a Monte Carlo Calculation of Pi: [http://www.chem.unl.edu/zeng/joy/mclab/mcintro.html] | |||


We start with a simple example: | |||

:<math>\begin{align}I &= \displaystyle\int_a^b h(x)\,dx | |||

&= \displaystyle\int_a^b w(x)f(x)\,dx \end{align}</math> | |||

where | |||

:<math>\displaystyle w(x) = h(x)(b-a)</math> | |||

:<math>f(x) = \frac{1}{b-a} \rightarrow</math> the p.d.f. is Unif<math>(a,b)</math> | |||

:<math>I = E_f[w(x)] \approx \hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^Nw(x_i)</math> | |||

If <math>x_i \sim~ Unif(a,b)</math> then by the '''Law of Large Numbers''' <math>\frac{1}{N}\displaystyle\sum_{i=1}^Nw(x_i) \rightarrow \displaystyle\int w(x)f(x)\,dx = E_f[w(x)]</math> | |||

=====Process===== | |||

Given <math>I = \displaystyle\int^b_ah(x)\,dx</math> | |||

# <math>\begin{matrix} w(x) = h(x)(b-a)\end{matrix}</math> | |||

# <math> \begin{matrix} x_1, x_2, ..., x_N \sim UNIF(a,b)\end{matrix}</math> | |||

# <math>\hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^Nw(x_i)</math> | |||

From this we can compute other statistics, such as | |||

# <math> SE=\frac{s}{\sqrt{N}}</math> where <math>s^2=\frac{\sum_{i=1}^{N}(Y_i-\hat{I})^2 }{N-1} </math> with <math> \begin{matrix}Y_i=w(x_i)\end{matrix}</math> | |||

# <math>\begin{matrix} 1-\alpha \end{matrix}</math> CI's can be estimated as <math> \hat{I}\pm Z_\frac{\alpha}{2}\times SE</math> | |||
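The process, together with the standard error and a 95% interval, can be sketched in Python as follows. The function name `mc_integrate`, the fixed value <math>Z_{\alpha/2} = 1.96</math> and the test integrand <math>h(x) = x^3</math> on [0,1] (exact value 1/4) are assumptions of this sketch.

```python
import math
import random


def mc_integrate(h, a, b, n, rng):
    """Basic Monte Carlo estimate of the integral of h over [a, b].

    Returns (estimate, standard error, approximate 95% confidence interval).
    """
    # Y_i = w(x_i) = h(x_i) * (b - a) with x_i ~ Unif(a, b)
    ys = [h(a + (b - a) * rng.random()) * (b - a) for _ in range(n)]
    est = sum(ys) / n
    s2 = sum((y - est) ** 2 for y in ys) / (n - 1)   # sample variance of the Y_i
    se = math.sqrt(s2 / n)
    z = 1.96                                          # normal quantile for alpha = 0.05
    return est, se, (est - z * se, est + z * se)


if __name__ == "__main__":
    print(mc_integrate(lambda x: x ** 3, 0.0, 1.0, 10000, random.Random(0)))
```

Because the standard error shrinks like <math>1/\sqrt{N}</math>, halving the width of the interval requires roughly four times as many samples.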

'''Example 1''' | |||

Find <math> E[\sqrt{x}]</math> for <math>\begin{matrix} f(x) = e^{-x}\end{matrix} </math> | |||

# We need to draw from <math>\begin{matrix} f(x) = e^{-x}\end{matrix} </math> | |||

# <math>\hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^Nw(x_i)</math> | |||

This example can be illustrated in Matlab using the code below: | |||

==== | u=rand(100,1) | ||

x=-log(u) | |||

h= x.^ .5 | |||

mean(h) | |||

%The value obtained using the Monte Carlo method | |||

F = @(x) sqrt(x).*exp(-x) | |||

quad(F,0,50) | |||

%The value of the integral computed by numerical quadrature in Matlab | |||

'''Example 2''' | |||

Find <math> I = \displaystyle\int^1_0h(x)\,dx, h(x) = x^3 </math> | |||

# <math> \displaystyle I = \left[ \frac{x^4}{4} \right]_0^1 = \frac{1}{4} </math> (exact value, for comparison) | |||

# <math>\displaystyle w(x) = x^3(1-0)</math> | |||

# <math> X_i \sim~Unif(0,1)</math> | |||

# <math>\hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^N(x_i^3)</math> | |||

This example can be illustrated in Matlab using the code below: | |||

x = rand(1000,1) | |||

mean(x.^3) | |||

''' | '''Example 3''' | ||

To estimate an infinite integral | |||

such as <math> I = \displaystyle\int^\infty_5 h(x)\,dx, h(x) = 3e^{-x} </math> | |||

# | # Substitute <math> y=\frac{1}{x-5+1} = \frac{1}{x-4} \Rightarrow dy=-\frac{1}{(x-4)^2}dx \Rightarrow dy=-y^2dx </math>, so <math>x = \frac{1}{y}+4</math> and the limits <math>x=5,\ x\to\infty</math> become <math>y=1,\ y\to 0</math> | ||

# | # <math> I = \displaystyle\int^0_1 \frac{3e^{-(\frac{1}{y}+4)}}{-y^2}\,dy = \displaystyle\int^1_0 \frac{3e^{-(\frac{1}{y}+4)}}{y^2}\,dy </math> | ||

# | # <math> w(y) = \frac{3e^{-(\frac{1}{y}+4)}}{y^2}(1-0)</math> | ||

# <math>\ | # <math> Y_i \sim~Unif(0,1)</math> | ||

# <math>\hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^N(\frac{3e^{-(\frac{1}{y_i}+4)}}{y_i^2})</math> | |||
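A Python sketch of this example follows. It uses the substitution <math>y = 1/(x-4)</math>, under which the integrand on (0,1] is <math>3e^{-(1/y+4)}/y^2</math>, and compares the estimate with the exact value <math>3e^{-5}</math>. The function names are hypothetical.

```python
import math
import random


def w(y):
    """Transformed integrand on (0, 1]: 3 * exp(-(1/y + 4)) / y^2."""
    return 3.0 * math.exp(-(1.0 / y + 4.0)) / (y * y)


def estimate_tail_integral(n, rng):
    """Monte Carlo estimate of the integral of 3*exp(-x) over [5, infinity)."""
    # 1 - rng.random() lies in (0, 1], which avoids dividing by zero at y = 0.
    return sum(w(1.0 - rng.random()) for _ in range(n)) / n


if __name__ == "__main__":
    print(estimate_tail_integral(10000, random.Random(0)), 3.0 * math.exp(-5.0))
```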

==[[Importance Sampling and Monte Carlo Simulation]] - May 28, 2009== | |||


During this lecture we covered two more examples of Monte Carlo simulation, finishing that topic, and began talking about Importance Sampling. | |||

====Binomial Probability Monte Carlo Simulations==== | |||

=====Example 1:===== | |||

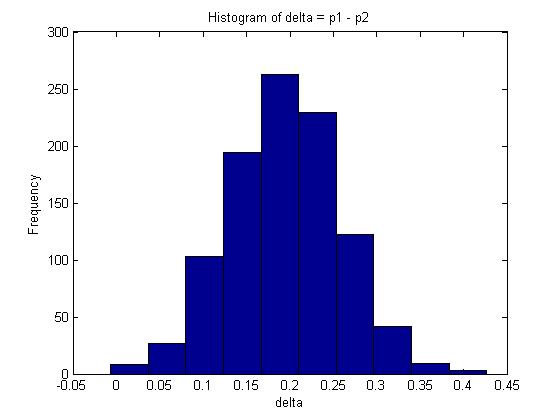

You are given two independent Binomial distributions with probabilities <math>\displaystyle p_1\text{, }p_2</math>. Using a Monte Carlo simulation, approximate the value of <math>\displaystyle \delta</math>, where <math>\displaystyle \delta = p_1 - p_2</math>.<br> | |||

:<math>\displaystyle X \sim BIN(n, p_1)</math>; <math>\displaystyle Y \sim BIN(m, p_2)</math>; <math>\displaystyle \delta = p_1 - p_2</math><br><br> | |||

So <math>\displaystyle f(p_1, p_2 | x,y) = \frac{f(x, y|p_1, p_2)f(p_1,p_2)}{f(x,y)}</math> where the prior <math>\displaystyle f(p_1,p_2)</math> is taken to be flat (uniform) and the posterior expected value of <math>\displaystyle \delta</math> is as follows:<br> | |||

:<math>\displaystyle E(\delta) = \int\int\delta f(p_1,p_2|X,Y)\,dp_1dp_2</math><br><br> | |||

Since X, Y are independent, we can split the conditional probability distribution:<br> | |||

:<math>\displaystyle f(p_1,p_2|X,Y) \propto f(p_1|X)f(p_2|Y)</math><br><br> | |||

We need the posterior distributions of <math>\displaystyle p_1, p_2</math> to draw samples from. With a flat prior, the posterior of <math>\displaystyle p_1</math> given <math>x</math> successes in <math>n</math> trials is proportional to the Binomial likelihood <math>\displaystyle p_1^x(1-p_1)^{n-x}</math>, which is the kernel of a Beta density (and similarly for <math>\displaystyle p_2</math>). Therefore<br> | |||

:<math>\displaystyle p_1 | X \sim \text{Beta}(x+1, n-x+1)</math> and <math>\displaystyle p_2 | Y \sim \text{Beta}(y+1, m-y+1)</math>, where<br> | |||

:<math>\displaystyle \text{Beta}(\alpha,\beta) = \frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)\Gamma(\beta)}p^{\alpha-1}(1-p)^{\beta-1}</math><br><br> | |||

''' | '''Process:''' | ||

# Draw N samples for <math>\displaystyle p_1</math> and <math>\displaystyle p_2</math>: <math>\displaystyle (p_1,p_2)^{(1)}</math>, <math>\displaystyle (p_1,p_2)^{(2)}</math>, ..., <math>\displaystyle (p_1,p_2)^{(N)}</math>; | |||

:<math>\displaystyle | # Compute <math>\displaystyle \delta^{(i)} = p_1^{(i)} - p_2^{(i)}</math> in order to get N values for <math>\displaystyle \delta</math>; | ||

# <math>\displaystyle \hat{\delta}=\frac{1}{N}\sum_{\forall i}\delta^{(i)}</math>.<br><br> | |||

'''Matlab Code:'''<br> | |||

:The Matlab code for recreating the above example is as follows: | |||

n=100; %number of trials for X | |||

m=100; %number of trials for Y | |||

x=80; %number of successes for X trials | |||

y=60; %number of successes for y trials | |||

p1=betarnd(x+1, n-x+1, 1, 1000); | |||

p2=betarnd(y+1, m-y+1, 1, 1000); | |||

delta=p1-p2; | |||

mean(delta) | |||

The mean in this example is given by 0.1938. | |||

A 95% confidence interval for <math>\delta</math> is represented by the interval between the 2.5% and 97.5% quantiles which covers 95% of the probability distribution. In Matlab, this can be calculated as follows: | |||

q1=quantile(delta,0.025); | |||

q2=quantile(delta,0.975); | |||

The interval is approximately <math> 95\%\ \text{CI} \approx (0.06606, 0.32204) </math> | |||

The histogram of delta is:<br> | |||

[[File:Delta_hist.jpg]] | |||

Note: In this case, we can also find <math>E(\delta)</math> analytically since | |||

<math>E(\delta) = E(p_1 - p_2) = E(p_1) - E(p_2) = \frac{x+1}{n+2} - \frac{y+1}{m+2} \approx 0.1961 </math>. Compare this with the maximum likelihood estimate for <math>\delta</math>: <math>\frac{x}{n} - \frac{y}{m} = 0.2</math>. | |||
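The same simulation can be sketched in Python with the standard library's `random.betavariate`. The function name `simulate_delta` and the simple order-statistic quantile used for the interval are assumptions of this sketch; the data (n = m = 100, x = 80, y = 60) are those of the example.

```python
import random


def simulate_delta(x, n, y, m, n_samples, rng):
    """Draw delta = p1 - p2 with p1 ~ Beta(x+1, n-x+1) and p2 ~ Beta(y+1, m-y+1)."""
    return [
        rng.betavariate(x + 1, n - x + 1) - rng.betavariate(y + 1, m - y + 1)
        for _ in range(n_samples)
    ]


def summarize(deltas):
    """Return the mean and a crude (2.5%, 97.5%) quantile interval."""
    s = sorted(deltas)
    k = len(s)
    return sum(s) / k, (s[int(0.025 * k)], s[int(0.975 * k)])


if __name__ == "__main__":
    deltas = simulate_delta(80, 100, 60, 100, 10000, random.Random(0))
    print(summarize(deltas))  # mean should be near (x+1)/(n+2) - (y+1)/(m+2)
```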

===== | =====Example 2:===== | ||

Bayesian Inference for Dose Response | |||

We conduct an experiment by giving rats one of ten possible doses of a drug, where each subsequent dose is more lethal than the previous one: | |||

<math> | :<math>\displaystyle x_1<x_2<...<x_{10}</math><br> | ||

<math> | For each dose <math>\displaystyle x_i</math> we test n rats and observe <math>\displaystyle Y_i</math>, the number of rats that die. Therefore,<br> | ||

where <math>\displaystyle | :<math>\displaystyle Y_i \sim~ BIN(n, p_i)</math>.<br> | ||

We can assume that the probability of death grows with the concentration of drug given, i.e. <math>\displaystyle p_1<p_2<...<p_{10}</math>. Estimate the dose at which the animals have at least 50% chance of dying.<br> | |||

:Let <math>\displaystyle \delta=x_j</math> where <math>\displaystyle j=min\{i|p_i\geq0.5\}</math> | |||

:We are interested in <math>\displaystyle \delta</math> since any higher concentrations are known to have a higher death rate.<br><br> | |||

'''Solving this analytically is difficult:''' | |||

:<math>\displaystyle \delta = g(p_1, p_2, ..., p_{10})</math> where g is an unknown function | |||

:<math>\displaystyle \hat{\delta} = \int \int..\int_A \delta f(p_1,p_2,...,p_{10}|Y_1,Y_2,...,Y_{10})\,dp_1dp_2...dp_{10}</math><br> | |||

::<math>\displaystyle = \ | :: where <math>\displaystyle A=\{(p_1,p_2,...,p_{10})|p_1\leq p_2\leq ...\leq p_{10} \}</math><br><br> | ||

'''Process: Monte Carlo'''<br> | |||

We assume that | |||

# Draw <math>\displaystyle p_i \sim~ BETA(y_i+1, n-y_i+1)</math> | |||

# Keep sample only if it satisfies <math>\displaystyle p_1\leq p_2\leq ...\leq p_{10}</math>, otherwise discard and try again. | |||

# Compute <math>\displaystyle \delta</math> as the smallest dose <math>\displaystyle x_j</math> whose sampled <math>\displaystyle p_j</math> is at least 0.5. | |||

# Repeat the process N times to get N estimates <math>\displaystyle \delta_1, \delta_2, ..., \delta_N </math>. | |||

# <math>\displaystyle \bar{\delta} = \frac{\displaystyle\sum_{\forall i} \delta_i}{N}</math>. | |||

For instance, for each dose level <math>X_i</math>, for <math>1 \leq i \leq 10</math>, 10 rats are used and the number that die is observed to be <math>Y_i</math>, where <math>Y_1 = 4, Y_2 = 3, </math>etc. | |||
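The Monte Carlo process above can be sketched in Python. Everything concrete in this sketch is hypothetical: it uses five made-up dose levels and death counts rather than the ten doses of the lecture (whose full data are not listed here), and it discards any draw in which no <math>p_i</math> reaches 0.5, which is a choice made for this illustration.

```python
import random


def sample_delta(doses, deaths, n_rats, n_samples, rng):
    """Posterior draws of delta, the smallest dose with death probability >= 0.5."""
    deltas = []
    while len(deltas) < n_samples:
        # Step 1: draw each p_i from its Beta(y_i + 1, n - y_i + 1) posterior.
        p = [rng.betavariate(y + 1, n_rats - y + 1) for y in deaths]
        # Step 2: keep the draw only if p_1 <= p_2 <= ... holds.
        if any(p[i] > p[i + 1] for i in range(len(p) - 1)):
            continue
        # Step 3: delta is the first dose whose p_i is at least 0.5.
        j = next((i for i, pi in enumerate(p) if pi >= 0.5), None)
        if j is None:
            continue  # assumption of this sketch: skip draws with no such dose
        deltas.append(doses[j])
    return deltas


if __name__ == "__main__":
    doses = [1, 2, 3, 4, 5]       # hypothetical dose levels
    deaths = [1, 3, 5, 7, 9]      # hypothetical deaths out of 10 rats per dose
    draws = sample_delta(doses, deaths, 10, 1000, random.Random(0))
    print(sum(draws) / len(draws))  # Monte Carlo estimate of delta-bar
```

The rejection step can become very slow when the observed counts are far from monotone, because most draws then violate the ordering constraint.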

==== | ====Importance Sampling==== | ||

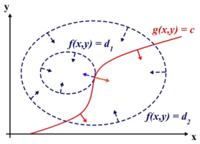

[[Image:ImpSampling.PNG|thumb|right|300px|'A diagram of a Monte Carlo Approximation of g(x)[Importance Sampling, Wikipedia]]] | |||

In statistics, Importance Sampling helps estimate the properties of a particular distribution. As with the Acceptance/Rejection method, we choose a convenient distribution from which to simulate the given random variables. The main difference in importance sampling, however, is that certain values of the input random variables in a simulation have more impact on the parameter being estimated than others. As shown in the figure, the uniform distribution <math>U\sim~Unif[0,1]</math> is a proposal distribution to sample from and g(x) is the target distribution. | |||

<br />Here we cast the integral <math>\int_{0}^1 g(x)dx</math>, as the expectation of <math>g(x)</math> with respect to U such that,<br /><br /> | |||

<math>I = \int_{0}^1 g(x)\,dx = E_U(g(x))</math>. <br /><br />Hence we can approximate this integral by,<br /><br /> <math>\hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^{N} g(u_i)</math>. <br><br /> | |||

[Source: Monte Carlo Methods and Importance Sampling, Eric C. Anderson (1999). Retrieved June 9th from URL: http://ib.berkeley.edu/labs/slatkin/eriq/classes/guest_lect/mc_lecture_notes.pdf] | |||

<br> | |||

<br> | |||

In <math>I = \displaystyle\int h(x)f(x)\,dx</math>, Monte Carlo simulation can be used only if it is easy to sample from f(x). Otherwise, another method must be applied. If sampling from f(x) is difficult but there exists a probability density function g(x) which is easy to sample from, then <math>\ I</math> can be written as<br> | |||

=== | :: <math>\begin{align}I &= \int h(x)f(x)\,dx = \int \frac{h(x)f(x)}{g(x)}g(x)\,dx \\ | ||

&= E_g(w(x)) \end{align}</math><br /><br />the expectation of <math>\displaystyle w(x) = \frac{h(x)f(x)}{g(x)}</math> with respect to g(x) and therefore <math>\displaystyle x_1,x_2,...,x_N \sim~ g</math> | |||

:: <math> \hat{I}= \frac{1}{N}\sum_{i=1}^{N} w(x_i)</math> <br><br> | |||

'''Process'''<br> | |||

# Choose <math>\displaystyle g(x)</math> such that it's easy to sample from and draw <math>x_i \sim~ g(x)</math> | |||

# Compute <math> w(x_i)=\frac{h(x_i)f(x_i)}{g(x_i)}</math> | |||

# <math> \hat{I} = \frac{1}{N}\sum_{i=1}^{N} w(x_i)</math><br><br> | |||

Note: By the law of large numbers, we can say that <math>\hat{I}</math> converges in probability to <math>I </math>. | |||
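The process can be sketched in Python. The concrete problem is chosen here for illustration and is not from the lecture: estimate <math>P(Z > 3)</math> for <math>Z \sim N(0,1)</math>, so <math>h(x) = 1</math> if <math>x > 3</math> and 0 otherwise, <math>f</math> is the standard normal density, and the proposal <math>g</math> is taken to be <math>N(4,1)</math>. All function names are hypothetical.

```python
import math
import random


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def importance_sampling(h, f, g_pdf, g_sampler, n):
    """Estimate the integral of h(x) f(x) dx using draws x_i from g."""
    total = 0.0
    for _ in range(n):
        x = g_sampler()                      # step 1: x_i ~ g
        total += h(x) * f(x) / g_pdf(x)      # step 2: w(x_i) = h(x_i) f(x_i) / g(x_i)
    return total / n                         # step 3: average the weights


if __name__ == "__main__":
    rng = random.Random(0)
    est = importance_sampling(
        h=lambda x: 1.0 if x > 3.0 else 0.0,
        f=normal_pdf,
        g_pdf=lambda x: normal_pdf(x, 4.0, 1.0),
        g_sampler=lambda: rng.gauss(4.0, 1.0),
        n=10000,
    )
    print(est, 0.5 * math.erfc(3.0 / math.sqrt(2.0)))  # estimate vs exact tail probability
```

Sampling directly from <math>f</math> would place very few points beyond 3, whereas the shifted proposal concentrates the draws in the region that matters for this integral.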

The | '''"Weighted" average'''<br> | ||

:The term "importance sampling" is used to describe this method because a higher 'importance' or 'weighting' is given to the values sampled from <math>\displaystyle g(x)</math> at which the original density <math>\displaystyle f(x)</math>, which we would ideally like to sample from (but cannot because it is too difficult), is large relative to <math>\displaystyle g(x)</math>.<br> | |||

:<math>\displaystyle I = \int\frac{h(x)f(x)}{g(x)}g(x)\,dx</math> | |||

:<math>=\displaystyle \int \frac{f(x)}{g(x)}h(x)g(x)\,dx</math><br /><br /> | |||

:<math>=\displaystyle E_g\left[\frac{f(x)}{g(x)}h(x)\right]</math>, which is like applying a regular Monte Carlo Simulation method to <math>\displaystyle\int h(x)g(x)\,dx </math>, but weighting each sampled value <math>\displaystyle h(x_i)</math> by <math>\displaystyle \frac{f(x_i)}{g(x_i)}</math>, so that points where <math>\displaystyle f</math> is large relative to <math>\displaystyle g</math> count more. | |||

One can view <math> \frac{f(x)}{g(x)}\ = B(x)</math> as a weight. | |||

Then <math>\displaystyle \hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^{N} w(x_i) = \frac{1}{N}\displaystyle\sum_{i=1}^{N} B(x_i)h(x_i)</math><br><br> | |||

i.e. we are computing a weighted average of the <math> h(x_i) </math> instead of a plain average. | |||

===[[A Deeper Look into Importance Sampling]] - June 2, 2009 === | |||

From last class, we have determined that an integral can be written in the form <math>I = \displaystyle\int h(x)f(x)\,dx </math> <math>= \displaystyle\int \frac{h(x)f(x)}{g(x)}g(x)\,dx</math>. We continue our discussion of Importance Sampling here. | |||

====Importance Sampling==== | |||

We can see that the integral <math>\displaystyle\int \frac{h(x)f(x)}{g(x)}g(x)\,dx = \int \frac{f(x)}{g(x)}h(x) g(x)\,dx</math> is just <math> \displaystyle E_g(\beta(x)h(x))</math>, the expectation of <math>\displaystyle\beta(x)h(x)</math> with respect to g(x), where <math>\displaystyle \beta(x) = \frac{f(x)}{g(x)} </math> is a weight. Where <math>\displaystyle f > g</math>, the weight <math>\displaystyle\beta(x)</math> is greater than 1. Thus, the points with more weight are deemed more important, hence "importance sampling". With a well-chosen <math>\displaystyle g</math>, this can be seen as a variance reduction technique. | |||

=== | =====Problem===== | ||

The method of Importance Sampling is simple but can lead to some problems. The <math> \displaystyle \hat I </math> estimated by Importance Sampling could have infinite standard error. | |||

Given <math>\displaystyle I= \int w(x) g(x) dx = E_g(w(x)) </math>, the estimator is

<math>\displaystyle \hat I = \frac{1}{N}\sum_{i=1}^{N} w(x_i) </math>

where <math>\displaystyle w(x)=\frac{f(x)h(x)}{g(x)} </math>. | |||

Obtaining the second moment, | |||

::<math>\displaystyle E[(w(x))^2] </math> | |||

::<math>\displaystyle = \int \left(\frac{h(x)f(x)}{g(x)}\right)^2 g(x) dx</math>

::<math>\displaystyle = \int \frac{h^2(x) f^2(x)}{g^2(x)} g(x) dx </math> | |||

::<math>\displaystyle = \int \frac{h^2(x)f^2(x)}{g(x)} dx </math> | |||

We can see that <math>\displaystyle E[(w(x))^2] </math> can be infinite if <math>\displaystyle g(x) \rightarrow 0 </math> faster than <math>\displaystyle h^2(x)f^2(x)</math> does. This occurs when <math>\displaystyle g </math> has a thinner tail than <math>\displaystyle f </math>: the integrand <math>\frac{h^2(x)f^2(x)}{g(x)} </math> can then become arbitrarily large. The general idea here is that <math>\frac{f(x)}{g(x)} </math> should not be large.

=====Remark 1===== | |||

It is evident that <math>\displaystyle g(x) </math> should be chosen such that it has a thicker tail than <math>\displaystyle f(x) </math>. | |||

If <math>\displaystyle f</math> is large over set <math>\displaystyle A</math> but <math>\displaystyle g</math> is small, then <math>\displaystyle \frac{f}{g} </math> would be large and it would result in a large variance. | |||
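As a worked illustration of these remarks (the particular densities are an assumption chosen for this example, not taken from the lecture), take <math>h(x)=1</math>, let <math>f</math> be the <math>N(0,1)</math> density and let <math>g</math> be the <math>N(0,\sigma^2)</math> density. The second moment can then be evaluated in closed form:

:<math>\begin{align} E_g[(w(x))^2] &= \int \frac{f^2(x)}{g(x)}\,dx = \frac{\sigma}{\sqrt{2\pi}} \int \exp\left(-x^2\left(1-\frac{1}{2\sigma^2}\right)\right)dx \\ &= \begin{cases} \dfrac{\sigma^2}{\sqrt{2\sigma^2-1}}, & \text{if } \sigma^2 > \frac{1}{2} \\ \infty, & \text{if } \sigma^2 \leq \frac{1}{2} \end{cases} \end{align}</math>

With <math>\sigma = 1</math> (that is, <math>g = f</math>) the second moment is 1, and it stays finite for every wider proposal. A proposal that is too narrow (<math>\sigma^2 \leq 1/2</math>) has tails so thin relative to <math>f</math> that the second moment, and hence the variance of <math>\hat I</math>, is infinite.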

=====Remark 2===== | |||

It is useful if we can choose <math>\displaystyle g </math> to be similar to <math>\displaystyle f</math> in terms of shape. Ideally, the optimal <math>\displaystyle g </math> should be similar to <math>\displaystyle \left| h(x) \right|f(x)</math>, and have a thicker tail. It is important to take the absolute value of <math>\displaystyle h(x)</math>, since <math>\displaystyle g</math> is a density and cannot be negative. Analytically, we can find the <math>\displaystyle g</math> that minimizes the variance of the estimator.

=====Remark 3=====

Choose <math>\displaystyle g </math> such that it is similar to <math>\displaystyle \left| h(x) \right| f(x) </math> in terms of shape. That is, we want <math>\displaystyle g \propto \displaystyle \left| h(x) \right| f(x) </math> | |||

====Theorem (Minimum Variance Choice of <math>\displaystyle g</math>) ==== | |||

The choice of <math>\displaystyle g</math> that minimizes variance of <math>\hat I</math> is <math>\displaystyle g^*(x)=\frac{\left| h(x) \right| f(x)}{\int \left| h(s) \right| f(s) ds}</math>. | |||

=====Proof:===== | |||

We know that <math>\displaystyle w(x)=\frac{f(x)h(x)}{g(x)} </math> | |||

The variance of <math>\displaystyle w(x) </math> is | |||

:: <math>\displaystyle Var[w(x)] </math> | |||

:: <math>\displaystyle = E[(w(x)^2)] - [E[w(x)]]^2 </math> | |||

:: <math>\displaystyle = \int \left(\frac{f(x)h(x)}{g(x)} \right)^2 g(x) dx - \left[\int \frac{f(x)h(x)}{g(x)}g(x)dx \right]^2 </math> | |||

:: <math>\displaystyle = \int \left(\frac{f(x)h(x)}{g(x)} \right)^2 g(x) dx - \left[\int f(x)h(x) dx \right]^2 </math>

As we can see, the second term does not depend on <math>\displaystyle g(x) </math>. Therefore to minimize <math>\displaystyle Var[w(x)] </math> we only need to minimize the first term. In doing so we will use '''Jensen's Inequality'''. | |||

======Aside: Jensen's Inequality======

:: | |||

If <math>\displaystyle g </math> is a convex function (for example, twice differentiable with <math>\displaystyle g''(x) \geq 0 </math>), then for any <math>\displaystyle \alpha \in [0,1]</math>, <math>\displaystyle g(\alpha x_1 + (1-\alpha)x_2) \leq \alpha g(x_1) + (1-\alpha) g(x_2)</math><br />

Essentially, the definition of convexity says that the line segment between two points on a curve lies on or above the curve. This can then be generalized to convex combinations of <math>n</math> points:

::<math>\displaystyle g(\alpha_1 x_1 + \alpha_2 x_2 + ... + \alpha_n x_n) \leq \alpha_1 g(x_1) + \alpha_2 g(x_2) + ... + \alpha_n g(x_n) </math> where <math>\displaystyle \alpha_i \geq 0</math> and <math>\displaystyle \alpha_1 + \alpha_2 + ... + \alpha_n = 1 </math>

::::::::::======================================================= | |||

=====Proof (cont)=====

Using Jensen's Inequality, <br />

::<math>\displaystyle g(E[x]) \leq E[g(x)] </math> as <math>\displaystyle g(E[x]) = g(p_1 x_1 + ... p_n x_n) \leq p_1 g(x_1) + ... + p_n g(x_n) = E[g(x)] </math> | |||

Therefore | |||

::<math>\displaystyle E[(w(x))^2] \geq (E[\left| w(x) \right|])^2 </math> | |||

::<math>\displaystyle E[(w(x))^2] \geq \left(\int \left| \frac{f(x)h(x)}{g(x)} \right| g(x) dx \right)^2 </math> <br /> | |||

and | |||

::<math>\begin{align} \displaystyle \left(\int \left| \frac{f(x)h(x)}{g(x)} \right| g(x) dx \right)^2 &= \left(\int \frac{f(x)\left| h(x) \right|}{g(x)} g(x) dx \right)^2 \\ &= \left(\int \left| h(x) \right| f(x) dx \right)^2 \end{align}</math><br /><br /> since <math>\displaystyle f </math> and <math>\displaystyle g</math> are density functions, <math>\displaystyle f, g </math> are non negative. <br /> | |||

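Thus, this is a lower bound on <math>\displaystyle E[(w(x))^2]</math>. If we substitute <math>\displaystyle g^*(x) </math> for <math>\displaystyle g(x)</math>, then <math>\displaystyle \left| w(x) \right| = \frac{f(x)\left| h(x) \right|}{g^*(x)} = \int \left| h(s) \right| f(s) ds</math> is constant, so <math>\displaystyle E[(w(x))^2] = \left(\int \left| h(s) \right| f(s) ds \right)^2</math> and the lower bound is attained, as required.<br />

However, this is mostly of theoretical interest. In practice, it is impossible or very difficult to compute <math>\displaystyle g^*</math>, since its normalizing constant <math>\displaystyle \int \left| h(s) \right| f(s) ds</math> is essentially the integral we are trying to estimate.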

Note: Jensen's inequality is actually unnecessary here. We just use it to get <math>E[(w(x))^2] \geq (E[|w(x)|])^2</math>, which could be derived using variance properties: <math>0 \leq Var[|w(x)|] = E[|w(x)|^2] - (E[|w(x)|])^2 = E[(w(x))^2] - (E[|w(x)|])^2</math>. | |||
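The theorem can be checked numerically in a case where <math>g^*</math> happens to be available. The sketch below assumes <math>f</math> is the Exp(1) density and <math>h(x) = x</math> (both choices are ours, for illustration only), so that <math>g^*(x) = x e^{-x}</math> is the Gamma(2, 1) density and <math>I = 1</math>. Every weight <math>w(x_i)</math> should then equal <math>I</math>, giving an estimator with zero variance.

```python
import math
import random

def weights_under_optimal_g(n, seed=0):
    """Weights w(x) = h(x) f(x) / g*(x) for f = Exp(1), h(x) = x.

    g*(x) is proportional to |h(x)| f(x) = x e^{-x}; its normalizing
    constant is the integral of x e^{-x} over (0, inf), which is 1,
    so g* is the Gamma(shape=2, scale=1) density.
    """
    rng = random.Random(seed)
    ws = []
    for _ in range(n):
        x = rng.gammavariate(2.0, 1.0)   # draw from g*
        f = math.exp(-x)                 # Exp(1) density at x
        g_star = x * math.exp(-x)        # Gamma(2, 1) density at x
        ws.append(x * f / g_star)        # w(x) = h(x) f(x) / g*(x)
    return ws

ws = weights_under_optimal_g(1000)
estimate = sum(ws) / len(ws)             # estimate of I = E_f[X] = 1
spread = max(ws) - min(ws)               # zero up to floating-point rounding
```

This only works because the normalizing constant of <math>g^*</math> is known here; in general that constant is the unknown quantity itself.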

===[[Importance Sampling and Markov Chain Monte Carlo (MCMC)]] - June 4, 2009 ===

Remark 4:<br /> | |||

:<math>\begin{align} I &= \int h(x)f(x)\,dx \\
&= \int h(x)\frac{f(x)}{g(x)}g(x)\,dx \\
\hat{I}&=\frac{1}{N} \sum_{i=1}^{N} h(x_i)b(x_i)\end{align}</math><br /><br />

where <math>\displaystyle b(x_i) = \frac{f(x_i)}{g(x_i)}</math><br /><br />

Since <math>f</math> is a density, <math>\int f(x)\,dx = 1</math>, so we can also write

:<math>I =\displaystyle \frac{\int h(x)f(x)\,dx}{\int f(x)\,dx}</math>

Apply the idea of importance sampling to both the numerator and denominator: | |||

:: <math>=\displaystyle \frac{\int\ h(x)\frac{f(x)}{g(x)}g(x)\,dx}{\int\frac{f(x)}{g(x)}g(x) dx}</math> | |||

:: <math>\approx \displaystyle\frac{\sum_{i=1}^{N} h(x_i)b(x_i)}{\sum_{i=1}^{N} b(x_i)}</math>

:: <math>= \displaystyle\sum_{i=1}^{N} h(x_i)b'(x_i)</math> where <math>\displaystyle b'(x_i) = \frac{b(x_i)}{\sum_{j=1}^{N} b(x_j)}</math>

The above results in the following form of Importance Sampling: | |||

::<math> \hat{I} = \displaystyle\sum_{i=1}^{N} b'(x_i)h(x_i) </math> where <math>\displaystyle b'(x_i) = \frac{b(x_i)}{\sum_{i=1}^{N} b(x_i)}</math> | |||

This is very important and useful especially when f is known only up to a proportionality constant. Often, this is the case in the Bayesian approach when f is a posterior density function. | |||
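The normalized-weight form can be sketched in a few lines of Python. Everything specific here is an assumption made for illustration: the target is known only as <math>e^{-x^2/2}</math> (a standard normal with its constant dropped), the proposal <math>g</math> is <math>N(0, 2^2)</math>, and the helper names are hypothetical. Because the unknown constant appears in both numerator and denominator, it cancels.

```python
import math
import random

def self_normalized_is(h, f_unnorm, g_pdf, g_sample, n, seed=0):
    """Estimate E_f[h(X)] as sum_i b'(x_i) h(x_i), with f known up to a constant."""
    rng = random.Random(seed)
    xs = [g_sample(rng) for _ in range(n)]        # x_i drawn from g
    b = [f_unnorm(x) / g_pdf(x) for x in xs]      # unnormalized weights b(x_i)
    total = sum(b)
    return sum(bi * h(x) for bi, x in zip(b, xs)) / total

SIGMA = 2.0  # proposal standard deviation (illustrative choice)

def f_unnorm(x):
    return math.exp(-0.5 * x * x)                 # N(0,1) density without 1/sqrt(2*pi)

def g_pdf(x):
    return math.exp(-0.5 * (x / SIGMA) ** 2) / (SIGMA * math.sqrt(2.0 * math.pi))

def g_sample(rng):
    return rng.gauss(0.0, SIGMA)

# Second moment of a standard normal; the true value is 1.
second_moment = self_normalized_is(lambda x: x * x, f_unnorm, g_pdf, g_sample, 20000)
```

Multiplying `f_unnorm` by any positive constant leaves `second_moment` unchanged, which is the property that makes this form useful for posterior densities.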

==== Example of Importance Sampling ==== | |||

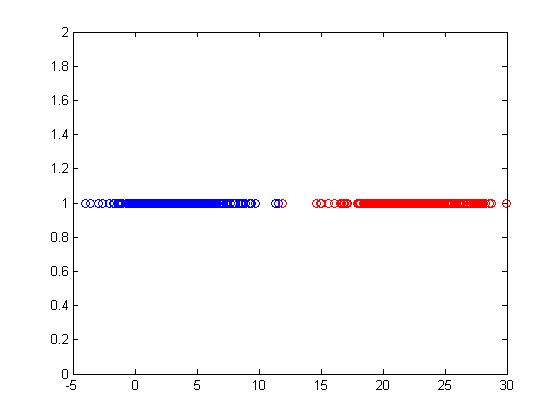

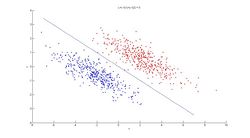

Estimate <math> I = \displaystyle\ Pr (Z>3) </math> when <math>Z \sim~ N(0,1)</math><br /><br /> | |||

:<math>\begin{align} Pr(Z>3) &= \int^\infty_3 f(x)\,dx \approx 0.0013 \\
&= \int^\infty_{-\infty} h(x)f(x)\,dx \end{align}</math><br />

:where <math>h(x) = \begin{cases}
0, & \text{if } x < 3 \\
1, & \text{if } x \ge 3
\end{cases}</math>

<br>'''Approach I: Monte Carlo'''<br> | |||

:<math>\hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^Nh(x_i)</math> where <math>X \sim~ N(0,1) </math> | |||

The idea here is to sample from the standard normal distribution and to count the proportion of observations that are greater than 3.

The variability will be high in this case if using Monte Carlo, since this is a low probability event (a tail event), and different runs may give significantly different values. For example, if we generate 1000 samples, the first run may give only 3 occurrences (an estimate of .003), the second run may give 5 occurrences (an estimate of .005), etc.

This example can be illustrated in Matlab using the code below (we repeat the experiment 100 times, generating 100 samples each time):

 format long
 a = zeros(100,1);                      % one estimate per run
 for i = 1:100
     a(i) = sum(randn(100,1)>=3)/100;   % fraction of 100 N(0,1) draws that are >= 3
 end
 meanMC = mean(a)                       % average estimate over the 100 runs
 varMC = var(a)                         % run-to-run variance of the estimate

On running this, we get <math> meanMC = 0.0005 </math> and <math> varMC \approx 1.31313 * 10^{-5} </math>

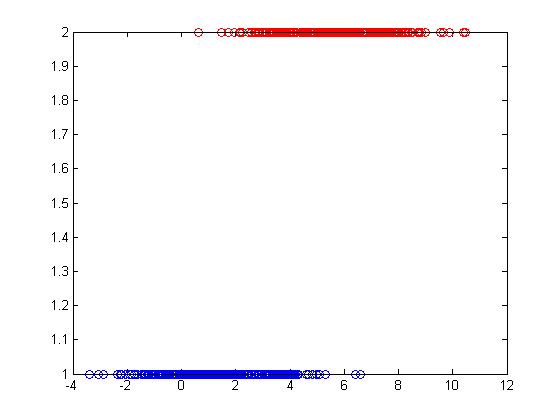

<br>'''Approach II: Importance Sampling'''<br> | |||

:<math>\hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^Nh(x_i)\frac{f(x_i)}{g(x_i)}</math> where <math>f(x)</math> is the standard normal density and <math>g(x)</math> needs to be chosen wisely so that it places most of its mass where <math>h(x)f(x)</math> is non-negligible, i.e. around and beyond 3.

:Let <math>g(x)</math> be the <math>N(4,1)</math> density, and draw <math>x_i \sim~ N(4,1) </math>.

:<math>b(x) = \frac{f(x)}{g(x)} = \frac{e^{-x^2/2}}{e^{-(x-4)^2/2}} = e^{(8-4x)}</math>

:<math>\hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^Nb(x_i)h(x_i)</math>

This example can be illustrated in Matlab using the code below: | |||

 for j = 1:100
     N = 100;
     x = randn(N,1) + 4;          % N draws from g = N(4,1)
     for ii = 1:N
         h = x(ii)>=3;            % indicator h(x)
         b = exp(8-4*x(ii));      % weight b(x) = f(x)/g(x)
         w(ii) = h*b;
     end
     I(j) = sum(w)/N;             % importance sampling estimate for run j
 end
 MEAN = mean(I)
 VAR = var(I)

Running the above code gave us <math> MEAN \approx 0.001353 </math> and <math> VAR \approx 9.666 * 10^{-8} </math>, which is very close to 0 and much less than the variability observed when using Monte Carlo.
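For readers without Matlab, the two approaches can be compared side by side in Python. This is a minimal sketch using only the standard library; the sample size of 10000 and the seed are arbitrary choices of ours, and the exact tail probability is computed from the complementary error function for reference.

```python
import math
import random

def mc_estimate(n, rng):
    """Approach I: fraction of N(0,1) draws that are >= 3."""
    return sum(rng.gauss(0.0, 1.0) >= 3.0 for _ in range(n)) / n

def is_estimate(n, rng):
    """Approach II: draws from g = N(4,1), weighted by b(x) = exp(8 - 4x)."""
    total = 0.0
    for _ in range(n):
        x = rng.gauss(4.0, 1.0)
        if x >= 3.0:                       # h(x) = 1 only on the tail
            total += math.exp(8.0 - 4.0 * x)
    return total / n

rng = random.Random(341)
exact = 0.5 * math.erfc(3.0 / math.sqrt(2.0))   # Pr(Z > 3), about 0.00135
mc = mc_estimate(10000, rng)
imp = is_estimate(10000, rng)
```

With the same number of draws, `imp` is typically much closer to `exact` than `mc`, because most draws from <math>N(4,1)</math> land in the region that matters while only about one in a thousand draws from <math>N(0,1)</math> does.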

==== Markov Chain Monte Carlo (MCMC) ==== | |||

Before we tackle Markov chain Monte Carlo methods, which essentially are a 'class of algorithms for sampling from probability distributions based on constructing a Markov chain' [MCMC, Wikipedia], we will first give a formal definition of Markov Chain. | |||

Consider the same integral: | |||

<math> I = \displaystyle\int h(x)f(x)\,dx </math>

Idea: If <math>\displaystyle X_1, X_2,...,X_N</math> is a Markov Chain with stationary distribution f(x), then under some conditions

:<math>\hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^Nh(x_i)\xrightarrow{P}\int h(x)f(x)\,dx = I</math>

<br>'''Stochastic Process:'''<br> | |||

A Stochastic Process is a collection of random variables <math>\displaystyle \{ X_t : t \in T \}</math> | |||

*'''State Space Set:''' <math>\displaystyle X </math> is the set from which the random variables <math>\displaystyle X_t</math> take their values.

*'''Indexed Set:''' <math>\displaystyle T </math> is the set from which t takes its values. It could be discrete or continuous in general, but we are only interested in the discrete case in this course.

<br>'''Example 1'''<br> | |||

i.i.d random variables | |||

:<math> \{ X_t : t \in T \}, X_t \in X </math> | |||

:<math> X = \{0, 1, 2, 3, 4, 5, 6, 7, 8\} \rightarrow</math>'''State Space''' | |||

:<math> T = \{1, 2, 3, 4, 5\} \rightarrow</math>'''Indexed Set''' | |||

<br>'''Example 2'''<br> | |||

:<math>\displaystyle X_t</math>: price of a stock | |||

:<math>\displaystyle t</math>: opening date of the market | |||

:: | |||

'''Basic Fact:''' In general, if we have random variables <math>\displaystyle X_1,...,X_n</math>

:<math>\displaystyle f(X_1,...,X_n)= f(X_1)f(X_2|X_1)f(X_3|X_2,X_1)...f(X_n|X_{n-1},...,X_1)</math>

:<math>\displaystyle f(X_1,...,X_n)= \prod_{i = 1}^n f(X_i|Past_i)</math> where <math>\displaystyle Past_i = (X_{i-1}, X_{i-2},...,X_1)</math>

<br>'''Markov Chain:'''<br> | |||

A Markov Chain is a special form of stochastic process in which, given <math> \displaystyle X_{t-1}</math>, <math>\displaystyle X_t</math> does not depend on the earlier values <math>\displaystyle X_{t-2},...,X_1</math>.

The joint distribution then simplifies to

:<math>\displaystyle f(X_1,...X_n)= f(X_1)f(X_2|X_1)f(X_3|X_2)...f(X_n|X_{n-1})</math> | |||

<br>'''Transition Probability:'''<br>

The probability of going from one state to another state. | |||

:<math>p_{ij} = \Pr(X_{n}=j\mid X_{n-1}= i). \,</math> | |||

<br>'''Transition Matrix:'''<br> | |||

For N states, the transition matrix P is an <math>N \times N</math> matrix with entries <math>\displaystyle p_{ij}</math> as below:

:<math>P=\left(\begin{matrix}p_{1,1}&p_{1,2}&\dots&p_{1,j}&\dots\\
p_{2,1}&p_{2,2}&\dots&p_{2,j}&\dots\\
\vdots&\vdots&\ddots&\vdots&\ddots\\
p_{i,1}&p_{i,2}&\dots&p_{i,j}&\dots\\
\vdots&\vdots&\ddots&\vdots&\ddots
\end{matrix}\right)</math>

<br>'''Example:'''<br>

A "Random Walk" is an example of a Markov Chain. Suppose that the direction of our next step is decided by a coin toss. The probability of moving to the right is <math>\displaystyle Pr(heads) = p</math>, and the probability of moving to the left is <math>\displaystyle Pr(tails) = q = 1-p </math>. Once the first or the last state is reached, we stop. The transition matrix that expresses this process is shown below:

:<math>P=\left(\begin{matrix}1&0&0&0&\dots&0\\
q&0&p&0&\dots&0\\
0&q&0&p&\dots&0\\
\vdots&\ddots&\ddots&\ddots&\ddots&\vdots\\
0&\dots&0&q&0&p\\
0&\dots&0&0&0&1
\end{matrix}\right)</math>

<br /><br /><br />
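The random walk with stopping at both ends can be sketched in Python as follows. The function names and the choice of five states are hypothetical, and the code follows the convention in the text that a step to the right has probability <math>p</math> and a step to the left has probability <math>q = 1-p</math>.

```python
import random

def random_walk_matrix(n_states, p):
    """Transition matrix of a random walk that stops at the first and last states."""
    q = 1.0 - p
    P = [[0.0] * n_states for _ in range(n_states)]
    P[0][0] = 1.0                          # first state: stay forever
    P[n_states - 1][n_states - 1] = 1.0    # last state: stay forever
    for i in range(1, n_states - 1):
        P[i][i + 1] = p                    # step right
        P[i][i - 1] = q                    # step left
    return P

def walk_until_absorbed(P, start, rng):
    """Simulate the chain from `start` until it hits a state with P[s][s] == 1."""
    state, path = start, [start]
    while P[state][state] != 1.0:
        state = rng.choices(range(len(P)), weights=P[state])[0]
        path.append(state)
    return path

P = random_walk_matrix(5, 0.5)
path = walk_until_absorbed(P, 2, random.Random(0))
```

Each row of `P` sums to 1, and every simulated path ends in state 0 or state 4 (0-based indices).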

===<big>'''[[Markov Chain Definitions]]''' - June 9, 2009</big>===

Practical application for estimation: | |||

The general idea is to generate a sequence <math>x_i</math> whose distribution approaches <math>f(x)</math>, so that a Monte Carlo average can be used to estimate an integral of the form<br />

<math> I = \displaystyle\int h(x)f(x)\,dx </math> by <math>\hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^Nh(x_i)</math><br />

All that is required is a Markov chain which eventually converges to <math>f(x)</math>.

<br /><br /> | |||

In the previous example, the entries <math>p_{ij}</math> in the transition matrix <math>P</math> represent the probability of reaching state <math>j</math> from state <math>i</math> after one step. For this reason, the entries in each row sum to 1: row <math>i</math> is itself a pmf, since a transition from <math>i</math> must lead to a state still within the state set for <math>X_t</math>.

'''Homogeneous Markov Chain'''<br />

The transition matrix <math>P</math> is the same for all indices <math>n\in T</math>:

<math>\displaystyle Pr(X_n=j|X_{n-1}=i)= Pr(X_1=j|X_0=i)</math>

If we denote the pmf of <math>X_n</math> by a probability vector <math>\frac{}{}\mu_n = [P(X_n=x_1),P(X_n=x_2),..,P(X_n=x_i)]</math> <br /> | |||

where <math>i</math> denotes an ordered index of all possible states of <math>X</math>.<br /> | |||

Then we have a definition for the<br /> | |||

'''marginal probability''' <math>\frac{}{}\mu_n(i) = P(X_n=i)</math><br />

where we simplify <math>X_n</math> to represent the ordered index of a state rather than the state itself. | |||

<br /><br /> | |||

From this definition it can be shown that

<math>\frac{}{}\mu_{n-1}P=\mu_n</math>

<big>'''Proof:'''</big> | |||

<math>\mu_{n-1}P=[\sum_{\forall i}(\mu_{n-1}(i))P_{i1},\sum_{\forall i}(\mu_{n-1}(i))P_{i2},..,\sum_{\forall i}(\mu_{n-1}(i))P_{ij}]</math> | |||

and since | |||

<blockquote>

<math>\sum_{\forall i}(\mu_{n-1}(i))P_{ij}=\sum_{\forall i}Pr(X_{n-1}=i)Pr(X_n=j|X_{n-1}=i)=\sum_{\forall i}Pr(X_{n-1}=i)\frac{Pr(X_n=j,X_{n-1}=i)}{Pr(X_{n-1}=i)}</math>

<math>=\sum_{\forall i}Pr(X_n=j,X_{n-1}=i)=Pr(X_n=j)=\mu_{n}(j)</math>

</blockquote>

Therefore,<br /> | |||

<math>\frac{}{}\mu_{n-1}P=[\mu_{n}(1),\mu_{n}(2),...,\mu_{n}(i)]=\mu_{n}</math> | |||

With this, it is possible to define <math>P(n)</math> as an n-step transition matrix where <math>\frac{}{}P_{ij}(n) = Pr(X_n=j|X_0=i)</math><br /> | |||

'''Theorem''': <math>\frac{}{}\mu_n=\mu_0P^n</math><br /> | |||

'''Proof''': <math>\frac{}{}\mu_n=\mu_{n-1}P</math> From the previous conclusion<br /> | |||

<math>\frac{}{}=\mu_{n-2}PP=...=\mu_0\prod_{i = 1}^nP</math> And since this is a homogeneous Markov chain, <math>P</math> does not depend on <math>i</math> so<br /> | |||

<math>\frac{}{}=\mu_0P^n</math> | |||


From this it becomes easy to define the n-step transition matrix as <math>\frac{}{}P(n)=P^n</math> | |||

====Summary of definitions====

*'''transition matrix''': an <math>N \times N</math> matrix, where <math>N=|X|</math>, with <math>P_{ij}=Pr(X_1=j|X_0=i)</math> where <math>i,j \in X</math><br />

*'''n-step transition matrix''': also <math>N \times N</math>, with <math>P_{ij}(n)=Pr(X_n=j|X_0=i)</math><br />

*'''marginal (probability of X)''': <math>\mu_n(i) = Pr(X_n=i)</math><br />

*'''Theorem:''' <math>P(n)=P^n</math><br />

*'''Theorem:''' <math>\mu_n=\mu_0P^n</math><br /> | |||
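The second theorem can be checked numerically: applying <math>P</math> to the marginal one step at a time should agree with multiplying <math>\mu_0</math> by <math>P^n</math> directly. The two-state matrix and starting vector below are arbitrary values assumed for the sketch, and the helper functions are hypothetical.

```python
def vec_mat(mu, P):
    """Row vector times matrix: (mu P)_j = sum_i mu_i P_ij."""
    return [sum(mu[i] * P[i][j] for i in range(len(mu))) for j in range(len(P[0]))]

def mat_mul(A, B):
    """Plain matrix product A B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def mat_pow(P, n):
    """P^n by repeated multiplication, starting from the identity."""
    size = len(P)
    result = [[float(i == j) for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, P)
    return result

P = [[0.9, 0.1],
     [0.4, 0.6]]
mu0 = [1.0, 0.0]

mu_stepwise = mu0
for _ in range(5):                       # mu_n = mu_{n-1} P, applied 5 times
    mu_stepwise = vec_mat(mu_stepwise, P)

mu_direct = vec_mat(mu0, mat_pow(P, 5))  # mu_5 = mu_0 P^5
```

The two vectors agree up to floating-point rounding, and each still sums to 1.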

--- | |||

====Definitions of different types of state sets====

Let <math>i,j</math> be states in the State Space.<br />

If <math>P_{ij}(n) > 0</math> for some <math>n</math>, then we say <math>i</math> reaches <math>j</math>, denoted by <math>i\longrightarrow j</math>.<br />

This also means that <math>j</math> is accessible from <math>i</math>: <math>j\longleftarrow i</math>.<br />

If <math>i\longrightarrow j</math> and <math>j\longrightarrow i</math> then we say <math>i</math> and <math>j</math> communicate, denoted by <math>i\longleftrightarrow j</math>

<br /><br /> | |||

'''Theorems'''<br /> | |||

1) <math>i\longleftrightarrow i</math><br /> | |||

2) <math>i\longleftrightarrow j \Rightarrow j\longleftrightarrow i</math><br /> | |||

3) If <math>i\longleftrightarrow j,j\longleftrightarrow k\Rightarrow i\longleftrightarrow k</math><br /> | |||

4) The set of states of <math>X</math> can be written as a unique disjoint union of subsets (equivalence classes) <math>X=X_1\bigcup X_2\bigcup ...\bigcup X_k,k>0 </math> where two states <math>i</math> and <math>j</math> communicate if and only if they belong to the same subset

<br /><br /> | |||

'''More Definitions'''<br /> | |||

A Markov chain is '''Irreducible''' if all states communicate with each other (i.e. it has only one equivalence class).<br />

A subset of states is '''Closed''' if once you enter it, you can never leave.<br /> | |||

A subset of states is '''Open''' if once you leave it, you can never return.<br /> | |||

An '''Absorbing Set''' is a closed set with only 1 element (i.e. consists of a single state).<br /> | |||

<b>Note</b>

*We cannot have <math>\displaystyle i\longleftrightarrow j</math> with i recurrent and j transient since <math>\displaystyle i\longleftrightarrow j \Rightarrow j\longleftrightarrow i</math>. | |||

*All states in an open class are transient. | |||

*A Markov Chain with a finite number of states must have at least 1 recurrent state. | |||

*A closed class with an infinite number of states has all transient or all recurrent states.<br /> | |||

===[[Again on Markov Chain]] - June 11, 2009=== | |||

====Decomposition of Markov chain==== | |||

In the previous lecture it was shown that a Markov Chain can be written as the disjoint union of its classes. This decomposition is always possible and it is reduced to one class only in the case of an irreducible chain. | |||

<br>'''Example:'''<br> | |||

Let <math>\displaystyle X = \{1, 2, 3, 4\}</math> and the transition matrix be: | |||

:<math>P=\left(\begin{matrix}1/3&2/3&0&0\\ | |||

2/3&1/3&0&0\\ | |||

1/4&1/4&1/4&1/4\\ | |||

0&0&0&1 | |||

\end{matrix}\right)</math> | |||

The decomposition in classes is: | |||

::::class 1: <math>\displaystyle \{1, 2\} \rightarrow </math> From the matrix we see that states 1 and 2 can only move to each other or stay where they are; their transition probabilities to states 3 and 4 are all zero

::::class 2: <math>\displaystyle \{3\} \rightarrow </math> The state 3 can go to every other state but none of the others can go to it

::::class 3: <math>\displaystyle \{4\} \rightarrow </math> This is an absorbing state since it is a closed class and there is only one element
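The decomposition above can be reproduced mechanically. The sketch below (function names are hypothetical) computes which states each state can reach by a graph search over the nonzero entries of <math>P</math>, groups states that reach each other into classes, and checks which classes are closed. States are stored 0-based in the code and converted to the 1-based labels used in the text at the end.

```python
def reachable(P, i):
    """Set of states j with P_ij(n) > 0 for some n >= 0 (includes i itself)."""
    seen, stack = {i}, [i]
    while stack:
        u = stack.pop()
        for v, prob in enumerate(P[u]):
            if prob > 0 and v not in seen:
                seen.add(v)
                stack.append(v)
    return seen

def communicating_classes(P):
    """Group states into classes where i <-> j (each reaches the other)."""
    n = len(P)
    reach = [reachable(P, i) for i in range(n)]
    classes, assigned = [], set()
    for i in range(n):
        if i in assigned:
            continue
        cls = sorted(j for j in reach[i] if i in reach[j])
        classes.append(cls)
        assigned.update(cls)
    return classes, reach

def is_closed(cls, reach):
    """A class is closed if nothing outside it is reachable from inside it."""
    return all(reach[i] <= set(cls) for i in cls)

P = [[1/3, 2/3, 0, 0],
     [2/3, 1/3, 0, 0],
     [1/4, 1/4, 1/4, 1/4],
     [0, 0, 0, 1]]

classes, reach = communicating_classes(P)
labels = [[s + 1 for s in cls] for cls in classes]    # [[1, 2], [3], [4]]
closed = [is_closed(cls, reach) for cls in classes]   # [True, False, True]
```

Classes {1, 2} and {4} are closed, while {3} is not, which matches the description of state 3 as a state that can be left but never re-entered.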

:: | |||

====Recurrent and Transient states====

A state i is called <math>\emph{recurrent}</math> or <math>\emph{persistent}</math> if

:<math>\displaystyle Pr(x_{n}=i \text{ for some } n\geq 1 \mid x_{0}=i)=1 </math>

That means that the probability of coming back to state i, starting from state i, is 1.

If this is not the case (i.e. the probability is less than 1), then state i is <math>\emph{transient} </math>.

It is straightforward to prove that a finite irreducible chain is recurrent.

<br>'''Theorem'''<br> | |||

Given a Markov chain, | |||

<br>A state <math>\displaystyle i</math> is <math>\emph{recurrent}</math> if and only if <math>\displaystyle \sum_{\forall n}P_{ii}(n)=\infty</math> | |||

<br>A state <math>\displaystyle i</math> is <math>\emph{transient}</math> if and only if <math>\displaystyle \sum_{\forall n}P_{ii}(n)< \infty</math> | |||

<br>'''Properties'''<br> | |||

*If <math>\displaystyle i</math> is <math>\emph{recurrent}</math> and <math>i\longleftrightarrow j</math> then <math>\displaystyle j</math> is <math>\emph{recurrent}</math> | |||

*If <math>\displaystyle i</math> is <math>\emph{transient}</math> and <math>i\longleftrightarrow j</math> then <math>\displaystyle j</math> is <math>\emph{transient}</math> | |||

*In an equivalence class, either all states are recurrent or all states are transient | |||

*A finite Markov chain must have at least one recurrent state

*The states of a finite, irreducible Markov chain are all recurrent (proved using the previous proposition and the fact that there is only one class in this kind of chain)

In the example above, states one and two form a closed set, so they are both recurrent states. State four is an absorbing state, so it is also recurrent. State three is transient.

<br>'''Example'''<br> | |||

Let <math>\displaystyle X=\{\cdots,-2,-1,0,1,2,\cdots\}</math> and suppose that <math>\displaystyle P_{i,i+1}=p </math>, <math>\displaystyle P_{i,i-1}=q=1-p</math> and <math>\displaystyle P_{i,j}=0</math> otherwise. | |||

This is the Random Walk that we have already seen in a previous lecture, except it extends infinitely in both directions.

We now look at other properties of this particular Markov chain:

*Since all states communicate, if one of them is recurrent, then all states are recurrent. On the other hand, if one of them is transient, then all the others are transient too.

*Consider now the case in which we start in state <math>\displaystyle 0</math>. The chain can only be back at <math>\displaystyle 0</math> after an even number of steps <math>\displaystyle 2n</math>, of which exactly <math>n</math> are to the right and <math>n</math> are to the left:

<math>\displaystyle Pr(x_{2n}=0|x_{0}=0)=P_{00}(2n)={2n \choose n}p^{n}q^{n}</math>

We now want to know if this state is transient or recurrent or, equivalently, whether <math>\displaystyle \sum_{\forall n}P_{00}(n)=\infty</math> or not.

To proceed with the analysis, we use the <math>\emph{Stirling }</math> <math>\displaystyle\emph{formula}</math>:

<math>\displaystyle n!\sim~n^{n}\sqrt{n}e^{-n}\sqrt{2\pi}</math>

The probability could therefore be approximated by:

<math>\displaystyle P_{00}(2n)\sim~\frac{(4pq)^{n}}{\sqrt{n\pi}}</math>

And the sum behaves like:

<math>\displaystyle \sum_{\forall n}P_{00}(2n)\sim~\sum_{\forall n}\frac{(4pq)^{n}}{\sqrt{n\pi}}</math>

We can conclude that if <math>\displaystyle 4pq < 1</math> then the state is transient; otherwise (<math>\displaystyle 4pq = 1</math>, i.e. <math>p = q = \frac{1}{2}</math>) it is recurrent.

<math>\displaystyle
\sum_{\forall n}P_{00}(2n)\sim~\sum_{\forall n}\frac{(4pq)^{n}}{\sqrt{n\pi}} \begin{cases}
= \infty, & \text{if } p = \frac{1}{2} \\
< \infty, & \text{if } p\neq \frac{1}{2}
\end{cases}</math>

An alternative to Stirling's approximation is to use the generalized binomial theorem to get the following formula: | |||

<math> | |||

\frac{1}{\sqrt{1 - 4x}} = \sum_{n=0}^{\infty} \binom{2n}{n} x^n | |||

</math> | |||

Then substitute in <math>x = pq</math>. | |||

<math> | |||

\frac{1}{\sqrt{1 - 4pq}} = \sum_{n=0}^{\infty} \binom{2n}{n} p^n q^n = \sum_{n=0}^{\infty} P_{00}(2n) | |||

</math> | |||

So we reach the same conclusion: all states are recurrent iff <math>p = q = \frac{1}{2}</math>. | |||
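A quick numerical check of this conclusion is sketched below. The value <math>p = 0.3</math> and the numbers of terms are arbitrary choices of ours. The terms <math>P_{00}(2n)</math> are built up through the ratio <math>\binom{2n+2}{n+1} / \binom{2n}{n} = \frac{2(2n+1)}{n+1}</math> so that no huge binomial coefficient is ever formed.

```python
import math

def return_probs(p, terms):
    """P_00(2n) = C(2n, n) p^n q^n for n = 0, ..., terms - 1, via a term ratio."""
    q = 1.0 - p
    probs, term = [], 1.0                 # P_00(0) = 1
    for n in range(terms):
        probs.append(term)
        term *= 2.0 * (2 * n + 1) / (n + 1) * p * q
    return probs

# Asymmetric walk: the series converges to 1 / sqrt(1 - 4pq), so state 0 is transient.
p = 0.3
series = sum(return_probs(p, 200))
closed_form = 1.0 / math.sqrt(1.0 - 4.0 * p * (1.0 - p))

# Symmetric walk: partial sums keep growing (roughly like 2 * sqrt(N / pi)).
symmetric = [sum(return_probs(0.5, t)) for t in (10, 100, 1000)]
```

For <math>p = 0.3</math> the sum settles at 2.5, while for <math>p = 0.5</math> the partial sums grow without levelling off, consistent with recurrence only in the symmetric case.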

====Convergence of Markov chain====

We define the <math>\displaystyle \emph{Recurrence}</math> <math>\emph{time}</math> <math>\displaystyle T_{ij}</math> as the minimum time needed to go from state i to state j. It is also possible that state j is not reachable from state i, in which case the recurrence time is infinite. Given <math>x_0 = i</math>,

<math>\displaystyle T_{ij}=\begin{cases}
\min\{n \geq 1: x_{n}=j\}, & \text{if such an } n \text{ exists} \\
\infty, & \text{otherwise }
\end{cases}</math>

The mean recurrence time <math>\displaystyle m_{i}</math> is defined as:

<math>m_{i}=\displaystyle E(T_{ii})=\sum_{n} n f_{ii}(n) </math>

where <math>\displaystyle f_{ij}(n)=Pr(x_{1}\neq j,x_{2}\neq j,\cdots,x_{n-1}\neq j,x_{n}=j \mid x_{0}=i)</math>

Using the objects we just introduced, we say that: | |||

<math>\displaystyle \text{state } i=\begin{cases} | |||

\text{null}, & \text{if } m_{i}=\infty \\ | |||

\text{non-null or positive} , & \text{otherwise } | |||

\end{cases}</math> | |||

<br>'''Lemma'''<br> | |||

In a finite state Markov Chain, all the recurrent states are positive.

====Periodic and aperiodic Markov chain==== | |||

A Markov chain is called <math>\emph{periodic}</math> of period <math>\displaystyle n</math> (with <math>n > 1</math>) if, starting from a state, we can only return to that state in a multiple of <math>n</math> steps.

<br>'''Example'''<br> | |||

Consider the three-state chain:

<math>P=\left(\begin{matrix}
0&1&0\\
0&0&1\\
1&0&0
\end{matrix}\right)</math>

It's evident that, starting from state 1, we will return to it on every <math>3^{rd}</math> step, and the same holds for the other two states. The chain is therefore periodic with period <math>d=3</math>.

An irreducible Markov chain is called <math>\emph{aperiodic}</math> if:

<math>\displaystyle Pr(x_{n}=j | x_{0}=i) > 0 \text{ and } Pr(x_{n+1}=j | x_{0}=i) > 0 \text{ for some } n\ge 0 </math>

<br>'''Another Example'''<br> | |||

Consider the chain | |||

<math>P=\left(\begin{matrix}
0&0.5&0&0.5\\
0.5&0&0.5&0\\
0&0.5&0&0.5\\
0.5&0&0.5&0
\end{matrix}\right)</math>

This chain is periodic by definition. Starting from state 1, every step moves between the odd-numbered and the even-numbered states, so you can only get back to state 1 in an even number of steps <math>\Rightarrow</math> period <math>d=2</math>
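Both examples can be checked with a short Python sketch. It uses the usual formalization of the period of a state as the greatest common divisor of all step counts <math>n</math> with <math>P_{ii}(n) > 0</math>, which is an assumption beyond the informal definition given above; the search is truncated at `max_steps`, an arbitrary cutoff that is ample for these small chains.

```python
from math import gcd

def mat_mul(A, B):
    """Plain matrix product A B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def period(P, state, max_steps=50):
    """gcd of all n <= max_steps with P^n[state][state] > 0 (0 if none found)."""
    d, Pn = 0, P                  # Pn holds P^n, starting with n = 1
    for n in range(1, max_steps + 1):
        if Pn[state][state] > 0:
            d = gcd(d, n)
        Pn = mat_mul(Pn, P)
    return d

cycle = [[0, 1, 0],
         [0, 0, 1],
         [1, 0, 0]]

two_block = [[0, 0.5, 0, 0.5],
             [0.5, 0, 0.5, 0],
             [0, 0.5, 0, 0.5],
             [0.5, 0, 0.5, 0]]

d_cycle = period(cycle, 0)        # 3
d_block = period(two_block, 0)    # 2
```

A state with a positive probability of staying put, such as state 0 in `[[0.5, 0.5], [1.0, 0.0]]`, gets period 1, i.e. it is aperiodic.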

==Markov Chains and their Stationary Distributions - June 16, 2009== | |||

====New Definition:Ergodic==== | |||

A state is '''Ergodic''' if it is non-null, recurrent, and aperiodic. A Markov Chain is ergodic if all its states are ergodic. | |||

Define a vector <math>\pi</math> where <math>\pi_i \geq 0 \ \forall i</math> and <math>\sum_i \pi_i = 1</math> (i.e. <math>\pi</math> is a pmf)

<math>\pi</math> is a stationary distribution if <math>\pi=\pi P</math> where P is a transition matrix. | |||


====Limiting Distribution==== | |||

If as <math>n \longrightarrow \infty , P^n \longrightarrow \left[ \begin{matrix}
\pi\\
\pi\\
\vdots\\
\pi\\
\end{matrix}\right]</math>

then <math>\pi</math> is the limiting distribution of the Markov Chain represented by P.<br />

'''Theorem:''' An irreducible, ergodic Markov Chain has a unique stationary distribution <math>\pi</math> and there exists a limiting distribution which is also <math>\pi</math>. | |||

====Detailed Balance==== | |||

The condition for detailed balance is <math>\displaystyle \pi_i p_{ij} = p_{ji} \pi_j </math> for all states <math>i, j</math>.

=====Theorem===== | |||

If <math>\pi</math> satisfies detailed balance then it is a stationary distribution. | |||

'''Proof.'''<br>

We need to show <math>\pi = \pi P</math> | |||

<math>\displaystyle [\pi p]_j = \sum_{i} \pi_i p_{ij} = \sum_{i} p_{ji} \pi_j = \pi_j \sum_{i} p_{ji}= \pi_j </math> as required | |||

Warning! A chain that has a stationary distribution does not necessarily converge. | |||

For example, | |||

<math>P=\left(\begin{matrix} | |||

0&1&0\\ | |||

0&0&1\\ | |||

1&0&0 | |||

\end{matrix}\right)</math> has a stationary distribution <math>\left(\begin{matrix} | |||

1/3&1/3&1/3 | |||

\end{matrix}\right)</math> but it will not converge. | |||
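The warning can be seen directly by iterating the chain. In the sketch below (the helper name is hypothetical), the uniform vector is left unchanged by <math>P</math>, but a chain started in state 1 cycles through the three states forever, so <math>\mu_n</math> never settles down.

```python
def vec_mat(mu, P):
    """Row vector times matrix: (mu P)_j = sum_i mu_i P_ij."""
    return [sum(mu[i] * P[i][j] for i in range(len(mu))) for j in range(len(P[0]))]

P = [[0, 1, 0],
     [0, 0, 1],
     [1, 0, 0]]

# The uniform distribution is stationary: pi P = pi.
pi = [1/3, 1/3, 1/3]
pi_next = vec_mat(pi, P)

# Starting from state 1 with certainty, the marginals cycle with period 3.
mu = [1.0, 0.0, 0.0]
history = []
for _ in range(6):
    history.append(mu)
    mu = vec_mat(mu, P)
# history: [1,0,0], [0,1,0], [0,0,1], [1,0,0], [0,1,0], [0,0,1]
```

The chain has period 3, so it is not ergodic, and the theorem guaranteeing a limiting distribution does not apply.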

====Stationary Distribution==== | |||

<math>\pi</math> is a stationary (or invariant) distribution if <math>\pi = \pi P</math>.

For example, if <math>\pi = [0.5 \ 0 \ 0.5]</math>, then in the long run the chain spends half of its time in the 1st state and half of its time in the 3rd state.

====Theorem==== | |||

An irreducible ergodic Markov Chain has a unique stationary distribution <math>\pi</math>. | |||

The limiting distribution exists and is equal to <math>\pi</math>. | |||

If g is any bounded function, then with probability 1: | |||

<math>\lim_{N \to \infty} \frac{1}{N}\displaystyle\sum_{n=1}^Ng(x_n) = E_\pi(g)=\displaystyle\sum_{j}g(j)\pi_j</math>

====Example==== | |||

Find the limiting distribution of | |||

<math>P=\left(\begin{matrix} | |||

1/2&1/2&0\\ | |||

1/2&1/4&1/4\\ | |||

0&1/3&2/3 | |||

\end{matrix}\right)</math> | |||

Solve <math>\pi=\pi P</math> | |||

<math>\displaystyle \pi_0 = 1/2\pi_0 + 1/2\pi_1</math><br /> | |||

<math>\displaystyle \pi_1 = 1/2\pi_0 + 1/4\pi_1 + 1/3\pi_2</math><br /> | |||

<math>\displaystyle \pi_2 = 1/4\pi_1 + 2/3\pi_2</math><br /> | |||


Also <math>\displaystyle \sum_i \pi_i = 1 \longrightarrow \pi_0 + \pi_1 + \pi_2 = 1</math><br /> | |||

We can solve the above system of equations and obtain <br /> | |||

<math>\displaystyle \pi_2 = 3/4\pi_1</math><br /> | |||

<math>\displaystyle \pi_0 = \pi_1</math><br /> | |||

Thus, <math>\displaystyle \pi_0 + \pi_1 + 3/4\pi_1 = 1</math> | |||

and we get <math>\displaystyle \pi_1 = 4/11</math> | |||

Subbing <math>\displaystyle \pi_1 = 4/11</math> back into the system of equations we obtain <br /> | |||

<math>\displaystyle \pi_0 = 4/11</math> and <math>\displaystyle \pi_2 = 3/11</math> | |||

Therefore the limiting distribution is <math>\displaystyle \pi = (4/11, 4/11, 3/11)</math> | |||
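The hand calculation can be confirmed numerically. The sketch below does not solve the linear system; instead it assumes the chain is ergodic (as the theorem requires) and simply applies <math>P</math> repeatedly to an arbitrary starting distribution, which should converge to the same <math>\pi</math>. The function name and iteration count are our own choices.

```python
def vec_mat(mu, P):
    """Row vector times matrix: (mu P)_j = sum_i mu_i P_ij."""
    return [sum(mu[i] * P[i][j] for i in range(len(mu))) for j in range(len(P[0]))]

def limiting_distribution(P, iters=500):
    """Iterate mu <- mu P from the uniform distribution."""
    mu = [1.0 / len(P)] * len(P)
    for _ in range(iters):
        mu = vec_mat(mu, P)
    return mu

P = [[1/2, 1/2, 0],
     [1/2, 1/4, 1/4],
     [0, 1/3, 2/3]]

pi = limiting_distribution(P)        # close to (4/11, 4/11, 3/11)
residual = max(abs(a - b) for a, b in zip(pi, vec_mat(pi, P)))   # checks pi = pi P
```

The result agrees with <math>(4/11, 4/11, 3/11)</math> to many decimal places, and `residual` confirms that it satisfies <math>\pi = \pi P</math>.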

==Monte Carlo using Markov Chain - June 18, 2009== | |||

Consider the problem of computing <math> I = \displaystyle\int h(x)f(x)\,dx </math>

<math>\bullet</math> Generate <math>\displaystyle X_1</math>, <math>\displaystyle X_2</math>,... from a Markov Chain with stationary distribution <math>\displaystyle f(x)</math>

<math>\bullet</math> <math>\hat{I} = \frac{1}{N}\displaystyle\sum_{i=1}^Nh(x_i)\longrightarrow E_f(h(x))=I</math>

====''''' Metropolis Hastings Algorithm''''' ==== | |||

The Metropolis Hastings Algorithm first originated in the physics community in 1953 and was adopted later on by statisticians. It was originally used for the computation of a Boltzmann distribution, which describes the distribution of energy for particles in a system. In 1970, Hastings extended the algorithm to the general procedure described below. | |||

Suppose we wish to sample from <math>f(x)</math>, the 'target' distribution. Choose <math>q(y | x)</math>, the 'proposal' distribution that is easily sampled from.<br /><br /> | |||

:'''<math>\emph{Procedure:}</math> '''

:<br />1. Initialize <math>\displaystyle X_0</math>, the starting point of the chain (chosen randomly), and set the index <math>\displaystyle i=0</math>

:<br />2. Draw <math>Y~ \sim~ q(y\mid{X_i})</math>

:<br />3. Compute <math>\displaystyle r(X_i,Y)</math>, where <math>r(x,y)=\min{\{\frac{f(y)}{f(x)} \cdot \frac{q(x\mid{y})}{q(y\mid{x})},1}\}</math>

:<br />4. Draw <math> U~ \sim~ Unif [0,1] </math>

:<br />5. If <math>\displaystyle U<r </math>

:::then <math>\displaystyle X_{i+1}=Y </math>

:::else <math>\displaystyle X_{i+1}=X_i </math>

:<br />6. Update index <math>\displaystyle i=i+1</math>, and go to step 2
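The six steps can be sketched generically with function handles. This is a minimal illustration under stated assumptions, not the course's implementation: the target <code>f</code> (a standard normal known up to a constant), the normal proposal, and the names <code>qdraw</code> and <code>qpdf</code> are all hypothetical choices made here. The proposal ratio is kept in the code even though it equals 1 for this symmetric choice, so that the full acceptance ratio is visible.

```matlab
% Assumed example target: standard normal, known only up to a constant.
f     = @(x) exp(-x.^2 / 2);
b     = 1;                                      % proposal scale (assumed)
qdraw = @(x) x + b*randn;                       % draws Y ~ q(. | x) = N(x, b^2)
qpdf  = @(y, x) exp(-(y - x).^2 / (2*b^2));     % q(y | x) up to a constant

N = 5000;
X = zeros(1, N);
X(1) = randn;                                   % step 1: random starting point

for i = 1:N-1
    Y = qdraw(X(i));                            % step 2: propose
    r = min( f(Y)/f(X(i)) * qpdf(X(i), Y)/qpdf(Y, X(i)), 1 );   % step 3
    U = rand;                                   % step 4
    if U < r                                    % step 5: accept or reject
        X(i+1) = Y;
    else
        X(i+1) = X(i);
    end
end                                             % step 6 is the loop increment

fprintf('sample mean = %.3f, sample variance = %.3f\n', mean(X), var(X));
```

Normalizing constants of <code>f</code> and <code>qpdf</code> cancel in the ratio, which is why both can be supplied up to a constant.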

'''''A few remarks about the algorithm'''''

'''Remark 1:''' A good choice for <math>q(y\mid{x})</math> is <math>\displaystyle N(x,b^2)</math> where <math>\displaystyle b>0 </math> is a constant, i.e. the proposal distribution is a normal centered at the current state <math>\displaystyle x</math> of the chain (initially the randomly chosen starting point <math>\displaystyle X_0</math>).

'''Remark 2:''' If the proposal distribution is symmetric, <math>q(y\mid{x})=q(x\mid{y})</math>, then <math>r(x,y)=\min{\{\frac{f(y)}{f(x)},1}\}</math>. This is called the Metropolis Algorithm; it is the original algorithm of Metropolis (1953) and a special case of Metropolis-Hastings.

'''Remark 3:''' <math>\displaystyle N(x,b^2)</math> is symmetric: the density of sampling y from a normal with mean x equals the density of sampling x from a normal with mean y.

'''Example:''' The Cauchy distribution has density <math> f(x)=\frac{1}{\pi} \cdot \frac{1}{1+x^2}</math>

Let the proposal distribution be <math>q(y\mid{x})=N(x,b^2) </math>

<math>r(x,y)=\min{\{\frac{f(y)}{f(x)} \cdot \frac{q(x\mid{y})}{q(y\mid{x})},1}\}</math>

::<math>=\min{\{\frac{f(y)}{f(x)},1}\}</math> since <math>q(y\mid{x})</math> is symmetric <math>\Rightarrow</math> <math>\frac{q(x\mid{y})}{q(y\mid{x})}=1</math>

::<math>=\min{\{\frac{ \frac{1}{\pi}\frac{1}{1+y^2} }{ \frac{1}{\pi} \frac{1}{1+x^2} },1}\}</math>

::<math>=\min{\{\frac{1+x^2 }{1+y^2},1}\}</math>

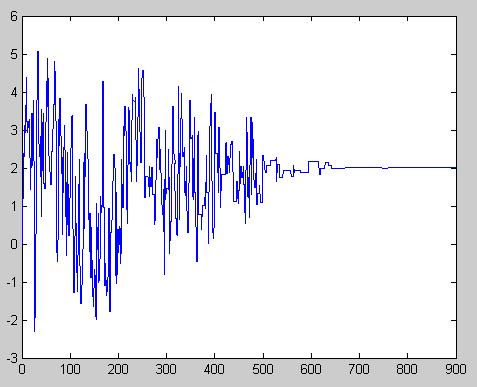

Now, having calculated <math>\displaystyle r(x,y)</math>, we complete the problem in Matlab using the following code:

 b=2;                  % let b=2 for now, we will see what happens when b is smaller or larger
 X=zeros(1,10000);     % preallocate the chain
 X(1)=randn;           % random starting point
 for i=2:10000
     Y=b*randn+X(i-1);                 % propose Y ~ N(X(i-1),b^2); we want to decide whether we accept this Y
     r=min( (1+X(i-1)^2)/(1+Y^2),1);   % acceptance probability for the Cauchy target
     u=rand;
     if u<r
         X(i)=Y;       % accept Y
     else
         X(i)=X(i-1);  % reject Y and remain in the current state
     end;
 end;
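Once the chain has been generated, it can be used for the Monte Carlo estimate <math>\hat{I} = \frac{1}{N}\sum h(X_i)</math>. The sketch below reruns the same sampler and assumes the test function <math>h(x)=1_{\{|x|\leq 1\}}</math>, chosen here only because the exact answer for the standard Cauchy is known: <math>P(|X|\leq 1)=\frac{2}{\pi}\arctan(1)=0.5</math>.

```matlab
b = 2;  N = 10000;
X = zeros(1, N);
X(1) = randn;
for i = 2:N
    Y = b*randn + X(i-1);                          % random-walk proposal
    if rand < min((1 + X(i-1)^2)/(1 + Y^2), 1)     % Cauchy acceptance ratio
        X(i) = Y;
    else
        X(i) = X(i-1);
    end
end

h    = @(x) double(abs(x) <= 1);   % assumed test function (indicator of [-1, 1])
Ihat = mean(h(X));                 % Monte Carlo estimate of E_f[h(X)]
fprintf('Estimate of P(|X| <= 1): %.3f (exact value 0.5)\n', Ihat);
```

The samples are correlated, so the estimate is noisier than one based on the same number of independent draws.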

'''''We need to be careful about choosing b!'''''

:'''If b is too large'''

::Then the proposed <math>\displaystyle Y</math> usually lands far out in the tails of the target, so the fraction <math>\frac{f(y)}{f(x)}</math> is very small <math>\Rightarrow</math> <math>r=\min{\{\frac{f(y)}{f(x)},1}\}</math> is very small as well.

::It is highly unlikely that <math>\displaystyle u<r</math>, so the probability of rejecting <math>\displaystyle Y</math> is high and the chain is likely to get stuck in the same state for a long time <math>\rightarrow</math> the chain may not converge to the right distribution in a reasonable number of steps.

::This is easy to observe by looking at the histogram of <math>\displaystyle X</math>: its shape will not resemble the shape of the target <math>\displaystyle f(x)</math>.

:'''If b is too small'''

::Then we are setting up our proposal distribution <math>q(y\mid{x})</math> to be much narrower than the target <math>\displaystyle f(x)</math>, so the chain moves in tiny steps and will not have a chance to explore the state space and visit the majority of the states of the target <math>\displaystyle f(x)</math>.

:'''If b is just right'''

::A well-chosen b will help avoid the issues mentioned above, and we can say that the chain is "mixing well".

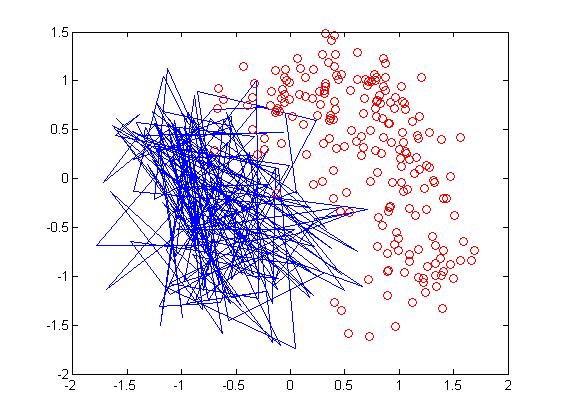

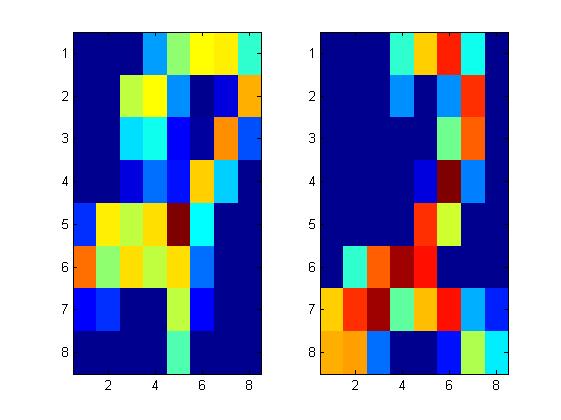

<gallery widths="250px" heights="250px">
Image:Blarge.jpg|B too large (B=1000)
Image:Bsmall.JPG|B too small (B=0.001)
Image:Bgood.JPG|Good choice of B (B=2)
</gallery>
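The effect of b can also be seen in the empirical acceptance rate. The following sketch reruns the Cauchy sampler for the three values shown in the gallery (1000, 0.001 and 2) and counts how often the proposal is accepted. The counter <code>nAccept</code> and the vector <code>accRate</code> are names introduced here for illustration.

```matlab
bs = [0.001 2 1000];            % too small, reasonable, too large
N  = 10000;
accRate = zeros(size(bs));

for k = 1:numel(bs)
    b = bs(k);
    X = zeros(1, N);
    X(1) = randn;
    nAccept = 0;
    for i = 2:N
        Y = b*randn + X(i-1);
        r = min((1 + X(i-1)^2)/(1 + Y^2), 1);
        if rand < r
            X(i) = Y;
            nAccept = nAccept + 1;
        else
            X(i) = X(i-1);
        end
    end
    accRate(k) = nAccept/(N - 1);
    fprintf('b = %g: acceptance rate = %.3f\n', b, accRate(k));
end
```

A rate near 1 with a tiny b is not a sign of good mixing: almost every step is accepted, but each step barely moves the chain.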

'''Mathematical explanation for why this algorithm works:'''

So far we have discussed ''discrete'' Markov chains.

<br /> We have seen that: <br />- <math>\displaystyle \pi</math> satisfies detailed balance if <math>\displaystyle \pi_iP_{ij}=P_{ji}\pi_j</math> and <br />- if <math>\displaystyle\pi</math> satisfies ''detailed balance'' then it is a stationary distribution, <math>\displaystyle \pi=\pi P</math>

In the ''continuous'' case we write detailed balance as <math>\displaystyle f(x)P(x,y)=P(y,x)f(y)</math> and say that <br /><math>\displaystyle f(x)</math> is a ''stationary distribution'' if <math>f(x)=\int f(y)P(y,x)dy</math>.

We want to show that if detailed balance holds (i.e. assume <math>\displaystyle f(x)P(x,y)=P(y,x)f(y)</math>) then <math>\displaystyle f(x)</math> is a stationary distribution:

:<math>\int f(y)P(y,x)dy</math>

:::<math>=\int f(x)P(x,y)dy</math> by detailed balance

:::<math>=f(x)\int P(x,y)dy</math>

:::<math>=\displaystyle f(x)</math> since <math>\int P(x,y)dy=1</math>

'''''Now, we need to show that detailed balance holds in the Metropolis-Hastings algorithm.'''''

Consider 2 points <math>\displaystyle x</math> and <math>\displaystyle y</math>:

:'''Either''' <math>\frac{f(y)}{f(x)} \cdot \frac{q(x\mid{y})}{q(y\mid{x})}>1</math> '''OR''' <math>\frac{f(y)}{f(x)} \cdot \frac{q(x\mid{y})}{q(y\mid{x})}<1</math> (if the product equals 1, both acceptance probabilities are 1 and detailed balance is immediate)

Without loss of generality, suppose that the product is <math>\displaystyle<1</math>.

In this case <math>r(x,y)=\frac{f(y)}{f(x)} \cdot \frac{q(x\mid{y})}{q(y\mid{x})}</math> and, since the reciprocal of the product is greater than 1, <math>\displaystyle r(y,x)=1</math>

:''Some intuitive meanings before we continue:''

:<math>\displaystyle P(x,y)</math> is the probability (density) of jumping from <math>\displaystyle x</math> to <math>\displaystyle y</math>, which happens if the proposal distribution generates <math>\displaystyle y</math> '''and''' <math>\displaystyle y</math> is accepted

:<math>\displaystyle P(y,x)</math> is the probability (density) of jumping from <math>\displaystyle y</math> to <math>\displaystyle x</math>, which happens if the proposal distribution generates <math>\displaystyle x</math> '''and''' <math>\displaystyle x</math> is accepted

:<math>q(y\mid{x})</math> is the probability (density) of generating <math>\displaystyle y</math> from <math>\displaystyle x</math>

:<math>q(x\mid{y})</math> is the probability (density) of generating <math>\displaystyle x</math> from <math>\displaystyle y</math>

:<math>\displaystyle r(x,y)</math> is the probability of accepting <math>\displaystyle y</math>

:<math>\displaystyle r(y,x)</math> is the probability of accepting <math>\displaystyle x</math>.

With that in mind we can show that <math>\displaystyle f(x)P(x,y)=P(y,x)f(y)</math> as follows:

<math>P(x,y)=q(y\mid{x}) \cdot r(x,y)=q(y\mid{x})\frac{f(y)}{f(x)}\frac{q(x\mid{y})}{q(y\mid{x})}</math>. Cancelling <math>\displaystyle q(y\mid{x})</math> and multiplying both sides by <math>\displaystyle f(x)</math> we get

<br /><math>f(x)P(x,y)=f(y)q(x\mid{y})</math> <math>\Leftarrow</math> '''equation''' <math>\clubsuit</math>

<math>P(y,x)=q(x\mid{y}) \cdot r(y,x)=q(x\mid{y}) \cdot 1</math>. Multiplying both sides by <math>\displaystyle f(y)</math> we get

<br /><math>f(y)P(y,x)=f(y)q(x\mid{y})</math> <math>\Leftarrow</math> '''equation''' <math>\clubsuit\clubsuit</math>

Noticing that the right hand sides of '''equation''' <math>\clubsuit</math> and '''equation''' <math>\clubsuit\clubsuit</math> are equal, we conclude that:

<br /><math>\displaystyle f(x)P(x,y)=P(y,x)f(y)</math> as desired, which shows that Metropolis-Hastings satisfies detailed balance.
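The argument can be checked numerically on a small discrete analogue. The sketch below is an illustration under assumptions: the four-state target <code>f</code> and the non-symmetric proposal matrix <code>Q</code> are made-up values, not taken from the lecture. It builds the Metropolis-Hastings transition matrix and verifies both detailed balance and stationarity.

```matlab
f = [0.1 0.2 0.3 0.4];               % hypothetical target on 4 states
Q = [0.10 0.40 0.30 0.20;            % hypothetical proposal: Q(i,j) = q(j | i)
     0.30 0.20 0.10 0.40;
     0.25 0.25 0.25 0.25;
     0.50 0.10 0.20 0.20];
n = numel(f);

P = zeros(n);
for i = 1:n
    for j = 1:n
        if i ~= j
            r = min( f(j)/f(i) * Q(j,i)/Q(i,j), 1 );   % acceptance probability
            P(i,j) = Q(i,j) * r;                       % propose j and accept it
        end
    end
    P(i,i) = 1 - sum(P(i,:));        % rejected or self-proposed moves stay at i
end

D = diag(f) * P;                     % D(i,j) = f(i) * P(i,j)
balanceErr = max(max(abs(D - D')));  % detailed balance: D should be symmetric
stationErr = max(abs(f*P - f));      % stationarity: f*P should equal f
fprintf('detailed balance error = %.2e, stationarity error = %.2e\n', ...
        balanceErr, stationErr);
```

Both errors should be at the level of floating-point rounding, whatever positive values are used for <code>f</code> and <code>Q</code>.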

Next lecture we will see that Metropolis-Hastings is also irreducible and ergodic, thus showing that it converges.

==Metropolis Hastings Algorithm Continued - June 25 ==

The Metropolis–Hastings algorithm is a Markov chain Monte Carlo method. It is used to sample from probability distributions that are difficult to sample from directly. The algorithm was named after Nicholas Metropolis (1915-1999), whose 1953 algorithm also underlies the Simulated Annealing method (which is introduced in this lecture as well). The Gibbs sampling algorithm, which will be introduced next lecture, is a special case of the Metropolis–Hastings algorithm. It is often more efficient, although it is less widely applicable.

In the last class, we showed that Metropolis Hastings satisfies the detailed balance equations, i.e.

<br /><math>\displaystyle f(x)P(x,y)=P(y,x)f(y)</math>, which means <math>\displaystyle f(x) </math> is the stationary distribution of the chain.

But this is not enough: we also want the chain to converge to the stationary distribution.

Thus, we also need it to be:

<b>Irreducible:</b> There is a positive probability of reaching any non-empty set of states from any starting point. This is trivial for many choices of <math>\displaystyle q</math>, including the one that we used in the example in the previous lecture (which was normally distributed).

<b>Aperiodic:</b> The chain will not oscillate between different sets of states. In the previous example, <math> q(y\mid{x}) </math> is <math> \displaystyle N(x,b^2)</math>, which will clearly not oscillate.

Next we discuss a couple of variations of Metropolis Hastings.

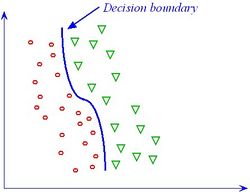

====''''' Random Walk Metropolis Hastings''''' ====

:'''<math>\emph{Procedure:}</math> '''

:<br />1. Draw <math>\displaystyle Y = X_i + \epsilon</math>, where <math>\displaystyle \epsilon </math> has distribution <math>\displaystyle g </math>, i.e. <math>\epsilon = Y-X_i \sim~ g </math>. Here <math>\displaystyle X_i </math> is the current state and <math>\displaystyle Y </math> is going to be close to <math>\displaystyle X_i </math>

:<br />2. This means <math>q(y\mid{x}) = g(y-x)</math>. (We take <math>\displaystyle g </math> to depend only on the distance between the current state and the state the chain is going to travel to, i.e. it is of the form <math>\displaystyle g(|y-x|) </math>. Hence in this version <math>\displaystyle q </math> is symmetric: <math>q(y\mid{x}) = g(|y-x|) = g(|x-y|) = q(x\mid{y})</math>)

:<br />3. <math>r=\min{\{\frac{f(y)}{f(x)},1}\}</math>

Recall that in our previous example we wanted to sample from the Cauchy distribution and our proposal distribution was <math> q(y\mid{x}) = N(x,b^2) </math>

In Matlab, we defined this as

<math>\displaystyle Y = b \cdot randn + x </math> (i.e. <math>\displaystyle Y = X_i + b \cdot randn </math>)

In this case, <math>\displaystyle \epsilon \sim~ N(0,b^2) </math>

The hard problem is to choose b so that the chain will mix well.

<b>Rule of thumb: </b> choose b such that the rejection probability is about 0.5 (i.e. accept roughly half the time and reject roughly half the time)
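One way to apply the rule of thumb is to run short pilot chains over a grid of scales and keep the one whose acceptance rate is closest to 0.5. The sketch below is only an illustration: it uses the Cauchy target from the earlier example, and, to show that <math>\displaystyle g</math> need not be normal, it assumes uniform increments <math>\epsilon \sim Unif(-b,b)</math>, which are also symmetric about zero. The grid <code>bs</code> and the pilot length <code>N</code> are arbitrary choices.

```matlab
bs  = [0.5 1 2 4 8 16];          % candidate scales (arbitrary grid)
N   = 5000;                      % pilot run length (arbitrary)
acc = zeros(size(bs));

for k = 1:numel(bs)
    b = bs(k);
    x = 0;                       % current state of the pilot chain
    nAccept = 0;
    for i = 1:N
        y = x + b*(2*rand - 1);  % epsilon ~ Unif(-b, b), symmetric about 0
        if rand < min((1 + x^2)/(1 + y^2), 1)   % r = min{f(y)/f(x), 1}
            x = y;
            nAccept = nAccept + 1;
        end
    end
    acc(k) = nAccept/N;
end

[~, kBest] = min(abs(acc - 0.5));   % scale whose acceptance rate is nearest 0.5
bBest = bs(kBest);
fprintf('b = %g gives acceptance rate %.3f\n', bBest, acc(kBest));
```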

<b>Example</b>

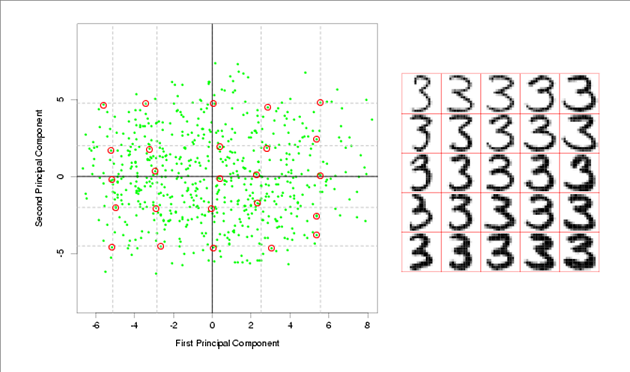

[[File:Figure.JPG]]

If we draw <math>\displaystyle y_1 </math> then <math>{\frac{f(y_1)}{f(x)}} > 1 \Rightarrow \min{\{\frac{f(y_1)}{f(x)},1}\} = 1</math>, so we accept <math>\displaystyle y_1</math> with probability 1

If we draw <math>\displaystyle y_2 </math> then <math>{\frac{f(y_2)}{f(x)}} < 1 \Rightarrow \min{\{\frac{f(y_2)}{f(x)},1}\} = \frac{f(y_2)}{f(x)}</math>, so we accept <math>\displaystyle y_2</math> with probability <math>\frac{f(y_2)}{f(x)}</math>

Hence, each point drawn from the proposal that belongs to a region with higher density will be accepted for sure (with probability 1), and if a point belongs to a region with lower density, then the chance that it will be accepted is less than 1.

====''''' Independence Metropolis Hastings''''' ====

In this case, the proposal distribution is independent of the current state, i.e. <math>\displaystyle q(y\mid{x}) = q(y)</math>

We draw from a fixed distribution

and define <math>r = \min{\{\frac{f(y)}{f(x)} \cdot \frac{q(x)}{q(y)},1}\}</math>

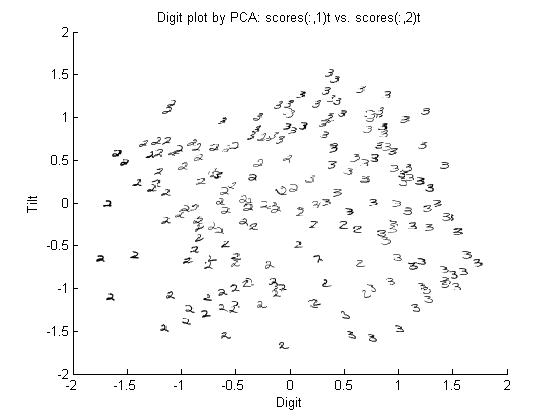

This does not work well unless <math>\displaystyle q </math> is very similar to the target distribution <math>\displaystyle f </math> (it is usually used when <math>\displaystyle f </math> is known up to a proportionality constant, i.e. the form of the distribution is known but the distribution is not exactly known)
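A minimal sketch of the independence sampler, under assumptions made here for illustration: the target is a standard normal known only up to its constant, and the fixed proposal is a standard Cauchy, drawn by the inverse transform <math>\tan(\pi(U-\frac{1}{2}))</math>. The Cauchy has heavier tails than the target, which keeps the ratio <math>f/q</math> bounded.

```matlab
f = @(x) exp(-x.^2 / 2);             % assumed target, up to a constant
q = @(x) 1 ./ (pi * (1 + x.^2));     % fixed proposal density: standard Cauchy

N = 20000;
X = zeros(1, N);                     % chain starts at X(1) = 0
nAccept = 0;
for i = 2:N
    Y = tan(pi * (rand - 0.5));      % Cauchy draw, independent of X(i-1)
    r = min( f(Y)/f(X(i-1)) * q(X(i-1))/q(Y), 1 );
    if rand < r
        X(i) = Y;
        nAccept = nAccept + 1;
    else
        X(i) = X(i-1);
    end
end

fprintf('acceptance rate = %.3f, mean = %.3f, variance = %.3f\n', ...
        nAccept/(N - 1), mean(X), var(X));
```

Unlike the random walk version, the proposal ratio <math>\frac{q(x)}{q(y)}</math> does not cancel here, so it must be kept in <code>r</code>.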