STAT946F17/ Teaching Machines to Describe Images via Natural Language Feedback

Introduction

In the era of Artificial Intelligence, one should ideally be able to educate a robot about its mistakes, possibly without needing to dig into the underlying software. Reinforcement learning (RL) has become a standard way of training artificial agents that interact with an environment. RL agents optimize their action policies so as to maximize the expected reward received from the environment. Several works have explored incorporating humans into the learning process in order to help the RL agent learn faster. In most cases, the guidance comes in the form of a simple numerical (or “good”/“bad”) reward. In this work, natural language is used to guide an RL agent instead. The authors argue that a sentence provides a much stronger learning signal than a numeric reward, because it can point to where the mistakes occur and suggest how to correct them.

Here the goal is to allow a non-expert human teacher to give feedback to an RL agent in natural language, just as one would to a learning child. The authors focus on image captioning, the task of describing the content of an image with a sentence. This is a multimodal problem: the model must combine visual understanding of the image with text generation. Image captioning is also an application where non-experts can easily judge the quality of the output.

Related Works

Several works incorporate human feedback to help an RL agent learn faster.

- Thomaz and Breazeal (2006) put humans in the loop to teach an agent to cook in a virtual kitchen. The users watch the agent learn and may intervene at any time to give a scalar reward. Reward shaping (Ng et al., 1999) is used to incorporate this information into the Markov Decision Process (MDP).

- Judah et al. (2010) alternate between “practice”, during which the agent interacts with the real environment, and a critique session, in which a human labels any subset of the chosen actions as good or bad.

- Knox and Stone (2012) discuss different ways of incorporating human feedback, including reward shaping, Q augmentation, action biasing, and control sharing.

- Griffith et al. (2013) propose policy shaping, which uses right/wrong feedback directly as policy labels.

- Mao et al. propose a multimodal Recurrent Neural Network (m-RNN) for image captioning and evaluate it on four benchmark datasets: IAPR TC-12, Flickr 8K, Flickr 30K and MS COCO [14]. Their model combines two sub-networks: a deep RNN for sentence generation and a deep CNN for image representation.

The above approaches mostly assume that humans provide a numeric reward, whereas in this work feedback is given in natural language. A few attempts have been made to advise an RL agent using language.

- Maclin and Shavlik (1994) translated advice into a short program, which was then implemented as a neural network. The units in this network represent Boolean concepts that recognize whether the observed state satisfies the constraints given by the program. When it does, the advice network encourages the policy to take the suggested action.

- Weston (2016) incorporates human feedback to improve a text-based question answering agent.

- Kaplan et al. (2017) exploit textual advice to reduce the training time of the A3C algorithm when playing an Atari game.

The authors propose a phrase-based image captioning model. It is similar to most image captioning models, except that it exploits attention and linguistic information (phrase types). Several recent approaches train the captioning model with policy gradients in order to directly optimize the desired performance metrics, and this work follows the same line.

There are also related efforts on dialogue-based visual representation learning and conversation modeling. These models aim to mimic human-to-human conversations, whereas in this work a human converses with and guides an artificial learning agent.

Methodology

The framework consists of a new phrase-based captioning model trained with Policy Gradients that incorporates natural language feedback provided by a human teacher. The phrase-based captioning model allows natural guidance by a non-expert.

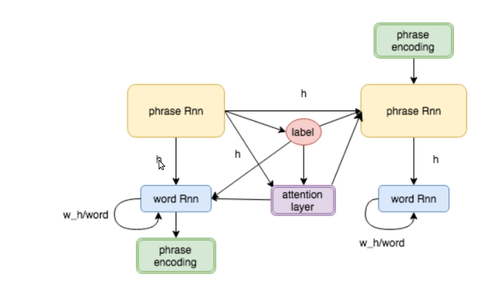

Phrase-based Image Captioning

The captioning model uses a hierarchical recurrent neural network (RNN), which stacks recurrent networks operating at different levels of granularity (here, phrases and words). Inspired by the work of Krause et al., the authors use a hierarchical RNN composed of two levels of LSTMs: a phrase RNN at the top level, and a word RNN at the second level that generates a sequence of words for each phrase. The main differences from Krause et al. are that the authors reason about the type of each phrase and exploit an attention mechanism over the image. The model receives an image as input and outputs a caption. One can think of the phrase RNN as providing a “topic” at each time step, which instructs the word RNN what to talk about. The structure of the model is shown in Figure 1.

A convolutional neural network (CNN) extracts a set of feature vectors $a = (a_1, \dots, a_n)$, where $a_j$ is the feature at location $j$ in the input image. These feature vectors are given to the attention layer, which also receives two other inputs: the current hidden state of the phrase-RNN and the output of the label unit. The label unit predicts one of four phrase labels: noun phrase (NP), prepositional phrase (PP), verb phrase (VP), or conjunction phrase (CP). This information can guide the attention layer. For example, for an NP the model may look at objects in the image, while for a VP it may focus on more global information. The computations can be expressed as follows:

$$ \begin{align*} \small{\textrm{hidden state of the phrase-RNN at time step t}} \leftarrow h_t &= f_{phrase}(h_{t-1}, l_{t-1}, c_{t-1}, e_{t-1}) \\ \small{\text{output of the label unit}} \leftarrow l_t &= softmax(f_{phrase-label}(h_t)) \\ \small{\text{output of the attention layer}} \leftarrow c_t &= f_{att}(h_t, l_t, a) \end{align*} $$
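The attention step can be pictured with a small, standard-library-only sketch. The summary does not specify the form of $f_{att}$, so this sketch makes some hypothetical choices. It builds a query by adding $h_t$ to the expected label embedding under $l_t$. It scores each image feature $a_j$ by a dot product with that query, and it returns $c_t$ as the attention-weighted average of the features. The helper names (`attend`, `label_emb`) are illustrative only, and the feature vectors are assumed to have the same dimension as $h_t$.

```python
import math
from typing import List, Sequence, Tuple

PHRASE_LABELS = ("NP", "PP", "VP", "CP")  # the four phrase types of the label unit


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def attend(h_t: Sequence[float],
           label_probs: Sequence[float],
           label_emb: Sequence[Sequence[float]],
           features: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Hypothetical f_att(h_t, l_t, a): returns (attention weights, context c_t)."""
    dim = len(h_t)
    # Soft label vector: expected label embedding under the label distribution l_t.
    label_vec = [sum(p * label_emb[k][d] for k, p in enumerate(label_probs))
                 for d in range(dim)]
    query = [h + l for h, l in zip(h_t, label_vec)]
    # One score per image location a_j, normalised into attention weights.
    alphas = softmax([dot(a_j, query) for a_j in features])
    # c_t is the attention-weighted average of the CNN feature vectors.
    c_t = [sum(alpha * a_j[d] for alpha, a_j in zip(alphas, features))
           for d in range(dim)]
    return alphas, c_t


# l_t = softmax(f_phrase-label(h_t)); here the label logits are simply given.
label_probs = softmax([5.0, 0.0, 0.0, 0.0])            # mostly "NP"
label_emb = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.0, 1.0]]
features = [[2.0, 0.0], [0.0, 2.0]]                     # two image locations
alphas, c_t = attend([1.0, 0.0], label_probs, label_emb, features)
```

In this toy setting the NP embedding points toward the first image location, so most of the attention mass lands there. A trained model would instead learn projection matrices for the query and the features.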

After each phrase step, the outputs of the phrase-RNN go to another LSTM, the word-RNN, which produces the words of the phrase. $w_{t,i}$ denotes the i-th word output by the word-RNN in the t-th phrase, and $h_{t,i}$ denotes the i-th hidden state of the word-RNN for the t-th phrase. The word-RNN vocabulary contains an additional <EOP> token, which signals the end of a phrase.

$$ \begin{align*} h_{t,i} &= f_{word}(h_{t,i-1}, c_t, w_{t,i}) \\ w_{t,i+1} &= f_{out}(h_{t,i}, c_t, w_{t,i}) \\ e_t &= f_{word-phrase}(w_{t,1}, \dots, w_{t,n}) \end{align*} $$

Note that $e_t$ encodes the generated phrase via simple mean-pooling over the words, which provides additional word-level context to the next phrase.
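The two-level decoding loop and the mean-pooled phrase encoding $e_t$ can be sketched as below. This is an illustration under stated assumptions, not the authors' code:

- `next_word` is a hypothetical stand-in for the word-RNN ($f_{word}$ and $f_{out}$).
- The number of phrases is fixed in advance, because the summary does not say how the phrase-RNN decides when to stop.
- The word embeddings are given rather than learned.

```python
from typing import Callable, Dict, List, Sequence, Tuple

EOP = "<EOP>"  # end-of-phrase token in the word-RNN vocabulary


def mean_pool(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def decode_caption(next_word: Callable[[int, List[str]], str],
                   embed: Dict[str, List[float]],
                   num_phrases: int,
                   dim: int,
                   max_words: int = 10) -> Tuple[List[List[str]], List[List[float]]]:
    """Outer loop over phrases, inner loop over words until <EOP>.

    next_word(t, words_so_far) is a hypothetical stand-in for the word-RNN
    conditioned on the phrase-RNN output for phrase t.
    """
    phrases: List[List[str]] = []
    encodings: List[List[float]] = []
    for t in range(num_phrases):
        words: List[str] = []
        while len(words) < max_words:
            w = next_word(t, words)
            if w == EOP:  # the word-RNN signals the end of phrase t
                break
            words.append(w)
        phrases.append(words)
        # e_t: mean-pooled word embeddings, fed back to the phrase-RNN at step t+1.
        encodings.append(mean_pool([embed[w] for w in words] or [[0.0] * dim]))
    return phrases, encodings


# Toy usage: a scripted "word-RNN" that emits two fixed phrases.
script = [["a", "dog"], ["on", "grass"]]
embed = {"a": [1.0, 0.0], "dog": [0.0, 1.0], "on": [1.0, 1.0], "grass": [3.0, 1.0]}
phrases, encodings = decode_caption(
    lambda t, ws: script[t][len(ws)] if len(ws) < len(script[t]) else EOP,
    embed, num_phrases=2, dim=2)
```

Here the second phrase "on grass" is encoded as the average of the embeddings of "on" and "grass", which is the extra word-level context that $e_t$ passes to the next phrase step.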

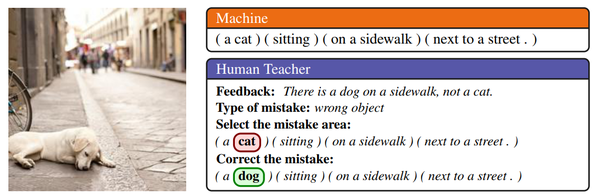

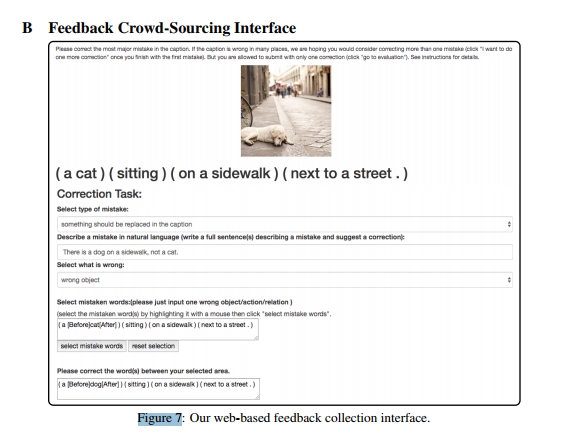

Crowd-sourcing Human Feedback

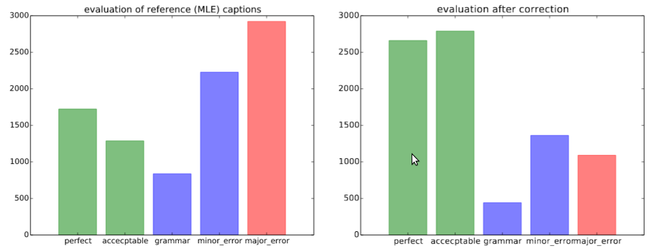

The authors created a web interface for collecting feedback at a larger scale via Amazon Mechanical Turk (AMT). Figure 2 depicts the interface and an example of caption correction. A snapshot of the model is used to generate captions for a subset of MS-COCO images using greedy decoding. There are two rounds of annotation. In the first round, the annotator is shown a captioned image and is asked to assess the quality of the caption by choosing one of: perfect, acceptable, grammar mistakes only, minor errors, or major errors. Annotators are asked to choose minor or major errors if the caption contains semantic errors. They should choose minor for small errors, such as wrong or missing attributes or awkward prepositions, and major whenever any object or action is named incorrectly. A visualization of this web-based interface is provided in Figure 3(a).

For the next (more detailed, and thus more costly) round of annotation, the authors only select captions that were not marked as either perfect or acceptable in the first round. Since these captions contain errors, the new annotator is required to provide detailed feedback about the mistakes. Annotators are asked to:

- Choose the type of required correction (something “should be replaced”, “is missing”, or “should be deleted”)

- Write feedback in natural language (annotators are asked to describe a single mistake at a time)

- Mark the type of mistake (whether the mistake corresponds to an error in object, action, attribute, preposition, counting, or grammar)

- Highlight the word/phrase that contains the mistake

- Correct the chosen word/phrase

- Evaluate the quality of the caption after correction (it could be bad even after one round of correction)
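The protocol above can be captured as a small data structure. In the sketch below, the option lists come from the text, but the record layout and field names are hypothetical. The post-correction rating is assumed to use the same five-level scale as the first round. The helper also shows the first-round filter: only captions not rated perfect or acceptable go on to the second round.

```python
from dataclasses import dataclass

# Option lists taken from the annotation protocol described above.
ROUND1_RATINGS = ("perfect", "acceptable", "grammar mistakes only",
                  "minor errors", "major errors")
CORRECTION_TYPES = ("should be replaced", "is missing", "should be deleted")
MISTAKE_TYPES = ("object", "action", "attribute", "preposition",
                 "counting", "grammar")


def needs_second_round(rating: str) -> bool:
    """Only captions not rated perfect or acceptable go to the costlier second round."""
    if rating not in ROUND1_RATINGS:
        raise ValueError(f"unknown rating: {rating!r}")
    return rating not in ("perfect", "acceptable")


@dataclass
class FeedbackRecord:
    """One second-round annotation (one mistake per record); field names are hypothetical."""
    caption: str          # caption produced by the model snapshot
    correction_type: str  # one of CORRECTION_TYPES
    feedback: str         # free-form natural-language feedback
    mistake_type: str     # one of MISTAKE_TYPES
    highlighted: str      # word/phrase containing the mistake
    corrected: str        # the annotator's correction of that word/phrase
    rating_after: str     # caption quality after correction (assumed same scale)

    def __post_init__(self) -> None:
        # Reject values outside the fixed option lists of the interface.
        if self.correction_type not in CORRECTION_TYPES:
            raise ValueError(f"unknown correction type: {self.correction_type!r}")
        if self.mistake_type not in MISTAKE_TYPES:
            raise ValueError(f"unknown mistake type: {self.mistake_type!r}")
        if self.rating_after not in ROUND1_RATINGS:
            raise ValueError(f"unknown rating: {self.rating_after!r}")
```

Keeping one mistake per record matches the instruction that annotators describe a single mistake at a time. A caption with several errors would therefore yield several records.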

Figure 3(b) shows the statistics of the evaluations before and after one round of correction. The authors acknowledge that the second round of annotation is costly.

Feedback Network

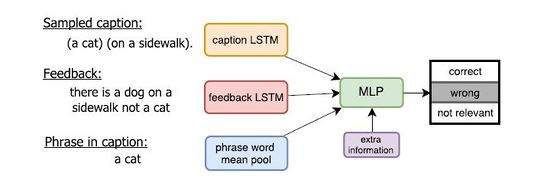

The collected feedback provides a strong supervisory signal that can be used in the RL framework. In particular, the authors design a neural network, the feedback network (FBN), which provides an additional reward based on the feedback sentence.

RL training requires generating samples (captions) from the model. During training, the sampled captions for each training image will therefore differ from the caption produced by the reference maximum likelihood estimation (MLE) model, which is the caption the feedback was written for. The goal of the feedback network is to read a newly sampled caption and judge the correctness of each phrase conditioned on the feedback. This network performs the following computations:

$$ \begin{align*} h_t^{caption} &= f_{sent}(h_{t-1}^{caption}, \omega_t^c) \\ h_t^{feedback} &= f_{sent}(h_{t-1}^{feedback}, \omega_t^f) \\ q_i &= f_{phrase}(\omega_{i,1}^c, \omega_{i,2}^c, \dots, \omega_{i,N}^c) \\ o_i &= f_{fbn}(h_T^{caption}, h_T^{feedback}, q_i, m) \end{align*} $$

Here, $\omega_t^c$ and $\omega_t^f$ denote the one-hot encodings of the t-th word in the sampled caption and in the feedback sentence, respectively, and $\omega_{i,k}^c$ is the k-th word of phrase i in the sampled caption. FBN encodes both the caption and the feedback using an LSTM ($f_{sent}$). It mean-pools ($f_{phrase}$) the words of phrase i into a representation $q_i$ and passes this information through a 3-layer MLP ($f_{fbn}$). The MLP also receives the mistake type (e.g., wrong object/action), encoded as a one-hot vector $m$. Its output layer is a 3-way classifier that predicts whether phrase i is correct, wrong, or not relevant with respect to the feedback sentence.
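The input assembly and the 3-way output of the FBN can be sketched as follows. This is illustrative only: the LSTM final states are made-up numbers, and the MLP weights are random and untrained. The concatenation order $[h_T^{caption}; h_T^{feedback}; q_i; m]$ and the helper names are assumptions. $q_i$ is computed, as described above, by mean pooling the one-hot word vectors of the phrase.

```python
import math
import random
from typing import List, Sequence, Tuple

MISTAKE_TYPES = ("object", "action", "attribute", "preposition", "counting", "grammar")
FBN_CLASSES = ("correct", "wrong", "not relevant")
Layer = Tuple[List[List[float]], List[float]]  # (weight matrix, bias vector)


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def phrase_vector(word_ids: Sequence[int], vocab_size: int) -> List[float]:
    """q_i: mean pooling of the one-hot encodings of the words in phrase i."""
    q = [0.0] * vocab_size
    for w in word_ids:
        q[w] += 1.0 / len(word_ids)
    return q


def mistake_one_hot(mistake: str) -> List[float]:
    """m: one-hot encoding of the annotated mistake type."""
    return [1.0 if t == mistake else 0.0 for t in MISTAKE_TYPES]


def fbn_input(h_caption, h_feedback, q_i, m) -> List[float]:
    """Concatenate [h_T^caption; h_T^feedback; q_i; m] (assumed layout)."""
    return list(h_caption) + list(h_feedback) + list(q_i) + list(m)


def mlp_forward(x: List[float], layers: Sequence[Layer]) -> List[float]:
    """Dense layers with ReLU in between and a 3-way softmax at the end."""
    for k, (W, b) in enumerate(layers):
        x = [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]
        if k < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return softmax(x)


def random_layer(rng: random.Random, n_in: int, n_out: int) -> Layer:
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return W, [0.0] * n_out


# Toy usage with random (untrained) weights and made-up LSTM final states.
rng = random.Random(0)
h_cap, h_fb = [0.1, -0.2, 0.3, 0.0], [0.5, 0.1, -0.1, 0.2]
x = fbn_input(h_cap, h_fb, phrase_vector([0, 2], vocab_size=5), mistake_one_hot("object"))
layers = [random_layer(rng, len(x), 8), random_layer(rng, 8, 8), random_layer(rng, 8, 3)]
probs = mlp_forward(x, layers)
prediction = FBN_CLASSES[max(range(3), key=probs.__getitem__)]
```

Only the shapes and the data flow are meaningful here. A real FBN would be trained on the annotated phrases so that `prediction` tracks the feedback.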

Policy Gradient Optimization using Natural Language Feedback

One can think of a caption decoder as an agent following a parameterized policy $p_\theta$ that selects an action at each time step. An “action” here means choosing a word from the vocabulary (for the word RNN) or a phrase label (for the phrase RNN). The objective for learning the parameters of the model is the expected reward received when completing the caption $\omega^s = (\omega^s_1, \dots, \omega^s_T)$, where $\omega_t^s$ is the word sampled from the model at time step t.

$$ L(\theta) = -\mathbb{E}_{\omega^s \sim p_\theta}[r(\omega^s)] $$

The reward is computed on discrete sampled words and is not differentiable. Policy gradients are therefore used, as in [13], to obtain the gradient of the objective function:

$$ \nabla_\theta L(\theta) = -\mathbb{E}_{\omega^s \sim p_\theta}[r(\omega^s)\nabla_\theta \log p_\theta(\omega^s)] $$

In practice, this expectation is estimated using a single Monte-Carlo sample:

$$ \nabla_\theta L(\theta) \approx -r(\omega^s)\nabla_\theta \log p_\theta(\omega^s) $$

A baseline $b = r(\hat{\omega})$ is then subtracted, where $\hat{\omega}$ is the caption obtained by greedy decoding. A baseline does not change the expected gradient but can drastically reduce its variance:

$$ \begin{align*} \hat{\omega}_t &= \arg\max_{\omega_t} p(\omega_t \mid h_t) \\ \nabla_\theta L(\theta) &\approx -\left(r(\omega^s) - r(\hat{\omega})\right)\nabla_\theta \log p_\theta(\omega^s) \end{align*} $$

Reward: The sentence reward is defined as a weighted sum of BLEU scores. BLEU is an algorithm for evaluating the quality of text that has been machine-translated from one natural language to another. Quality is measured as the correspondence between a machine's output and that of a human. The central idea behind BLEU is that "the closer a machine translation is to a professional human translation, the better it is". BLEU was one of the first metrics to claim a high correlation with human judgements of quality [10, 11, 12], and it remains one of the most popular automated and inexpensive metrics.

$$ r(\omega^s) = \beta \sum_i \lambda_i \cdot BLEU_i(\omega^s, ref) $$
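To make the reward concrete, here is a minimal sketch of $r(\omega^s) = \beta \sum_i \lambda_i \cdot BLEU_i$. The summary does not say which BLEU variant is used, so $BLEU_i$ is assumed here to be cumulative sentence-level BLEU. That is the brevity penalty times the geometric mean of the clipped 1- to i-gram precisions, without smoothing. The weights $\lambda_i$ are hypothetical. The $\beta$ values given in the text (1 for perfect, 0.8 for acceptable) can be passed as `beta`. Established implementations, such as NLTK's `sentence_bleu`, differ in smoothing details.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate: Sequence[str], references: List[Sequence[str]], max_n: int) -> float:
    """Cumulative sentence-level BLEU-max_n with uniform weights and no smoothing."""
    log_prec = 0.0
    for n in range(1, max_n + 1):
        cand = ngrams(candidate, n)
        total = sum(cand.values())
        if total == 0:
            return 0.0
        max_ref = Counter()
        for ref in references:
            max_ref |= ngrams(ref, n)  # element-wise max count over references
        clipped = sum(min(count, max_ref[g]) for g, count in cand.items())
        if clipped == 0:
            return 0.0
        log_prec += math.log(clipped / total) / max_n
    # Brevity penalty against the reference length closest to the candidate length.
    c = len(candidate)
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(log_prec)


def caption_reward(candidate, references, lambdas: Sequence[float], beta: float = 1.0) -> float:
    """r(w^s) = beta * sum_i lambda_i * BLEU_i(w^s, ref), with i = 1..len(lambdas)."""
    return beta * sum(lam * bleu(candidate, references, i)
                      for i, lam in enumerate(lambdas, start=1))
```

For example, `caption_reward(tokens, [tokens], [0.25] * 4, beta=0.8)` scores a caption identical to an "acceptable" reference at 0.8.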

As reference captions for computing the reward, the authors use either captions generated by a snapshot of the model that were evaluated as having neither minor nor major errors, or ground-truth captions. In addition, they weight the reward by the caption quality provided by the annotators (e.g. $\beta = 1$ for perfect and $\beta = 0.8$ for acceptable). They further incorporate the reward provided by the feedback network:

$$ r(\omega_t^p) = r(\omega^s) + \lambda_f f_{fbn}(\omega^s, feedback, \omega_t^p) $$

where $\omega^p_t$ denotes the sequence of words in the t-th phrase and $\omega^s = \omega^p_1 \omega^p_2 \dots \omega^p_P$ denotes the generated sentence.

Note that FBN produces a classification for each phrase. This can be converted into a reward by mapping correct to 1, wrong to -1, and not relevant to 0. The final gradient therefore takes the following form:

$$ \nabla_\theta L(\theta) = -\sum_{p=1}^{P}\left(r(\omega^p) - r(\hat{\omega}^p)\right)\nabla_\theta \log p_\theta(\omega^p) $$

Implementation

The authors use the Adam optimizer with learning rate $10^{-6}$ and batch size 50. They first optimize the cross-entropy loss for the first $K$ epochs. Then, for the following epochs $t = 1, \dots, T$, they use the cross-entropy loss for the first $P - \lfloor t/m \rfloor$ phrases (where $P$ denotes the number of phrases). The policy gradient algorithm is used for the remaining $\lfloor t/m \rfloor$ phrases, with $m = 5$.
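The phrase-wise mixing schedule can be written as a small helper. The names are illustrative. The clamp at $P$ is an assumption, since the formula would otherwise go negative once $\lfloor t/m \rfloor$ exceeds the number of phrases.

```python
from typing import List, Tuple


def phrase_loss_split(num_phrases: int, t: int, m: int = 5) -> Tuple[int, int]:
    """(#phrases with cross-entropy, #phrases with policy gradient) at mixed epoch t >= 1."""
    n_pg = min(num_phrases, t // m)  # clamp to P: an assumption, not from the source
    return num_phrases - n_pg, n_pg


def phrase_loss_types(num_phrases: int, t: int, m: int = 5) -> List[str]:
    """Loss per phrase position: the first phrases keep cross-entropy ("xe"),
    the last floor(t/m) phrases use policy gradient ("pg")."""
    n_xe, n_pg = phrase_loss_split(num_phrases, t, m)
    return ["xe"] * n_xe + ["pg"] * n_pg
```

For a caption with P = 4 phrases and m = 5, epochs 1 to 4 still train every phrase with cross-entropy. Epoch 5 switches the last phrase to policy gradient, and epoch 12 uses two phrases of each kind. Policy gradient therefore takes over gradually from the end of the caption toward the beginning.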

Experimental Results

The authors use the MS-COCO dataset [9], a large-scale object detection, segmentation, and captioning dataset. Its features include:

- object segmentation, recognition in context, and superpixel stuff segmentation;
- 330K images (>200K labeled) and 1.5 million object instances;
- 80 object categories and 91 stuff categories;
- 5 captions per image;
- 250,000 people with keypoints.

The authors use 82K images for training, 2K for validation, and 4K for testing. To collect feedback, they randomly chose 7K images from the training set, as well as all 2K validation images. The word vocabulary has 23,115 words.

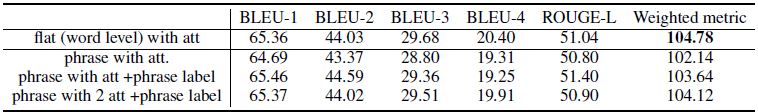

Phrase-based captioning model

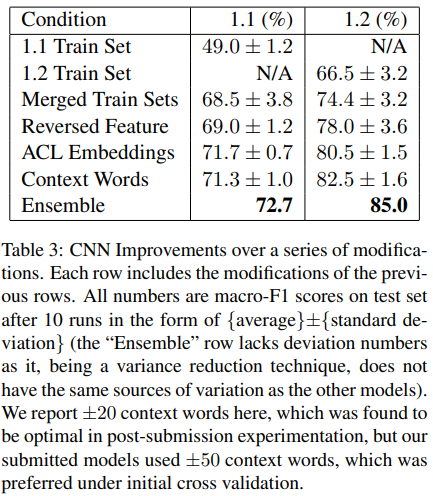

The authors analyze different instantiations of their phrase-based captioning model in the following table. As a sanity check, they compare it to a flat approach (word-RNN only). Overall, their model performs slightly worse than the flat baseline (by 0.66 points). However, its main strength is that it allows a more natural integration with feedback.

Feedback network

The authors use 9,000 images to collect feedback, and 5,150 of them are evaluated as containing errors. Finally, 4,174 images are used in the second round of annotation. They randomly select 9/10 of these as the training set for the feedback network and the remaining 1/10 as the test set. The model achieves its highest accuracy, 74.66%, when it is also given the type of mistake in the reference caption (e.g. object, action). This is not surprising, since this variant uses the most additional information, which is also the most time-consuming to collect.

RL with Natural Language Feedback

The following table reports the performance of several instantiations of the RL models. All models have been pre-trained using cross-entropy loss (MLE) on the full MS-COCO training set. For the next rounds of training, all the models are trained only on the 9K images.

The authors define “C” captions as all captions that were corrected by the annotators and not evaluated as containing minor or major errors, with ground-truth (GT) captions used for the rest of the images. For “A”, they use all captions (including captions evaluated as correct) that did not have minor or major errors, and GT for the rest. A detailed breakdown of these captions is reported in the following table. The authors test their model in two separate settings:

- They first test a model trained with the standard cross-entropy loss that also has access to the corrected captions in addition to the 5 GT captions. This model (MLEC) improves over the original MLE model by 1.4 points. They then test the RL model optimizing the metric with respect to the 5 GT captions. This adds another point, for a total improvement of 2.4 over the MLE model. Next, the RL agent is given access to 3 GT captions, the “C” captions, and the feedback sentences. This model outperforms the no-feedback RL baseline by 0.5 points. If the RL agent has access to 4 GT captions and the feedback descriptions, it gains 1.1 points over the baseline RL model and 3.5 over the MLE model.

- They also test a more realistic scenario, in which the models have access to a single GT caption, the “C” (or “A”) captions, and feedback. This mimics a teacher who observes the agent and gives feedback about its mistakes, or writes a new caption when the agent's caption is completely wrong. Interestingly, the RL model performs better when provided with the corrected captions than when given GT captions. Overall, their model outperforms the base RL model (no feedback) by 1.2 points.

These experiments make an important point: instead of giving the RL agent a completely new target caption, a better strategy is to “teach” the agent about the mistakes it is making and suggest a correction. One intuitive explanation is that pointing out an error conveys more targeted information than presenting a completely correct answer. A corrected caption stays close to what the model already produces, whereas a completely new caption may appear unrelated to the model's current predictions and is therefore harder to learn from.

Conclusion

In this paper, a human teacher is enabled to provide feedback to the learning agent in the form of natural language. The authors focused on the problem of image captioning. They proposed a hierarchical phrase-based RNN as the captioning model, which allowed natural integration with human feedback. They also crowd-sourced feedback and showed how to incorporate it in policy gradient optimization.

Critique

The authors of this paper treat the ground-truth caption and the feedback as the same kind of information source. However, feedback seems to contain much richer information than a ground-truth caption. This may explain why RLF (reinforcement learning with the feedback network) outperforms the other models in the experiments. In addition, the hierarchical phrase-based image captioning model does not outperform the flat baseline. It might therefore be worthwhile to apply the same idea to existing image captioning models, using parsing techniques to split their captions into phrases, and compare the results. Another interesting direction for future work is to incorporate the confidence score of the feedback network into the learning process in order to emphasize strong feedback.

Comments

In the hierarchical phrase-based RNN, human involvement is a key part of improving the performance of the network. According to this paper, the feedback network, which encodes sentences with an LSTM, is capable of handling simple sentences. What if the feedback is weak or even ambiguous? Is there a threshold that would let the network reject wrong feedback? It would also be interesting to see whether such a feedback strategy, built on this architecture, can be applied to machine translation.

My intuition is that this architecture is particularly well suited to handling sparse bad feedback, owing to the robustness of RNNs, and that it provides a solid foundation for feedback-augmented learning networks.

It is not clear whether human feedback is also used on examples that are already labelled.

References

[1] Huan Ling and Sanja Fidler. Teaching Machines to Describe Images via Natural Language Feedback. In arXiv:1706.00130, 2017.

[2] Shane Griffith, Kaushik Subramanian, Jonathan Scholz, Charles L. Isbell, and Andrea Lockerd Thomaz. Policy shaping: Integrating human feedback with reinforcement learning. In NIPS, 2013.

[3] K. Judah, S. Roy, A. Fern, and T. Dietterich. Reinforcement learning via practice and critique advice. In AAAI, 2010.

[4] A. Thomaz and C. Breazeal. Reinforcement learning with human teachers: Evidence of feedback and guidance. In AAAI, 2006.

[5] Richard Maclin and Jude W. Shavlik. Incorporating advice into agents that learn from reinforcements. In National Conference on Artificial Intelligence, pages 694–699, 1994.

[6] Jason Weston. Dialog-based language learning. In arXiv:1604.06045, 2016.

[7] Russell Kaplan, Christopher Sauer, and Alexander Sosa. Beating atari with natural language guided reinforcement learning. In arXiv:1704.05539, 2017.

[8] Andrew Y. Ng, Daishi Harada, and Stuart J. Russell. Policy invariance under reward transformations: Theory and application to reward shaping. In ICML, pages 278–287, 1999.

[9] COCO dataset. http://cocodataset.org/#home

[10] BLEU. Wikipedia. https://en.wikipedia.org/wiki/BLEU

[11] K. Papineni, S. Roukos, T. Ward, J. Henderson, and F. Reeder. Corpus-based comprehensive and diagnostic MT evaluation: Initial Arabic, Chinese, French, and Spanish results. In Proceedings of Human Language Technology 2002, San Diego, pages 132–137, 2002.

[12] C. Callison-Burch, M. Osborne, and P. Koehn. Re-evaluating the role of BLEU in machine translation research. In 11th Conference of the European Chapter of the Association for Computational Linguistics (EACL), pages 249–256, 2006.

[13] Siqi Liu, Zhenhai Zhu, Ning Ye, Sergio Guadarrama, and Kevin Murphy. Improved image captioning via policy gradient optimization of SPIDEr. Under review for ICCV 2017.

[14] Junhua Mao, Wei Xu, Yi Yang, Jiang Wang, Zhiheng Huang, and Alan Yuille. Deep captioning with multimodal recurrent neural networks (m-RNN). In arXiv:1412.6632, 2014.

[15] W. Bradley Knox and Peter Stone. Reinforcement learning from simultaneous human and mdp reward. In Intl. Conf. on Autonomous Agents and Multiagent Systems, 2012.

Appendix

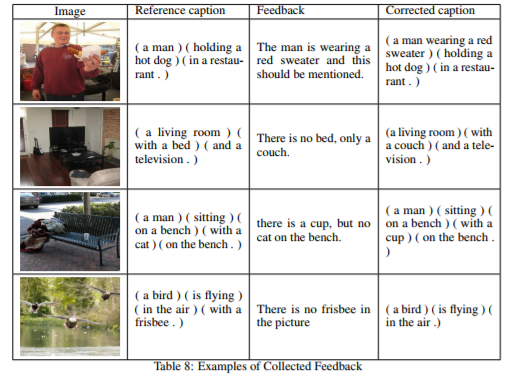

C Examples of Collected Feedback

The authors provide examples of feedback collected for the reference (MLE) model.