STAT946F17/Cognitive Psychology For Deep Neural Networks: A Shape Bias Case Study

Introduction

The recent surge in the use of Deep Neural Networks (DNNs) has produced large gains in predictive accuracy. DNNs are also being used to solve a variety of complex tasks at which earlier methods struggled.

While the high accuracy of DNNs is welcome, we must also ask why they perform so well. Visualizing features and feature maps, and interpreting the values learned in a DNN's hidden layers, has become an active field of study. Currently, we treat DNN models as black boxes and adjust their tunable hyperparameters empirically: the number of layers, the number of units in each layer, and the number and size of feature maps (in the case of CNNs). The learned parameters of DNNs lack an intuitive representation. The resulting opacity hinders both basic research and the application of DNNs to real-world problems.

Recent work has aimed to better understand DNNs. Tailor-made loss functions and architectures produce more interpretable features (Higgins et al., 2016; Raposo et al., 2017), while output-behavior analyses unveil previously opaque operations of these networks (Karpathy et al., 2015). In parallel, neuroscience-inspired methods have also been applied: activation visualization (Li et al., 2015), ablation analysis (Zeiler & Fergus, 2014) and activation maximization (Yosinski et al., 2015). Alternatively, probabilistic Hierarchical Bayesian Models have demonstrated great potential for learning structured and rich representations of the world from very little data (Lake et al., 2015).

This paper proposes another methodology for deciphering and better understanding how DNNs solve a particular task. The methodology is inspired by cognitive psychology. It tests whether DNNs make accurate predictions while exhibiting biases similar to those of the human mind.

Research in developmental psychology shows that when learning new words, humans tend to assign the same name to similarly shaped items rather than to items with similar color, texture, or size. This bias forms early in life. Humans then use it to readily extend names to new objects they have not seen before.

The authors of this paper test whether DNNs behave similarly in one-shot learning. They show that state-of-the-art DNNs used to learn objects from images exhibit a stronger bias towards shape than towards color. Humans bring prior knowledge to the learning of new words. Mirroring this, the authors take pre-trained DNN models and use them to perform one-shot learning on a new dataset with different labels.

Background

One Shot Learning

One-shot learning is an object categorization problem in computer vision. Whereas most machine learning based object categorization algorithms require training on hundreds or thousands of images and very large datasets, one-shot learning aims to learn information about object categories from one, or only a few, training images.

The one-shot word learning task is to label a novel data example $\hat{x}$ (e.g. a novel probe image) with a novel class label $\hat{y}$ (e.g. a new word) after only a single example.

More specifically, given a support set $S = \{(x_i, y_i) , i \in [1, k]\}$, of images $x_i$, and their associated labels $y_i$, and an unlabeled probe image $\hat{x}$, the one-shot learning task is to identify the true label of the probe image, $\hat{y}$, from the support set labels $\{y_i , i \in [1, k] \} $:

$\displaystyle \hat{y} = \arg\max_{y} P(y \mid \hat{x}, S)$

We assume that the image labels $y_i$ are represented using a one-hot encoding and that $P(y|\hat{x}, S)$ is parameterised by a DNN, allowing us to leverage the ability of deep networks to learn powerful representations.

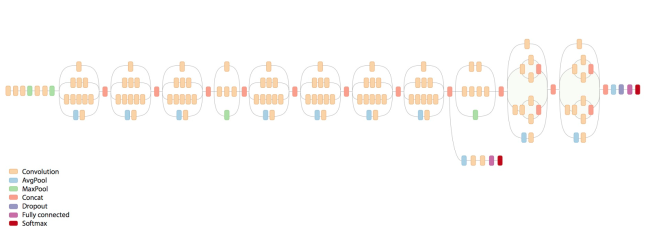

Inception Networks

A probe image $\hat{x}$ is given the label of the nearest neighbour from the support set:

$\hat{y} = y, \quad \text{where} \quad (x, y) = \displaystyle \arg\min_{(x_i, y_i) \in S} d(h(x_i), h(\hat{x}))$

and $d$ is a distance function.

The function h is parameterized by Inception – one of the best performing ImageNet classification models.

Specifically, h returns features from the last layer (the softmax input) of a pre-trained Inception classifier. With these features as input and cosine distance as the distance function, the classifier achieves 87.6% accuracy on one-shot classification on the ImageNet dataset (Vinyals et al., 2016). We call the Inception classifier together with the nearest-neighbor component the Inception Baseline (IB) model.
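Below is a minimal sketch of the nearest-neighbour rule, under three assumptions:

- The features $h(x)$ have already been extracted.
- Toy two-dimensional vectors stand in for Inception's softmax inputs.
- The labels "dax" and "wug" are hypothetical.

As in the IB setup, it uses cosine distance, defined as $1 - \cos(u, v)$. The function names are illustrative, not from the paper.

```python
import math


def cosine_distance(u, v):
    """1 - cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def nearest_neighbour_label(probe_features, support):
    """Return the label of the support item whose features are closest to the probe.

    `support` is a list of (features, label) pairs, i.e. (h(x_i), y_i).
    """
    _, best_label = min(support, key=lambda item: cosine_distance(item[0], probe_features))
    return best_label


support = [([1.0, 0.0], "dax"), ([0.0, 1.0], "wug")]
label = nearest_neighbour_label([0.9, 0.1], support)
```

Here the probe `[0.9, 0.1]` points almost in the same direction as the first support item, so it receives the label `"dax"`.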

Matching Networks

MNs (Vinyals et al., 2016) are fully differentiable neural network architectures with state-of-the-art one-shot learning performance on ImageNet (93.2% one-shot labelling accuracy). MNs are trained to assign label $\hat{y}$ to probe image $\hat{x}$ using an attention mechanism $a$ acting on image embeddings stored in the support set $S$:

\begin{align*} a(\hat{x},x_i)=\frac{e^{d(f(\hat{x},S), g(x_i,S))}}{\sum_{j}e^{d(f(\hat{x},S), g(x_j,S))}}, \end{align*}

Here $d$ is the cosine similarity, and $f$ and $g$ provide context-dependent embeddings of $\hat{x}$ and $x_i$ (with context $S$). The embedding $g(x_i, S)$ is a bidirectional LSTM (Hochreiter & Schmidhuber, 1997) that takes the support set $S$ as an input sequence. The embedding $f(\hat{x}, S)$ is an LSTM with a read-attention mechanism operating over the entire embedded support set. The inputs to these LSTMs are the penultimate-layer features of a pre-trained deep convolutional network, specifically Inception.
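The sketch below illustrates the attention rule on already-computed embeddings. As a simplifying assumption, the context-dependent LSTM embeddings $f$ and $g$ are replaced by fixed vectors.

Following Vinyals et al. (2016), the predicted label distribution is the attention-weighted sum of the one-hot support labels, $\hat{y} = \sum_i a(\hat{x}, x_i)\, y_i$. The exponent uses cosine similarity, so support items closer to the probe receive more weight. The function names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def attention(probe_emb, support_embs):
    """Softmax over cosine similarities: a(x_hat, x_i) for every support item."""
    scores = [cosine_similarity(probe_emb, s) for s in support_embs]
    m = max(scores)  # subtracting the max keeps exp() stable and leaves the softmax unchanged
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(probe_emb, support_embs, support_onehots):
    """Label distribution y_hat = sum_i a(x_hat, x_i) * y_i, with one-hot y_i."""
    weights = attention(probe_emb, support_embs)
    n_classes = len(support_onehots[0])
    return [sum(w * y[c] for w, y in zip(weights, support_onehots)) for c in range(n_classes)]


probs = predict([0.9, 0.1], [[1.0, 0.0], [0.0, 1.0]], [[1, 0], [0, 1]])
```

The result `probs` sums to 1. It puts more mass on the first label, because the probe embedding is closer to the first support embedding.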

Training MN

To train MNs we proceed as follows:

- Step 1: At each step of training, the model is given a small support set of images and associated labels. In addition to the support set, the model is fed an unlabeled probe image $\hat{x}$.

- Step 2: The model parameters are then updated to improve classification accuracy of the probe image $\hat{x}$ given the support set. Parameters are updated using stochastic gradient descent with a learning rate of 0.1.

- Step 3: After each update, the labels $\{y_i, i \in [1, k]\}$ in the training set are randomly re-assigned to new image classes. The label indices are randomly permuted, but the images' class memberships do not change. This step is critical because it prevents MNs from learning a consistent mapping between a category and a label. In ordinary classification such a fixed mapping is exactly what we want. In one-shot learning, however, we want the model to classify a probe after viewing only a single in-class example from the support set.
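The sketch below shows one way to sample an episode with freshly permuted label indices, so that no class keeps a fixed label index across episodes. Several details are hypothetical:

- The function `sample_episode`, the `images_by_class` dictionary and the `n_way` parameter are invented for illustration.
- Images are placeholder strings.
- The probe class is assumed to contain at least two images, so that the probe differs from its support example.

```python
import random


def sample_episode(images_by_class, n_way, rng):
    """Sample one n-way, one-shot episode with freshly permuted label indices.

    Returns (support, probe): support is a list of (image, label_index) pairs and
    probe is a single (image, label_index) pair from one of the support classes.
    """
    classes = rng.sample(sorted(images_by_class), n_way)
    # A new random class -> label-index assignment for every episode, so the
    # network cannot memorize a fixed mapping between a category and a label.
    labels = rng.sample(range(n_way), n_way)
    probe_pos = rng.randrange(n_way)
    support, probe = [], None
    for pos, (cls, label) in enumerate(zip(classes, labels)):
        if pos == probe_pos:
            # Two distinct images: one for the support set, one as the probe.
            support_img, probe_img = rng.sample(images_by_class[cls], 2)
            probe = (probe_img, label)
        else:
            support_img = rng.choice(images_by_class[cls])
        support.append((support_img, label))
    return support, probe


rng = random.Random(0)
data = {"cat": ["cat1", "cat2"], "dog": ["dog1", "dog2"], "cup": ["cup1", "cup2"]}
support, probe = sample_episode(data, 3, rng)
```

Drawing a fresh permutation per episode has the same effect as re-assigning labels after each update. Across many episodes, the label index attached to "cat" keeps changing.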

The objective function used is:

\begin{align*} L=E_{C\sim T}\biggl[E_{S \sim C, B \sim C}\bigl[\sum_{(x,y)\in B}\log P(y|x,S)\bigr]\biggr] \end{align*}

where $T$ is the set of all possible labelings of our classes, $S$ is a support set sampled with a class labeling $C \sim T$, and $B$ is a batch of probe images and labels that uses the same randomly chosen class labeling as the support set.
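The objective can be estimated by Monte Carlo:

1. Sample several episodes, each with its own labeling $C \sim T$.
2. For each episode, sum $\log P(y \mid x, S)$ over the probe batch $B$.
3. Average the sums over episodes.

Training maximizes this quantity (equivalently, minimizes its negative). In this sketch, `prob_fn(x, support)` is a hypothetical stand-in for the MN. It returns a probability for each label index, and labels are assumed to be indices `0..k-1`.

```python
import math


def episode_log_likelihood(prob_fn, support, batch):
    """Inner term of L: sum over (x, y) in B of log P(y | x, S)."""
    return sum(math.log(prob_fn(x, support)[y]) for x, y in batch)


def estimate_objective(prob_fn, episodes):
    """Monte Carlo estimate of L: average the inner term over sampled (S, B) episodes,
    each of which was drawn under its own random labeling C ~ T."""
    return sum(episode_log_likelihood(prob_fn, s, b) for s, b in episodes) / len(episodes)


def uniform_model(x, support):
    """Hypothetical baseline that ignores the probe and spreads probability evenly."""
    return [1.0 / len(support)] * len(support)


support = [("img_a", 0), ("img_b", 1)]
batch = [("probe_1", 0), ("probe_2", 1), ("probe_3", 0)]
value = estimate_objective(uniform_model, [(support, batch)])
```

For the uniform baseline on a two-item support set, each probe contributes $\log(1/2)$. A batch of three therefore gives $3 \log(1/2)$; a trained MN should score higher.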

Cognitive Biases

A cognitive bias, in simple terms, is a systematic deviation from logic. Wikipedia defines a cognitive bias as "a systematic pattern of deviation from norm or rationality in judgment, whereby inferences about other people and situations may be drawn in an illogical fashion". Biases can be seen as mental shortcuts, not always correct, that the mind uses to make decisions or learn.

In developmental psychology, such biases are used to explain how children can extract the meanings of words from very few examples, much like the one-shot learning setting discussed above. The theory, as explained by the authors, is that humans form biases that let them eliminate many potential hypotheses about a word's meaning when the available data would be insufficient to do so. These include:

- Whole object bias - Assumption that a word corresponds to an entire object, even if it may just specify a part of that object.

- Taxonomic bias - Tendency to assign a word to an object based on the group that object is in, rather than the theme related to that object.

- Mutual exclusivity bias - Assumption that a word only corresponds to one category of objects.

- Shape bias - The shape bias proposes that children apply names to same-shaped objects. This stems from the idea that children are associative learners that have abstract category knowledge at many different levels. They should be able to identify specifics of each category (e.g. pickles are round, long, green, and bumpy) [8].

A more complete list of word-learning biases is given by Bloom (2000). The bias investigated in this paper is the shape bias. It denotes a tendency to assign the same name to similarly shaped items rather than to items with similar color, texture, or size.

Methodology

Inductive Biases & Probe Data

Inductive biases are the criteria, either built in or learned, that a network uses to distinguish between classes. The biases that DNNs learn are often complex composite features. Researchers can exploit this by constructing probe datasets designed to expose a particular bias that a DNN might have.

- Step 1: Choose a known composite feature that we suspect the DNN is biased towards. The feature need not be numerical, as long as it is intuitive for human researchers to understand (e.g. shape or color).

- Step 2: Train the target model with an appropriate dataset.

- Step 3: Transfer learning: apply the pre-trained model to a new dataset curated to prove or disprove the existence of the bias.

- Step 4: Model/Decide on a function which quantifies the bias under study

- Step 5: Measure the bias with the bias function

The value of the bias function then indicates whether the DNN uses the feature to solve the task.

Data Sets Used

- Training Set: ImageNet

- Test Sets:

- The Cognitive Psychology Probe Data (CogPsyc data), which consists of 150 images of objects. The images are arranged in triples. Each triple contains a probe image, a shape-match image (which matches the probe in shape but not colour), and a colour-match image (which matches the probe in colour but not shape). The dataset contains 10 triples, each shown on 5 different backgrounds, giving a total of 50 triples.

- A real-world dataset consisting of 90 images of objects (30 triples), collected using Google Image Search. The images are arranged in triples, each consisting of a probe, a shape-match and a colour-match. The images are photographs of stimuli used in earlier real-world shape bias experiments.

Experiments

Evaluation Criteria

- For a given probe image $\hat{x}$, load two items into memory as the support set $S = \{(x_s, y_s), (x_c, y_c)\}$: the shape-match image $x_s$ with its label $y_s$, and the colour-match image $x_c$ with its label $y_c$.

- Compute $\hat{y}$ using the model under test (IB or MN).

- The model assigns either $y_c$ or $y_s$ to the probe image.

- To estimate the shape bias $B_s$, calculate the proportion of probes assigned the shape-match label: $B_s = E(\delta(\hat{y} - y_s))$

where $E$ is an expectation across probe images. $\delta$ is the Dirac delta function, used here as an indicator (strictly, a Kronecker delta): it equals 1 when $\hat{y} = y_s$ and 0 otherwise.

- All IB experiments were run with both Euclidean and cosine distance as the distance function. Only results for Euclidean distance are reported, since the two distance functions gave qualitatively similar results.
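The evaluation procedure can be sketched as follows, under two assumptions. First, each triple has already been passed through the feature extractor $h$; toy vectors stand in for real features. Second, Euclidean distance is used, as in the reported IB results.

The helper names are hypothetical. Ties go to the shape match, because `min` returns the first minimal item.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def shape_bias(triples):
    """B_s: fraction of probes labelled with the shape-match label.

    Each triple is (h(probe), h(shape_match), h(colour_match)). The support set is
    S = {(x_s, "shape"), (x_c, "colour")}, and the probe receives the label of its
    nearest support item.
    """
    shape_choices = 0
    for probe, shape_match, colour_match in triples:
        support = [(shape_match, "shape"), (colour_match, "colour")]
        _, label = min(support, key=lambda item: euclidean(item[0], probe))
        shape_choices += label == "shape"  # delta(y_hat - y_s): 1 if the shape label won
    return shape_choices / len(triples)


triples = [
    ([1, 0], [1, 0.1], [0, 1]),    # probe is nearest to its shape match
    ([0, 1], [1, 0], [0, 0.9]),    # probe is nearest to its colour match
    ([2, 2], [2, 2.1], [5, 5]),    # shape match
    ([0, 0], [0.1, 0], [3, 3]),    # shape match
]
bias = shape_bias(triples)
```

Three of the four toy probes pick the shape match, so `bias` is 0.75.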

Experiment 1: Shape bias statistics in Inception Baseline:

- The authors found the shape bias of IB to be $B_s = 0.68$ on the CogPsyc dataset and $B_s = 0.97$ on the real-world dataset. Together, these results strongly suggest that IB trained on ImageNet has a stronger bias towards shape than colour. The shape bias is qualitatively similar across datasets but quantitatively different, because the datasets themselves differ.

Experiment 2: Shape bias statistics in Matching Network:

- They found that MNs have a shape bias of $B_s = 0.7$ on the CogPsyc dataset and $B_s = 1$ on the real-world dataset. Once again, these results suggest that MNs trained on ImageNet and seeded with Inception embeddings have a stronger bias towards shape than colour.

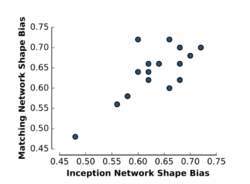

Experiment 3: Shape bias statistics between and across models:

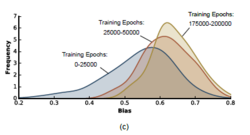

- The authors extended the shape bias analysis to calculate the shape bias in a population of IB models and in a population of MN models with different random initializations.

Dependence on the initialization of parameters:

Shape bias varied strongly with the initial values of the parameters. For the CogPsyc dataset, the average shape bias at the end of training was $B_s = 0.628$ with standard deviation $\sigma_{B_s} = 0.049$. For the real-world dataset, the average was $B_s = 0.958$ with $\sigma_{B_s} = 0.037$.

Dependence of shape bias on model performance:

For the CogPsyc dataset, the correlation between shape bias and classification accuracy was $\rho = 0.15$; for the real-world dataset, it was $\rho = -0.06$. These weak correlations are consistent with another observation: accuracy stayed nearly constant across initializations, while the shape bias varied considerably.
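The sketch below computes three population statistics across differently seeded models:

- the mean of $B_s$,
- the standard deviation of $B_s$,
- the Pearson correlation $\rho$ between shape bias and accuracy.

The per-seed values in the example are invented for illustration and are not the paper's data. Using the sample standard deviation ($n-1$ denominator) is an assumption, since the paper's convention is not stated here.

```python
import math
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def population_summary(biases, accuracies):
    """Summarize shape bias across a population of differently seeded models."""
    return {
        "mean_bias": statistics.mean(biases),
        "std_bias": statistics.stdev(biases),  # sample std (n - 1); an assumption
        "rho": pearson(biases, accuracies),
    }


# Hypothetical per-seed shape biases and accuracies, for illustration only.
summary = population_summary([0.55, 0.60, 0.65, 0.70], [0.80, 0.81, 0.80, 0.81])
```

In this toy population, accuracy barely moves (0.80 to 0.81) while $B_s$ spans 0.55 to 0.70. The correlation ($\rho \approx 0.45$) rests on tiny accuracy differences, which is why a small $\rho$ is unsurprising when accuracy is nearly constant.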

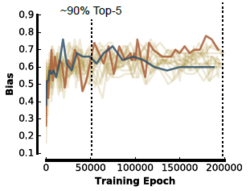

Emergence of shape bias during training:

The shape bias rose to a high value very early in training.

Variation of shape bias within models & across models:

Across different initializations, the shape bias of IB varied considerably during training, whereas the shape bias of MN did not fluctuate. The MN inherited the shape bias of the IB that seeded its embeddings, and this bias then remained roughly constant throughout training. Note that the penultimate-layer Inception features fed into the MN were not fine-tuned during MN training. The Inception features were therefore held fixed, so any change in bias during MN training could be attributed to the MN's own parameters.

Learnings, Inferences & Implications

- Both the Inception Baseline and the Matching Network exhibit a strong shape bias when trained on ImageNet. Researchers who use Inception and MN models can take this into account when applying pre-trained models to new datasets. Suppose a new dataset is known in advance to be best classified by color. Then they may want to avoid these pre-trained models, or explore methods to reduce or remove the strong shape bias.

- Shape bias varies widely with the initialization parameters. This is an important finding. It shows that the same architecture, with similar prediction accuracy, can display a wide range of shape biases simply because of different initializations. Researchers could explore ways of choosing the random initialization so that models have a lower shape bias without compromising accuracy.

- MNs inherit the shape bias of the Inception network that provides their input embeddings, and researchers and practitioners should be careful about this. In cascaded or pipelined heterogeneous architectures, downstream models tend to inherit the properties and biases of upstream models. This may be desirable or undesirable depending on the application, but it is important to be aware of it.

- The biases under consideration are a joint property of the architecture, the dataset, and the optimization procedure. Hence, to increase or decrease the effect of a particular bias, one or more of these factors must be changed.

- A high shape bias emerged in the early epochs and varied less in later epochs. This can be thought of as analogous to the biases humans develop in infancy, which are reinforced as they grow older.

- The phenomenon of synesthesia could also shed light on how human brains perform the abstract wiring needed to handle multi-modal input. An example is the visual and auditory abstraction discussed in V.S. Ramachandran's TED Talk "3 clues to understanding your brain". Human brains can factor out a "common denominator" via cross-modal synesthetic abstraction. This lets them associate meaning with abstract, amorphous inputs they have not encountered before, and thereby learn new concepts. For example, people associate words or linguistic forms with abstract shapes ("kiki" for sharp-edged figures, "bouba" for curved ones). For DNNs, a comparable "cognitive bias" could in principle be quantified using deconvnet visualizations (Zeiler & Fergus, 2014), by analyzing these abstractions and the role they play in biasing the outputs on test cases.

Modeling human word learning

The authors note that there have been several previous attempts [9][10][11][12] to model human word learning in cognitive science. A major shortcoming of these works is that none of the models are capable of one-shot word learning when presented with real-world images. Recognizing this, and given the success of MNs in this work, the authors propose MNs as a computational-level account of one-shot word learning. The paper also discusses how the shape bias increases dramatically early in training. This agrees with many psychological studies, in which older children show a stronger shape bias than younger children.

Conclusion, Future Work and Open questions

- Just as cognitive psychology exposes the shape bias observed in this experiment, we should try to uncover other biases as well using multiple approaches

- The study finds that Inception and Matching Nets have a bias similar to that observed in humans: they prefer to categorize objects according to shape rather than color.

- The magnitude of the shape bias varies greatly among architecturally identical, but differently seeded models despite nearly equivalent classification accuracies.

- Study the underlying mechanisms which cause biases such as shape bias in DNNs.

- Research into various methods of probing and creating probe data sets which can be used to test architectures for various biases.

- Exploration of a research field called Artificial Cognitive Psychology which focuses on probing how DNN architectures can be understood further using known behaviors of the human brain.

- In other domains, insights from the episodic memory literature may be useful for understanding episodic memory architectures, and techniques from the semantic cognition literature may be useful for understanding recent models of concept formation [13, 14].

In summary, the authors' study shows that networks performing one-shot learning from images display a shape bias similar to the one exhibited by humans. One interesting finding was that the shape bias differed substantially between networks with different initial conditions, even though their classification accuracies were nearly the same. Accuracy alone therefore says little about how a network solves the task. This raises an open question: is a network with a stronger shape bias more resistant to adversarial attacks than one with a weaker shape bias? The second interesting finding was that the shape bias emerges early in training, long before convergence. This parallels what is observed in humans during early childhood.

Before closing, we remark that it is not completely clear that the authors have demonstrated that an artificial neural network inherently comes equipped with a shape bias. Indeed, one may argue that the strongest conclusion the paper supports is narrower: a neural network pretrained on a certain dataset demonstrates a shape bias. The paper gives no straightforward answer as to whether this bias arises from the network architecture or from the training dataset. If the shape bias as defined emerges solely as a function of the training data, the concept of the shape bias of a neural network may well be meaningless. Absent further experiments and ablation analyses, the authors may be claiming much more than they have actually shown. In addition, a shape bias in the model is not necessarily undesirable.

References

[1] Ritter, S., Barrett, D. G. T., Santoro, A., and Botvinick, M. M. (2017). Cognitive Psychology for Deep Neural Networks: A Shape Bias Case Study.

[2] Vinyals, Oriol, Blundell, Charles, Lillicrap, Timothy, Kavukcuoglu, Koray, and Wierstra, Daan. Matching networks for one shot learning. arXiv preprint arXiv:1606.04080, 2016.

[3] Bloom, P. (2000). How children learn the meanings of words. The MIT Press.

[4] https://www.slideshare.net/KazukiFujikawa/matching-networks-for-one-shot-learning-71257100

[5] https://deepmind.com/blog/cognitive-psychology/

[6] https://hacktilldawn.com/2016/09/25/inception-modules-explained-and-implemented/

[7] Zeiler M.D., Fergus R. (2014) Visualizing and Understanding Convolutional Networks. In: Fleet D., Pajdla T., Schiele B., Tuytelaars T. (eds) Computer Vision – ECCV 2014. ECCV 2014. Lecture Notes in Computer Science, vol 8689. Springer, Cham

[8] https://en.wikipedia.org/wiki/Word_learning_biases#Shape_bias

[9] Colunga, Eliana and Smith, Linda B. From the lexicon to expectations about kinds: a role for associative learning. Psychological review, 112(2):347, 2005

[10] Xu, Fei and Tenenbaum, Joshua B. Word learning as bayesian inference. Psychological review, 114(2):245, 2007.

[11] Schilling, Savannah M, Sims, Clare E, and Colunga, Eliana. Taking development seriously: Modeling the interactions in the emergence of different word learning biases. In CogSci, 2012.

[12] Mayor, Julien and Plunkett, Kim. A neurocomputational account of taxonomic responding and fast mapping in early word learning. Psychological review, 117(1):1, 2010.

[13] https://deepmind.com/blog/cognitive-psychology/

[14] https://en.wikipedia.org/wiki/Episodic_memory

[15] Lake, Brenden M., Ruslan Salakhutdinov, and Joshua B. Tenenbaum. "Human-level concept learning through probabilistic program induction." Science 350.6266 (2015): 1332-1338.