Improving neural networks by preventing co-adaptation of feature detectors


Revision as of 20:01, 27 November 2020

Presented by

Kyle Jung, Dae Hyun Kim, Seokho Lim, Stan Lee

Introduction to Dropout + Dataset

MNIST

TIMIT

TIMIT is a standard benchmark dataset for speech recognition. Speech recognition systems typically use Hidden Markov Models (HMMs) to deal with temporal variability, together with an acoustic model that determines how well a frame of coefficients extracted from the acoustic input fits each state of each HMM. Recent results show that feedforward neural networks that map a short sequence of acoustic frames to a probability distribution over HMM states outperform traditional Gaussian mixture models on speech recognition datasets, including TIMIT.
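The networks described here take a short window of adjacent acoustic frames as input (21 frames at a 10 ms advance in the TIMIT experiments). A minimal pure-Python sketch of building those inputs, assuming each frame is already a list of acoustic coefficients; the function name `stack_frames` and the rule of repeating the first/last frame at utterance edges are illustrative assumptions, not details from the paper:

```python
def stack_frames(frames, context=10):
    """Return one input vector per frame, built from that frame and its
    `context` neighbours on each side (2 * context + 1 = 21 frames by default).

    Edge handling is an assumption: indices outside the utterance are clamped,
    so the first/last frame is repeated as padding.
    """
    n = len(frames)
    windows = []
    for t in range(n):
        window = []
        for offset in range(-context, context + 1):
            # Clamp the index so frames near the edges reuse the boundary frame.
            idx = min(max(t + offset, 0), n - 1)
            window.extend(frames[idx])
        windows.append(window)
    return windows
```

For 30 frames of 2 coefficients each, `stack_frames` returns 30 vectors of length 21 × 2 = 42, with the centre frame occupying positions 20 and 21 of each vector.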

A neural network was trained to classify frames of the TIMIT dataset, and its classification error rate was measured on the test set. The network has four fully-connected hidden layers of 4000 units each and 185 softmax output units, which are subsequently merged into 39 distinct classes. The input to the network is 21 adjacent frames with an advance of 10 ms per frame. The results show that dropping out 50% of the hidden units improves classification performance for a variety of network architectures, compared with the same networks trained without dropout. To obtain frame-level predictions, the class probabilities that the network outputs for each frame are passed to a decoder, which knows the transition probabilities between HMM states and runs the Viterbi algorithm to infer the single best sequence of HMM states. The error rate on the test set was 19.7% with dropout and 22.7% without dropout.
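The decoding step can be sketched with a textbook Viterbi search. This is a minimal pure-Python sketch, not the decoder used in the paper: it assumes the network's per-frame class probabilities are used directly as emission scores, and that the HMM's transition and initial-state probabilities are given as plain lists (the function name and argument layout are illustrative).

```python
import math

def viterbi(frame_probs, transition, initial):
    """Most likely state sequence given per-frame state probabilities.

    frame_probs[t][s]: network output for state s at frame t, used directly
        as the emission score (an assumption of this sketch)
    transition[i][j]: probability of moving from state i to state j
    initial[s]: probability of starting in state s
    Scores are kept in log space to avoid underflow on long utterances.
    """
    def log(p):
        return math.log(p) if p > 0 else float("-inf")

    n_states = len(initial)
    # best[s]: log score of the best path that ends in state s at the current frame
    best = [log(initial[s]) + log(frame_probs[0][s]) for s in range(n_states)]
    backpointers = []
    for t in range(1, len(frame_probs)):
        new_best, pointers = [], []
        for j in range(n_states):
            # Choose the predecessor state i that maximises the score of entering j.
            i_best = max(range(n_states), key=lambda i: best[i] + log(transition[i][j]))
            new_best.append(best[i_best] + log(transition[i_best][j]) + log(frame_probs[t][j]))
            pointers.append(i_best)
        best = new_best
        backpointers.append(pointers)
    # Trace back from the highest-scoring final state.
    state = max(range(n_states), key=lambda s: best[s])
    path = [state]
    for pointers in reversed(backpointers):
        state = pointers[state]
        path.append(state)
    return path[::-1]
```

With "sticky" transitions such as `[[0.9, 0.1], [0.1, 0.9]]`, an isolated frame whose most probable state disagrees only weakly with its neighbours is smoothed away, which is why decoding a whole sequence can beat taking the per-frame argmax.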

Reuters

CNN

CIFAR-10

Models for CIFAR-10:

CIFAR-10 is a popular object recognition benchmark of 32 × 32 color images collected from the web. It contains 10 classes, and each image was labeled with the noun that was used to search for it. Each class has 5000 training images and 1000 test images, and each image shows a single dominant object of the labeled class.

They implemented two models for CIFAR-10, one with dropout and one without. Because dropout imposes strong regularization on the network, the model with dropout can afford more parameters: it is the model without dropout (described next) plus a fourth weight layer that takes its input from the last pooling layer. This fourth layer is locally connected but not convolutional, contains 16 banks of 3 × 3 filters, and uses 50% dropout. The softmax layer then takes its input from this fourth weight layer.
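Dropout itself can be sketched in pure Python. For simplicity the sketch uses a fully connected layer rather than the locally connected layer described above, and the helper names are hypothetical. During training each unit is omitted with probability 0.5; at test time the paper uses a "mean network" that keeps every unit but halves its outgoing weights to compensate for twice as many units being active.

```python
import random

def dense(inputs, weights, biases):
    """Fully connected layer: weights[j][i] connects input i to output j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

def dropout_train(activations, p_drop=0.5, rng=random):
    """Training-time dropout: each unit is omitted (set to 0) with probability p_drop,
    independently for every training case."""
    return [0.0 if rng.random() < p_drop else a for a in activations]

def mean_network_weights(weights, p_drop=0.5):
    """Test time: keep every unit but scale its outgoing weights by (1 - p_drop),
    which halves them when p_drop = 0.5."""
    return [[w * (1.0 - p_drop) for w in row] for row in weights]
```

Because `dense` is linear in its inputs, averaging `dense(dropout_train(h), W, b)` over many random masks converges to `dense(h, mean_network_weights(W), b)`; once nonlinearities follow, the mean network is an approximation to averaging over the many dropout networks rather than an exact equivalent.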

The model without dropout is a CNN with three convolutional layers, each followed by a pooling layer. The pooling layer after the first convolutional layer performs max-pooling, and the remaining pooling layers perform average-pooling. The first and second pooling layers are each followed by a response normalization layer with parameters N = 9, α = 0.001 and β = 0.75.
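The text gives only the normalization parameters, so the exact formula below is an assumption: a cross-map form (as in cuda-convnet) in which each activity is divided by (1 + (α / N) × sum of squared activities over N adjacent kernel maps at the same position) raised to the power β. A minimal pure-Python sketch for a single spatial position, with an illustrative function name:

```python
def response_normalize(maps, n=9, alpha=0.001, beta=0.75):
    """Cross-map response normalization at one spatial position (x, y).

    maps[i] is the activity of kernel map i at that position. Each value is
    divided by (1 + alpha / n * sum of squares over up to n adjacent maps) ** beta.
    The formula is an assumed cuda-convnet-style form; only n = 9,
    alpha = 0.001 and beta = 0.75 come from the text.
    """
    k = len(maps)
    half = n // 2
    out = []
    for i, a in enumerate(maps):
        # Window of adjacent maps, truncated at the first and last map.
        lo, hi = max(0, i - half), min(k - 1, i + half)
        sq_sum = sum(maps[j] ** 2 for j in range(lo, hi + 1))
        out.append(a / (1.0 + alpha / n * sq_sum) ** beta)
    return out
```

Strong activity in neighbouring maps increases the denominator, so each unit is damped in proportion to the activity around it, a form of lateral inhibition between kernel maps.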

The uppermost pooling layer is connected to a ten-unit softmax layer, which outputs a probability distribution over the class labels. All convolutional layers have 64 filter banks with a filter size of 5 × 5.
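The ten-unit softmax output layer can be sketched in pure Python; the function name is illustrative and the scores in the usage below are made-up inputs, not values from the paper.

```python
import math

def softmax(logits):
    """Turn the total inputs of the output units into a probability distribution.

    Subtracting the maximum before exponentiating avoids overflow without
    changing the result, because the common factor cancels in the ratio.
    """
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]
```

For example, `softmax([0.5, 2.0, -1.0, 0.0, 0.1, 0.3, 1.2, -0.4, 0.9, 0.2])` returns ten non-negative values that sum to 1, and the predicted class is the index of the largest one (here class 1).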

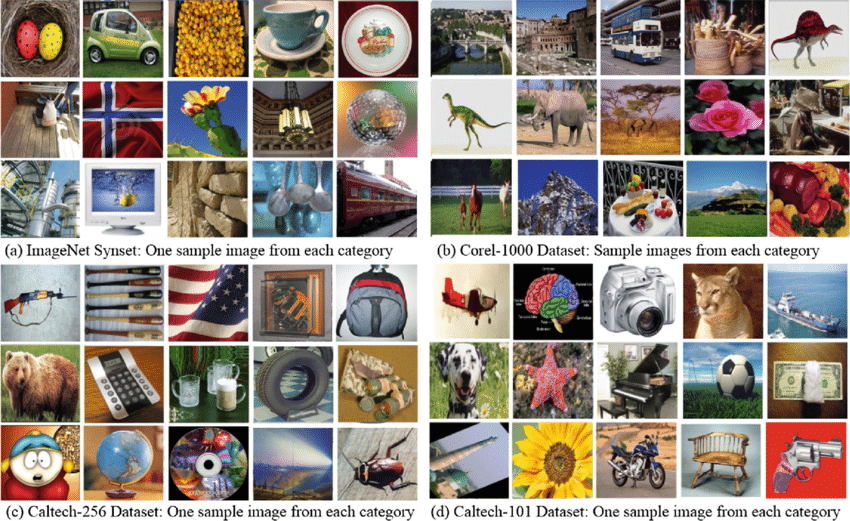

ImageNet

ImageNet is a dataset of millions of high-resolution labeled images in thousands of categories, which makes achieving high accuracy on it very challenging.

At the time, the best published result on this dataset was an error rate of 45.7%, from High-dimensional signature compression for large-scale image classification (J. Sanchez, F. Perronnin, CVPR 2011). The authors of this paper achieved a comparable error rate of 48.6% using a single neural network with five convolutional hidden layers interleaved with max-pooling layers, followed by two globally connected layers and a final 1000-way softmax layer:

c1 - mp - c2 - mp - c3 - mp - c4 - mp - c5 - mp - G1 - G2 - softmax

Adding 50% dropout in the sixth hidden layer (G1) reduced the error rate to 42.4%. Designing the networks for the speech recognition dataset (TIMIT) and the object recognition datasets (CIFAR-10 and ImageNet) required making a large number of architectural decisions.

These decisions were made using a separate held-out validation set on which a large number of different architectures were evaluated. The architecture that performed best with dropout on the validation set was then used to assess the performance of dropout on the real test set.
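The selection procedure can be sketched in pure Python. Both helpers are hypothetical: `holdout_split` carves a validation set out of the training data, and `select_architecture` relies on a caller-supplied `train_and_validate` function (assumed here, not part of the paper) that trains one candidate with dropout and returns its validation error.

```python
import random

def holdout_split(examples, val_fraction=0.1, seed=0):
    """Shuffle a copy of the training examples and hold out a validation split."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]

def select_architecture(candidates, train_and_validate, train, val):
    """Pick the candidate with the lowest validation error.

    train_and_validate(arch, train, val) is a hypothetical caller-supplied
    function that trains `arch` with dropout on `train` and returns its error
    rate on `val`. The test set is never used here; only the chosen
    architecture is evaluated on it afterwards.
    """
    errors = {arch: train_and_validate(arch, train, val) for arch in candidates}
    return min(errors, key=errors.get), errors
```

Keeping the test set out of every architectural decision is what makes the final test-set comparison between dropout and no dropout an unbiased one.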

ImageNet consists of millions of labeled images in thousands of categories, collected from the web and labeled by human labelers using Amazon's Mechanical Turk (MTurk) crowd-sourcing tool. ImageNet and CIFAR-10 are similar in kind, but ImageNet is about 20 times larger (1.3M vs 60k images). The dataset used has about 1.3 million training images, 50,000 validation images and 150,000 test images.

Achieving perfect accuracy on this dataset is very difficult, even for humans, because ImageNet images often contain multiple instances of ImageNet objects and there are a large number of object classes. The experiments used images resized to 256 × 256 pixels.

Models for ImageNet:

Model for ImageNet with dropout (the model without dropout used a similar approach but suffered from serious overfitting): they used a convolutional neural network trained on 224 × 224 patches randomly extracted from the 256 × 256 images. Training on random patches acts as a form of data augmentation, which reduces the network's capacity to overfit the training data and helps it generalize.
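Random patch extraction can be sketched in pure Python, assuming each image is stored as a list of pixel rows (colour channels omitted for brevity). The function name is illustrative, and only training-time cropping is shown.

```python
import random

def random_crop(image, crop=224, rng=random):
    """Extract a random crop x crop patch from an image stored as a list of rows
    (for example 256 x 256).

    Each call picks a new top-left corner uniformly at random, so over many
    epochs the network sees many slightly shifted versions of each image.
    """
    height, width = len(image), len(image[0])
    # randint is inclusive, so top + crop <= height and left + crop <= width.
    top = rng.randint(0, height - crop)
    left = rng.randint(0, width - crop)
    return [row[left:left + crop] for row in image[top:top + crop]]
```

A 256 × 256 image has 33 × 33 = 1089 possible 224 × 224 patch positions, which is what lets this cropping act as cheap data augmentation.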