Do Vision Transformers See Like CNNs?

Presented by

Zeng Mingde, Lin Xiaoyu, Fan Joshua, Rao Chen Min

Introduction

Convolutional neural networks (CNNs) have been the industry standard for many problems, such as image classification, and they are among the networks we learned in this course. However, there is a different model called the Vision Transformer (ViT), and this paper reports that it yields comparable and sometimes better results than traditional CNNs. We follow the paper and examine the internals of the vision transformer to learn how it solves its tasks, compare and contrast the results of the two architectures, and finally consider the future potential of vision transformers.

Intro on transformers/ResNet

This section is not part of the paper. We include it because the models used in the paper have not been taught in class.

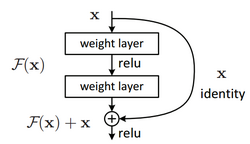

We first introduce the ResNet model, which the paper uses as its CNN baseline. Plain CNNs are often hard to train when they are very deep, largely due to vanishing gradients.

The ResNet block introduced the concept of a skip connection (the curved line in the above figure) on every other convolution layer, giving information a way to be passed down from shallow to deep layers without having to go through any sort of transformation. As the information passed down through these skip connections was unaltered, this alleviated the vanishing gradient problem, allowing much deeper CNNs to be trained and thus improving the performance and usefulness of the CNN family.
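The skip connection described above can be sketched in a few lines. This is a minimal illustration, not the architecture used in the paper: dense layers stand in for the convolutions, and the names `residual_block`, `w1`, `w2` and all sizes are assumptions made for the example.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """Compute relu(F(x) + x), where F is two linear maps with a ReLU between them.

    Dense layers are used here in place of the convolutions of a real ResNet block.
    """
    out = relu(x @ w1)       # first transformation
    out = out @ w2           # second transformation
    return relu(out + x)     # skip connection: the unaltered input is added back

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))            # 4 examples, 8 features (illustrative sizes)
w1 = rng.normal(size=(8, 8)) * 0.1
w2 = rng.normal(size=(8, 8)) * 0.1
y = residual_block(x, w1, w2)

# With all-zero weights F(x) = 0, so the block reduces to relu(x):
# the input still reaches the output through the skip connection.
zeros = np.zeros((8, 8))
passthrough = residual_block(x, zeros, zeros)
```

The zero-weight case shows why the shortcut helps: even when the transformation contributes nothing, information (and gradient) still flows through the addition.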

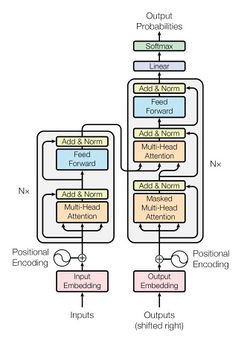

Before we talk about the vision transformer, we give a brief overview of the “original” transformer.

Proposed as a language model, the transformer solved the main problem that most language models (RNNs, LSTMs, …) were experiencing at the time by introducing the concept of self-attention.

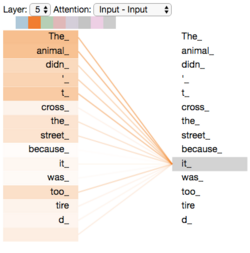

“Regular” attention quantifies the relevance of a word (the query) to other words (the keys), essentially telling the model how much attention the query should give to each of the keys. Self-attention is a specific application of attention in which the queries and keys come from the same sentence: each word is taken in turn as a query, and the words of the sentence serve as keys. Self-attention therefore tells the model how much attention each word should give to the other words in the sentence.
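A single self-attention head can be sketched as follows, using the scaled dot-product form from the original transformer paper. The projection matrices `w_q`, `w_k`, `w_v` are random here purely for illustration (in a trained model they are learned), and the sizes are assumptions.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence of tokens.

    x has shape (num_tokens, d_model); queries, keys and values all come from x.
    Returns the attended outputs and the (num_tokens, num_tokens) weight matrix.
    """
    q = x @ w_q
    k = x @ w_k
    v = x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])   # relevance of every key to every query
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8))                   # a "sentence" of 5 tokens, d_model = 8
w_q = rng.normal(size=(8, 4))
w_k = rng.normal(size=(8, 4))
w_v = rng.normal(size=(8, 4))
out, weights = self_attention(x, w_q, w_k, w_v)
```

Row `i` of `weights` is how much attention token `i` gives to every token in the sequence, including itself.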

However, attention itself doesn't tell the model what order the keys come in, or where the query sits relative to the keys. Combined with positional encoding (assigning words a value depending on where they appeared), self-attention gave the transformer the ability to see how a word is related to the rest of the sentence while taking into account the structure of the sentence.
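As one concrete example of a positional encoding, the sketch below implements the fixed sinusoidal scheme from the original transformer paper. This is only one option (ViT itself uses learned position embeddings), and the function name and sizes are illustrative assumptions.

```python
import numpy as np

def sinusoidal_positions(num_positions, d_model):
    """Fixed sinusoidal encoding: sin on even dimensions, cos on odd dimensions."""
    if d_model % 2 != 0:
        raise ValueError("d_model must be even for this sketch")
    positions = np.arange(num_positions)[:, None]          # shape (n, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]          # shape (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    encoding = np.zeros((num_positions, d_model))
    encoding[:, 0::2] = np.sin(angles)
    encoding[:, 1::2] = np.cos(angles)
    return encoding

pe = sinusoidal_positions(num_positions=10, d_model=8)
# The encoding is added to the token embeddings, so identical words at
# different positions give the attention block different inputs.
```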

Previous language models had to loop over the words of the input sentence one at a time in order to learn how each word relates to the rest of the sentence. The transformer's multi-head attention blocks (several attention heads computed in parallel and then combined), used for both attention and self-attention, removed this sequential loop, which greatly improved training speed as well as performance on language tasks.

The main takeaway, for the purpose of the paper, is that these attention and self-attention blocks allowed the transformer to view the entire sentence at once while maintaining information on relationships between words. Other than these attention blocks, the rest of the transformer is composed of simple blocks, such as feed-forward blocks, and knowledge of the complete structure is not necessary for this paper.

Now that we have introduced transformers, we can talk about the main model, the vision transformer (ViT). The main change is the input. Each image is divided into a grid of patches, for example 7x7 (as happens when a 224x224 image is cut into 32x32-pixel patches). Each patch is flattened and linearly projected onto a vector of some potentially smaller dimension, resulting in 49 tokens. Each token is analogous to a word, and the group of 49 tokens is analogous to a sentence. The set of tokens is then passed to the model, and the transformer is used essentially unchanged.
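The tokenization step can be sketched as below. The image size, patch size, and embedding width are assumptions chosen to reproduce the 7x7 = 49 token example, and the random `projection` stands in for a learned linear layer. A full ViT also prepends a class token and adds position embeddings, which are omitted here.

```python
import numpy as np

def image_to_tokens(image, patch_size, projection):
    """Split an (H, W, C) image into non-overlapping patches and project each one.

    Returns an array of shape (num_patches, embed_dim).
    """
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image size must be divisible by patch_size")
    grid_h, grid_w = h // patch_size, w // patch_size
    # (grid_h, p, grid_w, p, C) -> (grid_h, grid_w, p, p, C)
    patches = image.reshape(grid_h, patch_size, grid_w, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)
    flat = patches.reshape(grid_h * grid_w, patch_size * patch_size * c)
    return flat @ projection

rng = np.random.default_rng(0)
image = rng.normal(size=(224, 224, 3))               # illustrative image
projection = rng.normal(size=(32 * 32 * 3, 64))      # hypothetical projection to 64 dims
tokens = image_to_tokens(image, patch_size=32, projection=projection)
# tokens has one row per patch: 7 * 7 = 49 rows of width 64
```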

Background and experimental setup

In this paper, the authors focus on comparing CNNs to vision transformers (ViTs). To do so, they compare the CNN models ResNet50x1 and ResNet152x2 to the ViT models ViT-B/32, ViT-B/16, ViT-L/16, and ViT-H/14. Unless otherwise specified, the models are trained on JFT-300M, a dataset containing 300 million images.

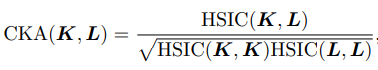

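To compare layers between the models, the authors use CKA (Centered Kernel Alignment), which is a method of calculating the similarity between the representations of two layers:

[math]\displaystyle{ \mathrm{CKA}(K, L) = \frac{\mathrm{HSIC}(K, L)}{\sqrt{\mathrm{HSIC}(K, K)\,\mathrm{HSIC}(L, L)}} }[/math]

Where: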

- HSIC is the Hilbert-Schmidt independence criterion

- K = [math]\displaystyle{ XX^T }[/math] and L = [math]\displaystyle{ YY^T }[/math]

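- X, Y are the representations (activation matrices) of the two layers to be compared, of size [math]\displaystyle{ m \times p_1 }[/math] and [math]\displaystyle{ m \times p_2 }[/math] respectively, where m is the number of observations (the same inputs for both layers) and [math]\displaystyle{ p_1, p_2 }[/math] are the numbers of neurons in each layer.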

Because K and L are Gram matrices of the representations, CKA is invariant to orthogonal transformations of the representations, while the normalization makes the measure invariant to scaling.
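The definition above can be sketched directly with linear kernels. This is a minimal full-batch version using the simple (biased) HSIC estimator; the paper computes CKA over minibatches with a different estimator, so treat this as an illustration of the formula rather than the authors' implementation. All function names and sizes are assumptions.

```python
import numpy as np

def centered_gram(x):
    """Return H K H for K = X X^T, where H = I - (1/m) 1 1^T centers the Gram matrix."""
    m = x.shape[0]
    gram = x @ x.T
    centering = np.eye(m) - np.ones((m, m)) / m
    return centering @ gram @ centering

def hsic(k_centered, l_centered):
    """Biased HSIC estimate from two already-centered Gram matrices."""
    m = k_centered.shape[0]
    return np.sum(k_centered * l_centered) / (m - 1) ** 2

def linear_cka(x, y):
    """CKA between representations x (m x p1) and y (m x p2) of the same m inputs."""
    k, l = centered_gram(x), centered_gram(y)
    return hsic(k, l) / np.sqrt(hsic(k, k) * hsic(l, l))

rng = np.random.default_rng(0)
x = rng.normal(size=(50, 10))                        # 50 observations, 10 neurons
q, _ = np.linalg.qr(rng.normal(size=(10, 10)))       # random orthogonal matrix
unrelated = rng.normal(size=(50, 20))                # a layer with 20 neurons

rotated = linear_cka(x, x @ q)         # orthogonal transformation of x
scaled = linear_cka(x, 3.0 * x)        # rescaled copy of x
different = linear_cka(x, unrelated)   # independent random representation
```

Here `rotated` and `scaled` come out equal to 1 up to floating-point error, illustrating the two invariances, while `different` is noticeably lower.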

Representations in ViT and CNNs

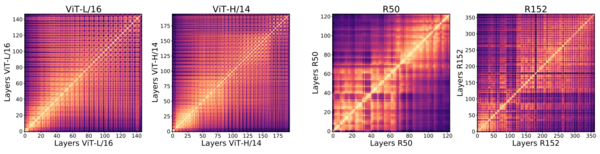

The authors first compared how information is represented in ViTs and CNNs, and how these representations differ functionally. To do so, representations are taken from both output and intermediate layers (normalization layers, hidden activations) and compared using CKA similarity, displayed as a heatmap.

They began by comparing layers within the same model to each other.

From the figure, there are two things to note:

- ViTs have a much more uniform similarity structure: low and high layers in ViT show much more similarity to each other than those in ResNet.

- In ResNet, shallow layers are similar to other shallow layers, while deep layers are similar to other deep layers.

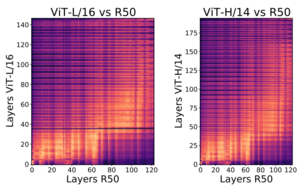

The authors also compared the layers in ViT models to those found in the ResNet model.

From the heatmap, it appears that despite the difference in model architectures, there is a noticeable similarity between some representations in the two models. In fact, we can see that ResNet needs more layers to learn representations similar to those in ViT. In particular, the lower half of 60 ResNet layers is similar to the lower quarter of the ViT layers, and the top half of the ResNet layers is similar to the next third of the ViT layers.

Difference in local/global information

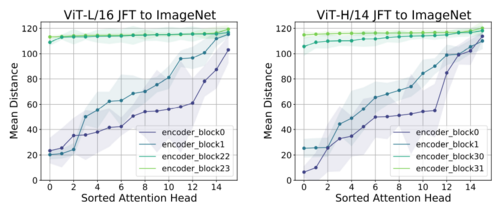

One finding the authors made that could explain the above phenomenon is the difference in the “field of view” of the layers in both models.

Due to the convolutional nature of the CNN architecture, shallow layers of the ResNet models are only able to access local information (a small area of the image), and thus learn local representations. As the image is convolved and shrunk through the layers, larger portions of the image enter each layer's field of view, eventually giving the model the ability to learn representations using global data.
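The growth of a CNN's field of view can be made concrete with the standard receptive-field recurrence for stacked convolutions. The layer list below is a made-up toy stack, not the actual ResNet configuration, and the function name is an assumption.

```python
def receptive_fields(layers):
    """Receptive field (in input pixels) after each layer of a conv stack.

    layers is a list of (kernel_size, stride) pairs. The field grows by
    (kernel_size - 1) * jump, where jump is the product of all earlier strides.
    """
    field, jump = 1, 1
    result = []
    for kernel_size, stride in layers:
        field += (kernel_size - 1) * jump
        jump *= stride
        result.append(field)
    return result

# Hypothetical stack of 3x3 convolutions, two of them with stride 2
toy_stack = [(3, 1), (3, 2), (3, 1), (3, 2), (3, 1)]
print(receptive_fields(toy_stack))  # [3, 5, 9, 13, 21]
```

Even after five layers, each unit in this toy stack sees only a 21x21 window, whereas a self-attention layer can weight every patch in the image from the first block onward.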

On the other hand, the ViT model is able to utilize global information from the start, and thus learn both local and global representations in the shallow layers. Note that the deeper layers eventually all consist of global representations.

Due to the difference in the field of view of the models' layers, the representations themselves end up learning different things. To check this, the authors used CKA similarity to compare representations in shallow layers of ResNet models to the representations in the first block of the ViT models. Unsurprisingly, the authors discovered that as the mean attention distance of the ViT representations grew (larger distance = more global), the CKA similarity decreased. This led them to conclude that the global representations of the ViT model learned different features from those learned in the ResNet model.
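One plausible way to compute a mean attention distance is to weight the pixel distance between patch centers by the attention weights and average over queries. The sketch below assumes this definition; the function name, grid size, and patch size are illustrative and not taken from the paper.

```python
import numpy as np

def mean_attention_distance(weights, grid_size, patch_size):
    """Attention-weighted average distance (in pixels) between query and key patches.

    weights has shape (n, n) with n = grid_size**2 and rows summing to 1.
    """
    rows, cols = np.divmod(np.arange(grid_size * grid_size), grid_size)
    coords = np.stack([rows, cols], axis=1) * patch_size     # patch positions in pixels
    diffs = coords[:, None, :] - coords[None, :, :]
    distances = np.sqrt((diffs ** 2).sum(axis=-1))           # (n, n) pairwise distances
    return float((weights * distances).sum(axis=1).mean())

n = 7 * 7
local_head = np.eye(n)                   # every query attends only to its own patch
global_head = np.full((n, n), 1.0 / n)   # every query attends uniformly to all patches

local_distance = mean_attention_distance(local_head, grid_size=7, patch_size=32)
global_distance = mean_attention_distance(global_head, grid_size=7, patch_size=32)
```

A head like `local_head` has distance 0 and behaves like a very small convolution, while `global_head` has a large distance, matching the "larger distance = more global" reading above.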

Spatial information

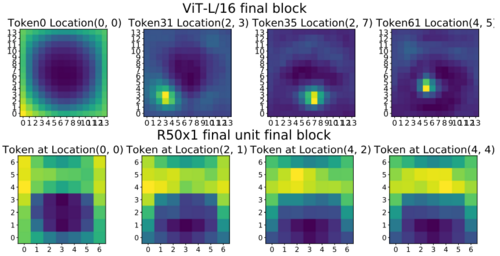

Another result of interest is the ViT’s ability to maintain information on spatial localization in deeper layers. To test this, the authors checked how each model kept positional information by plotting the CKA similarity on each patch/token between the final block of the model and the input images as a heatmap.

Due to a CNN’s architecture, particularly the convolving and pooling aspects, “image resolution” is lost with each layer. This results in the loss of local information as the data is propagated through the model.

On the other hand, ViT models tend to have good localization at deep layers, especially for the interior patches. This is because the only form of dimension reduction occurs when tokenizing the data. For example, when the image is divided into a 7x7 grid of patches and tokenized, some information is lost when projecting each patch into its tokenized form. Afterward, the number of tokens stays the same through the model, and as such no further spatial resolution is lost.

Impact of Scale of data on learning

To test the impact of dataset size on learning, the authors trained models on varying portions of the data and used CKA similarity to compare the representations learned from less data to the representations learned from all of the data.

They found that with a small portion of the data, shallow layers of the ViT were able to learn representations similar to those of the model trained on all of the data. On the other hand, deeper layers needed a much larger portion of the data to reach representations similar to those of the “complete” model, indicating that the ViT, in particular its deeper layers, relies heavily on a large amount of data to perform well.

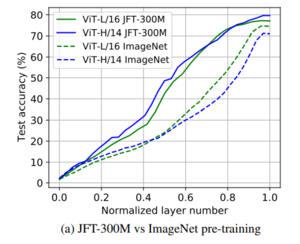

To further show the impact of the quantity of data on the ViT's ability to perform, the authors trained identical models on the JFT-300M dataset (300 million images) and the ImageNet 2012 dataset (1.2 million images). The authors then plotted the accuracy of model predictions on the ImageNet 2012 validation set as a function of the normalized layer number (a normalized layer number below 1 means that features from an intermediate layer are used to classify the validation images).
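Classifying from intermediate-layer features is commonly done with a linear probe: the network is frozen and only a linear classifier is fit on the extracted features. The sketch below assumes a closed-form ridge-regression probe on synthetic stand-in features. It is not the authors' training procedure, and all names, sizes, and data are hypothetical.

```python
import numpy as np

def fit_linear_probe(features, labels, num_classes, ridge=1e-3):
    """Closed-form ridge regression from features to one-hot labels."""
    targets = np.eye(num_classes)[labels]
    dim = features.shape[1]
    gram = features.T @ features + ridge * np.eye(dim)
    return np.linalg.solve(gram, features.T @ targets)

def probe_accuracy(weights, features, labels):
    predictions = (features @ weights).argmax(axis=1)
    return float((predictions == labels).mean())

# Synthetic stand-in for features extracted from one frozen layer
rng = np.random.default_rng(0)
labels = rng.integers(0, 2, size=200)
features = rng.normal(scale=0.5, size=(200, 5))
features[:, 0] += np.where(labels == 0, 3.0, -3.0)   # make the two classes separable

probe = fit_linear_probe(features[:150], labels[:150], num_classes=2)
accuracy = probe_accuracy(probe, features[150:], labels[150:])
```

Repeating this for the features of every layer and plotting `accuracy` against the normalized layer number would give a curve of the kind described above.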

They found that the models trained on 300 million images significantly outperformed the models trained on 1.2 million images at deeper layers.

Conclusion

Throughout the paper, transformer architectures are shown to be capable of performance similar to CNNs, which is remarkable and motivates comparing these models to CNNs. Using CKA similarity, the authors clearly show the differences between the features and internal structures of ViTs and CNNs (for example, a CNN has less similarity between the representations in its shallow and deep layers than a ViT). The authors conclude that the self-attention blocks play a vital part in the appearance of global features and strong representation propagation in the ViT models. They also show that ViTs preserve spatial information well. Finally, they investigate the effect of the scale of data on learning, concluding that larger ViT models develop significantly stronger intermediate representations when trained on larger datasets. These results are relevant to understanding MLP architectures in vision, and useful for comparing ViTs and CNNs.

References

- M. Raghu, T. Unterthiner, S. Kornblith, C. Zhang, and A. Dosovitskiy. Do vision transformers see like convolutional neural networks? arXiv preprint arXiv:2108.08810, 2021.

- A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- M. Naseer, K. Ranasinghe, S. Khan, M. Hayat, F. S. Khan, and M.-H. Yang. Intriguing properties of vision transformers, 2021.

- N. Parmar, A. Vaswani, J. Uszkoreit, L. Kaiser, N. Shazeer, A. Ku, and D. Tran. Image transformer. In International Conference on Machine Learning, pages 4055–4064. PMLR, 2018.

- T. Nguyen, M. Raghu, and S. Kornblith. Do wide and deep networks learn the same things? uncovering how neural network representations vary with width and depth. arXiv preprint arXiv:2010.15327, 2020.

- P. Ramachandran, N. Parmar, A. Vaswani, I. Bello, A. Levskaya, and J. Shlens. Stand-alone self-attention in vision models. arXiv preprint arXiv:1906.05909, 2019.

- A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin. Attention is all you need. arXiv preprint arXiv:1706.03762, 2017.