Deep Learning for Cardiologist-level Myocardial Infarction Detection in Electrocardiograms

Presented by

Zihui (Betty) Qin, Wenqi (Maggie) Zhao, Muyuan Yang, Amartya (Marty) Mukherjee

Introduction

This paper presents an approach to detecting heart disease from ECG signals by fine-tuning ConvNetQuake, a deep neural network. For context, ConvNetQuake is a convolutional neural network that Perol, Gharbi, and Denolle [4] used for earthquake detection and location from a single waveform. A deep learning approach was chosen because such models can be trained using multiple GPUs and terabyte-sized datasets, which in turn yields a model that is robust against noise. The purpose of the paper is threefold: to provide a detailed analysis of the contribution of each ECG lead to identifying heart disease, to show that using multiple channels in ConvNetQuake enhances prediction accuracy, and to show that feature engineering is not necessary for any of the training, validation, or testing processes. In this area, the combination of data fusion and machine learning techniques shows great promise for healthcare innovation, and the analyses in this paper help further this realization. The benefits of translating knowledge between deep learning and its real-world applications in health are also illustrated.

Previous Work and Motivation

The database used in previous works is the Physikalisch-Technische Bundesanstalt (PTB) database, which consists of ECG records. Previous papers used techniques such as CNN, SVM, K-nearest neighbors, naïve Bayes classification, and ANN. The paper observes two shortcomings in this earlier work. The first is that most papers apply feature selection to the raw ECG data before training the model. Safdarian, Dabanloo, and Attarodi [2] used various techniques, such as ANN, K-nearest neighbors, and naïve Bayes, but extracted two features, the T-wave integral and the total integral, to help detect and localize heart disease. Sharma and Sunkaria [3] used SVM and K-nearest neighbors as their classifiers but extracted various features using stationary wavelet transforms to decompose the ECG signal into sub-bands. The second shortcoming is that papers that do not use feature selection pick ECG leads for classification arbitrarily, without giving a rationale. For example, Liu et al. [1] used a deep CNN that takes 3 seconds of ECG signal from lead II at a time as input, but did not explain the decision to use lead II rather than the other leads.

The issue with feature selection is that it can be time-consuming and impractical with large volumes of data. The issue with the arbitrary selection of leads is that it offers no insight into why a lead was chosen or into each lead's contribution to identifying heart disease. Thus, this paper addresses these two issues by implementing a deep learning model that does not rely on feature selection of ECG data and by quantifying the contribution of each ECG and Frank lead to identifying heart disease.

Model Architecture

The dataset used to train, validate, and test the neural network models consists of 549 ECG records taken from 290 unique patients. The records have a mean length of over 100 seconds.

The deep neural network was created by modifying the ConvNetQuake model in two ways. The first modification adds 1D batch normalization layers, which help to combat overfitting. The second introduces label smoothing, which discourages the model from making overconfident predictions by relaxing the confidence of the training labels. The authors' experiments demonstrated that both of these modifications helped to increase model accuracy.
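As a minimal sketch of label smoothing for binary labels, the hard targets 0 and 1 can be pulled slightly toward 0.5. The function name `smooth_labels` and the strength `epsilon = 0.1` are illustrative assumptions; the amount of smoothing the authors applied is not stated here.

```python
def smooth_labels(labels, epsilon=0.1):
    """Relax hard binary labels: 1 -> 1 - epsilon/2 and 0 -> epsilon/2.

    epsilon is a hypothetical smoothing strength, not a value from the paper.
    """
    if not 0.0 <= epsilon < 1.0:
        raise ValueError("epsilon must be in [0, 1)")
    return [y * (1.0 - epsilon) + 0.5 * epsilon for y in labels]


hard_labels = [0, 1, 1, 0]  # toy labels: 1 = myocardial infarction, 0 = healthy
soft_labels = smooth_labels(hard_labels, epsilon=0.1)
print(soft_labels)
```

Training against the softened targets penalizes predictions that saturate at exactly 0 or 1, which is the sense in which the technique discourages overconfidence.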

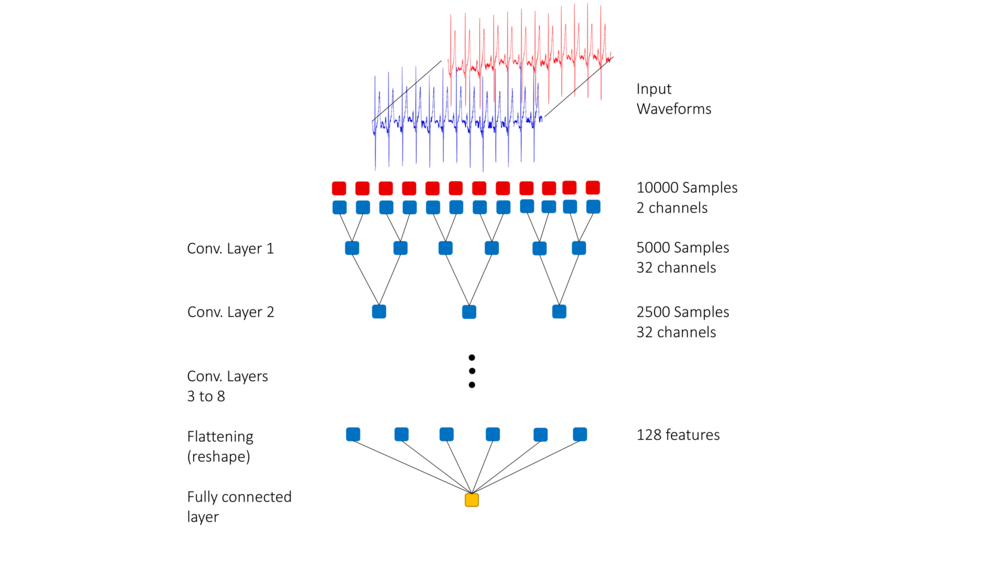

During the training stage, a 10-second-long two-channel input was fed into the neural network. To ensure that the two channels were weighted equally, both channels were normalized. In addition, time invariance was incorporated by selecting the 10-second segment at a random position within the entire signal.
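The preprocessing described above can be sketched as follows. This is an illustration under assumptions: the normalization is taken to be a per-channel z-score applied after cropping, and the helper names `normalize` and `random_window` are hypothetical; the text does not specify which normalization the authors used.

```python
import random
import statistics


def normalize(channel):
    """Scale one channel to zero mean and unit standard deviation (assumed z-score)."""
    mean = statistics.fmean(channel)
    std = statistics.pstdev(channel)
    if std == 0:
        return [0.0 for _ in channel]
    return [(x - mean) / std for x in channel]


def random_window(record, window_len, rng=random):
    """Cut the same randomly placed window out of every channel, then normalize it."""
    n = len(record[0])
    if window_len > n:
        raise ValueError("record is shorter than the requested window")
    start = rng.randrange(n - window_len + 1)
    return [normalize(ch[start:start + window_len]) for ch in record]


# Toy two-channel record with 100 samples per channel (not real ECG data).
record = [
    [float(i % 7) for i in range(100)],
    [float((3 * i) % 11) for i in range(100)],
]
segment = random_window(record, window_len=10, rng=random.Random(0))
print(len(segment), len(segment[0]))
```

Drawing a new random start position every time a record is sampled exposes the network to differently aligned segments of the same signal.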

The input layer takes a 10-second-long ECG signal. There are 8 hidden layers in this model, each of which consists of a 1D convolution layer with the ReLU activation function followed by a batch normalization layer. The output layer is a one-dimensional layer that uses the sigmoid activation function.
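To give a feel for how 8 stacked 1D convolutions shrink a 10-second input, the sketch below tracks the length of the time axis layer by layer. The kernel size, stride, padding, and sampling rate are not given in this summary, so the values used here (kernel 3, stride 2, padding 1, 1000 Hz) are assumptions chosen for illustration only.

```python
def conv1d_output_length(length, kernel_size=3, stride=2, padding=1):
    """Length of the time axis after one 1D convolution (standard formula)."""
    return (length + 2 * padding - kernel_size) // stride + 1


SAMPLING_RATE_HZ = 1000  # assumed sampling rate, not stated in the summary
WINDOW_SECONDS = 10      # from the text: 10-second input
NUM_CONV_LAYERS = 8      # from the text: 8 hidden layers

length = SAMPLING_RATE_HZ * WINDOW_SECONDS
lengths = [length]
for _ in range(NUM_CONV_LAYERS):
    length = conv1d_output_length(length)
    lengths.append(length)

print(lengths)
```

Under these assumed settings each layer roughly halves the time axis, so the final sigmoid layer sees a short, heavily downsampled representation of the original signal.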

This model is trained using batches of size 10, a learning rate of [math]\displaystyle{ 10^{-4} }[/math], and the ADAM optimizer. ADAM (adaptive moment estimation) is a stochastic gradient optimization method that computes an adaptive learning rate for each parameter from estimates of the first and second moments of the gradients [5]. For training, the dataset is split into a training set, validation set, and test set with ratios 80-10-10.
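A single ADAM update for one scalar parameter can be sketched as below. The learning rate comes from the text; the decay rates `beta1 = 0.9`, `beta2 = 0.999` and `eps = 1e-8` are the defaults suggested in [5] and are assumed here, since the summary does not say which values the authors used. The toy objective f(x) = x^2 is purely illustrative.

```python
import math


def adam_step(param, grad, m, v, t, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
    """One ADAM update; m and v are running estimates of the gradient moments."""
    m = beta1 * m + (1 - beta1) * grad         # first moment (mean)
    v = beta2 * v + (1 - beta2) * grad * grad  # second moment (uncentered variance)
    m_hat = m / (1 - beta1 ** t)               # bias correction, t starts at 1
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Toy problem: minimize f(x) = x^2, whose gradient is 2x.
x, m, v = 1.0, 0.0, 0.0
for t in range(1, 101):
    grad = 2.0 * x
    x, m, v = adam_step(x, grad, m, v, t)
print(x)
```

Because the step is the ratio of the two moment estimates, its size stays close to the learning rate regardless of the raw gradient scale.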

During the training process, the model was trained from scratch numerous times, each time with randomly initialized weights, so that the variation introduced by random initialization would not distort the reported results.

The following images give a visual representation of the model.

Results

The paper first quantifies the accuracy of each single channel using 20-fold cross-validation. The channels with the highest individual accuracies were v5, v6, vx, vz, and ii. The researchers then investigated the accuracies of pairs drawn from these top five channels, again using 20-fold cross-validation, and concluded that the best-performing pair to feed into the neural network is lead v6 with lead vz. They then ran 100-fold cross-validation on the v6 and vz pair and compared the top 20, top 50, and all 100 models, finding that the standard deviation is non-trivial and that a few models performed very poorly.
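The pair search over the five best single channels is small enough to enumerate exhaustively. The snippet below only lists the candidate pairs; it assumes unordered pairs of distinct channels, and the variable names are illustrative.

```python
from itertools import combinations

# Top five single channels reported in the text.
top_channels = ["v5", "v6", "vx", "vz", "ii"]

# Every unordered pair of distinct channels that would need to be evaluated.
pairs = list(combinations(top_channels, 2))

print(len(pairs))
for pair in pairs:
    print(pair)
```

Restricting the search to the top five channels keeps the number of two-channel models to train at 10, rather than the 105 pairs available from all 15 channels.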

Next, they discussed two factors affecting the evaluation of model performance: 1) the random train-val-test split may affect the measured performance of the model, an effect that could be reduced with access to a larger dataset; and 2) the random initialization of the network weights has little effect on the measured performance, since average results remain high with a fixed train-val-test split.

Compared with the models in 12 other papers, the model in this article has the highest accuracy, specificity, and precision. The dataset contained 549 records from 290 unique patients. To ensure that the model did not overfit to specific patient profiles, the authors performed a patient-wise split, in which all records associated with a given patient are either in the test data or in the training data (but not both). They tested a 290-fold patient-wise split, and the pair v6 and vz again achieved the highest accuracy, as in the record-wise split. The second-best pair was ii and vz, which also contains the vz channel. Combining the two best pairs into the three channels v6, vz, and ii ultimately gave the best results: an average accuracy of 97.83% over 10 trials with the patient-wise split. Although the patient-wise split may yield lower accuracy than the record-wise split, the average remains very high.
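A patient-wise split can be sketched by grouping record indices by patient before dividing them. This is a simplified illustration, not the authors' 290-fold procedure: it holds out a fixed fraction of patients once, and the function name, `test_fraction`, and the toy patient IDs are all hypothetical.

```python
import random
from collections import defaultdict


def patient_wise_split(record_patient_ids, test_fraction=0.1, seed=0):
    """Split record indices so that no patient appears in both train and test."""
    by_patient = defaultdict(list)
    for record_idx, patient_id in enumerate(record_patient_ids):
        by_patient[patient_id].append(record_idx)

    patients = sorted(by_patient)
    random.Random(seed).shuffle(patients)
    n_test = max(1, round(test_fraction * len(patients)))
    test_patients = set(patients[:n_test])

    train, test = [], []
    for patient_id, records in by_patient.items():
        (test if patient_id in test_patients else train).extend(records)
    return train, test


# Toy example: 10 records belonging to 6 patients.
patient_ids = ["a", "a", "b", "c", "c", "c", "d", "e", "e", "f"]
train_idx, test_idx = patient_wise_split(patient_ids, test_fraction=0.2, seed=1)
print(train_idx, test_idx)
```

A record-wise split would shuffle the record indices directly, which lets different recordings of the same patient land on both sides of the split.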

Conclusion & Discussion

The paper introduced a new architecture for heart condition classification based on raw ECG signals using multiple leads. It outperformed the state-of-the-art model by a large margin of 1 percent. This study finds that out of the 15 ECG channels (12 conventional ECG leads and 3 Frank leads), channels v6, vz, and ii contain the most meaningful information for detecting myocardial infarction. Also, recent advances in machine learning can be leveraged to produce a model capable of classifying myocardial infarction with a cardiologist-level success rate. To further improve the performance of the models, access to a larger labeled dataset is needed. The PTB database is small, and it is difficult to test the true robustness of the model with a relatively small test set. If a larger dataset can be found to help correctly identify other heart conditions beyond myocardial infarction, the research group plans to share the deep learning models and develop an open-source, computationally efficient app that can be readily used by cardiologists.

A detailed analysis of the relative importance of each of the 15 ECG channels indicates that deep learning can identify myocardial infarction by processing only ten seconds of raw ECG data from the v6, vz, and ii leads, and that it reaches a cardiologist-level success rate. Deep learning algorithms may be readily used as commodity software. The neural network model that was originally designed to identify earthquakes may be redesigned and tuned to identify myocardial infarction. Feature engineering of ECG data is not required to identify myocardial infarction in the PTB database. This model only required ten seconds of raw ECG data to identify this heart condition with cardiologist-level performance. Access to a larger database should be provided to deep learning researchers so they can work on detecting different types of heart conditions. Deep learning researchers and the cardiology community can work together to develop deep learning algorithms that provide trustworthy, real-time information regarding heart conditions with minimal computational resources.

A Fourier transform (computed, for example, with the FFT) can be helpful when dealing with ECG signals. It transforms a signal from the time domain to the frequency domain, where features that are hidden in the time-domain representation may be discovered.
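As a small illustration of moving a signal into the frequency domain, the sketch below applies a naive discrete Fourier transform to a synthetic 5 Hz sine wave and locates the dominant frequency. The signal, the sampling rate, and the helper name `dft_magnitudes` are assumptions for illustration; a real pipeline would use an FFT routine on actual ECG data.

```python
import cmath
import math


def dft_magnitudes(signal):
    """Naive O(n^2) DFT; returns |X[k]| for the non-negative frequency bins."""
    n = len(signal)
    mags = []
    for k in range(n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal)
        )
        mags.append(abs(coeff))
    return mags


fs = 100  # hypothetical sampling rate in Hz
n = 200   # two seconds of samples
signal = [math.sin(2 * math.pi * 5 * i / fs) for i in range(n)]

mags = dft_magnitudes(signal)
peak_bin = max(range(len(mags)), key=mags.__getitem__)
peak_hz = peak_bin * fs / n  # convert bin index to frequency
print(peak_hz)
```

The spectrum concentrates the periodic component into a single bin, which is the kind of frequency-domain feature that is hard to read off the raw waveform.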

A limitation specified by the authors is the lack of labeled data. The use of a small dataset such as PTB makes it difficult to determine the robustness of the model due to the small size of the test set. Given a larger dataset, the model could be tested to see if it generalizes to identify heart conditions other than myocardial infarction.

Critiques

- The lack of large, labelled data sets is a common problem in applied deep learning studies. Since the PTB database is as small as described, the robustness of the model may be hard to gauge. There are very likely other physical factors at play that the deep neural network may not be able to adjust for, since health data can be somewhat subjective and/or inaccurate, especially when machines are used for measurement. This might mean that error was propagated forward in the study.

- Additionally, there is a risk of confirmation bias, which may occur when a model is self-training, especially given the fact that the training set is small.

- I feel that the results of deep learning models in medical settings, where the consequences of misclassification can be severe, should be evaluated by assigning weights to the different kinds of classification error. If a misclassification can lead to severe consequences, the network should be trained so that it errs towards safety. For example, in the case of heart disease, the consequences are very serious if the system says that there is no heart disease when in fact there is. So, the evaluation metric must be selected carefully.

- This is a useful and meaningful application of machine learning. Using deep learning to detect heart disease can be very helpful when the disease is difficult to detect by looking at an ECG with human eyes. The model is also useful for statistics, such as calculating the percentage of people who have heart disease. However, I think doctors should not trust the model's result 100%, since it is almost impossible for a model to reach 100% accuracy. So, I think double-checking by human eyes is necessary when a result looks unusual. Moreover, it would be interesting to discuss more medical applications of this method, such as analyzing brainwave recordings to predict a person's mood or to diagnose mental illness.

- Compared to datasets for other topics such as object recognition, the PTB database is quite small, with only 549 ECG records. The classes are also highly imbalanced (Table 1), with 4 records for myocarditis and 148 for myocardial infarction. Medical datasets can only be labeled by specialists, which is why they are relatively small. It would be great to have a larger, more comprehensive dataset.

- Only results using 20-fold cross validation were presented. It should be shown that the results can be reproduced using a more common number of folds, such as 5 or 10.

- There are potential issues with the inclusion of Frank leads. From a practitioner's standpoint, ECGs taken with Frank leads are less common, which could prevent the use of this technique. Additionally, Frank leads are expressible as linear combinations of the 12 traditional leads. The authors are not adding any fundamentally new information by including them, and their inclusion could be viewed as a form of feature selection (going against the authors' original intentions).

- It would be better if we could see how the model in this paper outperformed the methods that used feature selection. The results are not presented in enough detail.

- A new extended dataset for PTB, dubbed PTB-XL, has 21837 records. Using this dataset could yield a more accurate result, since the small size of the original PTB dataset limited the deep learning model.

- The paper mentions that it has better results, but by how much? What accuracy did the compared methods achieve, and which methods were compared? (The authors mention feature engineering methods, but this is vague.) Also, how much were the labels smoothed (for example, 1 -> 0.99 or 1 -> 0.95)? How much of a difference did the label smoothing make?

- It is nice to see that the authors also considered training and testing the model via a patient-wise split, which gives more insight into cases where a patient has multiple diagnostic records. As other critiques suggested, a patient-wise split might suffer from the lack of training data, given that there are only 290 unique patients in the PTB database. Also, acquiring prior knowledge from professionals about correlations, such as causal relationships, between different diagnoses might help improve the model.

- As mentioned above, the dataset is comparatively small in the context of machine learning. On the other hand, each record is roughly 100 seconds long, which is large for a single input. Therefore, it might be helpful to apply data augmentation during preprocessing to obtain a more reasonable dataset than the current one, which has a high chance of being biased or of leading to overfitting.

- Several points in the Model Architecture section can be improved. It mentions that both 1D batch normalization layers and label smoothing are used to improve the accuracy of the models, based on empirical results. Yet there is no breakdown of how each of these two methods improves the accuracy, so it is unclear whether each method is significant on its own or whether the model requires both simultaneously to achieve improved accuracy. More data could be provided on this. It is mentioned that "models are trained from scratch numerous times." How many times is numerous? Can we get the exact number? The training time of the models should also be provided, because if the models take a long time to train, training them from scratch every time may cause runtime issues.

- The authors should have indicated how much each method improved the accuracy. It is a little unclear how "better results" is defined. The paper would also be clearer if it included details about the model architecture, such as how training was performed and how long it took.

- The summary lacks several components, such as an explanation of the model, data preprocessing, and result visualization. It is hard to understand how the results improved since there is no comparison. Information about the dataset is also unclear: it is not well explained what the records are or how they were collected.

- The authors didn't specify how many epochs the model ran for. A common practice when dealing with small datasets is to run more epochs, at the risk of overfitting. However, the use of batch normalization (and perhaps the introduction of Dropout layers) helps prevent the model from overfitting the data or reinforcing the bias of the dataset, so more epochs may have improved performance in this case.

- It is difficult to justify the effectiveness of deep learning for detecting myocardial infarction in ECGs due to the lack of information available on the deep learning structure. Moreover, false negatives and false positives must be as close to 0 as possible, so the authors should test their algorithm on a variety of datasets before determining whether deep learning is effective.

- The authors do not motivate the use of ConvNetQuake as their baseline model for deep transfer learning. There are likely several other model candidates that perform similar signal processing related tasks such as CNN models for gravitational wave detection.

- Further, there is very limited mention of the ECG data used, and what features are of interest. For someone who has limited domain-knowledge about Myocardial Infarctions and ECGs, it is hard to interpret and relate the information both in the original paper and the summary. There is a large use of medical terminology that the average student is not likely to know. The absence of concrete data and results leads to a lot of confusion for someone trying to understand the relevance of the model to ECGs.

- Although the application prospects mentioned by the authors are exciting, the model still needs many improvements. First, misdiagnosis in a medical examination is a very serious matter, so a confusion matrix should be included in the assessment of model robustness, and both false positive and false negative results should be considered. Second, the dataset is still small. It is recommended that the authors carry out long-term tracking and supplementation of data on different heart diseases in order to form more robust conclusions in the future.

- The large amount of medical jargon mixed into the mathematical definitions is not justified; instead of disease names, we should have seen clear training labels from the database. The paper also does not fully justify the lack of data augmentation (amplifying the signal intensity, for example). Although a wave-related network structure was chosen, the paper does not build upon that network but rather restructures the model entirely. In the training stage, the use of the ADAM optimizer is standard practice, but why not explore other optimizers, and if the authors did, why did they abandon the pursuit? The training in each iteration (across all 100 iterations) is said to use a single random 80-10-10 split; however, for better accuracy and further examination of the model, it is good practice to reshuffle the data to guard against overfitting. The absence of an ensemble network to combat overfitting is also not addressed in the paper.

- Table 9 in Section 4.1 (record-wise split) of the paper lists results compared with other studies in the literature that also use the PTB database. It shows that the model implemented in the paper has the highest precision, accuracy, and specificity, but not the best sensitivity. It would be interesting to investigate how Huang's model achieved the best sensitivity and what it does differently from the model above. Also, there could have been a section comparing the record-wise split with the patient-wise split, because the three summary statistics (standard deviation, median, and average) of the resulting models vary quite a lot between the two splits.
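Several critiques above refer to accuracy, sensitivity, specificity, and precision. As a reminder of how these relate to a binary confusion matrix, here is a minimal sketch; the function name and the toy counts are hypothetical and are not results from the paper.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard binary metrics from confusion-matrix counts.

    Assumes every denominator is non-zero (both classes present and
    at least one positive prediction).
    """
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),  # recall on the diseased class
        "specificity": tn / (tn + fp),  # recall on the healthy class
        "precision": tp / (tp + fp),
    }


# Toy counts for illustration only.
metrics = classification_metrics(tp=45, fp=5, tn=40, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Sensitivity is the metric that falls when myocardial infarction cases are missed, which is why a model can lead on accuracy, specificity, and precision and still trail on the error type with the most severe clinical consequences.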

References

[1] Na Liu et al. "A Simple and Effective Method for Detecting Myocardial Infarction Based on Deep Convolutional Neural Network". In: Journal of Medical Imaging and Health Informatics (Sept. 2018). doi: 10.1166/jmihi.2018.2463.

[2] Naser Safdarian, N.J. Dabanloo, and Gholamreza Attarodi. "A New Pattern Recognition Method for Detection and Localization of Myocardial Infarction Using T-Wave Integral and Total Integral as Extracted Features from One Cycle of ECG Signal". In: J. Biomedical Science and Engineering (Aug. 2014). doi: 10.4236/jbise.2014.710081.

[3] L.D. Sharma and R.K. Sunkaria. "Inferior myocardial infarction detection using stationary wavelet transform and machine learning approach". In: Signal, Image and Video Processing (July 2017). doi: 10.1007/s11760-017-1146-z.

[4] Thibaut Perol, Michaël Gharbi, and Marine Denolle. "Convolutional neural network for earthquake detection and location". In: Science Advances (Feb. 2018). doi: 10.1126/sciadv.1700578.

[5] D. Kingma and J. Ba. "Adam: A Method for Stochastic Optimization". In: 3rd International Conference on Learning Representations (San Diego, 2015). url: https://arxiv.org/pdf/1412.6980.pdf (accessed 3 December 2020).