Deep Double Descent: Where Bigger Models and More Data Hurt

Presented By

Sam Senko, Tyler Verhaar and Ben Zhang

Introduction

In classical statistical learning theory, the bias-variance trade-off is a fundamental concept. The idea is that higher-complexity models have lower bias but higher variance. Based on this idea, concepts like overfitting and underfitting were introduced to describe the training of classification models. However, the paper presents modern findings that challenge the "conventional wisdom" mentioned above.

Complex models with millions of parameters generally perform much better than simpler models, yet they behave differently from what the conventional concepts indicate. The paper introduces two regimes in deep learning. In the under-parameterized regime, where the model has lower complexity, the test error follows the "U"-shaped curve of the bias-variance trade-off. This regime represents the conventional idea. However, once the model has sufficiently large complexity to interpolate the training data (that is, to reach approximately zero training error), the modern intuition, as this paper suggests, is that "bigger models are better".

Previous Work

Belkin et al. (2019), who first postulated in generality the phenomenon that "bigger models are better", named it "double descent" and demonstrated it for decision trees, random features, and 2-layer neural networks with [math]\displaystyle{ \ell_2 }[/math] loss on a variety of learning tasks including MNIST and CIFAR-10.

However, similar behaviour had already been observed in Opper (1995; 2001), Advani & Saxe (2017), Spigler et al. (2018) and Geiger et al. (2019b). This concept has since been applied in various fields, including identity mappings in deep residual networks for computer vision (Kaiming He, Xiangyu Zhang, Shaoqing Ren and Jian Sun) and explaining and harnessing adversarial examples (Ian J Goodfellow, Jonathon Shlens and Christian Szegedy).

Motivation

Since the traditional notion of model complexity does not capture a model's performance well, the notion of "Effective Model Complexity" was introduced in this paper to fill the gap.

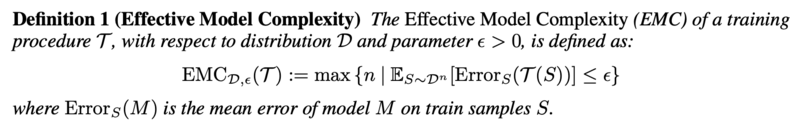

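We define a training procedure [math]\displaystyle{ \mathcal{T} }[/math] to be any procedure that takes as input a set [math]\displaystyle{ S = \{(x_1, y_1), \ldots, (x_n, y_n)\} }[/math] of labeled training samples and outputs a classifier [math]\displaystyle{ \mathcal{T}(S) }[/math] that maps data to labels. We define the Effective Model Complexity of [math]\displaystyle{ \mathcal{T} }[/math] (with respect to a distribution [math]\displaystyle{ \mathcal{D} }[/math]) to be the maximum number of samples [math]\displaystyle{ n }[/math] on which [math]\displaystyle{ \mathcal{T} }[/math] achieves, on average, approximately zero training error.

The formal definition of Effective Model Complexity is given below: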

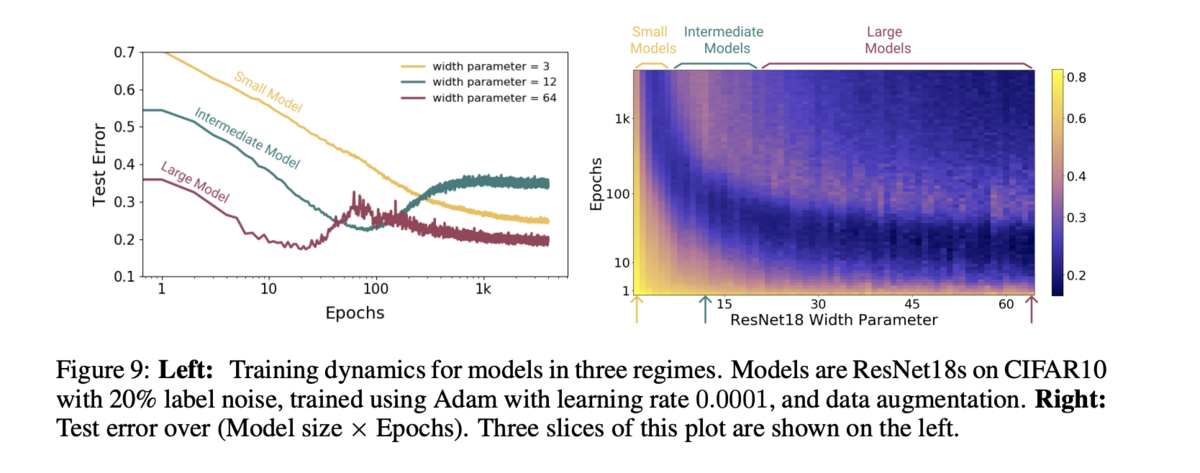
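
Written out, the definition from the paper [33] is [math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) := \max \{ n \mid \mathbb{E}_{S \sim \mathcal{D}^n}[\text{Error}_S(\mathcal{T}(S))] \leq \varepsilon \} }[/math], where [math]\displaystyle{ \text{Error}_S(M) }[/math] is the mean error of model [math]\displaystyle{ M }[/math] on the training samples [math]\displaystyle{ S }[/math]. As a rough illustration of how this quantity could be estimated empirically, the sketch below uses a toy setting that is not from the paper: the training procedure is a hypothetical lookup table that memorizes at most `capacity` samples, and the data are distinct inputs with uniformly random binary labels. The function names, the toy distribution and the search bound `n_max` are all assumptions made for this example.

```python
import random

def train_lookup_table(samples, capacity):
    """Hypothetical training procedure T: memorize at most `capacity` samples."""
    table = dict(samples[:capacity])
    # Inputs that were not memorized receive the default label 0.
    return lambda x: table.get(x, 0)

def mean_train_error(n, capacity, trials, rng):
    """Average training error of T over `trials` random training sets of size n."""
    total = 0.0
    for _ in range(trials):
        # Toy distribution D: distinct inputs with uniformly random binary labels.
        samples = [(i, rng.randint(0, 1)) for i in range(n)]
        classifier = train_lookup_table(samples, capacity)
        total += sum(classifier(x) != y for x, y in samples) / n
    return total / trials

def estimate_emc(capacity, eps, n_max, trials=200, seed=0):
    """Largest n <= n_max whose estimated mean training error is at most eps."""
    rng = random.Random(seed)
    emc = 0
    for n in range(1, n_max + 1):
        if mean_train_error(n, capacity, trials, rng) <= eps:
            emc = n
    return emc

emc = estimate_emc(capacity=20, eps=0.05, n_max=60)
print("estimated EMC:", emc)
```

In this toy setting the estimate lands at or slightly above `capacity`, because the table fits up to `capacity` samples exactly and the tolerance `eps` allows a few additional samples to be misclassified. For a real network the same loop would require retraining the model for every candidate `n`, which is why the EMC is mostly used as a conceptual tool.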

As mentioned before, modern complex models are analyzed in terms of different regimes. Three regimes are considered: the "under-parameterized regime", the "over-parameterized regime" and the "critically parameterized regime".

Model Architectures and Experiments

A variety of experiments were done to demonstrate the double descent phenomenon and its connection to effective model complexity in a number of different situations. Three main model architectures were used in these experiments:

- A simple convolutional neural network with 4 convolutional layers and 1 fully connected layer. The widths of the convolutional layers were k, 2k, 4k and 8k respectively where k is a parameter which was varied in the experiments.

- ResNets, introduced in He et al. (2016), with the convolutional layers having widths k, 2k, 4k and 8k respectively, where k is again a parameter that was varied.

- Transformers, an attention-based (non-recurrent) architecture often used in natural language processing. A 6-layer architecture was used. The embedding dimension was varied to change the complexity of the model, and the width of the fully connected layers was scaled proportionally to the embedding dimension.
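
To give a feel for how the width parameter k controls the size of the simple convolutional network described above, the following sketch computes the layer widths and a rough weight count. The 3x3 kernels, 3 input channels, 10 output classes, and a fully connected layer that sees only the 8k final channels are assumptions made for this illustration; they are not stated in this summary. Biases and normalization parameters are ignored.

```python
def conv_widths(k):
    """Channel widths of the four convolutional layers for width parameter k."""
    return [k, 2 * k, 4 * k, 8 * k]

def approx_param_count(k, in_channels=3, kernel=3, num_classes=10):
    """Rough weight count; in_channels, kernel and num_classes are assumed values."""
    total = 0
    prev = in_channels
    for width in conv_widths(k):
        total += prev * width * kernel * kernel  # weights of one conv layer
        prev = width
    total += prev * num_classes  # weights of the single fully connected layer
    return total

for k in (16, 32, 64):
    print(k, conv_widths(k), approx_param_count(k))
```

Under these assumptions the count grows roughly quadratically in k, so doubling k roughly quadruples the number of weights.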

The above models were trained with variants of gradient descent, with the number of gradient steps varying from around 5 thousand to around 500 thousand depending on the particular model and the experiment. In some experiments, label noise was used where, with probability p, the label was replaced by an incorrect label chosen uniformly at random.
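
The label noise described above can be sketched as follows. This is a minimal illustration rather than the authors' code: the function name, the integer label encoding `0 .. num_classes - 1` and the `seed` argument are assumptions.

```python
import random

def add_label_noise(labels, num_classes, p, seed=0):
    """With probability p, replace each label by a uniformly random incorrect label."""
    rng = random.Random(seed)
    noisy = []
    for y in labels:
        if rng.random() < p:
            # Draw from the num_classes - 1 incorrect labels by skipping over y.
            wrong = rng.randrange(num_classes - 1)
            noisy.append(wrong if wrong < y else wrong + 1)
        else:
            noisy.append(y)
    return noisy

labels = [i % 10 for i in range(1000)]
noisy = add_label_noise(labels, num_classes=10, p=0.2)
flipped = sum(a != b for a, b in zip(labels, noisy))
print("fraction of changed labels:", flipped / len(labels))
```

Because the replacement is always an incorrect label, the fraction of changed labels is close to p itself, rather than the smaller fraction obtained when the replacement is drawn from all classes.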

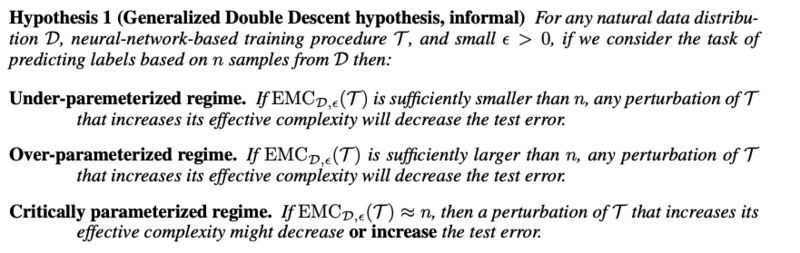

The first experiment investigated the effect of varying model complexity at various levels of label noise. Results are given below:

A few observations can be made, confirming the predictions of the paper authors. Firstly, there is double descent: the test error decreases until a certain point at which the model overfits, leading to an increase in test error, followed eventually by a second decrease in the test error. Additionally, at all levels of label noise, the peak occurred around the threshold where the EMC is approximately the size of the dataset (and so the train error first approaches 0), confirming the hypotheses made by the authors. Finally, increasing label noise naturally moved this critical threshold further right, which can be seen by the peaks being further right with more label noise.

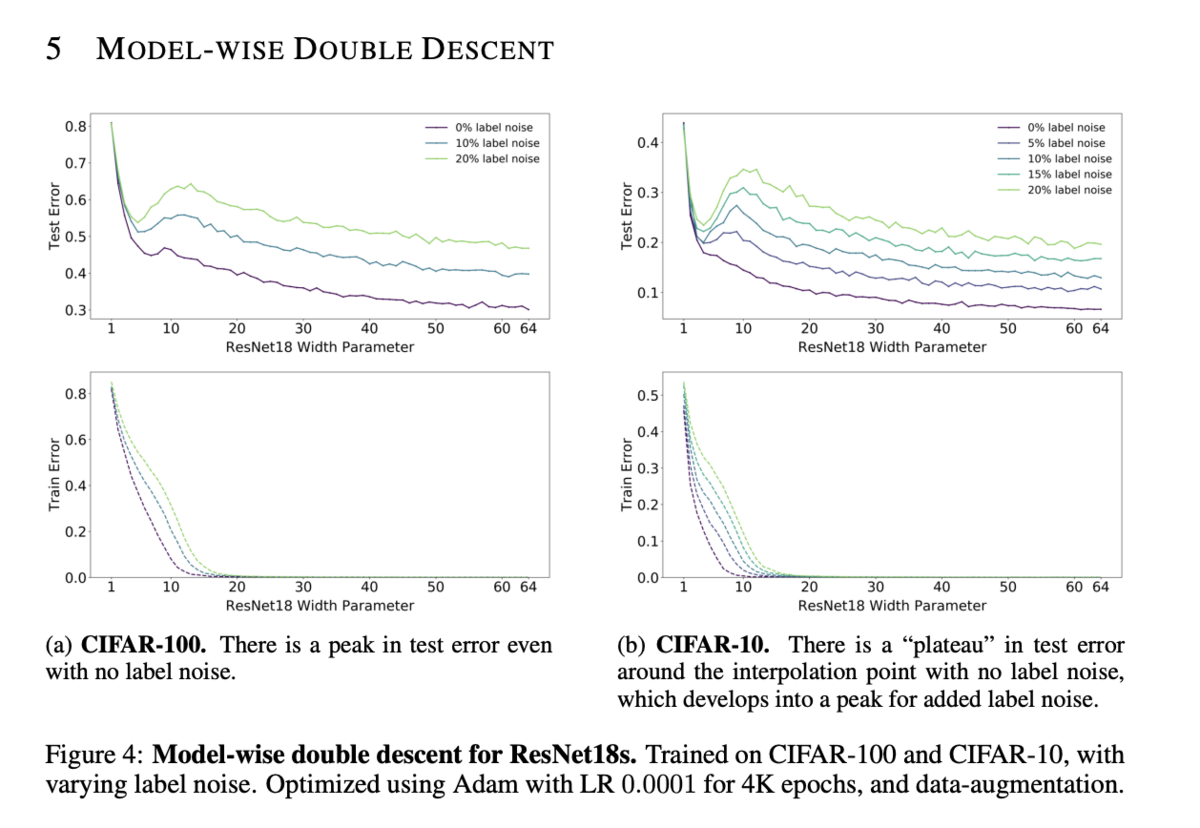

The next experiment investigated the effect of the number of epochs used on the test error for a variety of different model complexities. Note that increasing the number of epochs increases the EMC, although it may be impossible to reach a particular EMC without also increasing the model complexity.

Here, we see that the small model is unable to reach a sufficiently large EMC to see overfitting begin. For the medium-sized model, the model is just barely able to reach an EMC of approximately the size of the data and, therefore, sees a traditional U-shaped curve without a further decrease in the test error. However, the large model, which is able to exceed the threshold where EMC is approximately the data size, does see a double descent as would be expected. This has the practical implication that certain forms of early stopping may not be effective for very large models, as they may stop before reaching the second descent.
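
The remark about early stopping can be illustrated with a small sketch. The test-error values below are made-up numbers shaped like an epoch-wise double descent curve; they are not results from the paper. The patience rule is one common form of early stopping, chosen here as an assumption.

```python
def early_stop_epoch(test_errors, patience):
    """Index of the best epoch seen before patience-based early stopping halts."""
    best_idx = 0
    for i, err in enumerate(test_errors):
        if err < test_errors[best_idx]:
            best_idx = i
        elif i - best_idx >= patience:
            # No improvement for `patience` epochs: stop training here.
            break
    return best_idx

# Synthetic, illustrative curve: first descent, a bump, then a second descent.
curve = [0.50, 0.40, 0.32, 0.30, 0.33, 0.38, 0.42, 0.40, 0.34, 0.28, 0.25, 0.24]

print(early_stop_epoch(curve, patience=2))   # halts during the bump
print(early_stop_epoch(curve, patience=10))  # survives the bump
```

With a short patience the rule settles on the first minimum and never sees the lower error reached after the bump, which is the failure mode described above.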

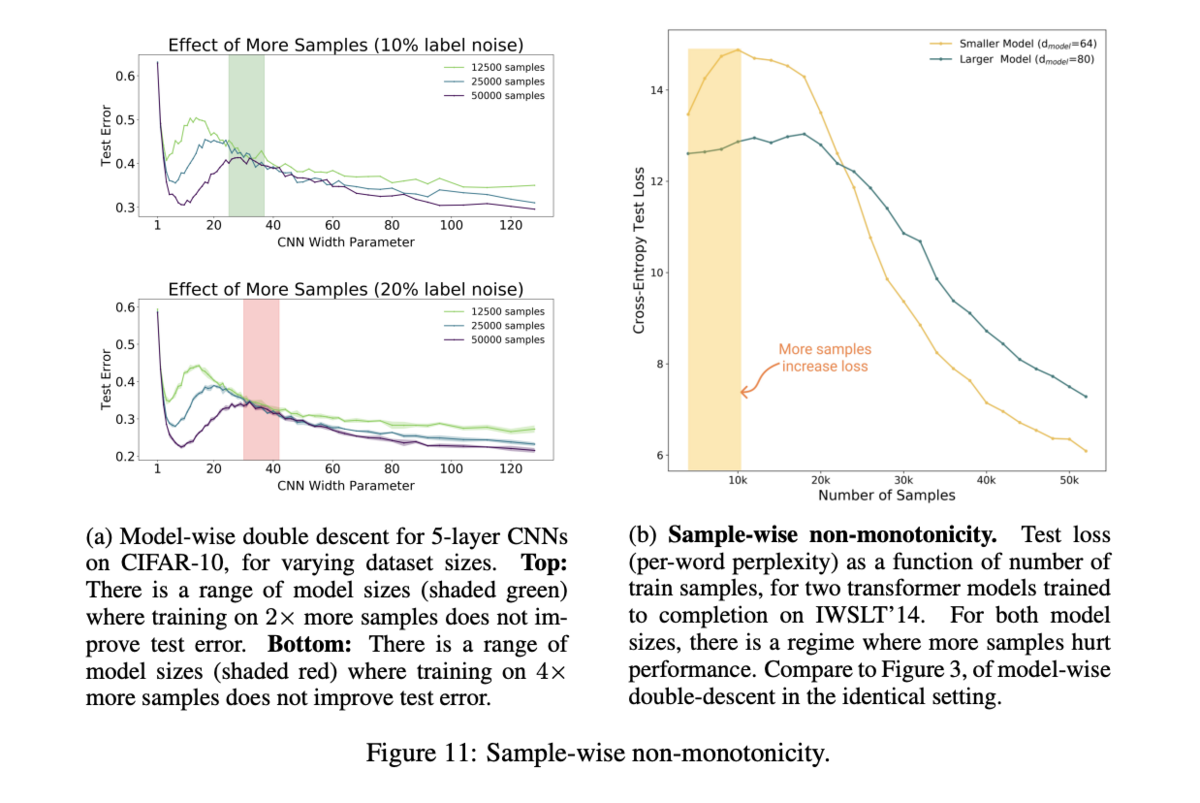

The last experiment looked at how test error changes with varying sizes of the data used to train the model. Note that as the data size is increased, the interpolation threshold (that is, the model complexity needed to achieve near-zero train error) increases. The results of this experiment are in the next figure:

As expected, the total area under the test error curve decreased as the size of the dataset used increased (meaning that, overall, more data was generally better). However, corresponding to the rightward shift in the interpolation threshold, the peak of the test error curve also shifted to the right as more data was used. This had the perhaps unexpected effect that, at certain complexity levels, the model which was trained on more data performed similarly to or in some cases even worse than the model trained on less data. Note that all of these results do agree with the hypotheses the authors made.

Conclusion

In summary, this paper defined a notion of effective model complexity (EMC) and introduced a generalized double descent hypothesis: for any natural data distribution [math]\displaystyle{ \mathcal{D} }[/math], neural-network-based training procedure [math]\displaystyle{ \mathcal{T} }[/math], and small [math]\displaystyle{ \varepsilon \gt 0 }[/math], if we consider the task of predicting labels based on [math]\displaystyle{ n }[/math] samples from [math]\displaystyle{ \mathcal{D} }[/math] then

- If [math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) \ll n }[/math] then any perturbation of [math]\displaystyle{ \mathcal{T} }[/math] that increases [math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) }[/math] will decrease test error.

- If [math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) \gg n }[/math] then any perturbation of [math]\displaystyle{ \mathcal{T} }[/math] that increases [math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) }[/math] will decrease test error.

- If [math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) \approx n }[/math] then any perturbation of [math]\displaystyle{ \mathcal{T} }[/math] that increases [math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) }[/math] may increase or decrease test error.

The three statements above correspond to the under-, over-, and critically parameterized regimes respectively. Because the hypothesis does not define precisely what [math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) \ll n }[/math] and [math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) \gg n }[/math] mean, the first two sub-hypotheses are harder to study. However, the experiments conducted do suggest that there is indeed a "critical interval" around the "interpolation threshold" (i.e. when [math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) = n }[/math]) where the third statement holds, and outside this critical interval either the first or the second statement holds (depending on which side of the interval we are on).

Evidence for the hypothesis was provided in three different settings in deep learning, which show that the hypothesis is robust to different choices of model architecture (corresponding to model-wise double descent), data (corresponding to sample-wise non-monotonicity) and training procedure (corresponding to epoch-wise double descent). In particular, for "model-wise double descent" we saw (see Figure 4) that the test errors follow the pattern predicted by the authors: the peak in error occurred where [math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) \approx n }[/math], and increasing label noise moved the critical threshold rightwards. For different training procedures, the "epoch-wise double descent" phenomenon was observed as well (Figure 4). The smaller model (characterized by [math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) \ll n }[/math]) cannot reach a large enough EMC to exhibit overfitting behaviour, the medium-sized model ([math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) \approx n }[/math]) has the traditional U-shaped test error curve (agreeing with the classical bias-variance trade-off), and the larger model ([math]\displaystyle{ \text{EMC}_{\mathcal{D}, \varepsilon}(\mathcal{T}) \gg n }[/math]) is able to reach an EMC at which the test error declines again (as hypothesized). Lastly, we saw evidence of sample-wise non-monotonicity (see Figure 11): the test errors decreased for larger datasets, and the test error peak shifted in a way that was related to the size of the training data (as hypothesized).

Finally, the authors believe that the "characterization of the critical regime" provides an intuitive and useful way of thinking about models with respect to their complexity and training data. That is, if a model and the corresponding training procedure are only barely able to fit the training data, then it makes sense that the model may not generalize well. In particular, in that situation small changes in the model, input data, or training procedure can lead to unexpected behaviour.

Critique

There are three primary critiques/notes worth mentioning.

- Label Noise - In all experiments presented by the authors, the double-descent phenomenon is most readily observed in settings with label noise. The authors argue that this effect is not a result of the label noise itself but rather of model mis-specification. They view adding label noise as a proxy for making the natural data distribution harder to fit, which in turn increases the model mis-specification. The example given is as follows: consider a setting where the label noise is not random (in particular, suppose it is pseudorandom with respect to the family of classifiers being trained; note that pseudorandom noise is deterministic and invertible). The performance of a Bayes optimal classifier would not change, but we would observe a double descent identical to that with truly random label noise. Therefore, by this line of reasoning, the relation between label noise and the double descent behaviour is not fundamentally about the label noise itself but rather a result of model mis-specification.

- Notion of Model Complexity - The notion of Effective Model Complexity defined by the authors differs from classical notions of model complexity (for instance, Rademacher complexity) in two main respects. Firstly, EMC depends on the true labels of the data distribution and secondly, EMC depends not only on the model architecture but also on the training procedure. The authors argue that a complexity measure lacking either of these properties would not be able to characterize the location of the double descent peak.

- Early Stopping - Many of the observed phenomena may not occur when early stopping is used. However, the authors argue that this is in line with their predictions: if a model cannot reach a train error of 0, then the EMC will not be sufficiently close to [math]\displaystyle{ n }[/math] for us to expect the atypical behaviour in the test error. Furthermore, the authors provide evidence of model-wise double descent with optimal early stopping time (ResNet performance on CIFAR-100 with no label noise).

References

[1] Madhu S Advani and Andrew M Saxe. High-dimensional dynamics of generalization error in neural networks. arXiv preprint arXiv:1710.03667, 2017.

[2] Peter L Bartlett, Philip M Long, Gábor Lugosi, and Alexander Tsigler. Benign overfitting in linear regression. arXiv preprint arXiv:1906.11300, 2019.

[3] Mikhail Belkin, Daniel Hsu, Siyuan Ma, and Soumik Mandal. Reconciling modern machine learning and the bias-variance trade-off. arXiv preprint arXiv:1812.11118, 2018.

[4] Mikhail Belkin, Daniel Hsu, and Ji Xu. Two models of double descent for weak features. arXiv preprint arXiv:1903.07571, 2019.

[5] Koby Bibas, Yaniv Fogel, and Meir Feder. A new look at an old problem: A universal learning approach to linear regression. arXiv preprint arXiv:1905.04708, 2019.

[6] Mauro Cettolo, Christian Girardi, and Marcello Federico. Wit3: Web inventory of transcribed and translated talks. In Proceedings of the 16th Conference of the European Association for Machine Translation (EAMT), pp. 261–268, Trento, Italy, May 2012.

[7] Mario Geiger, Arthur Jacot, Stefano Spigler, Franck Gabriel, Levent Sagun, Stéphane d’Ascoli, Giulio Biroli, Clément Hongler, and Matthieu Wyart. Scaling description of generalization with number of parameters in deep learning. arXiv preprint arXiv:1901.01608, 2019a.

[8] Mario Geiger, Stefano Spigler, Stéphane d’Ascoli, Levent Sagun, Marco Baity-Jesi, Giulio Biroli, and Matthieu Wyart. Jamming transition as a paradigm to understand the loss landscape of deep neural networks. Physical Review E, 100(1):012115, 2019b.

[9] Ian J Goodfellow, Jonathon Shlens, and Christian Szegedy. Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572, 2014.

[10] Trevor Hastie, Robert Tibshirani, Jerome Friedman, and James Franklin. The elements of statistical learning: data mining, inference and prediction. The Mathematical Intelligencer, 27(2):83–85, 2005.

[11] Trevor Hastie, Andrea Montanari, Saharon Rosset, and Ryan J Tibshirani. Surprises in high-dimensional ridgeless least squares interpolation. arXiv preprint arXiv:1903.08560, 2019.

[12] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Identity mappings in deep residual networks. In European conference on computer vision, pp. 630–645. Springer, 2016.

[13] Yanping Huang, Yonglong Cheng, Dehao Chen, HyoukJoong Lee, Jiquan Ngiam, Quoc V. Le, and Zhifeng Chen. Gpipe: Efficient training of giant neural networks using pipeline parallelism. CoRR, abs/1811.06965, 2018. URL http://arxiv.org/abs/1811.06965.

[14] Alex Krizhevsky. Learning multiple layers of features from tiny images. Technical report, 2009.

[15] Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton. Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems, pp. 1097–1105, 2012.

[16] Aleksander Madry, Aleksandar Makelov, Ludwig Schmidt, Dimitris Tsipras, and Adrian Vladu. Towards deep learning models resistant to adversarial attacks. arXiv preprint arXiv:1706.06083, 2017.

[17] Song Mei and Andrea Montanari. The generalization error of random features regression: Precise asymptotics and double descent curve. arXiv preprint arXiv:1908.05355, 2019.

[18] Partha P. Mitra. Understanding overfitting peaks in generalization error: Analytical risk curves for l2 and l1 penalized interpolation. ArXiv, abs/1906.03667, 2019.

[19] Vidya Muthukumar, Kailas Vodrahalli, and Anant Sahai. Harmless interpolation of noisy data in regression. arXiv preprint arXiv:1903.09139, 2019.

[20] Preetum Nakkiran, Gal Kaplun, Dimitris Kalimeris, Tristan Yang, Benjamin L Edelman, Fred Zhang, and Boaz Barak. Sgd on neural networks learns functions of increasing complexity. arXiv preprint arXiv:1905.11604, 2019.

[21] Manfred Opper. Statistical mechanics of learning: Generalization. The Handbook of Brain Theory and Neural Networks, 922-925., 1995.

[22] Manfred Opper. Learning to generalize. Frontiers of Life, 3(part 2), pp.763-775., 2001.

[23] Myle Ott, Sergey Edunov, Alexei Baevski, Angela Fan, Sam Gross, Nathan Ng, David Grangier, and Michael Auli. fairseq: A fast, extensible toolkit for sequence modeling. In Proceedings of NAACL-HLT 2019: Demonstrations, 2019.

[24] David Page. How to train your resnet. https://myrtle.ai/how-to-train-your-resnet-4-architecture/, 2018.

[25] Adam Paszke, Sam Gross, Soumith Chintala, Gregory Chanan, Edward Yang, Zachary DeVito, Zeming Lin, Alban Desmaison, Luca Antiga, and Adam Lerer. Automatic differentiation in PyTorch. In NeurIPS Autodiff Workshop, 2017.

[26] Alec Radford, Jeff Wu, Rewon Child, David Luan, Dario Amodei, and Ilya Sutskever. Language models are unsupervised multitask learners. 2019.

[27] Ali Rahimi and Benjamin Recht. Random features for large-scale kernel machines. In Advances in neural information processing systems, pp. 1177–1184, 2008.

[28] Rico Sennrich, Barry Haddow, and Alexandra Birch. Neural machine translation of rare words with subword units. ArXiv, abs/1508.07909, 2015.

[29] Stefano Spigler, Mario Geiger, Stéphane d’Ascoli, Levent Sagun, Giulio Biroli, and Matthieu Wyart. A jamming transition from under- to over-parametrization affects loss landscape and generalization. arXiv preprint arXiv:1810.09665, 2018.

[30] Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. Going deeper with convolutions. In Computer Vision and Pattern Recognition (CVPR), 2015. URL http://arxiv.org/abs/1409.4842.

[31] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, and Illia Polosukhin. Attention is all you need. CoRR, abs/1706.03762, 2017.

[32] Chiyuan Zhang, Samy Bengio, Moritz Hardt, Benjamin Recht, and Oriol Vinyals. Understanding deep learning requires rethinking generalization. ICLR, abs/1611.03530, 2016.

[33] Preetum Nakkiran, Gal Kaplun, Yamini Bansal, Tristan Yang, Boaz Barak, and Ilya Sutskever. Deep double descent: Where bigger models and more data hurt. arXiv preprint arXiv:1912.02292, 2019. URL https://arxiv.org/abs/1912.02292v1