stat441F18/YOLO: Difference between revisions

No edit summary |

No edit summary |

||

| (7 intermediate revisions by the same user not shown) | |||

| Line 3: | Line 3: | ||

Some of the cited benefits of the YOLO model are: | Some of the cited benefits of the YOLO model are: | ||

#''Extreme speed''. This is attributable to the framing of detection as a single regression problem. | #''Extreme speed''. This is attributable to the framing of detection as a single regression problem. Both training and prediction only require a single network evaluation, hence the name ''"You Only Look Once"''. | ||

#''Learning of generalizable object representations''. YOLO is experimentally found to degrade in average precision at a slower rate than comparable methods such as R-CNN when exposed to datasets with significantly varying pixel-level test data, such as the Picasso and People-Art datasets. | #''Learning of generalizable object representations''. YOLO is experimentally found to degrade in average precision at a slower rate than comparable methods such as R-CNN when exposed to datasets with significantly varying pixel-level test data, such as the Picasso and People-Art datasets. | ||

#''Global reasoning during prediction''. Since YOLO sees whole images during training, unlike other techniques which only see a subsection of the image, its neural network implicitly encodes global contextual information about classes in addition to their local properties. As a result, YOLO makes less misclassification errors on image backgrounds than other methods which cannot benefit from this global context. | #''Global reasoning during prediction''. Since YOLO sees whole images during training, unlike other techniques which only see a subsection of the image, its neural network implicitly encodes global contextual information about classes in addition to their local properties. As a result, YOLO makes less misclassification errors on image backgrounds than other methods which cannot benefit from this global context. | ||

| Line 12: | Line 12: | ||

=Encoding predictions= | =Encoding predictions= | ||

Perhaps the most important part of the YOLO model is its novel approach to prediction encoding. | |||

The input image is first divided into an <math>S \times S</math> grid of cells. Now for the given bounding boxes of objects in the input image, the YOLO model takes the central point of the bounding box and links it to the grid cell in which it is contained. This cell will be responsible for the detection of that object. | The input image is first divided into an <math>S \times S</math> grid of cells. Now for the given bounding boxes of objects in the input image, the YOLO model takes the central point of the bounding box and links it to the grid cell in which it is contained. This cell will be responsible for the detection of that object. | ||

| Line 52: | Line 52: | ||

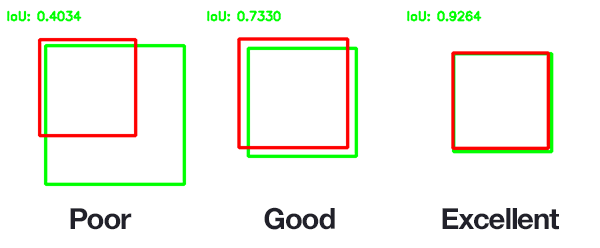

Here IOU is the ''intersection over union'', also called the ''Jaccard index''<sup>[[#References|[5]]]</sup>. It is an evaluation metric that rewards bounding boxes which significantly overlap with the ground-truth bounding boxes of labelled objects in the input. | Here IOU is the ''intersection over union'', also called the ''Jaccard index''<sup>[[#References|[5]]]</sup>. It is an evaluation metric that rewards bounding boxes which significantly overlap with the ground-truth bounding boxes of labelled objects in the input. | ||

[[File:iou_examples.png]] | |||

Each grid cell must also predict <math>C</math> class probabilities <math>P(C_i | \text{object})</math>. The set of class probabilities is only predicted once for each grid cell, irrespective of the number of boxes <math>B</math>. Thus combining the grid cell division with the bounding box and class probability predictions, we end up with a tensor output of the shape <math>S \times S \times (B \cdot 5 + C)</math>. | Each grid cell must also predict <math>C</math> class probabilities <math>P(C_i | \text{object})</math>. The set of class probabilities is only predicted once for each grid cell, irrespective of the number of boxes <math>B</math>. Thus combining the grid cell division with the bounding box and class probability predictions, we end up with a tensor output of the shape <math>S \times S \times (B \cdot 5 + C)</math>. | ||

| Line 165: | Line 167: | ||

*<math>\lambda_{\text{coord}}</math> is a parameter designed to increase the loss stemming from bounding box coordinate predictions, set in the paper to be <math>5</math> | *<math>\lambda_{\text{coord}}</math> is a parameter designed to increase the loss stemming from bounding box coordinate predictions, set in the paper to be <math>5</math> | ||

*<math>\lambda_{\text{noobj}}</math> is a parameter designed to decrease the loss stemming from confidence predictions for boxes that do not contain objects, set in the paper to be <math>0.5</math> | *<math>\lambda_{\text{noobj}}</math> is a parameter designed to decrease the loss stemming from confidence predictions for boxes that do not contain objects, set in the paper to be <math>0.5</math> | ||

*<math>(\hat{x}, \hat{y})</math> are | *<math>(\hat{x}, \hat{y})</math> are positional coordinates for the given prediction | ||

*<math>(\hat{w}, \hat{h})</math> are | *<math>(\hat{w}, \hat{h})</math> are dimensional coordinates for the given prediction | ||

*<math>\hat{C}</math> is the IOU of the predicted bounding box with the ground-truth bounding box. | *<math>\hat{C}</math> is the IOU of the predicted bounding box with the ground-truth bounding box. | ||

| Line 212: | Line 214: | ||

Naive sum-of-squared error would weigh localization error equally to classification error. This is not a desirable property, as it can shift the confidence of many grid cells which do not contain objects towards zero and "overpower" the gradients for grid cells containing objects. This would lead to model instability, and this problem can be alleviated by modifying the weighting of localization error relative to classification error with the <math>\lambda</math> parameters described above. | Naive sum-of-squared error would weigh localization error equally to classification error. This is not a desirable property, as it can shift the confidence of many grid cells which do not contain objects towards zero and "overpower" the gradients for grid cells containing objects. This would lead to model instability, and this problem can be alleviated by modifying the weighting of localization error relative to classification error with the <math>\lambda</math> parameters described above. | ||

====What is unique about this loss function?==== | |||

Unlike classifier-based approaches to object detection such as R-CNN and its variants, this loss function directly corresponds to the performance of our object detector. Furthermore, our model is trained in its entirety on this loss function - we do not have a multi-stage pipeline which requires separate training mechanisms. | |||

==Network design limitations== | |||

* Since each grid cell is constrained to producing <math>B</math> bounding boxes, the number of objects in close proximity in an image which can be detected at once is limited. For instance, a gaggle of 4 geese centralized in the same grid cell could not be predicted at once by the model if <math>B < 4</math>, and in the paper's implementation, <math>B = 2</math>. | |||

* The loss function treats errors in large bounding boxes the same as small bounding boxes to some extent, which is inconsistent with the relative contributions on IOU of errors in boxes of varying size. Thus, the main source of error in the model is incorrect localizations. | |||

=Experimental results= | |||

YOLO was compared to other real-time detection systems on the PASCAL VOC detection dataset<sup>[[#References|[8]]]</sup> from 2007 and 2012. | |||

===Real-Time System Results on PASCAL VOC 2007=== | |||

{| class="wikitable" style="text-align: left;" | |||

|+ <!-- caption --> | |||

|- | |||

! Real-Time Detector !! Train !! align="right"| mAP !! align="right"| FPS | |||

|- | |||

| 100Hz DPM<sup>[[#References|[8]]]</sup> || 2007 || align="right"| 16 || align="right"| 100 | |||

|- | |||

| 30Hz DPM<sup>[[#References|[8]]]</sup> || 2007 || align="right"| 26.1 || align="right"| 30 | |||

|- | |||

| Fast YOLO || 2007+2012 || align="right"| 52.7 || align="right"| 155 | |||

|- | |||

| YOLO || 2007+2012 || align="right"| 63.4 || align="right"| 45 | |||

|} | |||

Here DPM is the deformable parts model, which as previously mentioned uses a sliding window approach for detection. The single convolutional network employed by YOLO leads to both a faster and more accurate model, as seen in the results. | |||

===Less-Than-Real-Time System Results on PASCAL VOC 2007=== | |||

{| class="wikitable" style="text-align: left;" | |||

|+ <!-- caption --> | |||

|- | |||

! Less-Than-Real-Time Detector !! Train !! align="right"| mAP !! align="right"| FPS | |||

|- | |||

| Fastest DPM<sup>[[#References|[10]]]</sup> || 2007 || align="right"| 30.4 || align="right"| 15 | |||

|- | |||

| R-CNN Minus R<sup>[[#References|[11]]]</sup> || 2007 || align="right"| 53.5 || align="right"| 6 | |||

|- | |||

| Fast R-CNN<sup>[[#References|[3]]]</sup> || 2007+2012 || align="right"| 70 || align="right"| 0.5 | |||

|- | |||

| Faster R-CNN VGG-16<sup>[[#References|[4]]]</sup> || 2007+2012 || align="right"| 73.2 || align="right"| 7 | |||

|- | |||

| Faster R-CNN ZF <sup>[[#References|[4]]]</sup> || 2007+2012 || align="right"| 62.1 || align="right"| 18 | |||

|- | |||

| YOLO VGG-16 || 2007+2012 || align="right"| 66.4 || align="right"| 21 | |||

|} | |||

Here YOLO VGG-16 is the same YOLO model, except trained with VGG-16. It is correspondingly much more accurate yet slower than the network discussed previously. | |||

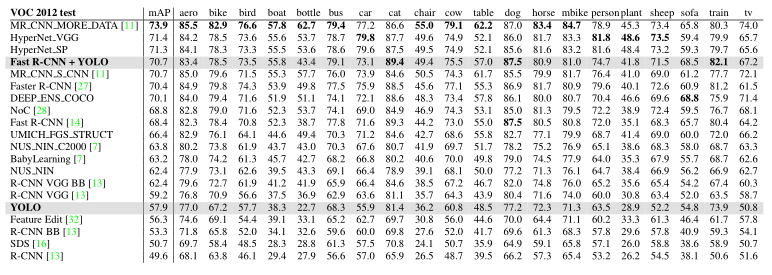

===VOC 2012 results=== | |||

[[File:voc_results.png]] | |||

One can see that YOLO struggles with small objects due to localization error being the primary problem of the model design, with lower scores in categories such as "sheep", "bottle", and "tv". We can also see that YOLO is not competitive in terms of mAP compared to state of the art models, however these results are not measuring FPS (or speed) in which YOLO excels. | |||

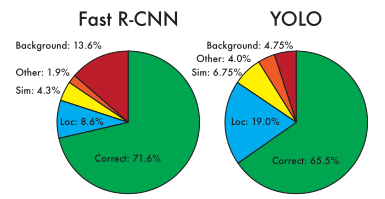

===Error component analysis compared to R-CNN=== | |||

[[File:error_components.png]] | |||

In this experiment, the methodology of Hoiem et al. <sup>[[#References|[12]]]</sup> was used to investigate the error characteristics of YOLO compared to R-CNN. Here the areas on the pie chart can be interpreted as follows: | |||

* Correct: the correct class was predicted, and <math>\text{IOU} > 0.5</math> | |||

* Localization (Loc.): the correct class was predicted, and <math> 0.1 < \text{IOU} < 0.5 </math> | |||

* Similar (Sim.): a similar class was predicted, and <math>\text{IOU} > 0.1</math> | |||

* Other: a wrong class was predicted, and <math>\text{IOU} > 0.1</math> | |||

* Background: <math>\text{IOU} < 0.1</math> for any given object | |||

We can see that YOLO indeed makes relatively less background errors than R-CNN, due to its usage of global context. Also as described earlier, localization error has a severe uptick in YOLO and is the primary issue with the model design. | |||

=Conclusion= | |||

The YOLO model was introduced in this paper, which presents a novel prediction encoding that allows training and prediction to be unified under a single neural network architecture. YOLO proves in experimental results to be an extremely fast object detector, which may provide suitable accuracy depending on the object detection task at hand. | |||

=References= | =References= | ||

| Line 232: | Line 306: | ||

* <sup>[https://arxiv.org/abs/1504.06066 [7]]</sup>S. Ren, K. He, R. B. Girshick, X. Zhang, and J. Sun. Object detection networks on convolutional feature maps. ''CoRR'', abs/1504.06066, 2015. | * <sup>[https://arxiv.org/abs/1504.06066 [7]]</sup>S. Ren, K. He, R. B. Girshick, X. Zhang, and J. Sun. Object detection networks on convolutional feature maps. ''CoRR'', abs/1504.06066, 2015. | ||

* <sup>[https://www.researchgate.net/publication/269375158_The_Pascal_Visual_Object_Classes_Challenge_A_Retrospective [8]]</sup>M. Everingham, S. M. A. Eslami, L. Van Gool, C. K. I. Williams, J. Winn, and A. Zisserman. The pascal visual object classes challenge: A retrospective. ''International Journal of Computer Vision'', 111(1):98–136, Jan. 2015. | |||

* <sup>[https://link.springer.com/chapter/10.1007/978-3-319-10590-1_5 [9]]</sup>M. A. Sadeghi and D. Forsyth. 30hz object detection with dpm v5. ''In Computer Vision–ECCV 2014, pages 65–79. Springer'', 2014. | |||

* <sup>[https://ieeexplore.ieee.org/document/6909716 [10]]</sup>J. Yan, Z. Lei, L. Wen, and S. Z. Li. The fastest deformable part model for object detection. ''In Computer Vision and Pattern Recognition (CVPR), 2014 IEEE Conference on, pages 2497–2504''. IEEE, 2014. | |||

* <sup>[https://arxiv.org/abs/1506.06981 [11]]</sup>K. Lenc and A. Vedaldi. R-CNN minus R. ''arXiv preprint arXiv:1506.06981'', 2015. | |||

* <sup>[https://arxiv.org/abs/1506.06981 [12]]</sup>D. Hoiem, Y. Chodpathumwan, and Q. Dai. Diagnosing error in object detectors. ''In Computer Vision–ECCV 2012, pages 340–353''. Springer, 2012. 6 | |||

Latest revision as of 15:04, 20 November 2018

The You Only Look Once (YOLO) object detection model is a single-stage object detection network that aims to combine suitable accuracy with extreme speed. Unlike other popular approaches to object detection, such as the sliding-window deformable part model (DPM) [1] or region proposal models such as the R-CNN variants [2][3][4], YOLO is not a multi-step pipeline, which can be difficult to optimize. Instead, it frames object detection as a single regression problem and uses a single convolutional neural network to predict bounding boxes and class probabilities for each box.

Some of the cited benefits of the YOLO model are:

- Extreme speed. This is attributable to framing detection as a single regression problem. The network is trained end to end, and prediction requires only a single network evaluation per image, hence the name "You Only Look Once".

- Learning of generalizable object representations. In experiments, YOLO's average precision degrades more slowly than that of comparable methods such as R-CNN when it is tested on artwork datasets such as Picasso and People-Art, whose pixel-level statistics differ significantly from the natural images used for training.

- Global reasoning during prediction. Since YOLO sees the whole image during training and testing, unlike sliding-window and region-proposal techniques that only see part of the image at a time, its network implicitly encodes global contextual information about classes in addition to their local appearance. As a result, YOLO makes fewer errors in which background patches are mistaken for objects than methods that cannot benefit from this global context.

Presented by

- Mitchell Snaith | msnaith@edu.uwaterloo.ca

Encoding predictions

Perhaps the most important part of the YOLO model is its novel approach to prediction encoding.

The input image is first divided into an [math]\displaystyle{ S \times S }[/math] grid of cells. For each ground-truth bounding box in the input image, the YOLO model takes the center point of the box and assigns it to the grid cell that contains that point. This cell is then responsible for detecting the object.
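The assignment of a ground-truth box to its responsible cell can be sketched as follows. This is a minimal illustration, assuming boxes are given in pixels as a center and a size, [math]\displaystyle{ S = 7 }[/math] as in the paper's PASCAL VOC setting, and the convention that the encoded center is an offset within the cell while the width and height are fractions of the whole image. The function name `encode_box` is hypothetical.

```python
def encode_box(cx, cy, w, h, img_w, img_h, S=7):
    """Return (row, col, x, y, w, h) for a ground-truth box given in pixels.

    (cx, cy) is the box center and (w, h) its size. The returned x, y are
    offsets of the center within the responsible cell, and w, h are
    fractions of the image size (an assumed encoding for illustration).
    """
    # Center as a fraction of the image size, in [0, 1].
    u, v = cx / img_w, cy / img_h
    # Index of the cell containing the center; min() keeps a center lying
    # exactly on the right or bottom image edge inside the grid.
    col = min(int(u * S), S - 1)
    row = min(int(v * S), S - 1)
    # Offset of the center from the cell's top-left corner, in cell units.
    x, y = u * S - col, v * S - row
    return row, col, x, y, w / img_w, h / img_h


# A 64 x 128 box centered at (224, 100) in a 448 x 448 image.
print(encode_box(224, 100, 64, 128, 448, 448))
```

For this example the center falls in row 1, column 3 of the 7 x 7 grid, so that cell alone is responsible for the object.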

For each grid cell, YOLO then predicts [math]\displaystyle{ B }[/math] bounding boxes along with confidence scores. These bounding boxes are predicted directly by the fully connected layers at the end of the single neural network, as shown later in the network architecture. Each bounding box consists of 5 predictions:

[math]\displaystyle{ \begin{align*} &\left. \begin{aligned} x \\ y \end{aligned} \right\rbrace \text{the center of the bounding box relative to the grid cell} \\ &\left. \begin{aligned} w \\ h \end{aligned} \right\rbrace \text{the width and height of the bounding box relative to the whole input} \\ &\left. \begin{aligned} p_c \end{aligned} \right\rbrace \text{the confidence of presence of an object of any class} \end{align*} }[/math]

[math]\displaystyle{ (x, y) }[/math] and [math]\displaystyle{ (w, h) }[/math] are normalized to the range [math]\displaystyle{ (0, 1) }[/math]. Further, [math]\displaystyle{ p_c }[/math] in this context is defined as follows:

[math]\displaystyle{ p_c = P(\text{object}) \cdot \text{IOU}^{\text{truth}}_{\text{pred}} }[/math]

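Here IOU is the intersection over union, also called the Jaccard index[5]: the area of the overlap between two boxes divided by the area of their union, which ranges from 0 for disjoint boxes to 1 for identical boxes. As an evaluation metric, it rewards predicted bounding boxes that closely overlap the ground-truth bounding boxes of labelled objects in the input.

[Figure: examples of intersection over union (iou_examples.png)]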
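A minimal sketch of the IOU computation, assuming axis-aligned boxes stored as corner coordinates `(x_min, y_min, x_max, y_max)`. Because YOLO describes boxes by their center and size, a small conversion helper is included; both function names are illustrative, not taken from the paper.

```python
def center_to_corners(cx, cy, w, h):
    # YOLO describes boxes by center and size; IOU is easiest with corners.
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Width and height of the overlap, clamped at 0 for disjoint boxes.
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two 2 x 2 boxes overlapping in a 1 x 1 square: IOU = 1 / (4 + 4 - 1) = 1/7.
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```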

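Each grid cell also predicts [math]\displaystyle{ C }[/math] conditional class probabilities [math]\displaystyle{ P(C_i | \text{object}) }[/math]. This set of class probabilities is predicted only once per grid cell, regardless of the number of boxes [math]\displaystyle{ B }[/math]. Combining the grid cell division with the bounding box and class probability predictions, the network outputs a tensor of shape [math]\displaystyle{ S \times S \times (B \cdot 5 + C) }[/math]. For PASCAL VOC the paper uses [math]\displaystyle{ S = 7 }[/math], [math]\displaystyle{ B = 2 }[/math] and [math]\displaystyle{ C = 20 }[/math], giving the [math]\displaystyle{ 7 \times 7 \times 30 }[/math] output of the final layer in the architecture table below.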
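The sketch below computes the output shape and shows how one cell's predictions can be turned into per-class scores. In the paper, at test time the conditional class probabilities are multiplied by each box's confidence, giving [math]\displaystyle{ P(C_i | \text{object}) \cdot P(\text{object}) \cdot \text{IOU} = P(C_i) \cdot \text{IOU} }[/math]. The flat memory layout of a cell (B blocks of `(x, y, w, h, confidence)` followed by the C class probabilities) and the function names are assumptions made for illustration.

```python
S, B, C = 7, 2, 20  # the paper's PASCAL VOC setting


def output_shape(S, B, C):
    # Each box contributes 5 numbers (x, y, w, h, confidence); the C class
    # probabilities are shared by all B boxes of a cell.
    return (S, S, B * 5 + C)


def class_specific_scores(cell, B):
    """Score every (box, class) pair of one grid cell.

    `cell` is a flat list of length B * 5 + C, assumed (for illustration)
    to hold B blocks of (x, y, w, h, confidence) followed by the C
    conditional class probabilities P(C_i | object).
    """
    class_probs = cell[B * 5:]
    scores = []
    for j in range(B):
        confidence = cell[j * 5 + 4]  # P(object) * IOU
        # P(C_i | object) * P(object) * IOU = P(C_i) * IOU
        scores.append([p * confidence for p in class_probs])
    return scores


print(output_shape(S, B, C))  # (7, 7, 30)
```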

Neural network architecture

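The network has a fairly conventional structure: convolutional and max-pooling layers perform feature extraction, and the final convolutional layers followed by 2 fully connected layers predict the bounding boxes and class probabilities. In total there are 24 convolutional layers; following GoogLeNet, the authors use 1 x 1 reduction layers followed by 3 x 3 convolutional layers in place of inception modules.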

| Layer | Filters | Stride | Output dimension |
|---|---|---|---|
| Input | - | - | 448 x 448 x 3 |
| Conv 1 | 7 x 7 x 64 | 2 | 224 x 224 x 64 |
| Max Pool 1 | 2 x 2 | 2 | 112 x 112 x 64 |
| Conv 2 | 3 x 3 x 192 | 1 | 112 x 112 x 192 |
| Max Pool 2 | 2 x 2 | 2 | 56 x 56 x 192 |
| Conv 3 | 1 x 1 x 128 | 1 | 56 x 56 x 128 |
| Conv 4 | 3 x 3 x 256 | 1 | 56 x 56 x 256 |
| Conv 5 | 1 x 1 x 256 | 1 | 56 x 56 x 256 |
| Conv 6 | 3 x 3 x 512 | 1 | 56 x 56 x 512 |
| Max Pool 3 | 2 x 2 | 2 | 28 x 28 x 512 |
| Conv 7 | 1 x 1 x 256 | 1 | 28 x 28 x 256 |
| Conv 8 | 3 x 3 x 512 | 1 | 28 x 28 x 512 |
| Conv 9 | 1 x 1 x 256 | 1 | 28 x 28 x 256 |
| Conv 10 | 3 x 3 x 512 | 1 | 28 x 28 x 512 |
| Conv 11 | 1 x 1 x 256 | 1 | 28 x 28 x 256 |
| Conv 12 | 3 x 3 x 512 | 1 | 28 x 28 x 512 |
| Conv 13 | 1 x 1 x 256 | 1 | 28 x 28 x 256 |
| Conv 14 | 3 x 3 x 512 | 1 | 28 x 28 x 512 |
| Conv 15 | 1 x 1 x 512 | 1 | 28 x 28 x 512 |
| Conv 16 | 3 x 3 x 1024 | 1 | 28 x 28 x 1024 |
| Max Pool 4 | 2 x 2 | 2 | 14 x 14 x 1024 |
| Conv 17 | 1 x 1 x 512 | 1 | 14 x 14 x 512 |
| Conv 18 | 3 x 3 x 1024 | 1 | 14 x 14 x 1024 |
| Conv 19 | 1 x 1 x 512 | 1 | 14 x 14 x 512 |
| Conv 20 | 3 x 3 x 1024 | 1 | 14 x 14 x 1024 |
| Conv 21 | 3 x 3 x 1024 | 1 | 14 x 14 x 1024 |
| Conv 22 | 3 x 3 x 1024 | 2 | 7 x 7 x 1024 |
| Conv 23 | 3 x 3 x 1024 | 1 | 7 x 7 x 1024 |
| Conv 24 | 3 x 3 x 1024 | 1 | 7 x 7 x 1024 |
| Fully Connected 1 | - | - | 4096 |
| Fully Connected 2 | - | - | 7 x 7 x 30 |
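The spatial dimensions in the table can be checked with the standard output-size formula for convolution and pooling layers. This sketch assumes a 448 x 448 input, "same" padding (pad = kernel // 2) for every convolution and no padding for the 2 x 2 max-pooling layers; these padding values are assumptions chosen to reproduce the table, not details taken from the paper.

```python
def out_size(size, kernel, stride, pad):
    # Output spatial size of a convolution or pooling layer.
    return (size + 2 * pad - kernel) // stride + 1


# (kernel, stride, pad, filters) per layer in the table; filters is None for
# max pooling, which keeps the channel count. pad = kernel // 2 for the
# convolutions is an assumption ("same" padding); pooling uses no padding.
POOL = (2, 2, 0, None)
LAYERS = (
    [(7, 2, 3, 64), POOL, (3, 1, 1, 192), POOL]
    + [(1, 1, 0, 128), (3, 1, 1, 256), (1, 1, 0, 256), (3, 1, 1, 512), POOL]
    + [(1, 1, 0, 256), (3, 1, 1, 512)] * 4
    + [(1, 1, 0, 512), (3, 1, 1, 1024), POOL]
    + [(1, 1, 0, 512), (3, 1, 1, 1024)] * 2
    + [(3, 1, 1, 1024), (3, 2, 1, 1024), (3, 1, 1, 1024), (3, 1, 1, 1024)]
)


def trace(size=448, channels=3):
    # Follow a square input through every convolution and pooling layer.
    for kernel, stride, pad, filters in LAYERS:
        size = out_size(size, kernel, stride, pad)
        if filters is not None:
            channels = filters
    return size, channels


size, channels = trace()
fc1_inputs = size * size * channels      # flattened input to Fully Connected 1
fc2_outputs = 7 * 7 * (2 * 5 + 20)       # S x S x (B * 5 + C), reshaped to 7 x 7 x 30
print(size, channels, fc1_inputs, fc2_outputs)  # 7 1024 50176 1470
```

The only stride-2 convolutions are Conv 1 and Conv 22; together with the four max-pooling layers they reduce 448 to 7, which is why the grid size is [math]\displaystyle{ S = 7 }[/math].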

Network training details

Activation functions

A linear activation function is used for the final layer, which predicts class probabilities and bounding boxes, while all other layers use the leaky rectified linear unit (leaky ReLU) defined as follows:

[math]\displaystyle{ \phi(x) = x \cdot \mathbf{1}_{\{x \gt 0\}} + 0.1x \cdot \mathbf{1}_{\{x \leq 0\}} }[/math]
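A direct transcription of the formula above, as a scalar sketch in plain Python (a deep learning framework would apply the same rule element-wise). The function names are illustrative.

```python
def leaky_relu(x, slope=0.1):
    # phi(x) = x for x > 0, and 0.1 * x for x <= 0
    return x if x > 0 else slope * x


def linear(x):
    # Identity activation used by the final (prediction) layer.
    return x


print([leaky_relu(v) for v in (-2.0, 0.0, 3.0)])
```

The small negative slope keeps a non-zero gradient for negative inputs, unlike a standard ReLU.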

Layers for feature extraction and detection

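The authors pretrain the first 20 convolutional layers, followed by an average-pooling layer and a fully connected layer, on a large classification dataset, which in the paper's case was the ImageNet 1000-class competition dataset [6]. They then add 4 convolutional layers and 2 fully connected layers with randomly initialized weights to perform detection, since Ren et al. [7] showed that adding both convolutional and fully connected layers to pretrained networks can improve performance. Because detection benefits from fine-grained visual information, the input resolution is also increased from 224 x 224 to 448 x 448 for detection.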

Loss function

The full loss function that YOLO optimizes is defined as follows:

[math]\displaystyle{ \begin{align*} L &= \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbf{1}_{ij}^{\text{obj}} \left[ (x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2 \right] \\ & \ \ \ \ \ + \lambda_{\text{coord}} \sum_{i=0}^{S^2}\sum_{j=0}^{B} \mathbf{1}_{ij}^{\text{obj}} \left[ \left( \sqrt{w_i} - \sqrt{\hat{w}_i} \right)^2 + \left( \sqrt{h_i} - \sqrt{\hat{h}_i}\right)^2 \right] \\ & \ \ \ \ \ + \sum_{i=0}^{S^2}\sum_{j=0}^{B} \mathbf{1}_{ij}^{\text{obj}} (C_i - \hat{C}_i)^2 \\ & \ \ \ \ \ + \lambda_{\text{noobj}} \sum_{i=0}^{S^2}\sum_{j=0}^{B} \mathbf{1}_{ij}^{\text{noobj}} (C_i - \hat{C}_i)^2 \\ & \ \ \ \ \ + \sum_{i=0}^{S^2} \mathbf{1}_{i}^{\text{obj}} \sum_{c \in \text{classes}} (p_i(c) - \hat{p}_i(c))^2, \end{align*} }[/math]

where:

- [math]\displaystyle{ \mathbf{1}_{i}^{\text{obj}} }[/math] is an indicator for an object appearing in the [math]\displaystyle{ i }[/math]-th grid cell

- [math]\displaystyle{ \mathbf{1}_{ij}^{\text{obj}} }[/math] is an indicator for the [math]\displaystyle{ j }[/math]-th bounding box in the [math]\displaystyle{ i }[/math]-th grid cell being responsible for the given prediction

- [math]\displaystyle{ \mathbf{1}_{ij}^{\text{noobj}} }[/math] is an indicator for the [math]\displaystyle{ j }[/math]-th bounding box in the [math]\displaystyle{ i }[/math]-th grid cell not being responsible for the given prediction

- [math]\displaystyle{ \lambda_{\text{coord}} }[/math] is a parameter designed to increase the loss stemming from bounding box coordinate predictions, set in the paper to be [math]\displaystyle{ 5 }[/math]

- [math]\displaystyle{ \lambda_{\text{noobj}} }[/math] is a parameter designed to decrease the loss stemming from confidence predictions for boxes that do not contain objects, set in the paper to be [math]\displaystyle{ 0.5 }[/math]

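- [math]\displaystyle{ (\hat{x}, \hat{y}) }[/math] are the predicted center coordinates of a box, and [math]\displaystyle{ (x, y) }[/math] the corresponding ground-truth values

- [math]\displaystyle{ (\hat{w}, \hat{h}) }[/math] are the predicted width and height of a box, and [math]\displaystyle{ (w, h) }[/math] the corresponding ground-truth values

- [math]\displaystyle{ \hat{C} }[/math] is the predicted confidence score, whose target [math]\displaystyle{ C }[/math] is the IOU of the predicted bounding box with the ground-truth bounding box (and 0 for predictors that are not responsible for an object)

- [math]\displaystyle{ \hat{p}_i(c) }[/math] is the predicted conditional probability of class [math]\displaystyle{ c }[/math] in the [math]\displaystyle{ i }[/math]-th grid cell, and [math]\displaystyle{ p_i(c) }[/math] its target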

This loss function appears quite complex, and may be easier to understand in parts.

The bounding box position loss

[math]\displaystyle{ \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbf{1}_{ij}^{\text{obj}} \left[ (x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2 \right] }[/math]

This term is a sum-of-squared error. Sum-of-squared error was chosen as the underlying loss for the whole model because it is easy to optimize.

The bounding box dimension loss

[math]\displaystyle{ \lambda_{\text{coord}} \sum_{i=0}^{S^2}\sum_{j=0}^{B} \mathbf{1}_{ij}^{\text{obj}} \left[ \left( \sqrt{w_i} - \sqrt{\hat{w}_i} \right)^2 + \left( \sqrt{h_i} - \sqrt{\hat{h}_i}\right)^2 \right] }[/math]

The sum-of-squared error is used here as well, with one adjustment: it is computed on the square roots of the width and height. This is meant to scale deviations appropriately, since a small deviation in a large box should matter less than the same deviation in a small box. The authors note that predicting the square roots of the bounding box dimensions only partially addresses this issue.
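A small numeric sketch of the effect, assuming widths expressed as fractions of the image size: the same absolute error of 0.05 is applied to a small box and to a large box, and the squared error is computed with and without the square root. The function name `dim_loss` is hypothetical.

```python
import math


def dim_loss(true, pred, use_sqrt=True):
    # Squared error on one box dimension, optionally on square roots as in YOLO.
    if use_sqrt:
        return (math.sqrt(true) - math.sqrt(pred)) ** 2
    return (true - pred) ** 2


# The same absolute error of 0.05 on a small box (width 0.10) and a large box (width 0.80).
for use_sqrt in (False, True):
    small = dim_loss(0.10, 0.15, use_sqrt)
    large = dim_loss(0.80, 0.85, use_sqrt)
    print(f"sqrt={use_sqrt}: small={small:.5f} large={large:.5f}")
```

Without the square root both errors cost the same; with it, the small-box error costs roughly six to seven times as much as the large-box error, which moves the loss closer to how much each error actually hurts IOU.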

The bounding box predictor loss

[math]\displaystyle{ \sum_{i=0}^{S^2}\sum_{j=0}^{B} \mathbf{1}_{ij}^{\text{obj}} (C_i - \hat{C}_i)^2 + \lambda_{\text{noobj}} \sum_{i=0}^{S^2}\sum_{j=0}^{B} \mathbf{1}_{ij}^{\text{noobj}} (C_i - \hat{C}_i)^2 }[/math]

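This is the loss on the confidence score of each bounding box predictor, again using sum-of-squared error. The first term applies to predictors that are responsible for an object, and the second, down-weighted by [math]\displaystyle{ \lambda_{\text{noobj}} }[/math], applies to predictors that are not.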

The classification loss

[math]\displaystyle{ \sum_{i=0}^{S^2} \mathbf{1}_{i}^{\text{obj}} \sum_{c \in \text{classes}} (p_i(c) - \hat{p}_i(c))^2 }[/math]

This is the ordinary sum-of-squared error over the class probabilities, with the indicator [math]\displaystyle{ \mathbf{1}_{i}^{\text{obj}} }[/math] added so that classification error is only penalized for cells that contain an object.

Why the [math]\displaystyle{ \lambda }[/math] parameters?

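Naive sum-of-squared error weighs localization error equally with classification error, which may not be ideal. In addition, most grid cells in a typical image contain no object. The confidence targets of these cells are zero, and the gradients pushing their confidences toward zero can overpower the gradients from cells that do contain objects. This can make the model unstable and cause training to diverge early on. The [math]\displaystyle{ \lambda }[/math] parameters described above alleviate both problems: [math]\displaystyle{ \lambda_{\text{coord}} = 5 }[/math] increases the weight of the bounding box coordinate loss, and [math]\displaystyle{ \lambda_{\text{noobj}} = 0.5 }[/math] decreases the weight of the confidence loss for predictors that contain no object.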
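A rough count illustrates the imbalance. With [math]\displaystyle{ S = 7 }[/math] and [math]\displaystyle{ B = 2 }[/math] the network has 98 box predictors per image; for a hypothetical image containing a single object, only one of them is responsible for it. The sketch compares the total weight given to confidence errors of empty predictors with the weight of the single responsible predictor.

```python
S, B = 7, 2
LAMBDA_COORD, LAMBDA_NOOBJ = 5.0, 0.5

predictors = S * S * B          # 98 box predictors per image
objects = 1                     # hypothetical image with a single object
empty = predictors - objects    # predictors whose confidence target is 0

# Total weight given to confidence errors of empty predictors vs. the one
# responsible predictor, without and with the lambda_noobj down-weighting.
print("unweighted:", empty, "vs", objects)
print("weighted:  ", LAMBDA_NOOBJ * empty, "vs", objects)
# Coordinate errors of the responsible predictor are scaled up by lambda_coord.
print("coordinate weight:", LAMBDA_COORD)
```

Even after down-weighting, empty predictors dominate the confidence loss, which is why the coordinate terms are also scaled up rather than relying on [math]\displaystyle{ \lambda_{\text{noobj}} }[/math] alone.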

What is unique about this loss function?

Unlike classifier-based approaches to object detection such as R-CNN and its variants, YOLO is trained on a loss function that directly corresponds to detection performance. Furthermore, the entire model is trained jointly on this single loss function, with no multi-stage pipeline requiring separate training procedures.
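Putting the terms together, the loss for one image can be sketched in plain Python. This is an illustration of the arithmetic under several assumptions, not the authors' implementation: predictions and targets are nested lists (`pred`, `truth` and all helper names are hypothetical), each cell holds at most one ground-truth object, the responsible predictor in a cell is the one whose box has the highest IOU with the ground truth (as described in the paper), and non-responsible predictors in an object cell are treated as [math]\displaystyle{ \mathbf{1}_{ij}^{\text{noobj}} }[/math] predictors with confidence target 0. A real implementation would use a tensor library with automatic differentiation.

```python
import math

S, B, C = 7, 2, 20                  # grid size, boxes per cell, classes
LAMBDA_COORD, LAMBDA_NOOBJ = 5.0, 0.5


def to_corners(row, col, x, y, w, h):
    # (x, y): center offset within cell (row, col); (w, h): fraction of image.
    cx, cy = (col + x) / S, (row + y) / S
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def iou(a, b):
    # Intersection over union of two (x_min, y_min, x_max, y_max) boxes.
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def yolo_loss(pred, truth):
    """Sum-of-squared YOLO loss for one image.

    pred[row][col]  = (boxes, class_probs): B tuples (x, y, w, h, conf)
                      and C predicted class probabilities.
    truth[row][col] = None, or (x, y, w, h, class_index) of the object
                      whose center falls in that cell.
    """
    loss = 0.0
    for row in range(S):
        for col in range(S):
            boxes, class_probs = pred[row][col]
            gt = truth[row][col]
            if gt is None:
                # 1_ij^noobj for every predictor: confidence target is 0.
                loss += sum(LAMBDA_NOOBJ * b[4] ** 2 for b in boxes)
                continue
            gx, gy, gw, gh, cls = gt
            gt_box = to_corners(row, col, gx, gy, gw, gh)
            ious = [iou(to_corners(row, col, *b[:4]), gt_box) for b in boxes]
            # The predictor with the highest IOU is responsible (1_ij^obj).
            r = max(range(len(boxes)), key=lambda j: ious[j])
            for j, (x, y, w, h, conf) in enumerate(boxes):
                if j != r:
                    loss += LAMBDA_NOOBJ * conf ** 2
                    continue
                loss += LAMBDA_COORD * ((gx - x) ** 2 + (gy - y) ** 2)
                # Clamp at 0 so sqrt never sees a negative predicted size.
                loss += LAMBDA_COORD * (
                    (math.sqrt(gw) - math.sqrt(max(w, 0.0))) ** 2
                    + (math.sqrt(gh) - math.sqrt(max(h, 0.0))) ** 2)
                loss += (ious[j] - conf) ** 2   # confidence target C = IOU
            # Classification loss only in cells containing an object (1_i^obj).
            loss += sum(((1.0 if c == cls else 0.0) - p) ** 2
                        for c, p in enumerate(class_probs))
    return loss
```

A perfect prediction (exact box, confidence equal to its IOU of 1, one-hot class probabilities, zero confidence everywhere else) gives a loss of 0; raising the confidence of any empty predictor to 0.2 adds [math]\displaystyle{ 0.5 \cdot 0.2^2 = 0.02 }[/math].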

Network design limitations

- Since each grid cell predicts only [math]\displaystyle{ B }[/math] bounding boxes, the model can detect only a limited number of objects that are close together in an image. For instance, a gaggle of 4 geese whose centers all fall in the same grid cell could not all be detected if [math]\displaystyle{ B \lt 4 }[/math]; in the paper's implementation, [math]\displaystyle{ B = 2 }[/math].

- To some extent, the loss function treats errors in large bounding boxes the same as errors in small bounding boxes, even though a small error in a large box has a much smaller effect on IOU than the same error in a small box; the square-root transform only partially compensates for this. Incorrect localizations are the main source of error in the model.
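The first limitation can be made concrete by counting, for a set of object centers, how many objects exceed the per-cell box limit. This sketch assumes centers given as fractions of the image width and height and uses the hypothetical helper `objects_over_capacity`; it counts only the box limit described above. The paper also notes that each cell predicts a single set of class probabilities, so in practice the constraint on nearby objects is even stricter.

```python
from collections import Counter


def objects_over_capacity(centers, S=7, B=2):
    """Count objects that exceed the B-boxes-per-cell limit.

    `centers` holds (u, v) object centers as fractions of image width and height.
    """
    per_cell = Counter(
        (min(int(v * S), S - 1), min(int(u * S), S - 1)) for u, v in centers
    )
    # Each cell can output at most B boxes, so any extra objects are missed.
    return sum(max(0, n - B) for n in per_cell.values())


# Four geese whose centers all fall in the same cell, with the paper's B = 2.
geese = [(0.50, 0.50), (0.52, 0.48), (0.47, 0.53), (0.55, 0.51)]
print(objects_over_capacity(geese))
```

Here all four centers land in the same cell, so with [math]\displaystyle{ B = 2 }[/math] two of the geese cannot be detected.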

Experimental results

YOLO was compared with other detection systems, both real-time and slower, on the PASCAL VOC 2007 and 2012 detection datasets[8].

Real-Time System Results on PASCAL VOC 2007

| Real-Time Detector | Train | mAP | FPS |
|---|---|---|---|
| 100Hz DPM[9] | 2007 | 16 | 100 |
| 30Hz DPM[9] | 2007 | 26.1 | 30 |
| Fast YOLO | 2007+2012 | 52.7 | 155 |
| YOLO | 2007+2012 | 63.4 | 45 |

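Here DPM is the deformable part model, which, as mentioned above, uses a sliding-window approach to detection. The single convolutional network employed by YOLO yields models that are both faster and more accurate: Fast YOLO runs at 155 FPS with 52.7 mAP, more than twice the accuracy of the 30Hz DPM, and the full YOLO model reaches 63.4 mAP while still running in real time at 45 FPS.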

Less-Than-Real-Time System Results on PASCAL VOC 2007

| Less-Than-Real-Time Detector | Train | mAP | FPS |
|---|---|---|---|
| Fastest DPM[10] | 2007 | 30.4 | 15 |
| R-CNN Minus R[11] | 2007 | 53.5 | 6 |
| Fast R-CNN[3] | 2007+2012 | 70 | 0.5 |
| Faster R-CNN VGG-16[4] | 2007+2012 | 73.2 | 7 |
| Faster R-CNN ZF[4] | 2007+2012 | 62.1 | 18 |
| YOLO VGG-16 | 2007+2012 | 66.4 | 21 |

Here YOLO VGG-16 is the YOLO model trained using VGG-16 in place of the network described above. It is more accurate than the base YOLO model (66.4 vs. 63.4 mAP) but considerably slower (21 vs. 45 FPS), falling below real-time speed.

VOC 2012 results

[Figure: VOC 2012 results (voc_results.png)]

YOLO struggles with small objects, scoring lower in categories such as "sheep", "bottle", and "tv", which is consistent with localization error being the primary weakness of the model design. YOLO's mAP is also not competitive with state-of-the-art models on VOC 2012; however, these results do not account for speed (FPS), where YOLO excels.

Error component analysis compared to Fast R-CNN

[Figure: error components of YOLO and Fast R-CNN (error_components.png)]

In this experiment, the methodology of Hoiem et al. [12] was used to compare the error characteristics of YOLO and Fast R-CNN on VOC 2007. The areas of the pie charts correspond to the following categories:

- Correct: the correct class was predicted, and [math]\displaystyle{ \text{IOU} \gt 0.5 }[/math]

- Localization (Loc.): the correct class was predicted, and [math]\displaystyle{ 0.1 \lt \text{IOU} \lt 0.5 }[/math]

- Similar (Sim.): a similar class was predicted, and [math]\displaystyle{ \text{IOU} \gt 0.1 }[/math]

- Other: a wrong class was predicted, and [math]\displaystyle{ \text{IOU} \gt 0.1 }[/math]

- Background: [math]\displaystyle{ \text{IOU} \lt 0.1 }[/math] for any given object
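The categories above can be expressed as a small decision function. This sketch simplifies the Hoiem et al. procedure: it assumes the caller supplies a single IOU (the detection's best overlap with any ground-truth object) together with flags saying whether the predicted class is correct or similar to that object's class. The function name and its inputs are hypothetical.

```python
def error_category(class_correct, class_similar, iou):
    """Assign one detection to a category from the list above.

    iou is the detection's best IOU with any ground-truth object;
    class_correct / class_similar say whether the predicted class matches,
    or is similar to, that object's class (supplied by the caller).
    """
    # The text leaves the exact boundaries (IOU = 0.1 or 0.5) open; this
    # sketch treats 0.1 as non-background and 0.5 as Localization.
    if iou < 0.1:
        return "Background"
    if class_correct:
        return "Correct" if iou > 0.5 else "Localization"
    return "Similar" if class_similar else "Other"


cases = [(True, False, 0.7), (True, False, 0.3), (False, True, 0.3),
         (False, False, 0.3), (True, False, 0.05)]
for args in cases:
    print(args, error_category(*args))
```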

YOLO makes relatively fewer background errors than Fast R-CNN, owing to its use of global context. As described earlier, however, localization errors make up a much larger share of YOLO's errors, and they are the primary weakness of the model design.

Conclusion

The paper introduced the YOLO model, whose novel prediction encoding allows training and prediction to be unified under a single neural network architecture. In experiments, YOLO proves to be an extremely fast object detector whose accuracy may be sufficient, depending on the object detection task at hand.

References

- [1] P. F. Felzenszwalb, R. B. Girshick, D. McAllester, and D. Ramanan. Object detection with discriminatively trained part based models. IEEE Transactions on Pattern Analysis and Machine Intelligence, 32(9):1627–1645, 2010.

- [2] R. Girshick, J. Donahue, T. Darrell, and J. Malik. Rich feature hierarchies for accurate object detection and semantic segmentation. In Computer Vision and Pattern Recognition (CVPR), 2014 IEEE Conference on, pages 580–587. IEEE, 2014.

- [3] R. B. Girshick. Fast R-CNN. CoRR, abs/1504.08083, 2015.

- [4] S. Ren, K. He, R. Girshick, and J. Sun. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv preprint arXiv:1506.01497, 2015.

- [5] P. Jaccard. Étude comparative de la distribution florale dans une portion des Alpes et des Jura. Bulletin de la Société Vaudoise des Sciences Naturelles, 37: 547–579, 1901.

- [6] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, A. C. Berg, and L. Fei-Fei. ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision (IJCV), 2015.

- [7] S. Ren, K. He, R. B. Girshick, X. Zhang, and J. Sun. Object detection networks on convolutional feature maps. CoRR, abs/1504.06066, 2015.

- [8] M. Everingham, S. M. A. Eslami, L. Van Gool, C. K. I. Williams, J. Winn, and A. Zisserman. The pascal visual object classes challenge: A retrospective. International Journal of Computer Vision, 111(1):98–136, Jan. 2015.

- [9] M. A. Sadeghi and D. Forsyth. 30hz object detection with dpm v5. In Computer Vision–ECCV 2014, pages 65–79. Springer, 2014.

- [10] J. Yan, Z. Lei, L. Wen, and S. Z. Li. The fastest deformable part model for object detection. In Computer Vision and Pattern Recognition (CVPR), 2014 IEEE Conference on, pages 2497–2504. IEEE, 2014.

- [11] K. Lenc and A. Vedaldi. R-CNN minus R. arXiv preprint arXiv:1506.06981, 2015.

- [12] D. Hoiem, Y. Chodpathumwan, and Q. Dai. Diagnosing error in object detectors. In Computer Vision–ECCV 2012, pages 340–353. Springer, 2012.