summary: Difference between revisions

No edit summary |

(→Source) |

||

| (80 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

XGBoost: A Scalable Tree Boosting System | '''''XGBoost: A Scalable Tree Boosting System''''' | ||

== Presented by == | == Presented by == | ||

| Line 10: | Line 11: | ||

Zhang, Wenling | Zhang, Wenling | ||

= Introduction = | |||

The Extreme Gradiant Boosting, also known as XGBoost, has been the leading model that dominates Kaggle, as well as other machine learning and data mining challenges. The main reason to its success is that this tree boosting system is highly scalable , which means it could be used to solve various problems with significantly less time and fewer resources. | |||

This paper is written by the father of XGBoost, Tianqi Chen and Carlos Guestrin. It gives us an overview on how he used algorithmic optimizations as well as some important systems to develop XGBoost. He explained it in the following manner: | |||

1. Based on gradient tree boosting algorithm, Chen and Guestrin make some modifications to the regularized objective function and introduces an end-to-end tree boosting system. He also applies shrinkage and feature subsampling to prevent overfitting, | |||

2. The system supports existing split finding algorithms including exact greedy and approximate algorithms. For weighted data, the writers propose a distributed weighted quantile sketch algorithm which is provable; while for sparse data, he develops a novel sparsity-aware algorithm which is much faster than the naive model. | |||

3. They claim that storing the data in ''blocks'' could effectively reduce sorting cost, and the cache-aware block structure is highly effective for out-of-core tree learning. | |||

4. XGBoost is evaluated by designed experiments with 4 datasets. The paper compares it with adjusted setting as well as other systems to test its performance. | |||

= Tree Boosting= | |||

=== Regularized Learning Objective === | |||

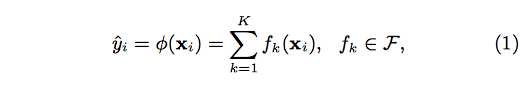

Suppose the given data set has m features and n examples. A tree ensemble model uses K additive functions to predict the output. | |||

[[File:eq1.png|center|eq1.png]] | |||

where F is the space of regression trees(CART). | |||

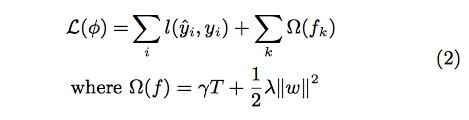

The author suggests the following regularized objective function to make improvements comparing to gradient boosting | |||

[[File:eq2.png|center|eq2.png]] | |||

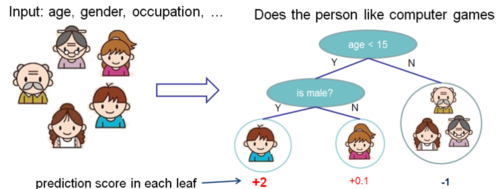

Regression tree contains continuous scores on each leaf, which is different with decision trees we have learned in class. The following 2 figures represent how to use the decision rules in the trees to classify it into the leaves and calculate the final prediction by summing up the score in the corresponding leaves. | |||

[[File:Picture2.1.png|500px| thumb| center| Figure 0: Prediction Score of Tree Structure.]] | |||

[[File:Picture2.2.png|500px|thumb|center|Figure 1: Tree Ensemble Model. The final prediction for a given example is the sum of predictions from each tree. ]] | |||

=== Gradient Tree Boosting === | |||

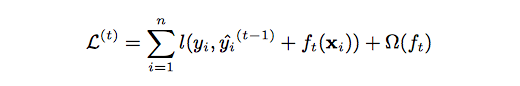

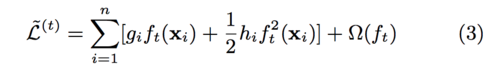

The tree ensemble model (Equation 2.) is trained in an additive manner. We need to add a new <math>f</math> to our objective. | |||

[[File:eq2.2.png|center|eq2.2.png]] | |||

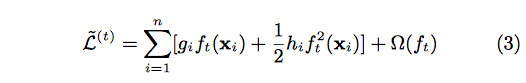

After applying the second-order Taylor expansion to the above formula and removing constant terms, we can finally have | |||

[[File:eq3.png|center|eq3.png]] | |||

Define <math>I_{j} = \{i| q(X_{i}) \}</math> | |||

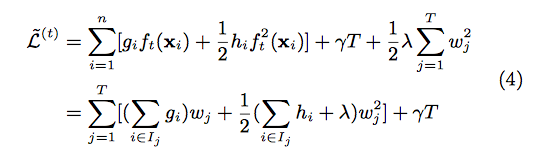

as the instance set of leaf j. We can rewrite Equation (3) as | |||

[[File:eq4.png|center|eq4.png]] | |||

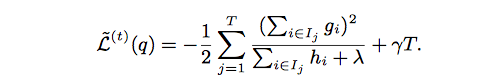

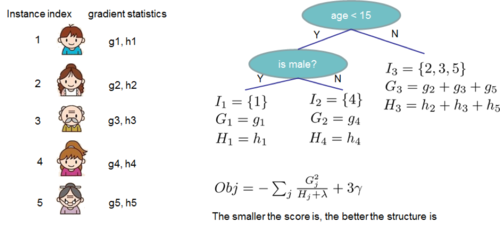

By computing the optimal weight and corresponding optimal value, we can use following equation as the scoring function which can measure the quality of a tree structure. Lower score means better tree structure. | |||

[[File:eq5.png|center|eq5.png]] | |||

The following figure shows an example how this score is calculated. | |||

[[File:Picture2.3.png|500px| thumb|center|Figure 2: Structure Score Calculation. We only need to sum up the gradient and second order gradient statistics on each leaf, then apply the scoring formula to get the quality score. ]] | |||

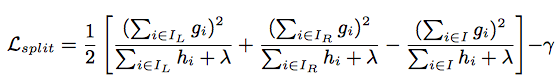

<math> | Normally it is impossible to enumerate all the possible tree structures. Assume <math>I_{L}</math> and <math>I_{R}</math> are the instance sets of left and right nodes after the split, and <math> I = I_{L} + I_{R}</math>. Then the loss reduction after the split is given by | ||

[[File:eq6.png|center]] | |||

=== Shrinkage and Column Subsampling === | |||

In addition to the regularized objective, the paper also introduces two techniques to prevent over-fitting. | |||

Method 1: Shrinkage. After each step of tree boosting, we scale newly added weights by a shrinkage factor η, which reduces the influence of every individual tree and leaves the space for future trees. | |||

Method 2: Column (feature) subsampling. We try to build each individual tree with only a portion of predictors chosen randomly. This procedure encourages the variance between the trees (i.e. no same tree occurs). Implemented in an opensource packages, this method prevents over-fitting, compared with the traditional row subsampling, and speeds up the computations of parallel algorithm as well. | |||

= Split Finding Algorithms = | |||

The ways to find the best split can be realized by two main methods. | |||

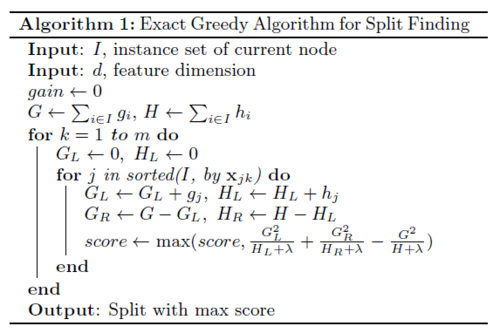

Basic exact greedy algorithm: enumerate over all the possible splits on all the features, which is computationally demanding. Sort the data first, and visit the data to accumulate the gradient statistics greedily. | |||

[[File:exact_greedy.png|500px| thumb|center ]] | |||

[[File:appro_greedy.png|500px| thumb|center]] | |||

[[File: | |||

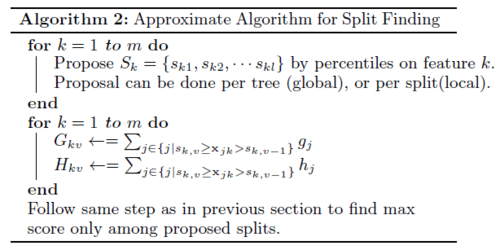

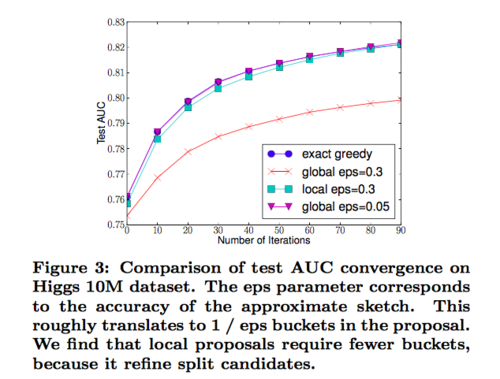

Approximate algorithm: To achieve an effective gradient tree boosting model, The author introduces an approximate algorithm. The algorithm first proposes candidate splitting points by the percentiles of feature distribution, maps the continuous features into buckets split and then finds the best solution among the proposals by the aggregated statistics. | |||

The algorithm can be varied by the time the proposal is given. | |||

The | The global variant: all candidate splits are proposed at the initial phase of tree construction and are used for all split finding. It requires fewer proposal steps. | ||

The local variant: the candidate splits are refined after each split. It requires fewer candidate points, so it is potentially more appropriate for deeper trees. | |||

Methods can be chosen according to users’ needs. | |||

[[File:auc.png|500px| thumb|center]] | |||

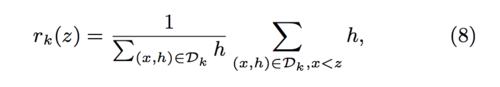

=== Weighted Quantile Sketch === | |||

Motivation: One key step in the approximate algorithm is to propose candidate split points. Usually, percentiles of feature are used to make candidates distribute evenly on the data. | |||

let multi-set | |||

<math> | <math> | ||

\mathbf{ | \mathbf{D}_k = { (\mathbf{x}_{1k}, \mathbf{h}_1), (\mathbf{x}_{2k}, \mathbf{h}_2) , ... (\mathbf{x}_{nk}, \mathbf{h}_n) } | ||

</math> | |||

represent the k-th feature values and second order gradient statistics of each training instances. | |||

The define a rank function <math> | |||

\mathbf{r}_k : \mathbf{R} \rightarrow [0, \infty) | |||

</math>: | |||

[[File:For4.png|500px| thumb|center ]] | |||

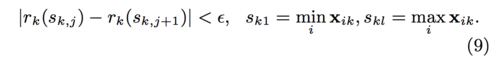

which represents the proportion of instances whose feature value <math> k </math> is smaller than <math> z </math>. The goal is to find candidate split points <math> ({\mathbf{s}_{k1}, \mathbf{s}_{k2}, ... \mathbf{s}_{kl} }) </math> | |||

[[File:For5.png|500px| thumb|center ]] | |||

Here <math> \epsilon </math> is an approximation factor. This means that there is roughly <math> </math> candidate points. | |||

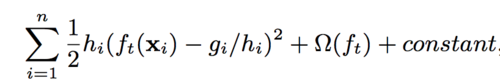

Here each data point is weighted by <math> h_i </math>, since rewriting Eq (3) | |||

[[File:For3.png|500px| thumb|center ]] | |||

we have | |||

[[File:For6.png|500px| thumb|center ]] | |||

which is exactly weighted squared loss with labels <math> g_i/ h_i </math> with <math> h_i </math>. | |||

=== Sparsity--aware Split Finding === | |||

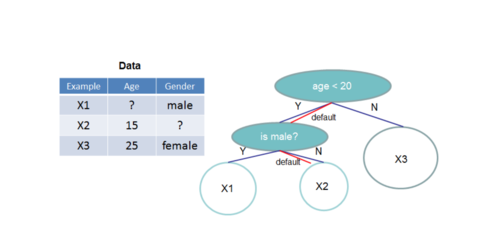

<math> | In real world problem, it is quite common for the input <math> X </math> to be sparse. The reasons that causes for sparsity are following: 1. presence of missing values in the data; 2.frequent zero entries in the statistics; and, 3.artifacts of feature engineering such as one-hot encoding. | ||

</math> | |||

[[File:Figure 4.png|500px| thumb|center | Figure 4. Tree structure with default directions. An example will be classified into the default direction when the feature needed for the split is missing]] | |||

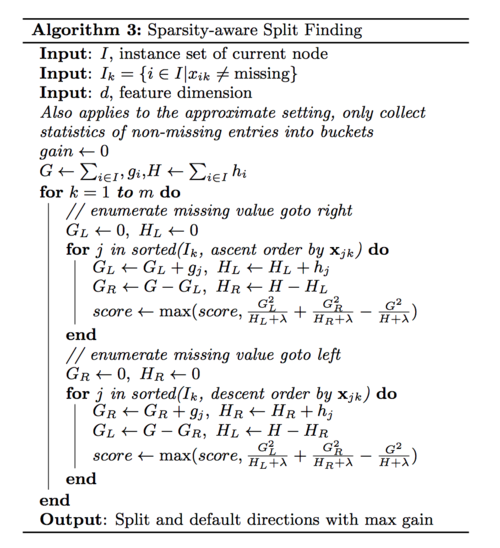

[ | This paper propose to add a default direction in each tree node, which is shown in Figure 4. When a value is missing in the sparse matrix <math> X </math>, the instance is classified into the default direction. There are two choices of default direction in each branch. The optimal default direction is learn from data. Algorithm 3 shows Sparsity aware Split Finding. | ||

[[File:Algorithm 3.png|500px| thumb|center ]] | |||

The important improvement is to only visit the non-missing entries <math> I_k</math>. The presented algorithm treats the non-presence as a missing value and learns the best direction to handle missing values. | |||

XGBoost handles all sparsity patterns in a unified way. More importantly, this paper exploits the sparsity to make computation complexity linear to number of non-missing entries in the input. | |||

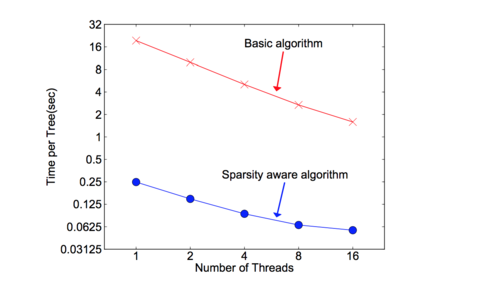

[[File:Figure 5.png|500px| thumb|center | Figure 5. Impact of the sparsity aware algorithm on Allstate-10K. The dataset is sparse mainly due to one-hot encoding. The sparsity aware algorithm is more than 50 times faster than the naive version that does not take sparsity into consideration. ]] | |||

Figure 5 shows the comparison of sparsity aware and a naive implementation on an Allstate-10K dataset. The results shows that sparsity aware algorithm runs 50 times faster than the naive version. This confirms the importance of the sparsity aware algorithm. | |||

= System Design = | |||

=== Column Blocks and Parallelization === | |||

[[File:Figure6.png|500px| thumb|center ]] | |||

1. Feature values are sorted. | |||

2. A block contains one or more feature values. | |||

3. Instance indices are stored in blocks. | |||

4. Missing features are not stored. | |||

5. With column blocks, a parallel split finding algorithm is easy to design. | |||

=== Cache Aware Access === | |||

A thread pre-fetches data from non-continuous memory into a continuous buffer. | |||

The main thread accumulates gradients statistics in the continuous buffer. | |||

=Evaluations= | |||

===System Implementation=== | |||

Implemented as an open source package, XGBoost is flexible, portable and reusable. It also supports various languages and ecosystems. | |||

=== | ===Dataset and Setup=== | ||

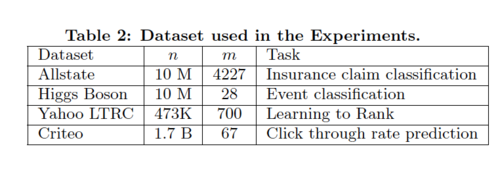

Table 2 summarizes the 4 datasets used in the experiments. | |||

[[File:t2.png|500px| thumb|center ]] | |||

The first three datasets are used for the single machine parallel setting, which is run on a Dell PowerEdge R420 with two 8-core Intel Xeon and memory 64GB. Meanwhile, AWS c3.8xlarge machine is used for experiment in distributed and the out-of-core setting, which uses the criteo dataset. All available cores in the machines are devoted into the experiments, unless otherwise specified. | |||

=== Experiments=== | |||

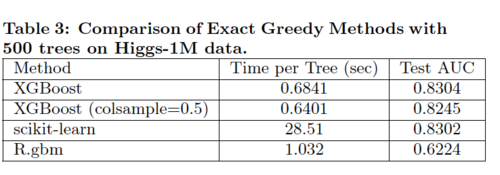

The classification experiment uses Higgs Boson dataset to classify whether an event corresponds to the Higgs boson. The goal is to evaluate XGBoost using Exact Greedy Methods and compare it with column sampling XGBoost and two other well-known systems. Table 3 below summarizes the results. | |||

[[File:t3.png|500px| thumb|center ]] | |||

Overall, both XGBoost and scikit-learn performs better than R.gbm by 25% of Test AUC. In terms of time, however, XGBoost runs significantly faster than both scikit-learn and R.gbm. Note that XGBoost with column sampling performs slightly worse because the number of key features is limited. We could conclude that XGBoost using the exact greedy algorithm without column sampling works the best under this scenario. | |||

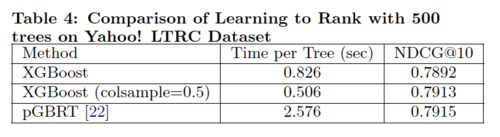

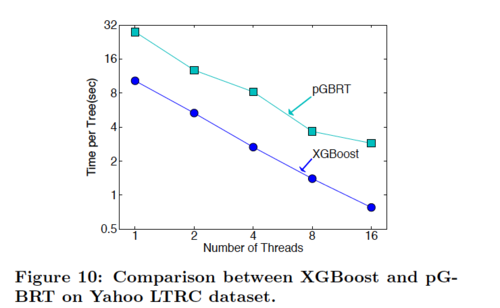

The learning to rank experiment compares XGBoost(using exact greedy algorithm) with pGBRT(using approximate algorithm) in order to evaluate the system's performance on rank problem. | |||

[[File:t4.png|500px| thumb|center ]] | |||

[[File:f10.png|500px| thumb|center ]] | |||

According to Table 4, XGBoost with column sampling performs almost as well as pGBRT, while using only 1/5 of the time. Note that pGBRT is the best previously published system to solve this rank problem. | |||

The out-of-score and distributed experiments demonstrate XGBoost's ability to handle large data with a fast speed. | |||

= | = Conclusion= | ||

This paper propose a scalable end-to-end tree boosting system with cache access patterns, block structure and other essential elements. He also introduces two novel split finding algorithms, one is sparsity-aware algorithm and the other is weighted quantile sketch with proofs. Compared to other popular systems, XGBoost gives state-of-the-art results in different experiment settings, which explains why it it considered one of the best and fastest algorithm for structured or tabular data. | |||

= Source = | = Source = | ||

Chen, T. Guestrin C. (2016) XGBoost: A Scalable Tree Boosting System. Retrieved from http://www.kdd.org/kdd2016/papers/files/rfp0697-chenAemb.pdf. arXiv:1603.02754 [cs.LG]. | |||

Chen, T. (2014) Introduction to Boosted Trees. Retrieved from https://homes.cs.washington.edu/~tqchen/pdf/BoostedTree.pdf. | |||

DMLC. (2015-2016) Introduction to Boosted Trees. Retrieved from https://xgboost.readthedocs.io/en/latest/model.html. | |||

Latest revision as of 17:34, 18 March 2018

XGBoost: A Scalable Tree Boosting System

Presented by

Jiang, Cong

Song, Ziwei

Ye, Zhaoshan

Zhang, Wenling

Introduction

Extreme Gradient Boosting, also known as XGBoost, has been a leading model in Kaggle competitions as well as in other machine learning and data mining challenges. The main reason for its success is that this tree boosting system is highly scalable, which means it can solve a wide range of problems using significantly less time and fewer resources.

This paper was written by Tianqi Chen, the creator of XGBoost, and Carlos Guestrin. It gives an overview of how the authors combined algorithmic optimizations with systems design to develop XGBoost. The paper is organized as follows:

1. Building on the gradient tree boosting algorithm, Chen and Guestrin add a regularization term to the learning objective and introduce an end-to-end tree boosting system. They also apply shrinkage and feature subsampling to prevent overfitting.

2. The system supports existing split finding algorithms, including the exact greedy and approximate algorithms. For weighted data, the authors propose a distributed weighted quantile sketch algorithm with provable guarantees; for sparse data, they develop a novel sparsity-aware algorithm that is much faster than the naive implementation.

3. They claim that storing the data in blocks effectively reduces the sorting cost, and that the cache-aware block structure is highly effective for out-of-core tree learning.

4. XGBoost is evaluated in designed experiments on 4 datasets. The paper compares it under different settings and against other systems to test its performance.

Tree Boosting

Regularized Learning Objective

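Suppose the given data set has m features and n examples. A tree ensemble model uses K additive functions to predict the output (Equation 1):

[math]\displaystyle{ \hat{y}_i = \phi(\mathbf{x}_i) = \sum_{k=1}^{K} f_k(\mathbf{x}_i), \quad f_k \in \mathcal{F} }[/math]

where [math]\displaystyle{ \mathcal{F} }[/math] is the space of regression trees (CART). Each [math]\displaystyle{ f_k }[/math] corresponds to a tree structure [math]\displaystyle{ q }[/math], which maps an example to a leaf index, together with a vector of leaf weights [math]\displaystyle{ w }[/math] for its [math]\displaystyle{ T }[/math] leaves. To improve on standard gradient boosting, the authors minimize the following regularized objective (Equation 2):

[math]\displaystyle{ \mathcal{L}(\phi) = \sum_{i} l(y_i, \hat{y}_i) + \sum_{k} \Omega(f_k), \quad \Omega(f) = \gamma T + \frac{1}{2} \lambda \lVert w \rVert^2 }[/math]

Here [math]\displaystyle{ l }[/math] is a differentiable convex loss function that measures the difference between the prediction [math]\displaystyle{ \hat{y}_i }[/math] and the target [math]\displaystyle{ y_i }[/math], and [math]\displaystyle{ \Omega }[/math] penalizes the complexity of each tree.

A regression tree contains a continuous score on each leaf, which is different from the decision trees we have learned in class. The following two figures show how the decision rules in each tree route an example to a leaf, and how the final prediction is calculated by summing the scores of the corresponding leaves.

Figure 0: Prediction Score of Tree Structure.

Figure 1: Tree Ensemble Model. The final prediction for a given example is the sum of predictions from each tree.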

Gradient Tree Boosting

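The tree ensemble model (Equation 2) is trained in an additive manner. Let [math]\displaystyle{ \hat{y}_i^{(t-1)} }[/math] be the prediction for the i-th example after t-1 iterations. At iteration t we add a new tree [math]\displaystyle{ f_t }[/math] that minimizes

[math]\displaystyle{ \mathcal{L}^{(t)} = \sum_{i=1}^{n} l\left(y_i, \hat{y}_i^{(t-1)} + f_t(\mathbf{x}_i)\right) + \Omega(f_t) }[/math]

After applying a second-order Taylor expansion to the above formula and removing constant terms, we obtain Equation (3):

[math]\displaystyle{ \tilde{\mathcal{L}}^{(t)} = \sum_{i=1}^{n} \left[ g_i f_t(\mathbf{x}_i) + \frac{1}{2} h_i f_t^2(\mathbf{x}_i) \right] + \Omega(f_t) }[/math]

where [math]\displaystyle{ g_i = \partial_{\hat{y}^{(t-1)}} l(y_i, \hat{y}^{(t-1)}) }[/math] and [math]\displaystyle{ h_i = \partial^2_{\hat{y}^{(t-1)}} l(y_i, \hat{y}^{(t-1)}) }[/math] are the first and second order gradient statistics of the loss.

Define [math]\displaystyle{ I_{j} = \{ i \mid q(\mathbf{x}_{i}) = j \} }[/math] as the instance set of leaf j. We can rewrite Equation (3) as Equation (4):

[math]\displaystyle{ \tilde{\mathcal{L}}^{(t)} = \sum_{j=1}^{T} \left[ \left( \sum_{i \in I_j} g_i \right) w_j + \frac{1}{2} \left( \sum_{i \in I_j} h_i + \lambda \right) w_j^2 \right] + \gamma T }[/math]

For a fixed tree structure q, the optimal weight of leaf j is [math]\displaystyle{ w_j^{*} = - \frac{\sum_{i \in I_j} g_i}{\sum_{i \in I_j} h_i + \lambda} }[/math]. Substituting it back gives the corresponding optimal value, Equation (5), which we can use as a scoring function to measure the quality of a tree structure. A lower score means a better tree structure.

[math]\displaystyle{ \tilde{\mathcal{L}}^{(t)}(q) = - \frac{1}{2} \sum_{j=1}^{T} \frac{\left( \sum_{i \in I_j} g_i \right)^2}{\sum_{i \in I_j} h_i + \lambda} + \gamma T }[/math]

The following figure shows an example of how this score is calculated.

Figure 2: Structure Score Calculation. We only need to sum up the gradient and second order gradient statistics on each leaf, then apply the scoring formula to get the quality score.

Normally it is impossible to enumerate all the possible tree structures. Instead, a greedy algorithm starts from a single leaf and iteratively adds branches to the tree. Assume [math]\displaystyle{ I_{L} }[/math] and [math]\displaystyle{ I_{R} }[/math] are the instance sets of the left and right nodes after the split, and [math]\displaystyle{ I = I_{L} \cup I_{R} }[/math]. Then the loss reduction after the split is given by Equation (6):

[math]\displaystyle{ \mathcal{L}_{split} = \frac{1}{2} \left[ \frac{\left( \sum_{i \in I_L} g_i \right)^2}{\sum_{i \in I_L} h_i + \lambda} + \frac{\left( \sum_{i \in I_R} g_i \right)^2}{\sum_{i \in I_R} h_i + \lambda} - \frac{\left( \sum_{i \in I} g_i \right)^2}{\sum_{i \in I} h_i + \lambda} \right] - \gamma }[/math]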
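The leaf weight, structure score and loss reduction described above can be written out in a few lines. The following is a minimal sketch rather than the XGBoost implementation: the function names are made up for illustration, and the toy data assumes the squared error loss l = 1/2 (y - ŷ)^2, for which g_i = ŷ_i - y_i and h_i = 1.

```python
def leaf_weight(G, H, lam):
    """Optimal leaf weight w* = -G / (H + lambda) for gradient sums G and H."""
    return -G / (H + lam)


def structure_score(leaves, lam, gamma):
    """Structure score of a tree; `leaves` holds one (G_j, H_j) pair per leaf."""
    return -0.5 * sum(G * G / (H + lam) for G, H in leaves) + gamma * len(leaves)


def split_gain(GL, HL, GR, HR, lam, gamma):
    """Loss reduction obtained by splitting one leaf into a left and right leaf."""
    def term(G, H):
        return G * G / (H + lam)
    return 0.5 * (term(GL, HL) + term(GR, HR) - term(GL + GR, HL + HR)) - gamma


# Toy data (assumption): squared error loss, initial prediction 0 for all rows.
y = [1.0, 1.2, 3.0, 3.4]
y_hat = [0.0, 0.0, 0.0, 0.0]
g = [p - t for p, t in zip(y_hat, y)]  # first order statistics
h = [1.0] * len(y)                     # second order statistics

# Split the four examples into {0, 1} and {2, 3}.
GL, HL = sum(g[:2]), sum(h[:2])
GR, HR = sum(g[2:]), sum(h[2:])

gain = split_gain(GL, HL, GR, HR, lam=1.0, gamma=0.0)
before = structure_score([(GL + GR, HL + HR)], lam=1.0, gamma=0.0)
after = structure_score([(GL, HL), (GR, HR)], lam=1.0, gamma=0.0)
```

The loss reduction is simply the structure score before the split minus the score after it, so `gain` equals `before - after` here.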

Shrinkage and Column Subsampling

In addition to the regularized objective, the paper also introduces two techniques to prevent over-fitting.

Method 1: Shrinkage. After each step of tree boosting, we scale the newly added weights by a shrinkage factor η, which reduces the influence of each individual tree and leaves room for future trees to improve the model.

Method 2: Column (feature) subsampling. Each individual tree is built with only a randomly chosen portion of the predictors. This procedure encourages diversity among the trees, so that the same tree is unlikely to be built twice. The technique is implemented in open source packages. According to the paper, it prevents over-fitting even more than traditional row subsampling, and it also speeds up the computations of the parallel algorithm.
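Both techniques are simple to express in code. The sketch below is illustrative only: `sample_columns` and `boosted_update` are hypothetical helper names, and `colsample` and `eta` are assumed to be fractions in (0, 1].

```python
import random


def sample_columns(n_features, colsample, rng):
    """Pick a random subset of feature indices to use for one tree."""
    k = max(1, int(round(colsample * n_features)))
    return sorted(rng.sample(range(n_features), k))


def boosted_update(y_hat, tree_output, eta):
    """Shrinkage: add only a fraction eta of the new tree's output."""
    return [p + eta * f for p, f in zip(y_hat, tree_output)]


rng = random.Random(0)
cols = sample_columns(n_features=10, colsample=0.5, rng=rng)
y_hat = boosted_update([0.0, 0.0], [1.0, -2.0], eta=0.1)
```

With `eta=0.1`, each tree moves the prediction only a tenth of the way it proposes, so later trees still have residual error to fit.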

Split Finding Algorithms

There are two main ways to find the best split.

Basic exact greedy algorithm: enumerate all the possible splits on all the features, which is computationally demanding. To do this efficiently, the algorithm first sorts the data by feature value and then visits the data in sorted order, accumulating the gradient statistics needed to score each split.
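For a single feature, the exact greedy scan can be sketched as follows. This is an illustration under assumptions, not the paper's Algorithm 1 verbatim: the function name is hypothetical, the threshold is placed at the midpoint between neighbouring values, and only splits with positive gain are reported.

```python
def best_split_exact(x, g, h, lam=1.0, gamma=0.0):
    """Scan one feature in sorted order; return (best_gain, threshold)."""
    order = sorted(range(len(x)), key=lambda i: x[i])
    G, H = sum(g), sum(h)
    GL = HL = 0.0
    best_gain, best_thr = 0.0, None
    for pos in range(len(order) - 1):
        i, nxt = order[pos], order[pos + 1]
        # Move example i to the left side and update the running sums.
        GL += g[i]
        HL += h[i]
        if x[i] == x[nxt]:
            continue  # no threshold can separate two equal values
        GR, HR = G - GL, H - HL
        gain = 0.5 * (GL ** 2 / (HL + lam)
                      + GR ** 2 / (HR + lam)
                      - G ** 2 / (H + lam)) - gamma
        if gain > best_gain:
            best_gain, best_thr = gain, 0.5 * (x[i] + x[nxt])
    return best_gain, best_thr


# Toy data (assumption): squared error loss with initial prediction 0.
x = [3.0, 1.0, 4.0, 2.0]
y = [3.0, 1.0, 3.4, 1.2]
g = [-t for t in y]
h = [1.0] * len(y)
gain, thr = best_split_exact(x, g, h)
```

Running the same scan over every feature and keeping the largest gain gives the split for the node.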

Approximate algorithm: The exact greedy algorithm becomes impractical when the data does not fit into memory or in the distributed setting, so the authors introduce an approximate algorithm. It first proposes candidate splitting points according to percentiles of the feature distribution, maps the continuous feature values into buckets delimited by these candidates, aggregates the gradient statistics per bucket, and then finds the best split among the proposals based on the aggregated statistics. The algorithm has two variants, depending on when the proposals are made.

The global variant: all candidate splits are proposed in the initial phase of tree construction and are reused for split finding at every level. It requires fewer proposal steps, but usually needs more candidate points because the candidates are not refined after each split.

The local variant: the candidate splits are re-proposed after each split. It requires fewer candidate points, so it is potentially more appropriate for deeper trees. The variant can be chosen according to the user's needs.
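A minimal sketch of the approximate algorithm for one feature is given below. It assumes unweighted percentiles for the proposal step and uses hypothetical function names; calling `propose_candidates` once per tree corresponds to the global variant, while calling it again on the examples of each node corresponds to the local variant.

```python
import bisect


def propose_candidates(x, n_buckets):
    """Propose split candidates at evenly spaced percentiles of one feature."""
    xs = sorted(x)
    n = len(xs)
    cands = {xs[min(n - 1, (j * n) // n_buckets)] for j in range(1, n_buckets)}
    return sorted(cands)


def best_split_approx(x, g, h, candidates, lam=1.0, gamma=0.0):
    """Aggregate statistics per bucket, then score only the candidate splits."""
    n_b = len(candidates) + 1
    Gb, Hb = [0.0] * n_b, [0.0] * n_b
    for xi, gi, hi in zip(x, g, h):
        # Bucket b holds values with candidates[b-1] <= x < candidates[b].
        b = bisect.bisect_right(candidates, xi)
        Gb[b] += gi
        Hb[b] += hi
    G, H = sum(Gb), sum(Hb)
    GL = HL = 0.0
    best_gain, best_thr = 0.0, None
    for b, thr in enumerate(candidates):
        # Everything in buckets 0..b satisfies x < thr and goes left.
        GL += Gb[b]
        HL += Hb[b]
        GR, HR = G - GL, H - HL
        gain = 0.5 * (GL ** 2 / (HL + lam)
                      + GR ** 2 / (HR + lam)
                      - G ** 2 / (H + lam)) - gamma
        if gain > best_gain:
            best_gain, best_thr = gain, thr
    return best_gain, best_thr


# Toy data (assumption): squared error loss with initial prediction 0.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
y = [1.0, 1.0, 1.0, 1.0, 5.0, 5.0, 5.0, 5.0]
g = [-t for t in y]
h = [1.0] * len(y)
cands = propose_candidates(x, n_buckets=4)
gain, thr = best_split_approx(x, g, h, cands)
```

Only the candidate thresholds are scored, so the scan over buckets costs one step per candidate instead of one step per example.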

Weighted Quantile Sketch

Motivation: One key step in the approximate algorithm is to propose candidate split points. Usually, percentiles of a feature are used so that the candidates are distributed evenly over the data.

Let the multi-set [math]\displaystyle{ \mathbf{D}_k = \{ (\mathbf{x}_{1k}, \mathbf{h}_1), (\mathbf{x}_{2k}, \mathbf{h}_2), \ldots, (\mathbf{x}_{nk}, \mathbf{h}_n) \} }[/math] represent the k-th feature values and second order gradient statistics of the training instances. Then define a rank function [math]\displaystyle{ \mathbf{r}_k : \mathbf{R} \rightarrow [0, +\infty) }[/math] as

[math]\displaystyle{ \mathbf{r}_k(z) = \frac{1}{\sum_{(x,h) \in \mathbf{D}_k} h} \sum_{(x,h) \in \mathbf{D}_k,\, x \lt z} h }[/math]

which represents the weighted proportion of instances whose k-th feature value is smaller than [math]\displaystyle{ z }[/math]. The goal is to find candidate split points [math]\displaystyle{ \{ \mathbf{s}_{k1}, \mathbf{s}_{k2}, \ldots, \mathbf{s}_{kl} \} }[/math] such that

[math]\displaystyle{ | \mathbf{r}_k(\mathbf{s}_{k,j}) - \mathbf{r}_k(\mathbf{s}_{k,j+1}) | \lt \epsilon, \quad \mathbf{s}_{k1} = \min_i \mathbf{x}_{ik}, \quad \mathbf{s}_{kl} = \max_i \mathbf{x}_{ik} }[/math]

Here [math]\displaystyle{ \epsilon }[/math] is an approximation factor. This means that there are roughly [math]\displaystyle{ 1/\epsilon }[/math] candidate points. Each data point is weighted by [math]\displaystyle{ h_i }[/math] because, by completing the square, Equation (3) can be rewritten as

[math]\displaystyle{ \sum_{i=1}^{n} \frac{1}{2} h_i \left( f_t(\mathbf{x}_i) + \frac{g_i}{h_i} \right)^2 + \Omega(f_t) + \text{constant} }[/math]

which is exactly a weighted squared loss with labels [math]\displaystyle{ -g_i/ h_i }[/math] and weights [math]\displaystyle{ h_i }[/math].
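The rank function and the candidate condition can be illustrated on a small in-memory example. The sketch below computes exact weighted ranks and picks candidates greedily; it is not the mergeable, prunable quantile sketch data structure that the paper proposes for distributed data, and the function names are hypothetical.

```python
def weighted_rank(pairs, z):
    """r_k(z): share of the total weight h carried by instances with x < z."""
    total = sum(h for _, h in pairs)
    return sum(h for x, h in pairs if x < z) / total


def weighted_candidates(pairs, eps):
    """Greedily choose candidates whose consecutive ranks differ by < eps.

    A single value that carries a weight share of eps or more makes the
    condition unattainable at that point; the value is then kept anyway.
    """
    values = sorted({x for x, _ in pairs})
    total = sum(h for _, h in pairs)
    weight_at = {}
    for x, h in pairs:
        weight_at[x] = weight_at.get(x, 0.0) + h
    # ranks[j] is the normalized weight strictly below values[j].
    ranks, acc = [], 0.0
    for v in values:
        ranks.append(acc / total)
        acc += weight_at[v]
    cands, last = [values[0]], 0
    for j in range(1, len(values)):
        is_last = j == len(values) - 1
        # Keep values[j] if skipping it would open a rank gap of eps or more.
        if is_last or ranks[j + 1] - ranks[last] >= eps:
            cands.append(values[j])
            last = j
    return cands


# (feature value, h) pairs; the value 5.0 carries 40% of the total weight.
pairs = [(1.0, 1.0), (2.0, 1.0), (3.0, 1.0), (4.0, 1.0),
         (5.0, 4.0), (6.0, 1.0), (7.0, 1.0)]
cands = weighted_candidates(pairs, eps=0.25)
```

Because the heavy point at 5.0 is weighted by its h, candidates are placed on both sides of it rather than being spread evenly over the raw feature values.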

Sparsity-aware Split Finding

In real world problems, it is quite common for the input [math]\displaystyle{ X }[/math] to be sparse. There are several possible causes of sparsity: 1. the presence of missing values in the data; 2. frequent zero entries in the statistics; and 3. artifacts of feature engineering such as one-hot encoding.

The paper proposes adding a default direction to each tree node, as shown in Figure 4. When a value is missing in the sparse matrix [math]\displaystyle{ X }[/math], the instance is sent in the default direction. There are two choices of default direction in each branch, and the optimal default direction is learned from the data. Algorithm 3 shows sparsity-aware split finding.

Figure 4. Tree structure with default directions. An example will be classified into the default direction when the feature needed for the split is missing.

The key improvement is to visit only the non-missing entries [math]\displaystyle{ I_k }[/math]. The presented algorithm treats non-presence as a missing value and learns the best direction in which to send missing values. XGBoost handles all sparsity patterns in a unified way. More importantly, the method exploits the sparsity to make the computational complexity linear in the number of non-missing entries in the input.
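The idea of learning a default direction can be sketched for one feature as follows. This is an illustration in the spirit of Algorithm 3, not a transcription of it: missing entries are represented by `None`, the function name is hypothetical, and thresholds are placed at midpoints between neighbouring present values.

```python
def best_split_sparse(x, g, h, lam=1.0, gamma=0.0):
    """Return (gain, threshold, default_direction) for one sparse feature."""
    G, H = sum(g), sum(h)  # totals include the missing entries
    present = sorted((i for i in range(len(x)) if x[i] is not None),
                     key=lambda i: x[i])

    def score(GL, HL):
        GR, HR = G - GL, H - HL
        return 0.5 * (GL ** 2 / (HL + lam)
                      + GR ** 2 / (HR + lam)
                      - G ** 2 / (H + lam)) - gamma

    best = (0.0, None, None)

    # Pass 1: missing values go right. Left sums use present entries only,
    # so the missing entries end up in G - GL without ever being visited.
    GL = HL = 0.0
    for pos in range(len(present) - 1):
        i, nxt = present[pos], present[pos + 1]
        GL += g[i]
        HL += h[i]
        if x[i] == x[nxt]:
            continue
        gain = score(GL, HL)
        if gain > best[0]:
            best = (gain, 0.5 * (x[i] + x[nxt]), "right")

    # Pass 2: missing values go left. Right sums use present entries only.
    GR = HR = 0.0
    for pos in range(len(present) - 1, 0, -1):
        i, prv = present[pos], present[pos - 1]
        GR += g[i]
        HR += h[i]
        if x[i] == x[prv]:
            continue
        gain = score(G - GR, H - HR)
        if gain > best[0]:
            best = (gain, 0.5 * (x[i] + x[prv]), "left")
    return best


# Toy data (assumption): the two rows with a missing value have large targets,
# like the rows with large feature values.
x = [1.0, 2.0, None, 3.0, None, 4.0]
y = [1.0, 1.0, 5.0, 5.0, 5.0, 5.0]
g = [-t for t in y]
h = [1.0] * len(y)
gain, thr, direction = best_split_sparse(x, g, h)
```

In this toy example the learned default direction is "right", because the rows with missing values behave like the rows on the right side of the split. Both passes loop over the present entries only, which is where the linear dependence on the number of non-missing entries comes from.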

Figure 5 compares the sparsity-aware algorithm with a naive implementation on the Allstate-10K dataset, which is sparse mainly due to one-hot encoding. The results show that the sparsity-aware algorithm runs more than 50 times faster than the naive version, which does not take sparsity into consideration. This confirms the importance of the sparsity-aware algorithm.

System Design

Column Blocks and Parallelization

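1. Feature values are sorted once before training, and the sorted layout is reused in later iterations.

2. A block contains one or more feature values.

3. Instance indices are stored in blocks, so that each feature value points back to the gradient statistics of its instance.

4. Missing feature values are not stored.

5. With column blocks, a parallel split finding algorithm is easy to design, because the statistics for each column can be collected in parallel.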
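The points above can be illustrated with a toy in-memory layout. This is only a sketch of the idea, assuming rows are given as dictionaries from feature index to value; the real system stores blocks in a compressed column format, and `build_column_block` is a hypothetical name.

```python
def build_column_block(rows, n_features):
    """Build one block: per feature, a sorted list of (value, row_index).

    `rows` is a list of dicts {feature_index: value}; a feature that is
    absent from a row is treated as missing and is not stored.
    """
    block = []
    for k in range(n_features):
        column = [(row[k], i) for i, row in enumerate(rows) if k in row]
        column.sort()  # sorted once, reused by every boosting iteration
        block.append(column)
    return block


rows = [
    {0: 3.0, 1: 1.0},
    {0: 1.0},           # feature 1 is missing in this row
    {0: 2.0, 1: 0.5},
]
block = build_column_block(rows, n_features=2)

# A split-finding scan walks one column and looks up gradients by row index.
g = [-3.0, -1.0, -2.0]
running_G = []
acc = 0.0
for value, i in block[0]:
    acc += g[i]
    running_G.append(acc)
```

Each column can be scanned independently of the others, which is what makes it natural to hand different columns to different threads.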

Cache Aware Access

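Because the block structure looks up gradient statistics by row index, these memory accesses are non-contiguous. To reduce cache misses, a thread pre-fetches the data from non-contiguous memory into a contiguous buffer. The main thread then accumulates the gradient statistics from the contiguous buffer.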

Evaluations

System Implementation

Implemented as an open source package, XGBoost is flexible, portable and reusable. It also supports various languages and ecosystems.

Dataset and Setup

Table 2 summarizes the 4 datasets used in the experiments.

The first three datasets are used for the single machine parallel setting, which is run on a Dell PowerEdge R420 with two 8-core Intel Xeon processors and 64GB of memory. An AWS c3.8xlarge machine is used for the experiments in the distributed and out-of-core settings, which use the Criteo dataset. All available cores in the machines are devoted to the experiments, unless otherwise specified.

Experiments

The classification experiment uses the Higgs Boson dataset to classify whether an event corresponds to the Higgs boson. The goal is to evaluate XGBoost with the exact greedy algorithm and to compare it with XGBoost with column sampling and with two other well-known systems. Table 3 summarizes the results.

Overall, both XGBoost and scikit-learn perform better than R.gbm, by about 25% in test AUC. In terms of time, however, XGBoost runs significantly faster than both scikit-learn and R.gbm. Note that XGBoost with column sampling performs slightly worse, possibly because the number of important features in this dataset is limited. We can conclude that XGBoost using the exact greedy algorithm without column sampling works best in this scenario.

The learning to rank experiment compares XGBoost (using the exact greedy algorithm) with pGBRT (using the approximate algorithm) in order to evaluate the system's performance on a ranking problem.

According to Table 4, XGBoost with column sampling performs almost as well as pGBRT while using only 1/5 of the time. Note that pGBRT is the best previously published system for this ranking problem.

The out-of-core and distributed experiments demonstrate XGBoost's ability to handle large data quickly.

Conclusion

This paper proposes a scalable end-to-end tree boosting system built on cache-aware access patterns, a block structure and other essential elements. The authors also introduce two novel split finding algorithms: a sparsity-aware algorithm and a weighted quantile sketch with theoretical guarantees. Compared to other popular systems, XGBoost gives state-of-the-art results in different experimental settings, which explains why it is considered one of the best and fastest algorithms for structured or tabular data.

Source

Chen, T., Guestrin, C. (2016) XGBoost: A Scalable Tree Boosting System. Retrieved from http://www.kdd.org/kdd2016/papers/files/rfp0697-chenAemb.pdf. arXiv:1603.02754 [cs.LG].

Chen, T. (2014) Introduction to Boosted Trees. Retrieved from https://homes.cs.washington.edu/~tqchen/pdf/BoostedTree.pdf.

DMLC. (2015-2016) Introduction to Boosted Trees. Retrieved from https://xgboost.readthedocs.io/en/latest/model.html.