stat946w18/Synthetic and natural noise both break neural machine translation


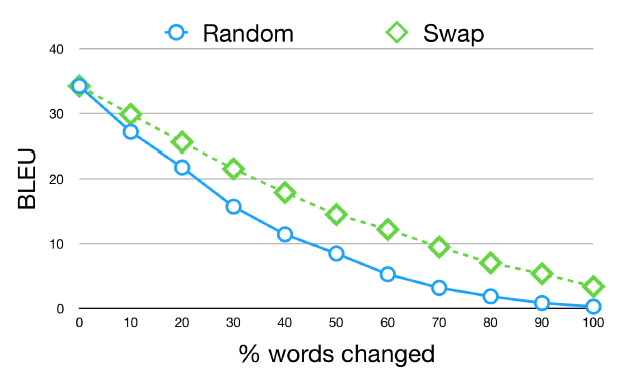

Revision as of 23:40, 28 February 2018

Introduction

- Humans have surprisingly robust language processing systems that can easily overcome typos, e.g.:

Aoccdrnig to a rscheearch at Cmabrigde Uinervtisy, it deosn't mttaer in waht oredr the ltteers in a wrod are, the olny iprmoetnt tihng is taht the frist and lsat ltteer be at the rghit pclae.

- A person's ability to read this text comes as no surprise to the psychology literature:

- Saberi & Perrott (1999) found that this robustness extends to audio as well.

- Rayner et al. (2006) found that in noisier settings reading comprehension slowed by only 11%.

- McCusker et al. (1981) found that the common case of swapping letters often goes unnoticed by the reader.

- Mayall et al. (1997) showed that we rely on word shape.

- Reicher (1969) and Pelli et al. (2003) found that we can switch between whole-word recognition and piecing words together from individual letters, but that the first and last letter positions are required to stay constant for comprehension.

However, NMT (neural machine translation) systems are brittle. For example, the Arabic word

means a blessing for "good morning", whereas

means "hunt" or "slaughter".

Facebook's MT system mistakenly confused these two words, which differ by only one character, a situation that is challenging even for a character-based NMT system.

Figure 1 shows German-to-English translation performance (BLEU) as a function of the percentage of German words modified. Two types of noise are shown: (1) random permutation of the letters in a word and (2) swapping a pair of adjacent letters in the middle of a word. Even small amounts of noise lead to substantial drops in performance.
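The two noise types can be sketched as word-level perturbation functions. This is a minimal illustration, not the paper's code: the function names, keeping the first and last letters fixed for the swap, whitespace tokenization, and how the modified words are sampled are all assumptions.

```python
import random

def swap_noise(word: str, rng: random.Random) -> str:
    """Swap one pair of adjacent interior letters; first and last letters stay put."""
    if len(word) < 4:
        return word  # fewer than two interior letters: nothing to swap
    i = rng.randrange(1, len(word) - 2)  # positions i and i + 1 are both interior
    chars = list(word)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)

def random_noise(word: str, rng: random.Random) -> str:
    """Randomly permute all letters of the word."""
    chars = list(word)
    rng.shuffle(chars)
    return "".join(chars)

def add_noise(sentence: str, fraction: float, noise_fn, seed: int = 0) -> str:
    """Apply noise_fn to a randomly chosen fraction of the whitespace-separated words."""
    rng = random.Random(seed)
    words = sentence.split()
    n_noisy = round(fraction * len(words))
    targets = set(rng.sample(range(len(words)), n_noisy))
    return " ".join(noise_fn(w, rng) if i in targets else w
                    for i, w in enumerate(words))
```

For instance, `add_noise("Die Forschung zeigt deutliche Ergebnisse", 0.4, swap_noise)` perturbs two of the five words; sweeping `fraction` from 0 to 1 mirrors the x-axis of Figure 1.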

BLEU (bilingual evaluation understudy) is an algorithm for evaluating the quality of text which has been machine-translated from one natural language to another. Quality is considered to be the correspondence between a machine's output and that of a human: "the closer a machine translation is to a professional human translation, the better it is". BLEU scores range from 0 to 1 (they are often reported multiplied by 100), and higher is better.
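To make the metric concrete, here is a minimal sentence-level BLEU sketch with a single reference, uniform weights over 1- to 4-grams, the brevity penalty, and no smoothing. It is a simplification for illustration; published results are normally computed at the corpus level with standard tools such as sacreBLEU.

```python
import math
from collections import Counter

def ngrams(tokens: list, n: int) -> Counter:
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    """Sentence-level BLEU: one reference, uniform weights, no smoothing."""
    cand, ref = candidate.split(), reference.split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        # Clip each candidate n-gram count by how often it occurs in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # a zero precision makes the geometric mean zero
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: punish candidates shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)
```

With this sketch, a single swapped letter in a six-word sentence ("the cat sat on teh mat" against "the cat sat on the mat") drops the score from 1.0 to about 0.54, because the error breaks every n-gram that contains the noisy word.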

This paper explores two simple strategies for increasing model robustness:

- using structure-invariant representations (a character CNN representation)

- robust training on noisy data, a form of adversarial training.
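Both strategies can be illustrated in miniature. The sketch below is hypothetical and much simpler than the models in the paper: `bag_of_chars` stands in for a structure-invariant representation (a character-count vector, which ignores letter order entirely, unlike a character CNN), and `noisy_corpus` builds robust training data in which source sentences are perturbed while target translations stay clean. The noise type (scrambling interior letters), the per-word noise probability, and all names are assumptions.

```python
import random
from collections import Counter

def bag_of_chars(word: str) -> Counter:
    """Order-invariant word representation: how often each character occurs."""
    return Counter(word)

def scramble_middle(word: str, rng: random.Random) -> str:
    """Shuffle the interior letters, keeping the first and last letters fixed."""
    if len(word) <= 3:
        return word
    middle = list(word[1:-1])
    rng.shuffle(middle)
    return word[0] + "".join(middle) + word[-1]

def noisy_corpus(pairs, noise_prob: float, seed: int = 0):
    """Yield (source, target) pairs, scrambling each source word with probability noise_prob.

    Only the source side is perturbed, so a model trained on this data
    learns to map noisy input to clean output.
    """
    rng = random.Random(seed)
    for src, tgt in pairs:
        noisy_src = " ".join(
            scramble_middle(w, rng) if rng.random() < noise_prob else w
            for w in src.split()
        )
        yield noisy_src, tgt
```

Here `bag_of_chars("Katze") == bag_of_chars("Kzaet")`, which is what makes such a representation immune to scrambling; the trade-off is that it cannot tell true anagrams apart.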

Adversarial examples

The growing literature on adversarial examples has demonstrated how dangerous it can be for brittle machine learning systems to be used so pervasively in the real world.

The paper devises simple methods for generating adversarial examples for NMT. The authors do not assume any access to the NMT models' gradients, relying instead on cognitively informed and naturally occurring language errors to generate noise.
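Because this noise needs no access to model gradients, generating it is a pure text transformation. A minimal sketch of the "natural" variant, assuming a table that maps correctly spelled words to misspellings people actually produce; the table entries and function name are invented for illustration, and a real table would be built from a corpus of naturally occurring errors.

```python
import random

# Hypothetical table of naturally occurring misspellings; these entries are
# made up for illustration, not taken from any real error corpus.
NATURAL_ERRORS = {
    "because": ["becuase", "becasue"],
    "their": ["thier"],
    "receive": ["recieve"],
}

def natural_noise(sentence: str, errors: dict, seed: int = 0) -> str:
    """Replace each word that has recorded misspellings with a randomly chosen one.

    Black-box: the perturbation depends only on the text and the error table,
    never on the translation model's parameters or gradients.
    """
    rng = random.Random(seed)
    out = []
    for word in sentence.split():
        candidates = errors.get(word)
        out.append(rng.choice(candidates) if candidates else word)
    return " ".join(out)
```

Words without an entry in the table pass through unchanged, so the amount of noise depends on how many words in a sentence have recorded errors.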

MT systems

The authors experiment with three different NMT systems that have access to character information at different levels.

- The fully character-level model of Lee et al. (2017), char2char: a sequence-to-sequence model with attention that is trained to map source characters to target characters.
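To see why character access matters under noise, compare the inputs a word-level and a fully character-level model receive. This hypothetical sketch only covers input tokenization (the vocabularies and function names are assumptions); it is not the char2char implementation, which additionally encodes the character sequence with a neural network.

```python
def char_tokens(sentence: str) -> list[str]:
    """Input units for a fully character-level model: every character, spaces included."""
    return list(sentence)

def word_tokens(sentence: str, vocab: set[str], unk: str = "<unk>") -> list[str]:
    """Input units for a word-level model: out-of-vocabulary words collapse to <unk>."""
    return [w if w in vocab else unk for w in sentence.split()]

# Toy vocabularies built from a single clean sentence (illustrative only).
word_vocab = {"das", "Haus", "ist", "alt"}
char_vocab = set("das Haus ist alt")

noisy = "das Huas ist alt"  # adjacent-letter swap inside "Haus"
print(word_tokens(noisy, word_vocab))  # the noisy word becomes <unk>
print(all(c in char_vocab for c in char_tokens(noisy)))  # every character is still known
```

The word-level view loses the noisy word entirely, while the character-level view still sees only familiar symbols; whether the model can recover the intended word from them is a separate question.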