stat946f11: Difference between revisions


==Introduction==

===Notation===

We will begin with a short section about the notation used in these notes.

Capital letters will be used to denote random variables, and lower case letters will denote observations of those random variables:

* <math>\{X_1,\ X_2,\ \dots,\ X_n\}</math> random variables
* <math>\{x_1,\ x_2,\ \dots,\ x_n\}</math> observations of the random variables

The joint ''probability mass function'' can be written as:

<center><math> P( X_1 = x_1, X_2 = x_2, \dots, X_n = x_n )</math></center>

or as shorthand, we can write this as <math>p( x_1, x_2, \dots, x_n )</math>. In these notes both types of notation will be used.

We can also define a set of random variables <math>X_Q</math> where <math>Q</math> represents a set of subscripts.

===Example===

Let <math>A = \{1,4\}</math>, so <math>X_A = \{X_1, X_4\}</math>; <math>A</math> is the set of indices for the r.v. <math>X_A</math>.<br />

Also let <math>B = \{2\},\ X_B = \{X_2\}</math> so we can write

<center><math>P( X_A | X_B ) = P( X_1 = x_1, X_4 = x_4 | X_2 = x_2 ).\,\!</math></center>

===Graphical Models===

A graph can be represented as a pair consisting of a set of vertices and a set of edges: <math>G = (V, E).</math>

* <math>V</math> is the set of nodes (vertices).
* <math>E</math> is the set of edges.

If the edges have a direction associated with them then we consider the graph to be directed, as in Figure 1; otherwise the graph is undirected, as in Figure 2.

[[File:directed.png|thumb|right|Fig.1 A directed graph.]]

[[File:undirected.png|thumb|right|Fig.2 An undirected graph.]]

We will use graphs in this course to represent the relationships between different random variables.

====Directed graphical models====

In the case of directed graphs, the direction of the arrow indicates "causation". For example:

<br />

<math>A \longrightarrow B</math>: <math>A</math> "causes" <math>B</math>

In this case we must assume that our directed graphs are ''acyclic''. If our causation graph contained a cycle, it would mean that, for example:

* A causes B
* B causes C
* C causes A again.

Clearly, this would confuse the order of the events. An example of a graph with a cycle can be seen in Figure 3. Such a graph could not be used to represent causation. The graph in Figure 4 does not have a cycle, and we can say that the node <math>X_1</math> causes, or affects, <math>X_2</math> and <math>X_3</math> while they in turn cause <math>X_4</math>.

[[File:cyclic.png|thumb|right|Fig.3 A cyclic graph.]]

[[File:acyclic.png|thumb|right|Fig.4 An acyclic graph.]]
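The acyclicity requirement can be checked mechanically. The following is a minimal sketch, not part of the original notes, that uses Kahn's algorithm (repeatedly removing nodes with no remaining parents). The function name <code>is_acyclic</code> is hypothetical, and the edge lists are assumptions taken from the text: the cycle A, B, C described above and the four-node graph in which <math>X_1</math> affects <math>X_2</math> and <math>X_3</math>, which in turn affect <math>X_4</math>.

```python
from collections import deque


def is_acyclic(nodes, edges):
    """Return True if the directed graph (nodes, edges) has no directed cycle."""
    indegree = {v: 0 for v in nodes}
    children = {v: [] for v in nodes}
    for parent, child in edges:
        children[parent].append(child)
        indegree[child] += 1

    # Kahn's algorithm: repeatedly remove nodes that have no remaining parents.
    queue = deque(v for v in nodes if indegree[v] == 0)
    removed = 0
    while queue:
        v = queue.popleft()
        removed += 1
        for c in children[v]:
            indegree[c] -= 1
            if indegree[c] == 0:
                queue.append(c)

    # All nodes can be removed only when the graph contains no cycle.
    return removed == len(nodes)


# "A causes B, B causes C, C causes A again" from the text above.
cycle_result = is_acyclic(["A", "B", "C"], [("A", "B"), ("B", "C"), ("C", "A")])

# The four-node graph as described in the text (edge list is an assumption).
dag_result = is_acyclic(
    ["X1", "X2", "X3", "X4"],
    [("X1", "X2"), ("X1", "X3"), ("X2", "X4"), ("X3", "X4")],
)

print(cycle_result, dag_result)  # False True
```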

We will consider a 1-1 map between our graph's vertices and a set of random variables. Consider the following example that uses boolean random variables. It is important to note that the variables need not be boolean and can indeed be discrete over a range or even continuous.

====Example====

In this example we will consider the possible causes for wet grass.

The wet grass could be caused by rain or by a sprinkler. Rain can be caused by clouds. On the other hand, one cannot literally say that clouds cause the use of a sprinkler. Nevertheless, an arrow is still drawn from clouds to sprinkler, because the presence of clouds does affect whether or not a sprinkler will be used: the more clouds there are, the smaller the probability that one will rely on a sprinkler to water the grass. As this example shows, the relationship between two variables can also act like a negative correlation. The corresponding graphical model is shown in Figure 5.

[[File:wetgrass.png|thumb|right|Fig.5 The wet grass example.]]

This directed graph shows the relation between the 4 random variables. If we have the joint probability <math>P(C,R,S,W)</math>, then we can answer many queries about this system.

This all seems very simple at first, but we must consider the fact that in the discrete case the size of the joint probability table grows exponentially with the number of variables. In the wet grass example we need to define <math>2^4 = 16</math> different probabilities. The table below, which lists all of the probabilities together with the corresponding boolean values of each random variable, is called an ''interaction table''.

'''Example:'''

<center><math>\begin{matrix}
P(C,R,S,W):\\
p_1\\
p_2\\
p_3\\
.\\
.\\
.\\
p_{16} \\ \\
\end{matrix}</math></center>

<br /><br />

<center><math>\begin{matrix}
~~~ & C & R & S & W \\
& 0 & 0 & 0 & 0 \\
& 0 & 0 & 0 & 1 \\
& 0 & 0 & 1 & 0 \\
& . & . & . & . \\
& . & . & . & . \\
& . & . & . & . \\
& 1 & 1 & 1 & 1 \\
\end{matrix}</math></center>

Now consider an example where there are not 4 such random variables but 400. The interaction table would become too large to manage. In fact, it would require <math>2^{400}</math> rows! The purpose of the graph is to help avoid this intractability by considering only the variables that are directly related. In the wet grass example Sprinkler (S) and Rain (R) are not directly related.
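To make the growth concrete, the short sketch below enumerates the rows of the interaction table for the four boolean variables and then counts the rows that 400 boolean variables would require. It is only an illustration; the variable names are assumptions introduced here.

```python
from itertools import product

variables = ["C", "R", "S", "W"]

# Every row of the interaction table is one assignment of 0/1 to (C, R, S, W).
rows = list(product([0, 1], repeat=len(variables)))
print(len(rows))           # 16
print(rows[:3], rows[-1])  # first three rows and the last row

# With 400 boolean variables the table would need 2**400 rows.
rows_for_400 = 2 ** 400
digits_for_400 = len(str(rows_for_400))
print(digits_for_400)      # number of decimal digits in 2**400
```

The enumeration order matches the table above: the last variable (W) changes fastest.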

To solve the intractability problem we need to consider the way those relationships are represented in the graph. Let us define the following parameters. For each vertex <math>i \in V</math>,

* <math>\pi_i</math> is the set of parents of <math>i</math>
** e.g. <math>\pi_R = \{C\}</math> (the parent of <math>R</math> is <math>C</math>)
* <math>f_i(x_i, x_{\pi_i})</math> is a function of the value of <math>i</math> and the values of its parents <math>\pi_i</math> for which it is true that:
** <math>f_i</math> is nonnegative for all <math>i</math>
** <math>\displaystyle\sum_{x_i} f_i(x_i, x_{\pi_i}) = 1</math> for every value of <math>x_{\pi_i}</math>

'''Claim''': There is a family of probability functions <math> P(X_V) = \prod_{i=1}^n f_i(x_i, x_{\pi_i})</math> where this function is nonnegative, and

<center><math>
\sum_{x_1}\sum_{x_2}\cdots\sum_{x_n} P(X_V) = 1
</math></center>

To show the power of this claim we can verify it for our wet grass example:

<center><math>\begin{matrix}
P(X_V) &=& P(C,R,S,W) \\
&=& f(C) f(R,C) f(S,C) f(W,S,R)
\end{matrix}</math></center>

We want to show that

<center><math>\begin{matrix}
\sum_C\sum_R\sum_S\sum_W P(C,R,S,W) & = & \sum_C\sum_R\sum_S\sum_W f(C) f(R,C) f(S,C) f(W,S,R) \\
& = & 1.
\end{matrix}</math></center>

Consider factors <math>f(C)</math>, <math>f(R,C)</math>, <math>f(S,C)</math>: they do not depend on <math>W</math>, so we can write this all as

<center><math>\begin{matrix}
& & \sum_C\sum_R\sum_S f(C) f(R,C) f(S,C) \cancelto{1}{\sum_W f(W,S,R)} \\
& = & \sum_C\sum_R f(C) f(R,C) \cancelto{1}{\sum_S f(S,C)} \\
& = & \sum_C f(C) \cancelto{1}{\sum_R f(R,C)} \\
& = & \sum_C f(C) \\
& = & 1
\end{matrix}</math></center>

since we had already set <math>\displaystyle \sum_{x_i} f_i(x_i, x_{\pi_i}) = 1</math>.
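The same check can be done numerically. The sketch below is an illustration only: the probability values are hypothetical numbers chosen for this example (they do not come from the notes), and the helper names <code>bern</code> and <code>joint</code> are assumptions. Each local factor sums to 1 over its own variable, and the product of the factors then sums to 1 over all 16 assignments.

```python
from itertools import product

# Hypothetical local factors, stored as the probability that the variable is 1.
p_C1 = 0.5                                   # f(C): P(C = 1)
p_R1 = {0: 0.2, 1: 0.8}                      # f(R, C): P(R = 1 | C = c)
p_S1 = {0: 0.5, 1: 0.1}                      # f(S, C): P(S = 1 | C = c)
p_W1 = {(0, 0): 0.0, (0, 1): 0.9,            # f(W, S, R): P(W = 1 | S = s, R = r)
        (1, 0): 0.9, (1, 1): 0.99}           # keyed by (s, r)


def bern(p_one, value):
    """Probability of `value` (0 or 1) when P(value = 1) is p_one."""
    return p_one if value == 1 else 1.0 - p_one


def joint(c, r, s, w):
    """P(C, R, S, W) = f(C) f(R, C) f(S, C) f(W, S, R)."""
    return (bern(p_C1, c) * bern(p_R1[c], r)
            * bern(p_S1[c], s) * bern(p_W1[(s, r)], w))


# Sum the product of factors over all 2**4 assignments.
total = sum(joint(c, r, s, w) for c, r, s, w in product([0, 1], repeat=4))
print(abs(total - 1.0) < 1e-12)
```

Only 1 + 2 + 2 + 4 = 9 numbers are specified here, rather than a full table of 16 probabilities.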

Let us consider another example with a different directed graph. <br />

'''Example:'''<br />

Consider the simple directed graph in Figure 6.

[[File:1234.png|thumb|right|Fig.6 Simple 4 node graph.]]

Assume that we would like to calculate the following: <math> p(x_3|x_2) </math>. We know that we can write the joint probability as:

<center><math> p(x_1,x_2,x_3,x_4) = f(x_1) f(x_2,x_1) f(x_3,x_2) f(x_4,x_3) </math></center>

We can also make use of the definition of conditional probability here:

<center><math>p(x_3|x_2) = \frac{p(x_2,x_3)}{ p(x_2)}</math></center>

<center><math>\begin{matrix}
p(x_2,x_3) & = & \sum_{x_1} \sum_{x_4} p(x_1,x_2,x_3,x_4) ~~~~ \hbox{(marginalization)} \\
& = & \sum_{x_1} \sum_{x_4} f(x_1) f(x_2,x_1) f(x_3,x_2) f(x_4,x_3) \\
& = & \sum_{x_1} f(x_1) f(x_2,x_1) f(x_3,x_2) \cancelto{1}{\sum_{x_4}f(x_4,x_3)} \\
& = & f(x_3,x_2) \sum_{x_1} f(x_1) f(x_2,x_1).
\end{matrix}</math></center>

We also need

<center><math>\begin{matrix}
p(x_2) & = & \sum_{x_1}\sum_{x_3}\sum_{x_4} f(x_1) f(x_2,x_1) f(x_3,x_2) f(x_4,x_3) \\
& = & \sum_{x_1}\sum_{x_3} f(x_1) f(x_2,x_1) f(x_3,x_2) \\
& = & \sum_{x_1} f(x_1) f(x_2,x_1).
\end{matrix}</math></center>

Thus,

<center><math>\begin{matrix}
p(x_3|x_2) & = & \frac{ f(x_3,x_2) \sum_{x_1} f(x_1) f(x_2,x_1)}{ \sum_{x_1} f(x_1) f(x_2,x_1)} \\
& = & f(x_3,x_2).
\end{matrix}</math></center>
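The derivation above can also be checked by brute force. The following sketch is an illustration only, with hypothetical numbers for the four local factors of the chain in Figure 6 and hypothetical helper names. It computes <math>p(x_3|x_2)</math> by marginalizing the full joint and compares the result with the factor <math>f(x_3,x_2)</math>.

```python
from itertools import product

# Hypothetical local factors for the chain x1 -> x2 -> x3 -> x4,
# stored as the probability that the variable equals 1.
p_x1 = 0.6                  # f(x1)
p_x2 = {0: 0.3, 1: 0.7}     # f(x2, x1), keyed by x1
p_x3 = {0: 0.1, 1: 0.8}     # f(x3, x2), keyed by x2
p_x4 = {0: 0.4, 1: 0.9}     # f(x4, x3), keyed by x3


def bern(p_one, value):
    """Probability of `value` (0 or 1) when P(value = 1) is p_one."""
    return p_one if value == 1 else 1.0 - p_one


def joint(x1, x2, x3, x4):
    """p(x1, x2, x3, x4) = f(x1) f(x2, x1) f(x3, x2) f(x4, x3)."""
    return (bern(p_x1, x1) * bern(p_x2[x1], x2)
            * bern(p_x3[x2], x3) * bern(p_x4[x3], x4))


def conditional_x3_given_x2(x3, x2):
    """p(x3 | x2) = p(x2, x3) / p(x2), both obtained by marginalization."""
    numerator = sum(joint(x1, x2, x3, x4)
                    for x1, x4 in product([0, 1], repeat=2))
    denominator = sum(joint(x1, x2, y3, x4)
                      for x1, y3, x4 in product([0, 1], repeat=3))
    return numerator / denominator


for x2, x3 in product([0, 1], repeat=2):
    # The marginalized conditional should agree with the local factor f(x3, x2).
    print(x2, x3, abs(conditional_x3_given_x2(x3, x2) - bern(p_x3[x2], x3)) < 1e-12)
```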

'''Theorem 1.'''

<center><math>f_i(x_i,x_{\pi_i}) = p(x_i|x_{\pi_i}).</math></center>

<center><math> \therefore \ P(X_V) = \prod_{i=1}^n p(x_i|x_{\pi_i}).</math></center>

In our simple graph, the joint probability can be written as

<center><math>p(x_1,x_2,x_3,x_4) = p(x_1)p(x_2|x_1) p(x_3|x_2) p(x_4|x_3).</math></center>

Instead, had we used the chain rule we would have obtained a far more complex equation:

<center><math>p(x_1,x_2,x_3,x_4) = p(x_1) p(x_2|x_1)p(x_3|x_2,x_1) p(x_4|x_3,x_2,x_1).</math></center>

The ''Markov Property'', or ''Memoryless Property'', holds when the variable <math>X_i</math> is directly affected only by <math>X_j</math>, so that the random variable <math>X_i</math> given <math>X_j</math> is independent of every other random variable that precedes it. In our example, the dependence of <math>x_4</math> on its history is completely captured by <math>x_3</math>. <br />

By simply applying the Markov Property to the chain-rule formula we would also have obtained the same result.
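As a sketch of that last remark for the four-node chain of Figure 6, the Markov Property lets us drop every conditioning variable other than the immediate parent:

<center><math>\begin{matrix}
p(x_3|x_2,x_1) & = & p(x_3|x_2), \\
p(x_4|x_3,x_2,x_1) & = & p(x_4|x_3).
\end{matrix}</math></center>

Substituting these two identities into the chain-rule formula gives <math>p(x_1,x_2,x_3,x_4) = p(x_1)p(x_2|x_1)p(x_3|x_2)p(x_4|x_3)</math>, which is the factorization obtained from Theorem 1.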

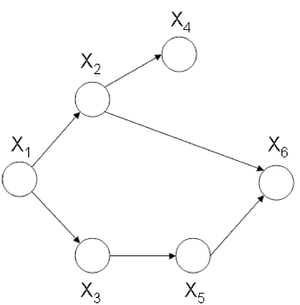

Now let us consider the joint probability of the following six-node example found in Figure 7.

[[File:ClassicExample1.png|thumb|right|Fig.7 Six node example.]]

If we use Theorem 1 it can be seen that the joint probability density function for Figure 7 can be written as follows:

<center><math> P(X_1,X_2,X_3,X_4,X_5,X_6) = P(X_1)P(X_2|X_1)P(X_3|X_1)P(X_4|X_2)P(X_5|X_3)P(X_6|X_5,X_2) </math></center>

Once again, we can apply the Chain Rule and then the Markov Property and arrive at the same result.

<center><math>\begin{matrix}
&& P(X_1,X_2,X_3,X_4,X_5,X_6) \\
&& = P(X_1)P(X_2|X_1)P(X_3|X_2,X_1)P(X_4|X_3,X_2,X_1)P(X_5|X_4,X_3,X_2,X_1)P(X_6|X_5,X_4,X_3,X_2,X_1) \\
&& = P(X_1)P(X_2|X_1)P(X_3|X_1)P(X_4|X_2)P(X_5|X_3)P(X_6|X_5,X_2)
\end{matrix}</math></center>
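The factorization for the six-node example can be written generically from the parent sets alone. The sketch below is an illustration under stated assumptions: the parent sets are read off the factorization above, the variables are taken to be boolean, and <code>p_one</code> is a hypothetical rule that supplies arbitrary valid local probabilities (it is not from the notes). The code checks that the product of local conditionals sums to 1 and compares the number of table entries needed.

```python
from itertools import product

# Parent sets read off the factorization above: node -> tuple of parents.
parents = {1: (), 2: (1,), 3: (1,), 4: (2,), 5: (3,), 6: (5, 2)}


def p_one(i, parent_values):
    """Hypothetical P(X_i = 1 | parents); always lies strictly between 0 and 1."""
    return (1 + i + 2 * sum(parent_values)) / 12.0


def joint(x):
    """x maps node index -> 0/1; returns the product of the local conditionals."""
    prob = 1.0
    for i, pa in parents.items():
        p1 = p_one(i, tuple(x[j] for j in pa))
        prob *= p1 if x[i] == 1 else 1.0 - p1
    return prob


# The product of local conditionals sums to 1 over all 2**6 assignments.
total = sum(joint(dict(zip(range(1, 7), values)))
            for values in product([0, 1], repeat=6))

# Entries in the full interaction table versus entries in the local tables.
table_entries = 2 ** 6
local_entries = sum(2 ** (len(pa) + 1) for pa in parents.values())

print(abs(total - 1.0) < 1e-12, table_entries, local_entries)
```

Each local table has one entry per joint value of a node and its parents, so a node with <math>k</math> boolean parents contributes <math>2^{k+1}</math> entries.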

Revision as of 23:00, 26 September 2011
