stat340s13: Difference between revisions


Revision as of 10:57, 12 June 2013

Introduction, Class 1 - Tuesday, May 7

Course Instructor: Ali Ghodsi

Lecture:

001: TTh 8:30-9:50 MC1085

002: TTh 1:00-2:20 DC1351

Tutorial:

2:30-3:20 Mon M3 1006

Midterm

Monday June 17 2013 from 2:30-3:30

TA(s):

| TA | Day | Time | Location |
|---|---|---|---|
| Lu Cheng | Monday | 3:30-5:30 pm | M3 3108, space 2 |
| Han ShengSun | Tuesday | 4:00-6:00 pm | M3 3108, space 2 |
| Yizhou Fang | Wednesday | 1:00-3:00 pm | M3 3108, space 1 |
| Huan Cheng | Thursday | 3:00-5:00 pm | M3 3111, space 1 |
| Wu Lin | Friday | 11:00 am-1:00 pm | M3 3108, space 1 |

Four Fundamental Problems

1. Classification: Given an input object X, we have a function that takes X and identifies which class Y it belongs to (discrete case).

i.e., given the value of x, we can predict y.

(For example, an image of a fruit can be classified by some algorithm as a picture of either an apple or an orange.)

2. Regression: Same as classification, except that y is continuous rather than discrete (non-discrete case).

3. Clustering: Use common features of objects in the same class or group to form clusters (in this case, x is given and y is unknown).

4. Dimensionality Reduction (aka feature extraction, manifold learning): Used when we have a variable in a high-dimensional space and want to reduce its dimension.

Applications

Most useful when structure of the task is not well understood but can be characterized by a dataset with strong statistical regularity

Examples:

- Computer Vision, Computer Graphics, Finance (fraud detection), Machine Learning

- Search and recommendation (e.g. Google, Amazon)

- Automatic speech recognition, speaker verification

- Text parsing

- Face identification

- Tracking objects in video

- Financial prediction (e.g. credit cards)

- Fraud detection

- Medical diagnosis

Course Information

Prerequisite: (One of CS 116, 126/124, 134, 136, 138, 145, SYDE 221/322) and (STAT 230 with a grade of at least 60% or STAT 240) and (STAT 231 or 241)

Antirequisite: CM 361/STAT 341, CS 437, 457

General Information

- No required textbook

- Recommended: "Simulation" by Sheldon M. Ross

- Computing parts of the course will be done in Matlab, but prior knowledge of Matlab is not essential (will have a tutorial on it)

- First midterm will be held on Monday, June 17 from 2:30 to 3:30

- Announcements and assignments will be posted on Learn.

- Other course material on: http://wikicoursenote.com/wiki/

- Log on to both Learn and wikicoursenote frequently.

- Email all questions and concerns to UWStat340@gmail.com. Do not use your personal email address! Do not email the instructor or TAs about the class directly at their personal accounts!

Wikicourse note (10% of final mark):

When applying for an account on the wikicourse note, please use your Quest account as the login name and your uwaterloo email as the registered email. This is important because the Quest ID will be used to identify the students who make contributions.

Example:

User: questid

Email: questid@uwaterloo.ca

After making the account request, wait several hours before logging in to the account using the password stated in the email. During the first login, students will be asked to create a new password for their account.

As a technical/editorial contributor: Make contributions within 1 week and do not copy the notes on the blackboard.

Must do both. All contributions are now considered general contributions; you must contribute to 50% of lectures for full marks.

- A general contribution can be correctional (fixing mistakes) or technical (expanding content, adding examples, etc) but at least half of your contributions should be technical for full marks

Do not submit copyrighted work without permission; cite original sources. Each time you make a contribution, check mark the table. Marks are calculated on the honour system, although there will be random verifications. If you are caught claiming a contribution you did not make, you will lose marks.

Wikicoursenote contribution form : [1]

- You can submit your contributions multiple times.

- You will be able to edit the response right after submitting.

- Send email to make changes to an old response: uwstat340@gmail.com

Tentative Topics

- Random variable and stochastic process generation

- Discrete-Event Systems

- Variance reduction

- Markov Chain Monte Carlo

Tentative Marking Scheme

| Item | Value |
|---|---|
| Assignments (~6) | 30% |
| WikiCourseNote | 10% |
| Midterm | 20% |
| Final | 40% |

The final exam is going to be closed book and only non-programmable calculators are allowed

A passing mark must be achieved in the final to pass the course

Sampling (Generating random numbers), Class 2 - Thursday, May 9

Introduction

Some people believe that sampling activities such as rolling a die and flipping a coin are not truly random but deterministic, since the result could in principle be calculated using physics and mathematics. In general, a deterministic model produces specific results given certain inputs from the model user, in contrast to a stochastic model, which encapsulates randomness and probabilistic events.

A computer cannot generate truly random numbers because computers can only run algorithms, which are deterministic in nature. They can, however, generate pseudo random numbers: numbers that seem random but are actually deterministic. Although a pseudo random sequence is produced deterministically, its values have the appearance of independent uniform random variables. Being deterministic, pseudo random numbers are also valuable because they are easy to generate and manipulate.

When a random experiment is repeated many times, the average of the results gets close to the expected value, which makes the experiment look deterministic; the result of each individual trial, however, is random. Pseudo random numbers behave in a similar way.

Mod

Let [math]\displaystyle{ n \in \N }[/math] and [math]\displaystyle{ m \in \N^+ }[/math], then by Division Algorithm,

[math]\displaystyle{ \exists q, \, r \in \N \;\text{with}\; 0\leq r \lt m, \; \text{s.t.}\; n = mq+r }[/math],

where [math]\displaystyle{ q }[/math] is called the quotient and [math]\displaystyle{ r }[/math] the remainder. Hence we can define a binary function

[math]\displaystyle{ \mod : \N \times \N^+ \rightarrow \N }[/math] given by [math]\displaystyle{ r:=n \mod m }[/math] which means take the remainder after division by m.

We say that n is congruent to r mod m if n = mq + r, where q is an integer.

If y = ax + b, where x is an integer and [math]\displaystyle{ 0 \leq b \lt a }[/math], then [math]\displaystyle{ b:=y \mod a }[/math].

For example:

4.2 = 3 * 1.1 + 0.9, so 4.2 mod 1.1 = 0.9

30 = 4 * 7 + 2, so 30 mod 7 = 2

25 = 8 * 3 + 1, so 25 mod 3 = 1

Note: [math]\displaystyle{ \mod }[/math] here is different from the modulo congruence relation in [math]\displaystyle{ \Z_m }[/math], which is an equivalence relation instead of a function.

The mod operation can be used to check whether one integer is divisible by another with no remainder. The two integers must satisfy n = mq + r, where m, q, r and n are all integers and r is smaller than m.
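The worked remainders above can be checked with Matlab's built-in mod function. This is only a quick sketch; the variable names r1, r2 and r3 are made up for illustration.

```matlab
% Remainders from the worked examples, using the built-in mod(n, m).
r1 = mod(30, 7);     % 30 = 4*7 + 2
r2 = mod(25, 3);     % 25 = 8*3 + 1
r3 = mod(4.2, 1.1);  % 4.2 = 3*1.1 + 0.9, only approximately 0.9 in floating point
disp([r1 r2 r3])
```

Because 4.2 and 1.1 are not exactly representable in binary, the last remainder should be compared with 0.9 using a small tolerance rather than ==.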

Multiplicative Congruential Algorithm

This is a simple algorithm used to generate uniform pseudo random numbers. It is also referred to as the Linear Congruential Method or Mixed Congruential Method. We define the Linear Congruential Method to be [math]\displaystyle{ x_{k+1}=(ax_k + b) \mod m }[/math], where [math]\displaystyle{ x_k, a, b, m \in \N, \;\text{with}\; a, m \gt 0 }[/math] ([math]\displaystyle{ \mod m }[/math] means taking the remainder after division by m). Given an initial value [math]\displaystyle{ x_0 \in \N }[/math], called the seed, we can obtain values for [math]\displaystyle{ x_1, \, x_2, \, \cdots, x_n }[/math] inductively. The Multiplicative Congruential Method may also refer to the special case where [math]\displaystyle{ b=0 }[/math].

An interesting fact about the Linear Congruential Method is that it is one of the oldest and best-known pseudorandom number generator algorithms. It is very fast and requires minimal memory to retain state. However, it should not be used for applications where high-quality randomness is required, such as cryptographic applications and demanding Monte Carlo simulations. (A Monte Carlo simulation draws a very large number of samples to explore the possible outcomes, including the extreme ones, and this method may not be precise enough for that.)

First consider the following algorithm

[math]\displaystyle{ x_{k+1}=x_{k} \mod m }[/math]

Example

[math]\displaystyle{ \text{Let }x_{0}=10,\,m=3 }[/math]

- [math]\displaystyle{ \begin{align} x_{1} &{}= 10 &{}\mod{3} = 1 \\ x_{2} &{}= 1 &{}\mod{3} = 1 \\ x_{3} &{}= 1 &{}\mod{3} =1 \\ \end{align} }[/math]

[math]\displaystyle{ \ldots }[/math]

Excluding x0, this example generates a series of ones. In general, excluding x0, the algorithm above will always generate a series of the same number, which is less than m. Hence, it has a period of 1. We can modify this algorithm to form the Multiplicative Congruential Algorithm.

Multiplicative Congruential Algorithm

[math]\displaystyle{ x_{k+1}=(a \cdot x_{k} + b) \mod m }[/math] (a little tip: (a*b) mod c = ((a mod c)*(b mod c)) mod c)

Example

[math]\displaystyle{ \text{Let }a=2,\, b=1, \, m=3, \, x_{0} = 10 }[/math]

[math]\displaystyle{ \begin{align}

\text{Step 1: } 0&{}=(2\cdot 10 + 1) &{}\mod 3 \\

\text{Step 2: } 1&{}=(2\cdot 0 + 1) &{}\mod 3 \\

\text{Step 3: } 0&{}=(2\cdot 1 + 1) &{}\mod 3 \\

\end{align} }[/math]

[math]\displaystyle{ \ldots }[/math]

This example generates a sequence with a repeating cycle of two integers.

If we choose the numbers properly, we can get a sequence of "random" numbers. However, how do we find the values of [math]\displaystyle{ a,b, }[/math] and [math]\displaystyle{ m }[/math]? At the very least [math]\displaystyle{ m }[/math] should be a very large, preferably prime number. The larger [math]\displaystyle{ m }[/math] is, the longer the sequence can run before it repeats, and the more likely it is to look "random". This is easier to do in Matlab. In Matlab, the command rand() generates random numbers which are uniformly distributed in the interval (0,1). Earlier versions of Matlab used [math]\displaystyle{ a=7^5, b=0, m=2^{31}-1 }[/math], as recommended in a 1988 paper, "Random Number Generators: Good Ones Are Hard To Find" by Stephen K. Park and Keith W. Miller. (The important part is that [math]\displaystyle{ m }[/math] should be large and prime.)

MatLab Instruction for Multiplicative Congruential Algorithm:

Before you start, you need to clear all existing defined variables and operations:

>>clear all

>>close all

>>a=17

>>b=3

>>m=31

>>x=5

>>mod(a*x+b,m)

ans=26

>>x=mod(a*x+b,m)

(Note:

1. Keep repeating this command and you will get numbers that seem random; this is how the command rand works in a computer.

2. There is a function in MATLAB called RAND to generate a number between 0 and 1.

3. If we would like to generate 1000 and more numbers, we could use a for loop)

(Note on MATLAB commands:

1. clear all: clears all variables.

2. close all: closes all figures.

3. who: displays all defined variables.

4. clc: clears screen.)

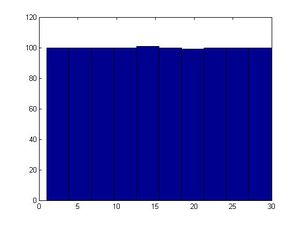

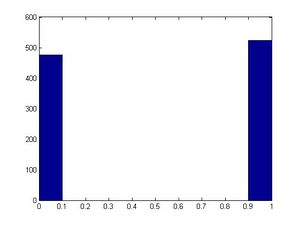

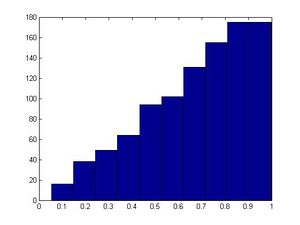

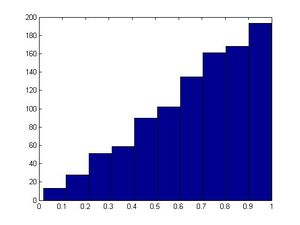

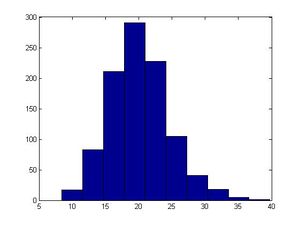

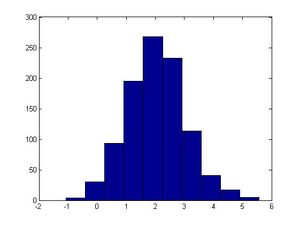

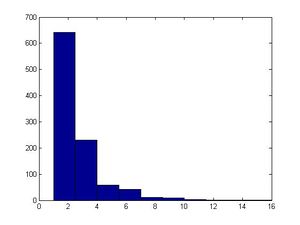

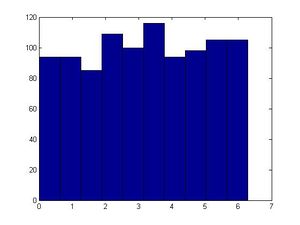

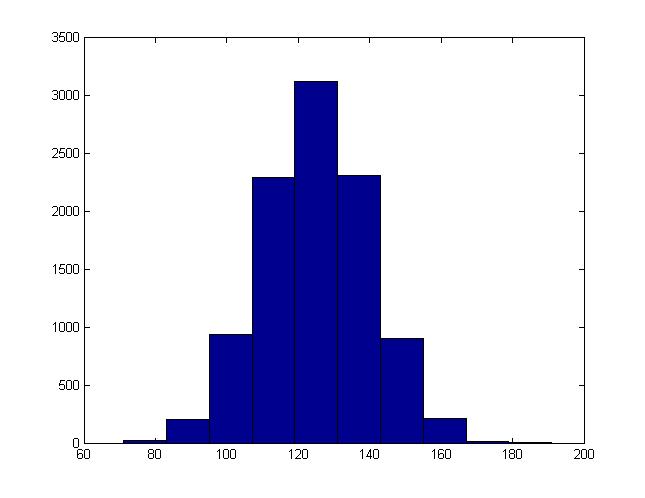

>>a=13

>>b=0

>>m=31

>>x(1)=1

>>for ii=2:1000

x(ii)=mod(a*x(ii-1)+b,m);

end

>>size(x)

ans=1 1000

>>hist(x)

(Note: The semicolon after the x(ii)=mod(a*x(ii-1)+b,m) ensures that Matlab will not print the entire vector of x. It will instead calculate it internally and you will be able to work with it. Adding the semicolon to the end of this line reduces the run time significantly.)

This algorithm involves three integer parameters [math]\displaystyle{ a, b, }[/math] and [math]\displaystyle{ m }[/math] and an initial value, [math]\displaystyle{ x_0 }[/math], called the seed. A sequence of numbers is defined by [math]\displaystyle{ x_{k+1} = (ax_k+ b) \mod m }[/math]. [math]\displaystyle{ \mod m }[/math] means taking the remainder after division by [math]\displaystyle{ m }[/math].

Note: For some bad [math]\displaystyle{ a }[/math] and [math]\displaystyle{ b }[/math], the histogram may not look uniformly distributed.

Note: In MATLAB, hist(x) will generate a graph representing the distribution. Use this function after you run the code to check the real sample distribution.

Example: [math]\displaystyle{ a=13, b=0, m=31 }[/math]

The first 30 numbers in the sequence are a permutation of the integers from 1 to 30, and then the sequence repeats itself, so it is important to choose [math]\displaystyle{ m }[/math] large so that the sequence does not repeat too early. In general, values are between [math]\displaystyle{ 0 }[/math] and [math]\displaystyle{ m-1 }[/math]. If the values are normalized by dividing by [math]\displaystyle{ m-1 }[/math], then the results are approximately uniformly distributed in the interval [0,1]. There is only a finite number of values (30 possible values in this case). In MATLAB, you can use the function "hist(x)" to see if it looks uniformly distributed. We saw that the values from 1 to 30 had the same frequency in the histogram, so we can conclude that they are uniformly distributed.

If [math]\displaystyle{ x_0=1 }[/math], then

- [math]\displaystyle{ x_{k+1} = 13x_{k}\mod{31} }[/math]

So,

- [math]\displaystyle{ \begin{align} x_{0} &{}= 1 \\ x_{1} &{}= 13 \times 1 + 0 &{}\mod{31} = 13 \\ x_{2} &{}= 13 \times 13 + 0 &{}\mod{31} = 14 \\ x_{3} &{}= 13 \times 14 + 0 &{}\mod{31} =27 \\ \end{align} }[/math]

etc.
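As a quick check of the claim that the first 30 values form a permutation of 1 to 30 before the sequence repeats, here is a minimal sketch. The variable names isPermutation, repeatsAfter30 and u are illustrative only.

```matlab
% Period check for x_{k+1} = 13*x_k mod 31 with seed x_0 = 1.
a = 13; b = 0; m = 31;
x = zeros(1, 31);
x(1) = 1;                        % x(1) stores the seed x_0
for k = 2:31
    x(k) = mod(a*x(k-1) + b, m); % x(k) stores x_{k-1}
end
isPermutation = isequal(sort(x(1:30)), 1:30);  % x_0..x_29 hit 1..30 once each
repeatsAfter30 = (x(31) == x(1));              % x_30 equals the seed again
u = x(1:30) / (m - 1);           % normalized values in (0, 1]
```

Both logical flags should come out true for this choice of a and m; a different multiplier may give a shorter cycle.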

For example, with [math]\displaystyle{ a = 3, b = 2, m = 4, x_0 = 1 }[/math], we have:

- [math]\displaystyle{ x_{k+1} = (3x_{k} + 2)\mod{4} }[/math]

So,

- [math]\displaystyle{ \begin{align}

x_{0} &{}= 1 \\

x_{1} &{}= 3 \times 1 + 2 \mod{4} = 1 \\

x_{2} &{}= 3 \times 1 + 2 \mod{4} = 1 \\

\end{align} }[/math]

etc.

FAQ:

1. Why does the example above give values from 1 to 30 and not from 0 to 30?

[math]\displaystyle{ b = 0 }[/math] so in order to have [math]\displaystyle{ x_k }[/math] equal to 0, [math]\displaystyle{ x_{k-1} }[/math] must be 0 (since [math]\displaystyle{ a=13 }[/math] is relatively prime to 31). However, the seed is 1. Hence, we will never observe 0 in the sequence.

Alternatively, {0} and {1,2,...,30} are two orbits of the left multiplication by 13 in the group [math]\displaystyle{ \Z_{31} }[/math].

2. Will the number 31 ever appear? Is there a probability that a number never appears?

The number 31 will never appear. When you perform the operation [math]\displaystyle{ \mod m }[/math], the largest possible answer that you could receive is [math]\displaystyle{ m-1 }[/math]. Whether or not a particular number in the range from 0 to [math]\displaystyle{ m - 1 }[/math] appears in the above algorithm will be dependent on the values chosen for [math]\displaystyle{ a, b }[/math] and [math]\displaystyle{ m }[/math].

Examples:[From Textbook]

If [math]\displaystyle{ x_0=3 }[/math] and [math]\displaystyle{ x_n=(5x_{n-1}+7)\mod 200 }[/math], find [math]\displaystyle{ x_1,\cdots,x_{10} }[/math].

Solution:

[math]\displaystyle{ \begin{align}

x_1 &{}= (5 \times 3+7) &{}\mod{200} &{}= 22 \\

x_2 &{}= 117 &{}\mod{200} &{}= 117 \\

x_3 &{}= 592 &{}\mod{200} &{}= 192 \\

x_4 &{}= 2967 &{}\mod{200} &{}= 167 \\

x_5 &{}= 14842 &{}\mod{200} &{}= 42 \\

x_6 &{}= 74217 &{}\mod{200} &{}= 17 \\

x_7 &{}= 371092 &{}\mod{200} &{}= 92 \\

x_8 &{}= 1855467 &{}\mod{200} &{}= 67 \\

x_9 &{}= 9277342 &{}\mod{200} &{}= 142 \\

x_{10} &{}= 46386717 &{}\mod{200} &{}= 117 \\

\end{align} }[/math]
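The textbook example can be reproduced with a short loop. This is a sketch only; it reduces mod 200 at every step instead of carrying the large intermediate values shown in the table, which gives the same remainders.

```matlab
% x_n = (5*x_{n-1} + 7) mod 200 with seed x_0 = 3.
a = 5; b = 7; m = 200;
x = zeros(1, 11);   % x(1) holds x_0, x(k+1) holds x_k
x(1) = 3;
for k = 2:11
    x(k) = mod(a*x(k-1) + b, m);
end
disp(x(2:end))      % x_1, ..., x_10
```

Note that x_10 = 117 = x_2, so from here on the sequence cycles with period 8, a reminder of why m = 200 is a poor choice.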

Comments:

Typically, it is good to choose [math]\displaystyle{ m }[/math] such that [math]\displaystyle{ m }[/math] is large, and [math]\displaystyle{ m }[/math] is prime. Careful selection of parameters '[math]\displaystyle{ a }[/math]' and '[math]\displaystyle{ b }[/math]' also helps generate relatively "random" output values, where it is harder to identify patterns. For example, when we used a composite (non prime) number such as 40 for [math]\displaystyle{ m }[/math], our results were not satisfactory in producing an output resembling a uniform distribution.

The computed values are between 0 and [math]\displaystyle{ m-1 }[/math]. If the values are normalized by dividing by [math]\displaystyle{ m-1 }[/math], the results are approximately uniformly distributed on the interval [math]\displaystyle{ \left[0,1\right] }[/math] (similar to sampling from a uniform distribution).

From the example shown above, if we want to create a large group of random numbers, it is better to have large [math]\displaystyle{ m }[/math] so that the random values generated will not repeat after several iterations.

There has been research on how to choose parameters that give a good uniform sequence. Many programs give you the option to choose the seed. Sometimes the seed is chosen by the CPU.

In this part we learned how to use R code to work out the relationship between integer division and the remainder. When we use R to run the recurrence over a range such as (1:1000), the graph of the resulting distribution looks like a uniform distribution.

Summary of Multiplicative Congruential Algorithm

Problem: generate Pseudo Random Numbers.

Plan:

- Find integers a, b, m (a large prime) and x0 (the seed).

- [math]\displaystyle{ x_{k+1}=(ax_{k}+b) \mod m }[/math]

Matlab Instruction:

>>clear all

>>close all

>>a=17

>>b=3

>>m=31

>>x=5

>>mod(a*x+b,m)

ans=26

>>x=mod(a*x+b,m)

Inverse Transform Method

This method is useful for generating distributions other than the uniform distribution, such as the exponential distribution and the normal distribution. However, to easily use this method in generating pseudorandom numbers, the target probability distribution must have a cumulative distribution function (cdf) [math]\displaystyle{ F }[/math] with a tractable inverse [math]\displaystyle{ F^{-1} }[/math].

Theorem:

If we want to generate a value of a random variable X, we first generate a random number U, uniformly distributed over (0,1).

Let [math]\displaystyle{ F:\R \rightarrow \left[0,1\right] }[/math] be a cdf. If [math]\displaystyle{ U \sim U\left[0,1\right] }[/math], then the random variable given by [math]\displaystyle{ X:=F^{-1}\left(U\right) }[/math]

follows the distribution function [math]\displaystyle{ F\left(\cdot\right) }[/math],

where [math]\displaystyle{ F^{-1}\left(u\right):=\inf F^{-1}\big(\left[u,+\infty\right)\big) = \inf\{x\in\R | F\left(x\right) \geq u\} }[/math] is the generalized inverse.

Note: [math]\displaystyle{ F }[/math] need not be invertible, but if it is, then the generalized inverse is the same as the inverse in the usual case.

Proof of the theorem:

The generalized inverse satisfies the following:

[math]\displaystyle{ \begin{align}

\forall u \in \left[0,1\right], \, x \in \R, \\

&{} F^{-1}\left(u\right) \leq x &{} \\

\Rightarrow &{} F\Big(F^{-1}\left(u\right)\Big) \leq F\left(x\right) &&{} F \text{ is non-decreasing} \\

\Rightarrow &{} F\Big(\inf \{y \in \R | F(y)\geq u \}\Big) \leq F\left(x\right) &&{} \text{by definition of } F^{-1} \\

\Rightarrow &{} \inf \{F(y) \in [0,1] | F(y)\geq u \} \leq F\left(x\right) &&{} F \text{ is right continuous and non-decreasing} \\

\Rightarrow &{} u \leq F\left(x\right) &&{} \text{by definition of } \inf \\

\Rightarrow &{} x \in \{y \in \R | F(y) \geq u\} &&{} \\

\Rightarrow &{} x \geq \inf \{y \in \R | F(y)\geq u \} &&{} \text{by definition of } \inf \\

\Rightarrow &{} x \geq F^{-1}(u) &&{} \text{by definition of } F^{-1} \\

\end{align} }[/math]

That is [math]\displaystyle{ F^{-1}\left(u\right) \leq x \Leftrightarrow u \leq F\left(x\right) }[/math]

Finally, [math]\displaystyle{ P(X \leq x) = P(F^{-1}(U) \leq x) = P(U \leq F(x)) = F(x) }[/math], since [math]\displaystyle{ U }[/math] is uniform on the unit interval.

This completes the proof.

Therefore, in order to generate a random variable X~F, we can generate U according to U(0,1) and then make the transformation x=[math]\displaystyle{ F^{-1}(U) }[/math].

Note that we can apply F to both sides of the inequality in the proof only because the cdf F is monotonic (non-decreasing). A monotonic function is one that is either non-decreasing for all x, or non-increasing for all x.

Inverse Transform Algorithm for Generating Binomial(n,p) Random Variable

Step 1: Generate a random number [math]\displaystyle{ U }[/math].

Step 2: [math]\displaystyle{ c = \frac {p}{(1-p)} }[/math], [math]\displaystyle{ i = 0 }[/math], [math]\displaystyle{ pr = (1-p)^n }[/math], [math]\displaystyle{ F = pr }[/math]

Step 3: If U<F, set X = i and stop.

Step 4: [math]\displaystyle{ pr = \, {\frac {c(n-i)}{(i+1)}} {pr}, F = F +pr, i = i+1 }[/math]

Step 5: Go to step 3


- Note: These steps can be found in Simulation 5th Ed. by Sheldon Ross.
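The five steps above can be sketched in Matlab as follows. The parameter values n = 10 and p = 0.3, the sample count N, and the guard k < n (which protects against round-off when F ends up marginally below 1) are assumptions added for illustration and are not part of the steps themselves.

```matlab
% Inverse transform for Binomial(n, p), following Steps 1-5.
n = 10; p = 0.3;            % illustrative parameter values (assumed)
N = 5000;                   % number of samples to draw (assumed)
X = zeros(1, N);
c = p / (1 - p);
for s = 1:N
    U = rand;               % Step 1
    k = 0;                  % Step 2 (k plays the role of i)
    pr = (1 - p)^n;
    F = pr;
    while U >= F && k < n   % Step 3: stop as soon as U < F
        pr = c * (n - k) / (k + 1) * pr;   % Step 4
        F = F + pr;
        k = k + 1;
    end                     % Step 5 is the loop itself
    X(s) = k;
end
sampleMean = mean(X);       % should be close to n*p
```

The counter is called k rather than i only to avoid shadowing Matlab's imaginary unit.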

Example: [math]\displaystyle{ f(x) = \lambda e^{-\lambda x} }[/math]

[math]\displaystyle{ F(x)= \int_0^x f(t) dt }[/math]

[math]\displaystyle{ = \int_0^x \lambda e ^{-\lambda t}\ dt }[/math]

[math]\displaystyle{ = \frac{\lambda}{-\lambda}\, e^{-\lambda t}\, \Big|_{0}^{x} }[/math]

[math]\displaystyle{ = -e^{-\lambda x} + e^0 }[/math]

[math]\displaystyle{ =1 - e^{- \lambda x} }[/math]

[math]\displaystyle{ y=1-e^{- \lambda x} }[/math]

[math]\displaystyle{ 1-y=e^{- \lambda x} }[/math]

[math]\displaystyle{ x=-\frac {ln(1-y)}{\lambda} }[/math]

[math]\displaystyle{ y=-\frac {ln(1-x)}{\lambda} }[/math]

[math]\displaystyle{ F^{-1}(x)=-\frac {ln(1-x)}{\lambda} }[/math]

Step 1: Draw U ~U[0,1];

Step 2: [math]\displaystyle{ x=\frac{-ln(1-U)}{\lambda} }[/math]

Example:

[math]\displaystyle{ X= a + (b-a)U }[/math] is uniform on [a, b] when U is uniform on [0, 1]

[math]\displaystyle{ x=\frac{-ln(U)}{\lambda} }[/math] is exponential with parameter [math]\displaystyle{ {\lambda} }[/math] (since 1-U is also uniform on [0, 1], it can be replaced by U)

Example 2:

Given the CDF of X, [math]\displaystyle{ F(x) = x^5 }[/math] for 0 ≤ x ≤ 1, generate X by transforming U~U[0,1].

Sol:

Let [math]\displaystyle{ y=x^5 }[/math], solve for x: [math]\displaystyle{ x=y^\frac {1}{5} }[/math]. Therefore, [math]\displaystyle{ F^{-1} (x) = x^\frac {1}{5} }[/math]

Hence, to obtain a value of x from F(x), we first draw u from the uniform distribution on [0,1], then apply the inverse function of F(x), and set

[math]\displaystyle{ x= u^\frac{1}{5} }[/math]
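A minimal sketch of this transformation in Matlab, with a rough empirical check against the cdf. The sample size n and the check point 0.8 are arbitrary choices for illustration.

```matlab
% Sample from F(x) = x^5 on [0, 1] via X = U^(1/5).
n = 10000;                    % sample size (assumed)
u = rand(1, n);               % U ~ U(0, 1)
x = u.^(1/5);                 % X = F^{-1}(U)
empirical = mean(x <= 0.8);   % fraction of samples with X <= 0.8
theoretical = 0.8^5;          % F(0.8) = 0.32768
```

The two numbers should agree to about two decimal places for a sample of this size; hist(x) should show most of the mass near 1.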

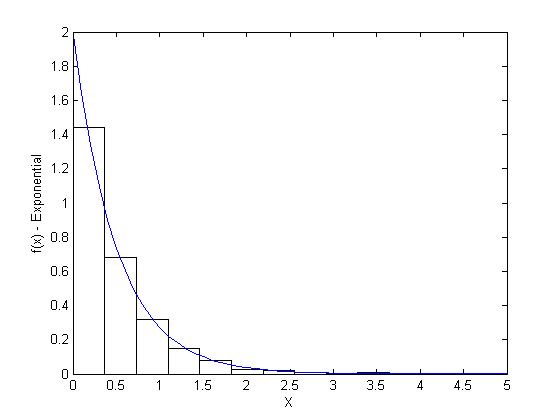

Matlab Code:

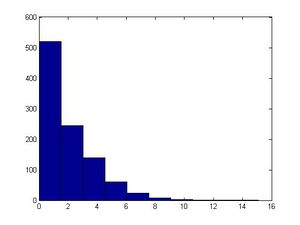

For this exponential distribution, we will let lambda be 2.

Code:

% Set up the parameters.

lam = 2;

n = 1000;

% Generate the random variables.

uni = rand(1,n);

X = -log(uni)/lam;

% Get the values to draw the theoretical curve.

x = 0:.1:5;

% This is a function in the Statistics Toolbox.

y = exppdf(x,1/2);

% Get the information for the histogram.

[N, h] = hist(X,10);

% Change bar heights to make it correspond to the theoretical density.

N = N/(h(2)-h(1))/n;

% Do the plots.

bar(h,N,1,'w')

% hold on retains the current plot and certain axes properties so that subsequent graphing commands add to the existing graph.

hold on

plot(x,y)

% hold off resets axes properties to their defaults before drawing new plots. hold off is the default.

hold off

xlabel('X')

ylabel('f(x) - Exponential')

Example 3:

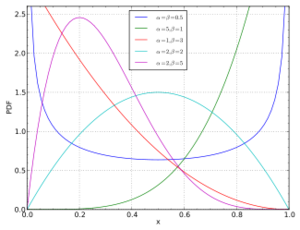

Given u~U[0,1], generate x from BETA(1,β)

Solution:

[math]\displaystyle{ F(x)= 1-(1-x)^\beta }[/math],

[math]\displaystyle{ u= 1-(1-x)^\beta }[/math]

Solve for x:

[math]\displaystyle{ (1-x)^\beta = 1-u }[/math],

[math]\displaystyle{ 1-x = (1-u)^\frac {1}{\beta} }[/math],

[math]\displaystyle{ x = 1-(1-u)^\frac {1}{\beta} }[/math]
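The formula can be tried out with a few lines of Matlab. This is a sketch assuming β = 3; the parameter is stored in a variable named b because beta is also the name of a built-in Matlab function.

```matlab
% Sample from Beta(1, b) via X = 1 - (1 - U)^(1/b).
b = 3;                        % the parameter beta (illustrative value, assumed)
n = 10000;                    % sample size (assumed)
u = rand(1, n);
x = 1 - (1 - u).^(1/b);
sampleMean = mean(x);         % theoretical mean of Beta(1, b) is 1/(1 + b)
```

For b = 3 the sample mean should be close to 0.25.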

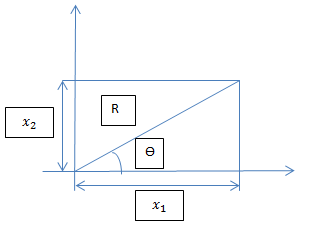

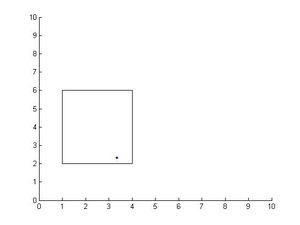

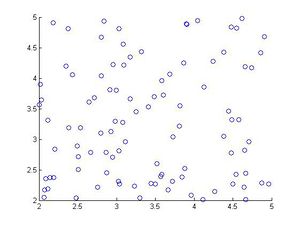

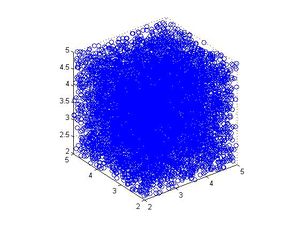

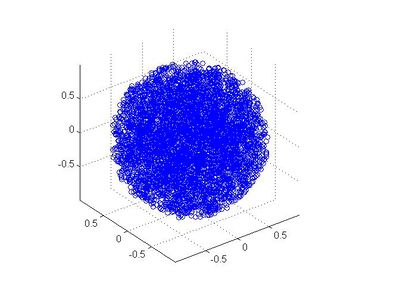

Example 4-Estimating pi:

Let's use rand() and the Monte Carlo method to estimate [math]\displaystyle{ \pi }[/math].

N = total number of points

Nc = total number of points inside the circle

Prob[(x,y) lies in the circle] = [math]\displaystyle{ \frac{\text{Area of circle}}{\text{Area of square}} }[/math]

If we take a square with side length 2, the inscribed circle has radius 1 and area [math]\displaystyle{ \pi }[/math], while the square has area 4.

Thus [math]\displaystyle{ \pi \approx 4*(Nc/N) }[/math]

Matlab Code:

>>N=10000;

>>Nc=0;

>>a=0;

>>b=2;

>>for t=1:N

x=a+(b-a)*rand();

y=a+(b-a)*rand();

if (x-1)^2+(y-1)^2<=1

Nc=Nc+1;

end

end

>>4*(Nc/N)

ans = 3.1380

In Matlab, you can use the following commands: "who" to see which variables you have defined, "clear all" to clear all variables you have defined, and "close all" to close all figures.

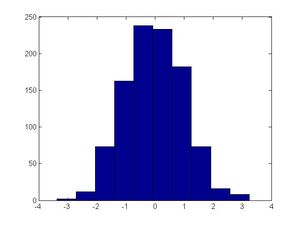

MatLab for Inverse Transform Method:

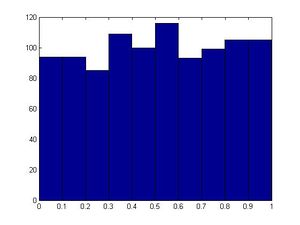

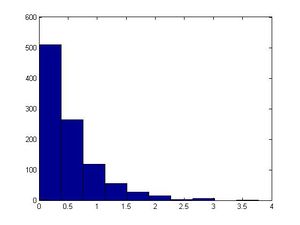

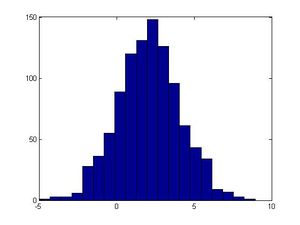

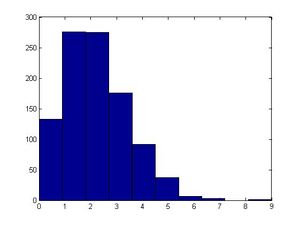

>>u=rand(1,1000);

>>hist(u) % will generate a fairly uniform histogram

% let λ=2 in this example; however, you can choose another value for λ

>>x=(-log(1-u))/2;

>>size(x) % 1000 in size

>>figure

>>hist(x) % exponential

Limitations:

1. This method is limited since not all cdfs are invertible (strictly monotonic), and the generalized inverse is hard to work with.

2. It may be impractical since some CDFs and/or integrals are not easy to compute, such as that of the Gaussian distribution.

We learned how to prove that applying the inverse cdf to a uniform random variable gives the desired distribution, and how to use the uniform distribution to obtain a value of x from F(x). We can also use the uniform distribution with the inverse method to generate other distributions. In the example of estimating π, the points are drawn from a uniform distribution, so each point in the region is almost equally likely. We can also look at the graph to determine what kind of distribution the data belong to.

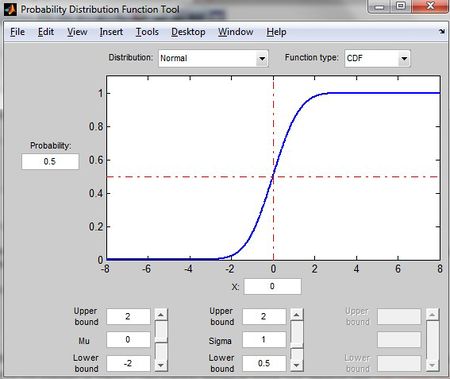

Probability Distribution Function Tool in MATLAB

disttool % shows different distributions

This command allows users to explore the effect of changes of parameters on the plot of either a CDF or PDF.

Changing the values of mu and sigma changes the position and shape of the graph.

(Generating random numbers continue) Class 3 - Tuesday, May 14

Recall the Inverse Transform Method

1. Draw U~U(0,1)

2. [math]\displaystyle{ X = F^{-1}(U) }[/math]

Proof

First note that

[math]\displaystyle{ P(U\leq a)=a, \forall a\in[0,1] }[/math]

- [math]\displaystyle{ P(X\leq x) }[/math]

[math]\displaystyle{ = P(F^{-1}(U)\leq x) }[/math] (since [math]\displaystyle{ X = F^{-1}(U) }[/math])

[math]\displaystyle{ = P(F(F^{-1}(U))\leq F(x)) }[/math] (since [math]\displaystyle{ F(\cdot ) }[/math] is monotonically increasing)

[math]\displaystyle{ = P(U\leq F(x)) }[/math]

[math]\displaystyle{ = F(x) , \text{ where } 0 \leq F(x) \leq 1 }[/math] (since [math]\displaystyle{ U }[/math] has a uniform distribution)

This is the c.d.f. of X.

Note that the CDF of a U(a,b) random variable is:

- [math]\displaystyle{ F(x)= \begin{cases} 0 & \text{for }x \lt a \\[8pt] \frac{x-a}{b-a} & \text{for }a \le x \lt b \\[8pt] 1 & \text{for }x \ge b \end{cases} }[/math]

Thus, for [math]\displaystyle{ U }[/math] ~ [math]\displaystyle{ U(0,1) }[/math], we have [math]\displaystyle{ P(U\leq 1) = 1 }[/math] and [math]\displaystyle{ P(U\leq 1/2) = 1/2 }[/math].

More generally, we see that [math]\displaystyle{ P(U\leq a) = a }[/math].

For this reason, we had [math]\displaystyle{ P(U\leq F(x)) = F(x) }[/math].

Reminder:

This is only for uniform distribution [math]\displaystyle{ U~ \sim~ Unif [0,1] }[/math]

[math]\displaystyle{ P (U \le 1) = 1 }[/math]

[math]\displaystyle{ P (U \le 0.5) = 0.5 }[/math]

[math]\displaystyle{ P (U \le a) = a }[/math]


Note that a single point carries no probability mass, so strict and non-strict inequalities give the same probability, i.e. [math]\displaystyle{ P(U \leq 0.5) = P(U \lt 0.5) }[/math].

LIMITATIONS OF THE INVERSE TRANSFORM METHOD

Though this method is very easy to use and apply, it does have disadvantages/limitations:

1. We have to find the inverse c.d.f. [math]\displaystyle{ F^{-1}(\cdot) }[/math] and make sure it is monotonically increasing; in some cases this function does not exist.

2. For many distributions, such as the Gaussian, it is too difficult to find the inverse cdf, making this method inefficient.

Discrete Case

The same technique can be used for discrete case. We want to generate a discrete random variable x, that has probability mass function:

In general in the discrete case, we have [math]\displaystyle{ x_0, \dots , x_n }[/math] where:

- [math]\displaystyle{ \begin{align}P(X = x_i) &{}= p_i \end{align} }[/math]

- [math]\displaystyle{ x_0 \leq x_1 \leq x_2 \dots \leq x_n }[/math]

- [math]\displaystyle{ \sum p_i = 1 }[/math]

Algorithm for applying Inverse Transformation Method in Discrete Case (Procedure):

1. Define a probability mass function for [math]\displaystyle{ x_{i} }[/math] where i = 0,...,k. Note: k could grow infinitely.

2. Generate a uniform random number U, [math]\displaystyle{ U~ \sim~ Unif [0,1] }[/math]

3. If [math]\displaystyle{ U\leq p_{0} }[/math], deliver [math]\displaystyle{ X = x_{0} }[/math]

4. Else, if [math]\displaystyle{ U\leq p_{0} + p_{1} }[/math], deliver [math]\displaystyle{ X = x_{1} }[/math]

5. Repeat the process until we reach [math]\displaystyle{ U\leq p_{0} + p_{1} + \dots + p_{k} }[/math], and deliver [math]\displaystyle{ X = x_{k} }[/math]
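The procedure can be sketched generically with cumsum and find. The value and probability vectors xs and ps below are made-up example inputs, and forcing the last cumulative sum to 1 is an added guard against round-off, not part of the procedure.

```matlab
% Discrete inverse transform: deliver x_i for the first i with U <= p_0+...+p_i.
xs = [0 1 2];                 % ordered values x_0 <= x_1 <= x_2 (assumed)
ps = [0.3 0.2 0.5];           % their probabilities, summing to 1 (assumed)
cp = cumsum(ps);              % cumulative sums p_0, p_0+p_1, ...
cp(end) = 1;                  % guard against round-off in the last sum
N = 10000;
X = zeros(1, N);
for s = 1:N
    U = rand;
    idx = find(U <= cp, 1, 'first');
    X(s) = xs(idx);
end
freq = [mean(X == 0), mean(X == 1), mean(X == 2)];
```

The relative frequencies in freq should be close to ps; hist(X) gives a visual check.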

Example in class: (Coin Flipping Example)

We want to simulate a coin flip. We have U~U(0,1) and X = 0 or X = 1.

We can define X in terms of U so that:

If U <= 0.5, then X = 0

and if 0.5 < U <= 1, then X =1.

This makes the probability of heads equal to 0.5 and gives a good generator of a random coin flip.

[math]\displaystyle{ U~ \sim~ Unif [0,1] }[/math]

- [math]\displaystyle{ \begin{align} P(X = 0) &{}= 0.5\\ P(X = 1) &{}= 0.5\\ \end{align} }[/math]

The answer is:

- [math]\displaystyle{ x = \begin{cases} 0, & \text{if } U\leq 0.5 \\ 1, & \text{if } 0.5 \lt U \leq 1 \end{cases} }[/math]

- Code

>>for ii=1:1000

u=rand;

if u<0.5

x(ii)=0;

else

x(ii)=1;

end

end

>>hist(x)

Note: The role of the semicolon in Matlab: Matlab will not print the result of a line that ends in a semicolon, and will print it otherwise.

Example in class:

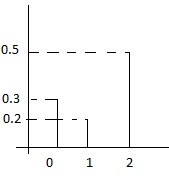

Suppose we have the following discrete distribution:

- [math]\displaystyle{ \begin{align} P(X = 0) &{}= 0.3 \\ P(X = 1) &{}= 0.2 \\ P(X = 2) &{}= 0.5 \end{align} }[/math]

The cumulative distribution function (cdf) for this distribution is then:

- [math]\displaystyle{ F(x) = \begin{cases} 0, & \text{if } x \lt 0 \\ 0.3, & \text{if } 0 \leq x \lt 1 \\ 0.5, & \text{if } 1 \leq x \lt 2 \\ 1, & \text{if } x \ge 2 \end{cases} }[/math]

Then we can generate numbers from this distribution like this, given [math]\displaystyle{ U \sim~ Unif[0, 1] }[/math]:

- [math]\displaystyle{ x = \begin{cases} 0, & \text{if } U\leq 0.3 \\ 1, & \text{if } 0.3 \lt U \leq 0.5 \\ 2, & \text{if } 0.5 \lt U\leq 1 \end{cases} }[/math]

"Procedure"

1. Draw U ~ U(0,1)

2. If U<=0.3, deliver x=0

3. Else if 0.3<U<=0.5, deliver x=1

4. Else (0.5<U<=1), deliver x=2

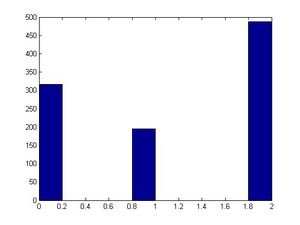

- Code (as shown in class)

Use Editor window to edit the code

>>close all

>>clear all

>>for ii=1:1000

u=rand;

if u<=0.3

x(ii)=0;

elseif u<=0.5

x(ii)=1;

else

x(ii)=2;

end

end

>>size(x)

>>hist(x)

Example: Generating a random variable from pdf

- [math]\displaystyle{ f_{x}(x) = \begin{cases} 2x, & \text{if } 0\leq x \leq 1 \\ 0, & \text{otherwise} \end{cases} }[/math]

- [math]\displaystyle{ F_{x}(x) = \begin{cases} 0, & \text{if } x \lt 0 \\ \int_{0}^{x}2s\,ds = x^{2}, & \text{if } 0\leq x \leq 1 \\ 1, & \text{if } x \gt 1 \end{cases} }[/math]

- [math]\displaystyle{ \begin{align} U = X^{2}, \quad X = F_{x}^{-1}(U)= U^{\frac{1}{2}}\end{align} }[/math]
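A short Matlab sketch of this example, taking the square root of uniform draws. The sample size n is an arbitrary choice, and the comparison with the mean 2/3 (the integral of x times 2x over [0, 1]) is added here as a sanity check.

```matlab
% Sample from the density f(x) = 2x on [0, 1] via X = sqrt(U).
n = 10000;                 % sample size (assumed)
u = rand(1, n);
x = sqrt(u);               % X = F^{-1}(U) = U^(1/2)
sampleMean = mean(x);      % theoretical mean is 2/3
```

A histogram of x should rise roughly linearly from 0 to 1, matching the shape of 2x.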

Example: Generating a Bernoulli random variable

- [math]\displaystyle{ \begin{align} P(X = 1) = p, P(X = 0) = 1 - p\end{align} }[/math]

- [math]\displaystyle{ F(x) = \begin{cases} 0, & \text{if } x \lt 0 \\ 1-p, & \text{if } 0 \leq x \lt 1 \\ 1, & \text{if } x \ge 1 \end{cases} }[/math]

1. Draw [math]\displaystyle{ U~ \sim~ Unif [0,1] }[/math]

2. [math]\displaystyle{

X = \begin{cases}

1, & \text{if } U\leq p \\

0, & \text{if } U \gt p

\end{cases} }[/math]
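The two steps above can be sketched in Matlab as follows. This is only an illustration: the values of p and n are assumptions chosen for the demonstration, not values from the lecture.

```matlab
p = 0.3;              % assumed success probability (illustrative)
n = 10000;            % assumed number of samples
u = rand(1, n);       % step 1: U ~ Unif[0,1]
x = double(u <= p);   % step 2: X = 1 if U <= p, otherwise X = 0
mean(x)               % sample proportion of ones, should be close to p
```

Because the comparison is applied to the whole vector u at once, no loop is needed here.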

Example: Generating a Poisson random variable

Let X ~ Poi(u). Write an algorithm to generate X. The pmf of a Poisson random variable is:

- [math]\displaystyle{ \begin{align} f(x) = \frac {\, e^{-u} u^x}{x!}, \quad x = 0, 1, 2, \dots \end{align} }[/math]

Writing [math]\displaystyle{ P_x = f(x) }[/math], we know that

- [math]\displaystyle{ \begin{align} P_{x+1} = \frac {\, e^{-u} u^{x+1}}{(x+1)!} \end{align} }[/math]

The ratio is [math]\displaystyle{ \begin{align} \frac {P_{x+1}}{P_x} = ... = \frac {u}{{x+1}} \end{align} }[/math] Therefore, [math]\displaystyle{ \begin{align} P_{x+1} = \, {\frac {u}{x+1}} P_x\end{align} }[/math]

Algorithm:

1) Generate U ~ U(0,1)

2) [math]\displaystyle{ \begin{align} x = 0 \end{align} }[/math]

[math]\displaystyle{ \begin{align} p = F = P(X = 0) = e^{-u} u^0/{0!} = e^{-u} \end{align} }[/math]

3) If U<F, output x and stop

Else, [math]\displaystyle{ \begin{align} p = \frac{u}{x+1} \, p \end{align} }[/math]

[math]\displaystyle{ \begin{align} F = F + p \end{align} }[/math]

[math]\displaystyle{ \begin{align} x = x + 1 \end{align} }[/math]

4) Go to step 3

Acknowledgements: This is from Stat 340 Winter 2013
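A minimal Matlab sketch of this Poisson algorithm is given below. The mean is called lambda here (the notes call it u), and its value and the sample size n are assumptions chosen for illustration. The update inside the while loop is the recursion P(x+1) = (u/(x+1)) P(x) from above.

```matlab
lambda = 3;                 % assumed Poisson mean (called u in the notes)
n = 10000;                  % assumed number of samples
x = zeros(1, n);
for ii = 1:n
    U = rand;               % step 1
    k = 0;                  % step 2: start at x = 0
    p = exp(-lambda);       % P(X = 0)
    F = p;                  % running value of the cdf F(k)
    while U >= F            % step 3: stop as soon as U < F
        p = p * lambda / (k + 1);   % P(X = k+1) from P(X = k)
        F = F + p;
        k = k + 1;
    end
    x(ii) = k;
end
mean(x)                     % should be close to lambda
var(x)                      % should also be close to lambda
```

For a Poisson distribution the mean and variance are both equal to the parameter, which gives a quick sanity check on the output.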

Example: Generating Geometric Distribution:

Consider Geo(p) where p is the probability of success, and define the random variable X as the number of trials up to and including the first success, so x=1,2,3,... We have pmf: [math]\displaystyle{ P(X=x) = \, p (1-p)^{x-1} }[/math] We have CDF: [math]\displaystyle{ F(x)=P(X \leq x)=1-P(X\gt x) = 1-(1-p)^x }[/math], where P(X>x) is the probability that the first x trials are all failures. Now consider the inverse transform:

- [math]\displaystyle{ x = \begin{cases} 1, & \text{if } U\leq p \\ 2, & \text{if } p \lt U \leq 1-(1-p)^2 \\ 3, & \text{if } 1-(1-p)^2 \lt U\leq 1-(1-p)^3 \\ \vdots \\ k, & \text{if } 1-(1-p)^{k-1} \lt U\leq 1-(1-p)^k \\ \vdots \end{cases} }[/math]
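The interval condition for x = k can be solved for k directly: 1-(1-p)^(k-1) < U <= 1-(1-p)^k is equivalent to k-1 < log(1-U)/log(1-p) <= k, so k = ceil(log(1-U)/log(1-p)). The sketch below uses this closed form, with X counting the trials up to and including the first success; the values of p and n are assumptions chosen for illustration.

```matlab
p = 0.25;                                   % assumed success probability
n = 10000;                                  % assumed number of samples
u = rand(1, n);                             % U ~ Unif(0,1)
x = max(1, ceil(log(1 - u) ./ log(1 - p))); % smallest k with U <= 1-(1-p)^k
mean(x)                                     % should be close to 1/p
```

The max(1, ...) only guards against the rare round-off case where 1-u evaluates to exactly 1.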

Note: Unlike the continuous case, the discrete inverse-transform method can always be used for any discrete distribution (but it may not be the most efficient approach)

General Procedure

1. Draw U ~ U(0,1)

2. If [math]\displaystyle{ U \leq P_{0} }[/math] deliver [math]\displaystyle{ x = x_{0} }[/math]

3. Else if [math]\displaystyle{ U \leq P_{0} + P_{1} }[/math] deliver [math]\displaystyle{ x = x_{1} }[/math]

4. Else if [math]\displaystyle{ U \leq P_{0} + P_{1} + P_{2} }[/math] deliver [math]\displaystyle{ x = x_{2} }[/math]

...

Else if [math]\displaystyle{ U \leq P_{0} + ... + P_{k} }[/math] deliver [math]\displaystyle{ x = x_{k} }[/math]
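The general procedure can be sketched in Matlab by storing the cumulative sums P0, P0+P1, ... in a vector and finding the first one that is at least U. The distribution used here is the three-point example from earlier in these notes; the variable names vals, probs and F are illustrative.

```matlab
vals  = [0 1 2];            % x_0, x_1, x_2
probs = [0.3 0.2 0.5];      % P_0, P_1, P_2
F = cumsum(probs);          % P_0, P_0+P_1, P_0+P_1+P_2
F(end) = 1;                 % guard against round-off in the last sum
n = 10000;                  % assumed number of samples
x = zeros(1, n);
for ii = 1:n
    u = rand;                           % draw U ~ U(0,1)
    idx = find(u <= F, 1, 'first');     % first k with U <= P_0 + ... + P_k
    x(ii) = vals(idx);                  % deliver x = x_k
end
freq = [mean(x == 0) mean(x == 1) mean(x == 2)]   % should be close to probs
```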

Problems

1. We have to find [math]\displaystyle{ F^{-1} }[/math]

2. For many distributions, such as Gaussian, it is too difficult to find the inverse of [math]\displaystyle{ F(x) }[/math].

Summary of the examples: flipping a fair coin is a discrete uniform distribution on two values. In the code the coin is flipped 1000 times, and the observed proportion of each outcome is close to the expected value (0.5). The second example is another discrete distribution, on the three values 0, 1, 2 with probabilities 0.3, 0.2 and 0.5; it is generated by splitting [0,1] into three intervals with those lengths. The later examples use the inverse method to work out the range of U that corresponds to each value of the random variable.

Summary of Inverse Transform Method

Problem: generate samples from a given type of distribution.

Plan:

Continuous case:

- find the CDF F

- find the inverse [math]\displaystyle{ F^{-1} }[/math]

- generate a list of uniformly distributed numbers {u}

- [math]\displaystyle{ \{F^{-1}(u)\} }[/math] is what we want

Matlab Instruction (here for the exponential distribution with rate 2, whose inverse CDF is -log(1-u)/2)

>>u=rand(1,1000);

>>hist(u)

>>x=(-log(1-u))/2;

>>size(x)

>>figure

>>hist(x)

Discrete case:

- generate a list of uniformly distributed numbers {u}

- for each u, set [math]\displaystyle{ d_i=x_i }[/math] if [math]\displaystyle{ F(x_{i-1})\lt U\leq F(x_i) }[/math]

- [math]\displaystyle{ \{d_i\} }[/math] is what we want

Matlab Instruction

>>for ii=1:1000

u=rand;

if u<0.5

x(ii)=0;

else

x(ii)=1;

end

end

>>hist(x)

Acceptance-Rejection Method

Although the inverse transformation method does allow us to transform uniform samples into samples from other distributions, it has two limitations:

- Not every CDF has an inverse function that can be written down explicitly

- For some distributions, such as Gaussian, it is too difficult to find the inverse

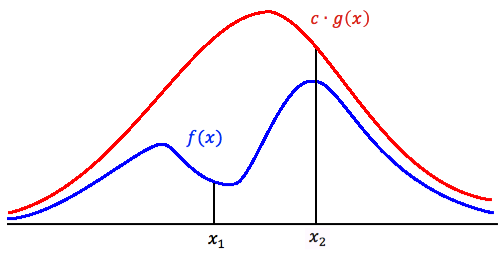

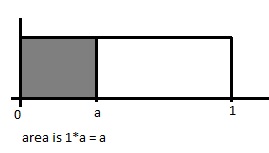

To generate random samples for these functions, we will use different methods, such as the Acceptance-Rejection Method. This method can be used when the inverse transform method is impractical, because it does not need the inverse of the CDF. The basic idea is to find an alternative probability distribution, with density function g(x), that we already know how to sample from.

Suppose we want to draw random sample from a target density function f(x), x∈Sx, where Sx is the support of f(x). If we can find some constant c(≥1) (In practise, we prefer c as close to 1 as possible) and a density function g(x) having the same support Sx so that f(x)≤cg(x), ∀x∈Sx, then we can apply the procedure for Acceptance-Rejection Method. Typically we choose a density function that we already know how to sample from for g(x).


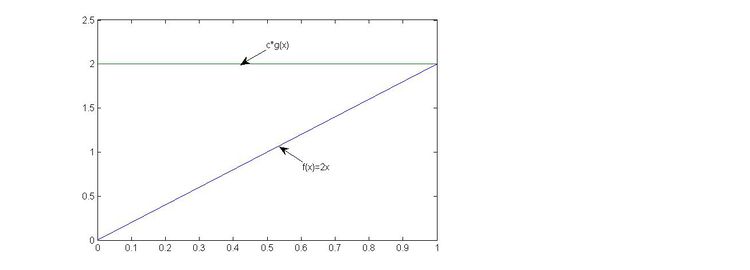

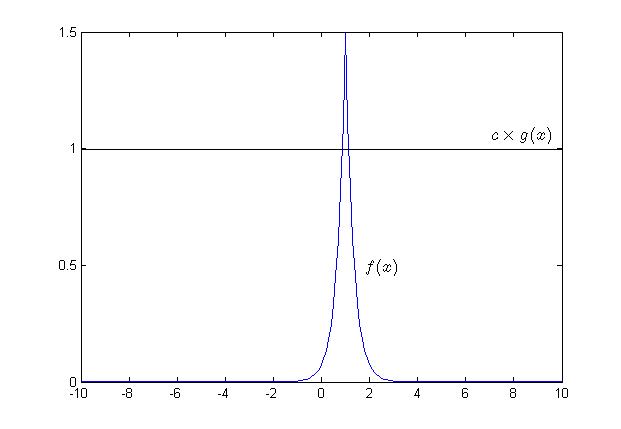

The main logic behind the Acceptance-Rejection Method is that:

1. We want to generate sample points from a target distribution, say f(x), that we cannot sample from directly.

2. We use cg(x) to generate points so that we have more points than f(x) could ever generate for all x. (where c is a constant, and g(x) is a known distribution)

3. For each value of x, we accept and reject some points based on a probability, which will be discussed below.

Note: If the red line was only g(x) as opposed to [math]\displaystyle{ \,c g(x) }[/math] (i.e. c=1), then [math]\displaystyle{ g(x) \geq f(x) }[/math] for all values of x if and only if g and f are the same functions. This is because both pdfs integrate to 1, so g cannot lie above f everywhere unless the two coincide.

Also remember that sampling from g proposes points more often than f requires wherever [math]\displaystyle{ \,c g(x) \gt f(x) }[/math]. Thus we need a way of thinning the proposed points to get the proper probabilities.

c must be chosen so that [math]\displaystyle{ f(x)\leqslant c g(x) }[/math] for all value of x. c can only equal 1 when f and g have the same distribution. Otherwise:

Either use a software package to test if [math]\displaystyle{ f(x)\leqslant c g(x) }[/math] for an arbitrarily chosen c > 0, or:

1. Find first and second derivatives of f(x) and g(x).

2. Identify and classify all local and absolute maximums and minimums, using the First and Second Derivative Tests, as well as all inflection points.

3. Verify that [math]\displaystyle{ f(x)\leqslant c g(x) }[/math] at all the local maximums as well as the absolute maximums.

4. Verify that [math]\displaystyle{ f(x)\leqslant c g(x) }[/math] at the tail ends by calculating [math]\displaystyle{ \lim_{x \to +\infty} \frac{f(x)}{\, c g(x)} }[/math] and [math]\displaystyle{ \lim_{x \to -\infty} \frac{f(x)}{\, c g(x)} }[/math] and seeing that they are both < 1. Use of L'Hopital's Rule should make this easy, since both f and g are p.d.f's, resulting in both of them approaching 0.

5. Efficiency: the number of times N that steps 1 and 2 need to be called (also the number of iterations needed to successfully generate X) is a random variable and has a geometric distribution with success probability [math]\displaystyle{ p=P\left(U \leq \frac{f(Y)}{c g(Y)}\right) }[/math], so [math]\displaystyle{ P(N=n)=(1-p)^{n-1} p, \; n \geq 1 }[/math]. Thus on average the number of iterations required is given by E(N)=1/p

c should be close to the maximum of f(x)/g(x), not just some arbitrarily picked large number. Otherwise, the Acceptance-Rejection method will have more rejections (since the acceptance probability [math]\displaystyle{ \frac{f(x)}{c g(x)} }[/math] will be close to zero). This will render our algorithm inefficient.

The expected number of iterations of the algorithm required to generate one X is c.

Note:

1. Values around x1 will be sampled more often under cg(x) than under f(x). There will be more samples than we actually need; since [math]\displaystyle{ \frac{f(y)}{\, c g(y)} }[/math] is small there, the acceptance-rejection step has to thin these points to get the right amount. In the region above x1, we should accept less and reject more.

2. Values around x2: the number of samples that are drawn and the number we need are much closer. So in the region above x2, we accept more. There, c g(x) and f(x) are comparable.

3. The constant c is needed because we need to adjust the height of g(x) to ensure that it is above f(x). Besides that, it is best to keep the number of rejected variates small for maximum efficiency.

Another way to understand why the acceptance probability is [math]\displaystyle{ \frac{f(y)}{\, c g(y)} }[/math] is by thinking of areas. From the graph above, we see that the target function lies under the proposal function c g(y). Therefore, [math]\displaystyle{ \frac{f(y)}{\, c g(y)} }[/math] is the proportion of the height under c g(y) at y that also lies under f(y). We accept sample points for which u is less than [math]\displaystyle{ \frac{f(y)}{\, c g(y)} }[/math] because then the sample points are guaranteed to fall in the part of the area under c g(y) that is also under f(y).

There are 2 cases that are possible:

-Sample of points is more than enough, [math]\displaystyle{ c g(x) \gt f(x) }[/math]

-Similar or the same amount of points, [math]\displaystyle{ c g(x) \approx f(x) }[/math]

There is 1 case that is not possible:

-Less than enough points, such that [math]\displaystyle{ c g(x) }[/math] is smaller than [math]\displaystyle{ f(x) }[/math], i.e. [math]\displaystyle{ c g(x) \lt f(x) }[/math]

Procedure

- Draw Y~g(.)

- Draw U~u(0,1) (Note: U and Y are independent)

- If [math]\displaystyle{ u\leq \frac{f(y)}{cg(y)} }[/math] (which is [math]\displaystyle{ P(accepted|y) }[/math]) then x=y, else return to Step 1
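The three steps above can be sketched in Matlab with function handles, so that the same loop works for any target f, proposal g and constant c. Everything named here (f, g, drawg, c, trials) is an illustrative assumption; the target f(x)=2x on [0,1] with a Unif(0,1) proposal and c=2 is used only because it is the simplest density in these notes.

```matlab
f = @(x) 2*x;                 % target density on [0,1]
g = @(x) ones(size(x));       % proposal density: Unif(0,1)
drawg = @() rand;             % a way to draw Y ~ g
c = 2;                        % constant with f(x) <= c*g(x) on [0,1]
n = 1000;                     % assumed number of accepted samples wanted
x = zeros(1, n);
trials = 0;                   % total number of candidates drawn
for ii = 1:n
    accepted = false;
    while ~accepted
        y = drawg();                          % step 1: draw Y ~ g
        u = rand;                             % step 2: draw U ~ U(0,1)
        trials = trials + 1;
        accepted = (u <= f(y) / (c * g(y)));  % step 3: accept or retry
    end
    x(ii) = y;
end
trials / n                    % average candidates per sample, about c
```

To use another target, only the four definitions at the top need to change.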

Note: Recall [math]\displaystyle{ P(U\leq a)=a }[/math]. Thus by comparing u and [math]\displaystyle{ \frac{f(y)}{\, c g(y)} }[/math], we can get a probability of accepting y at these points. For instance, at some points that cg(x) is much larger than f(x), the probability of accepting x=y is quite small.

ie. At X1, low probability to accept the point since f(x) much smaller than cg(x).

At X2, high probability to accept the point. [math]\displaystyle{ P(U\leq a)=a }[/math] in Uniform Distribution.

Note: Since U is uniform between 0 and 1, it never exceeds 1. For the comparison to make sense the ratio [math]\displaystyle{ \frac{f(y)}{c g(y)} }[/math] must also be at most 1, which is the condition [math]\displaystyle{ c\geq \frac{f(y)}{g(y)} }[/math] on the constant c.

Summary: this part introduces the relationship between cg(x) and f(x), explains why that relationship must hold, and shows how to use it to reject some candidates.

From the graph we can read off in which regions above x the candidates are mostly rejected or mostly accepted.

In the example, x1 is a point where most candidates are rejected and x2 is a point where most candidates are accepted.

Theorem

Let [math]\displaystyle{ f: \R \rightarrow [0,+\infty] }[/math] be a well-defined pdf, and [math]\displaystyle{ \displaystyle Y }[/math] be a random variable with pdf [math]\displaystyle{ g: \R \rightarrow [0,+\infty] }[/math] such that [math]\displaystyle{ \exists c \in \R^+ }[/math] with [math]\displaystyle{ f \leq c \cdot g }[/math]. If [math]\displaystyle{ \displaystyle U \sim~ U(0,1) }[/math] is independent of [math]\displaystyle{ \displaystyle Y }[/math], then the random variable defined as [math]\displaystyle{ X := Y \vert U \leq \frac{f(Y)}{c \cdot g(Y)} }[/math] has pdf [math]\displaystyle{ \displaystyle f }[/math], and the condition [math]\displaystyle{ U \leq \frac{f(Y)}{c \cdot g(Y)} }[/math] is denoted by "Accepted".

Proof

(to be updated later)

We want to show that [math]\displaystyle{ P(y|accepted)=f(y) }[/math]. By Bayes' rule:

[math]\displaystyle{ P(y|accepted)=\frac{P(accepted|y)P(y)}{P(accepted)} }[/math]

Recall the conditional probability formulas:

[math]\displaystyle{ \begin{align}

P(A|B)=\frac{P(A \cap B)}{P(B)}, \text{ or }P(A|B)=\frac{P(B|A)P(A)}{P(B)} \text{ for pmf}

\end{align} }[/math]

based on the concept from procedure-step1:

[math]\displaystyle{ P(y)=g(y) }[/math]

[math]\displaystyle{ P(accepted|y)=\frac{f(y)}{cg(y)} }[/math]

(the larger the value is, the larger the chance it will be selected)

[math]\displaystyle{

\begin{align}

P(accepted)&=\int_y\ P(accepted|y)P(y)\,dy\\

&=\int_s\ \frac{f(s)}{cg(s)}g(s)\,ds\\

&=\frac{1}{c} \int_s\ f(s)\, ds\\

&=\frac{1}{c}

\end{align} }[/math]

Therefore:

[math]\displaystyle{ \begin{align}

P(x)&=P(y|accepted)\\

&=\frac{\frac{f(y)}{cg(y)}g(y)}{1/c}\\

&=\frac{\frac{f(y)}{c}}{1/c}\\

&=f(y)\end{align} }[/math]

Here is an alternative introduction of Acceptance-Rejection Method

Comments:

-Acceptance-Rejection Method is not good for all cases. One obvious drawback is that it could be very hard to pick g(y) and the constant c in some cases. Usually, c should be a small number, otherwise the amount of work when applying the method could be very large.

-Note: When f(y) is very different than g(y), it is less likely that the point will be accepted as the ratio above would be very small and it will be difficult for u to be less than this small value.

An example would be when the target function (f) has a spike or several spikes in its domain - this would force the known distribution (g) to have density at least as large as the spikes, making the value of c larger than desired. As a result, the algorithm would be highly inefficient.

Acceptance-Rejection Method

Example 1 (discrete case)

We wish to generate X~Bi(2,0.5), assuming that we cannot generate this directly.

We use the discrete uniform distribution DU[0,2] as the proposal.

[math]\displaystyle{ f(x)=Pr(X=x)=\binom{2}{x}(0.5)^2 }[/math]

| x | 0 | 1 | 2 |
|---|---|---|---|
| f(x) | 1/4 | 1/2 | 1/4 |
| g(x) | 1/3 | 1/3 | 1/3 |
| f(x)/g(x) | 3/4 | 3/2 | 3/4 |
| f(x)/(cg(x)) | 1/2 | 1 | 1/2 |

Since we need [math]\displaystyle{ c \geq f(x)/g(x) }[/math] for all x,

we take [math]\displaystyle{ c=\max f(x)/g(x)=3/2 }[/math]

Therefore, the algorithm is:

1. Generate [math]\displaystyle{ u,v \sim U(0,1) }[/math]

2. Set [math]\displaystyle{ y= \lfloor 3u \rfloor }[/math] (This is using the uniform distribution to generate DU[0,2])

3. If [math]\displaystyle{ (y=0) }[/math] and [math]\displaystyle{ (v\lt 1/2), output=0 }[/math]

If [math]\displaystyle{ (y=2) }[/math] and [math]\displaystyle{ (v\lt 1/2), output=2 }[/math]

If [math]\displaystyle{ y=1, output=1 }[/math]

Otherwise, return to step 1
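A minimal Matlab sketch of this discrete example follows. The acceptance probabilities f(y)/(c g(y)) for y = 0, 1, 2 with c = 3/2 are 1/2, 1, 1/2; they are stored in a vector (called accept here, an illustrative name) and a candidate is redrawn whenever it is rejected. The sample size n is an assumption.

```matlab
n = 10000;                      % assumed number of samples
accept = [1/2 1 1/2];           % f(y)/(c*g(y)) for y = 0, 1, 2 with c = 3/2
x = zeros(1, n);
for ii = 1:n
    done = false;
    while ~done
        y = floor(3 * rand);            % Y ~ DU[0,2]
        v = rand;                       % V ~ U(0,1)
        done = (v <= accept(y + 1));    % y = 1 is always accepted
    end
    x(ii) = y;
end
freq = [mean(x == 0) mean(x == 1) mean(x == 2)]   % close to [1/4 1/2 1/4]
```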

An elaboration of “c”

c is the expected number of times the code runs to output 1 random variable. Remember that when [math]\displaystyle{ u \lt f(x)/(cg(x)) }[/math] is not satisfied, we need to go over the code again.

Proof

Let [math]\displaystyle{ f(x) }[/math] be the function we wish to generate from, but we cannot use inverse transform method to generate directly.

Let [math]\displaystyle{ g(x) }[/math] be the helper function

Let [math]\displaystyle{ c g(x) \geq f(x) }[/math]

Since we need to generate y from [math]\displaystyle{ g(x) }[/math],

[math]\displaystyle{ Pr(\text{select } y)=g(y) }[/math]

[math]\displaystyle{ Pr(\text{output } y|\text{selected } y)=Pr(u\lt f(y)/(cg(y)))= f(y)/(cg(y)) }[/math] (Since u~Unif(0,1))

[math]\displaystyle{ Pr(\text{output})=Pr(\text{output } y_1|\text{selected } y_1)Pr(\text{select } y_1)+ \dots + Pr(\text{output } y_n|\text{selected } y_n)Pr(\text{select } y_n)=\sum_i f(y_i)/c=1/c }[/math]

Since we are asking for the expected number of trials until the first success, this is a geometric distribution with probability of success = 1/c

Therefore, [math]\displaystyle{ E(X)=1/(1/c)=c }[/math]

Acknowledgements: Some materials have been borrowed from notes from Stat340 in Winter 2013.

Summary: conditional probability is used to prove that, given acceptance, the pdf of the output equals the target pdf. The example shows how to choose c for the two functions g(x) and f(x).

Example of Acceptance-Rejection Method

Generating a random variable having p.d.f.

[math]\displaystyle{ f(x) = 20x(1 - x)^3, 0\lt x \lt 1 }[/math]

Since this random variable (which is beta with parameters 2, 4) is concentrated in the interval (0, 1), let us consider the acceptance-rejection method with

g(x) = 1, 0 < x < 1

To determine the constant c such that f(x)/g(x) <= c, we use calculus to determine the maximum value of

[math]\displaystyle{ f(x)/g(x) = 20x(1 - x)^3 }[/math]

Differentiation of this quantity yields

[math]\displaystyle{ \frac{d}{dx}\left[\frac{f(x)}{g(x)}\right]=20\left[(1-x)^3-3x(1-x)^2\right] }[/math]

Setting this equal to 0 shows that the maximal value is attained when x = 1/4, and thus,

[math]\displaystyle{ \frac{f(x)}{g(x)} \leq 20 \cdot \frac{1}{4} \cdot \left(\frac{3}{4}\right)^3=\frac{135}{64}=c }[/math]

Hence,

[math]\displaystyle{ \frac{f(x)}{cg(x)}=\frac{256}{27}\,x(1-x)^3 }[/math]

and thus the simulation procedure is as follows:

1) Generate two random numbers U1 and U2.

2) If [math]\displaystyle{ U_2 \leq \frac{256}{27} U_1 (1-U_1)^3 }[/math], set X=U1 and stop. Otherwise return to step 1). The average number of times that step 1) will be performed is c = 135/64.

(The above example is from http://www.cs.bgu.ac.il/~mps042/acceptance.htm, example 2.)
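A minimal Matlab sketch of this Beta(2,4) example is given below. The candidate U1 plays the role of Y (drawn from the uniform proposal) and is the value that is kept on acceptance. The sample size n and the counter trials are assumptions added for illustration.

```matlab
n = 10000;                  % assumed number of accepted samples wanted
x = zeros(1, n);
trials = 0;                 % number of times step 1) is performed
for ii = 1:n
    while true
        u1 = rand;          % candidate, Y ~ Unif(0,1)
        u2 = rand;          % uniform used for the accept/reject test
        trials = trials + 1;
        if u2 <= (256/27) * u1 * (1 - u1)^3   % f(y)/(c*g(y))
            break
        end
    end
    x(ii) = u1;             % the accepted value is the candidate U1
end
mean(x)                     % Beta(2,4) has mean 2/(2+4) = 1/3
trials / n                  % should be close to c = 135/64
```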

Summary: use the derivative to find the maximum of f(x)/g(x); this maximum is the best constant c for the acceptance-rejection method.

Simple Example of Acceptance-Rejection Method

Consider the random variable X, with distribution [math]\displaystyle{ X }[/math] ~ [math]\displaystyle{ U[0,0.5] }[/math]

So we let [math]\displaystyle{ f(x) = 2 }[/math] on [math]\displaystyle{ [0, 1/2] }[/math]

Let [math]\displaystyle{ g(.) }[/math] be [math]\displaystyle{ U[0,1] }[/math] distributed. So [math]\displaystyle{ g(x) = 1 }[/math] on [math]\displaystyle{ [0,1] }[/math]

Then take [math]\displaystyle{ c = 2 }[/math]

So [math]\displaystyle{ f(x)/cg(x) = (2) / {(2)(1) } = 1 }[/math] on the interval [math]\displaystyle{ [0, 1/2] }[/math] and

[math]\displaystyle{ f(x)/cg(x) = (0) / {(2)(1) } = 0 }[/math] on the interval [math]\displaystyle{ (1/2, 1] }[/math]

So we reject:

None of the numbers generated in the interval [math]\displaystyle{ [0, 1/2] }[/math]

All of the numbers generated in the interval [math]\displaystyle{ (1/2, 1] }[/math]

And this results in the distribution [math]\displaystyle{ f(.) }[/math] which is [math]\displaystyle{ U[0,1/2] }[/math]

This is an example that shows why a candidate is rejected when using the acceptance-rejection method.

Another Example of Acceptance-Rejection Method

Generate a random variable from:

[math]\displaystyle{ f(x)=3*x^2 }[/math], 0< x <1

Assume g(x) to be uniform over interval (0,1), where 0< x <1

Therefore:

[math]\displaystyle{ c = max(f(x)/(g(x)))= 3 }[/math]

The best constant c is max(f(x)/g(x)); this choice makes the area above f(x) and below c g(x) as small as possible. Because g(.) is uniform on (0,1), g(x) = 1, so c = max f(x) = 3.

[math]\displaystyle{ f(x)/(cg(x))= x^2 }[/math]

Acknowledgement: this is example 1 from http://www.cs.bgu.ac.il/~mps042/acceptance.htm

This is an example that shows how to work out c and f(x)/(c g(x)).

Class 4 - Thursday, May 16

- When we want to find a target distribution, denoted as [math]\displaystyle{ f(x) }[/math]; we need to first find a proposal distribution [math]\displaystyle{ g(x) }[/math] which is easy to sample from.

- The graph of f(x) lies under the graph of [math]\displaystyle{ c \cdot g(x) }[/math].

- The relationship between the proposal distribution and target distribution is: [math]\displaystyle{ c \cdot g(x) \geq f(x) }[/math].

- Chance of acceptance is less if the distance between [math]\displaystyle{ f(x) }[/math] and [math]\displaystyle{ c \cdot g(x) }[/math] is big, and vice-versa. [math]\displaystyle{ c }[/math] keeps [math]\displaystyle{ \frac {f(x)}{c \cdot g(x)} }[/math] at or below 1 (so [math]\displaystyle{ f(x) \leq c \cdot g(x) }[/math]), and we must choose the constant [math]\displaystyle{ c }[/math] to achieve this.

- In other words, [math]\displaystyle{ c }[/math] is chosen to make sure [math]\displaystyle{ c \cdot g(x) \geq f(x) }[/math]. However, it will not make sense if [math]\displaystyle{ c }[/math] is simply chosen to be arbitrarily large. We need to choose [math]\displaystyle{ c }[/math] such that [math]\displaystyle{ c \cdot g(x) }[/math] fits [math]\displaystyle{ f(x) }[/math] as tightly as possible.

- The constant c cannot be negative. In fact [math]\displaystyle{ c \geq 1 }[/math], since f and g both integrate to 1.

How to find c:

[math]\displaystyle{ \begin{align}

&c \cdot g(x) \geq f(x)\\

&c\geq \frac{f(x)}{g(x)} \\

&c= \max \left(\frac{f(x)}{g(x)}\right)

\end{align} }[/math]

If [math]\displaystyle{ f }[/math] and [math]\displaystyle{ g }[/math] are continuous, we can find the extremum by taking the derivative and solve for [math]\displaystyle{ x_0 }[/math] such that:

[math]\displaystyle{ 0=\frac{d}{dx}\frac{f(x)}{g(x)}|_{x=x_0} }[/math]

Thus [math]\displaystyle{ c = \frac{f(x_0)}{g(x_0)} }[/math]
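When the derivative is awkward to solve by hand, the maximum of f(x)/g(x) can be approximated numerically. The sketch below evaluates the ratio on a fine grid; it is only a check under stated assumptions, since a grid maximum can slightly underestimate the true maximum. The density used is the Beta(2,4) example f(x) = 20x(1-x)^3 with a uniform proposal, and the names xs, c_grid and x0 are illustrative.

```matlab
f = @(x) 20 * x .* (1 - x).^3;     % target density on (0,1)
g = @(x) ones(size(x));            % proposal density: Unif(0,1)
xs = linspace(0, 1, 100001);       % assumed grid resolution
[c_grid, k] = max(f(xs) ./ g(xs)); % largest ratio found on the grid
x0 = xs(k)                         % location of the maximum, about 1/4
c_grid                             % about 135/64 = 2.109375
```

In practice one might round c_grid up slightly so that c g(x) >= f(x) still holds between grid points.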

- The logic behind this:

The Acceptance-Rejection method involves finding a distribution that we know how to sample from (g(x)) and multiplying g(x) by a constant c so that [math]\displaystyle{ c \cdot g(x) }[/math] is always greater than or equal to f(x). Mathematically, we want [math]\displaystyle{ c \cdot g(x) \geq f(x) }[/math].

This means c has to be greater than or equal to [math]\displaystyle{ \frac{f(x)}{g(x)} }[/math] for every x. So the smallest possible c that satisfies the condition is the maximum value of [math]\displaystyle{ \frac{f(x)}{g(x)} }[/math]. If c is made too large, the chance of accepting a generated value will be small, and the algorithm becomes inefficient. Therefore, it is best to use the smallest possible c such that [math]\displaystyle{ c g(x) \geq f(x) }[/math].

- For this method to be efficient, the constant c must be selected so that the rejection rate is low.(The efficiency for this method is[math]\displaystyle{ \left ( \frac{1}{c} \right ) }[/math])

- It is easy to show that the expected number of trials for an acceptance is c. Thus, the smaller the c is, the lower the rejection rate, and the better the algorithm:

- recall the acceptance rate is 1/c.(not rejection rate)

- Let [math]\displaystyle{ X }[/math] be the number of trials for an acceptance, [math]\displaystyle{ X \sim~ Geo(\frac{1}{c}) }[/math]

- [math]\displaystyle{ \mathbb{E}[X] = \frac{1}{\frac{1}{c}} = c }[/math]

- The number of trials needed to generate a sample size of [math]\displaystyle{ N }[/math] follows a negative binomial distribution. The expected number of trials needed is then [math]\displaystyle{ cN }[/math].

- So far, the only distribution we know how to sample from is the UNIFORM distribution.

Procedure:

1. Choose [math]\displaystyle{ g(x) }[/math] (simple density function that we know how to sample, i.e. Uniform so far)

The easiest case is UNIF(0,1). However, in other cases we need to generate UNIF(a,b). We may need to perform a linear transformation on the UNIF(0,1) variable.

2. Find a constant c such that :[math]\displaystyle{ c \cdot g(x) \geq f(x) }[/math], otherwise return to step 1.

Recall the general procedure of Acceptance-Rejection Method

- Let [math]\displaystyle{ Y \sim~ g(y) }[/math]

- Let [math]\displaystyle{ U \sim~ Unif [0,1] }[/math]

- If [math]\displaystyle{ U \leq \frac{f(Y)}{c \cdot g(Y)} }[/math] then X=Y; else return to step 1 (This is not the way to find c. This is the general procedure.)

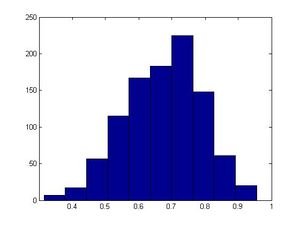

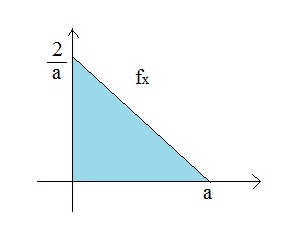

Example: Generate a random variable from the pdf

[math]\displaystyle{ f(x) = \begin{cases} 2x, & \mbox{if }0 \leqslant x \leqslant 1 \\ 0, & \mbox{otherwise} \end{cases} }[/math]

We can note that this is a special case of Beta(2,1), where,

[math]\displaystyle{ beta(a,b)=\frac{\Gamma(a+b)}{\Gamma(a)\Gamma(b)}x^{(a-1)}(1-x)^{(b-1)} }[/math]

Where Γ(n)=(n-1)! if n is a positive integer, and in general

[math]\displaystyle{ \Gamma(z)=\int _{0}^{\infty }t^{z-1}e^{-t}dt }[/math]

Aside: Beta function

In mathematics, the beta function, also called the Euler integral of the first kind, is a special function defined by

[math]\displaystyle{ B(x,y)=\int_0^1 \! {t^{(x-1)}}{(1-t)^{(y-1)}}\,dt }[/math]

[math]\displaystyle{ beta(2,1)= \frac{\Gamma(3)}{(\Gamma(2)\Gamma(1))}x^1 (1-x)^0 = 2x }[/math]

[math]\displaystyle{ g \sim U(0,1), \text{ so } g(x)=1 \text{ on } [0,1] }[/math]

[math]\displaystyle{ Y \sim g }[/math]

[math]\displaystyle{ f(x)\leq c\cdot g(x) }[/math]

[math]\displaystyle{ c\geq \frac{f(x)}{g(x)} }[/math]

[math]\displaystyle{ c = \max \frac{f(x)}{g(x)} }[/math]

[math]\displaystyle{ c = \max \frac{2x}{1}, 0 \leq x \leq 1 }[/math]

Taking x = 1 gives the maximum of f(x)/g(x), so c=2

Note that c is a scalar greater than 1.

Note: g is the uniform density, whose graph has height 1, so it only covers the part of f(x) that runs from 0 to 1 on the y-axis. Thus we need to multiply by c to ensure that [math]\displaystyle{ c\cdot g }[/math] covers the entire area under f(x). In this case, c=2, so [math]\displaystyle{ c\cdot g }[/math] has height 2 on the y-axis, which covers f(x).

Comment:

From the picture above, we can observe that the area under f(x)=2x is half of the area under [math]\displaystyle{ c\cdot g(x)=2 }[/math] on [0,1]. This is why in order to sample 1000 points of f(x) we need to sample approximately 2000 points from UNIF(0,1).

And in general, if we want to sample n points from a distribution with pdf f(x), we need to draw approximately [math]\displaystyle{ n\cdot c }[/math] points from the proposal distribution (g(x)) in total.

Step

- Draw y~u(0,1)

- Draw u~u(0,1)

- if [math]\displaystyle{ u \leq \frac{(2\cdot y)}{(2\cdot 1)}, u \leq y, }[/math] then [math]\displaystyle{ x=y }[/math]

- Else go to Step 1

Note: In the above example, we sample 2 numbers. If the second number is less than or equal to the first one, then we accept the first one; if not, we start all over.

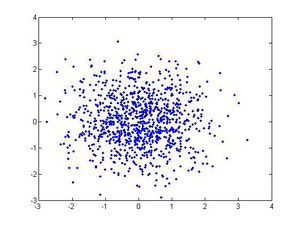

Matlab Code

>>close all

>>clear all

>>ii=1; % ii: index of the next accepted sample

>>jj=0; % jj: number of candidates generated so far

>>while ii<=1000

y=rand;

u=rand;

jj=jj+1;

if u<=y

x(ii)=y;

ii=ii+1;

end

end

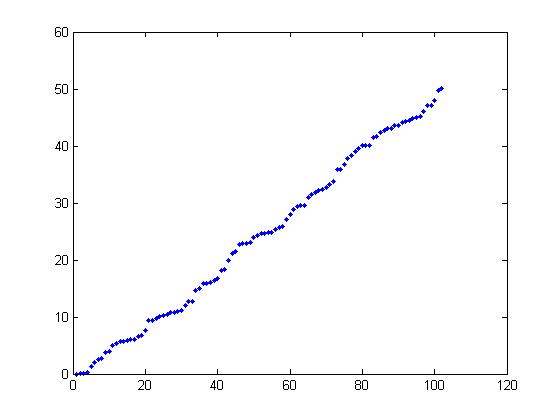

>>hist(x)

>>jj

jj = 2024 % should be around 2000

- *Note: The reason that a for loop is not used is that we need continue the looping until we get 1000 successful samples. We will reject some samples during the process and therefore do not know the number of y we are going to generate.

- *Note2: In this example, we used c=2, which means we accept half of the points we generate on average. Generally speaking, 1/c would be the probability of acceptance, and an indicator of the efficiency of your chosen proposal distribution and algorithm.

- *Note3: We use while instead of for when looping because we do not know how many iterations are required to generate 1000 successful samples.

- *Note4: If c=1, we will accept all points, which is the ideal situation.

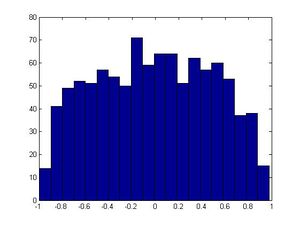

Example for A-R method:

Given [math]\displaystyle{ f(x)= \frac{3}{4} (1-x^2), -1 \leq x \leq 1 }[/math], use A-R method to generate random number

Let g be the U(-1,1) density, so g(x)=1/2

Let y ~ g. [math]\displaystyle{ cg(x)\geq f(x), \quad c\cdot\frac{1}{2} \geq \frac{3}{4} (1-x^2), \quad c=\max 2\cdot\frac{3}{4} (1-x^2) = \frac{3}{2} }[/math]

The process:

- 1: Draw U1 ~ U(0,1)

- 2: Draw U2~U(0,1)

- 3: let [math]\displaystyle{ y = U1*2 - 1 }[/math]

- 4: if [math]\displaystyle{ U_2 \leq \frac { \frac{3}{4} (1-y^2)} { \frac{3}{4}} = {1-y^2} }[/math], then x=y (the ratio comes from f(y) / (cg(y)), where [math]\displaystyle{ c g(y) = \frac{3}{2} \cdot \frac{1}{2} = \frac{3}{4} }[/math])

- 5: else: return to step 1
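The five steps above can be sketched in Matlab as follows. The sample size n is an assumption; the acceptance test uses f(y)/(c g(y)) = 1 - y^2, which follows from c = 3/2 and g(y) = 1/2.

```matlab
n = 10000;                  % assumed number of accepted samples wanted
x = zeros(1, n);
for ii = 1:n
    while true
        y  = 2 * rand - 1;  % steps 1 and 3: Y = 2*U1 - 1 ~ U(-1,1)
        u2 = rand;          % step 2: U2 ~ U(0,1)
        if u2 <= 1 - y^2    % step 4: accept with probability f(y)/(c*g(y))
            break
        end                 % step 5: otherwise draw again
    end
    x(ii) = y;
end
mean(x)                     % should be close to 0 by symmetry
var(x)                      % should be close to 1/5 for this density
```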

Use Inverse Method for the f(x) = 2x Example

- [math]\displaystyle{ F(x)=\int_0^x \! 2s\,ds={x^2} -0={x^2} }[/math]

- [math]\displaystyle{ y=x^2 }[/math]

- [math]\displaystyle{ x=\sqrt y }[/math]

- [math]\displaystyle{ F^{-1}\left (\, x \, \right) =\sqrt x }[/math]

- Procedure

- 1: Draw [math]\displaystyle{ U~ \sim~ Unif [0,1] }[/math]

- 2: [math]\displaystyle{ x=F^{-1}\left (\, u\, \right) =\sqrt u }[/math]

Matlab Code

>>u=rand(1,1000); >>x=u.^0.5; >>hist(x)

Matlab Tip: A period, ".", means "element-wise" and is used when you want an operation performed on each element of a vector or matrix. In the above example, to take the square root of every element in U, the notation U.^0.5 is used. If you instead want the matrix square root of a square matrix U, the period is omitted: if B=U^0.5, then [math]\displaystyle{ B*B=U }[/math]. Similarly, for two 1 X 3 vectors, ".*" gives their element-wise product, whereas "*" attempts a matrix product and gives an error. For example, if a=[1 2 3] and b=[2 3 4], then a.*b=[2 6 12], but a*b does not work since the inner matrix dimensions must agree.

Example of Acceptance-Rejection Method

[math]\displaystyle{ f(x) = 3x^2, 0\lt x\lt 1 }[/math] [math]\displaystyle{ g(x)=1, 0\lt x\lt 1 }[/math]

[math]\displaystyle{ c = \max \frac{f(x)}{g(x)} = \max \frac{3x^2}{1} = 3 }[/math]

[math]\displaystyle{ \frac{f(x)}{c \cdot g(x)} = x^2 }[/math]

1. Generate two uniform numbers in the unit interval [math]\displaystyle{ U_1, U_2 \sim~ U(0,1) }[/math]

2. If [math]\displaystyle{ U_2 \leqslant {U_1}^2 }[/math], accept [math]\displaystyle{ U_1 }[/math] as the random variable with pdf [math]\displaystyle{ f }[/math], if not return to Step 1

We can also use [math]\displaystyle{ g(x)=2x }[/math] for a more efficient algorithm

[math]\displaystyle{ c = \max \frac{f(x)}{g(x)} = \max \frac {3x^2}{2x} = \max \frac {3x}{2} = \frac{3}{2} }[/math]. Use the inverse method to sample from [math]\displaystyle{ g(x) }[/math]: [math]\displaystyle{ G(x)=x^2 }[/math], so generate [math]\displaystyle{ U }[/math] from [math]\displaystyle{ U(0,1) }[/math] and set [math]\displaystyle{ y=\sqrt{u} }[/math]

1. Generate two uniform numbers in the unit interval [math]\displaystyle{ U_1, U_2 \sim~ U(0,1) }[/math] and set [math]\displaystyle{ Y=\sqrt{U_1} }[/math]

2. If [math]\displaystyle{ U_2 \leq \frac{f(Y)}{c \cdot g(Y)} = \frac{3Y^2}{3Y} = Y }[/math], accept [math]\displaystyle{ Y }[/math] as the random variable with pdf [math]\displaystyle{ f }[/math], if not return to Step 1
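A minimal Matlab sketch of the version with proposal g(x)=2x is given below. With c = max 3x/2 = 3/2, the acceptance ratio f(y)/(c g(y)) = 3y^2/(3y) simplifies to y, and the candidate is y = sqrt(U1), obtained from g by the inverse method. The sample size n and the counter trials are assumptions added for illustration.

```matlab
n = 10000;                  % assumed number of accepted samples wanted
x = zeros(1, n);
trials = 0;                 % total number of candidates drawn
for ii = 1:n
    while true
        y  = sqrt(rand);    % Y ~ g(x) = 2x via the inverse method
        u2 = rand;
        trials = trials + 1;
        if u2 <= y          % f(y)/(c*g(y)) = y when c = 3/2
            break
        end
    end
    x(ii) = y;
end
mean(x)                     % f(x) = 3x^2 has mean 3/4
trials / n                  % about c = 3/2, versus 3 with the uniform proposal
```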

Possible Limitations

-This method could be computationally inefficient depending on the rejection rate. We may have to sample many points before we get the 1000 accepted points. In the example we did in class relating to [math]\displaystyle{ f(x)=2x }[/math], we had to sample around 2070 points before we finally accepted 1000 sample points.

-If the form of the proposal distribution, g, is very different from target distribution, f, then c is very large and the algorithm is not computationally efficient.

Acceptance - Rejection Method Application on Normal Distribution

[math]\displaystyle{ X \sim N(\mu,\sigma^2), \text{ or } X = \sigma Z + \mu, Z \sim~ N(0,1) }[/math]

[math]\displaystyle{ \vert Z \vert }[/math] has probability density function of

[math]\displaystyle{ f(x) = \frac{2}{\sqrt{2\pi}} e^{-x^2/2}, \quad x \geq 0 }[/math]

Take the proposal density

[math]\displaystyle{ g(x) = e^{-x}, \quad x \geq 0 }[/math]

Take h(x) = f(x)/g(x) and solve for h'(x) = 0 to find x so that h(x) is maximum.

Hence x=1 maximizes h(x) => c = [math]\displaystyle{ \sqrt{2e/\pi} }[/math]

Thus [math]\displaystyle{ \frac{f(y)}{cg(y)} = e^{-(y-1)^2/2} }[/math]

This example shows how to calculate the constant c for a given f(x) and g(x).
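The derivation above can be turned into a sampler for the normal distribution, sketched below under a few assumptions. The candidate Y ~ Exp(1) is drawn by the inverse method as -log(U); the accepted value has the density of |Z|, so a random sign is attached to obtain Z, a step that is not spelled out in the notes. The values of mu, sigma and n are illustrative.

```matlab
mu = 0; sigma = 1;          % assumed target parameters
n = 10000;                  % assumed number of samples
z = zeros(1, n);
for ii = 1:n
    while true
        y = -log(rand);                 % Y ~ Exp(1), density g(y) = exp(-y)
        u = rand;
        if u <= exp(-(y - 1)^2 / 2)     % f(y)/(c*g(y))
            break
        end
    end
    if rand < 0.5                       % attach a random sign to |Z|
        y = -y;
    end
    z(ii) = y;
end
x = sigma * z + mu;         % X = sigma*Z + mu
mean(x)                     % should be close to mu
var(x)                      % should be close to sigma^2
```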

How to transform [math]\displaystyle{ U(0,1) }[/math] to [math]\displaystyle{ U(a, b) }[/math]

1. Draw U from [math]\displaystyle{ U(0,1) }[/math]

2. Take [math]\displaystyle{ Y=(b-a)U+a }[/math]

3. Now Y follows [math]\displaystyle{ U(a,b) }[/math]
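In Matlab this transformation is one line. The endpoints a and b and the sample size below are assumptions chosen for illustration.

```matlab
a = -2; b = 5;              % assumed endpoints
u = rand(1, 10000);         % U ~ U(0,1)
y = (b - a) * u + a;        % Y ~ U(a,b)
[min(y) max(y)]             % should lie inside [a, b]
mean(y)                     % should be close to (a+b)/2
```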

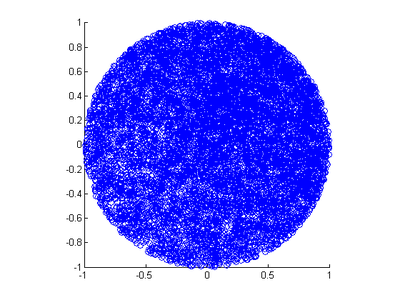

Example: Generate a random variable z from the Semicircular density [math]\displaystyle{ f(x)= \frac{2}{\pi R^2} \sqrt{R^2-x^2}, -R\leq x\leq R }[/math].

-> Proposal distribution: UNIF(-R, R)

-> We know how to generate using [math]\displaystyle{ U \sim UNIF (0,1) }[/math] Let [math]\displaystyle{ Y= 2RU-R=R(2U-1) }[/math], therefore Y follows [math]\displaystyle{ U(-R,R) }[/math]

-> In order to maximize the function we must maximize the top and minimize the bottom.

Now, we need to find c:

Since c=max[f(x)/g(x)], where

[math]\displaystyle{ f(x)= \frac{2}{\pi R^2} \sqrt{R^2-x^2} }[/math], [math]\displaystyle{ g(x)=\frac{1}{2R} }[/math], [math]\displaystyle{ -R\leq x\leq R }[/math]

Thus, we have to maximize R^2-x^2.

=> When x=0, it will be maximized.

Therefore, [math]\displaystyle{ c=\frac{f(0)}{g(0)}=\frac{2}{\pi R}\cdot 2R=\frac{4}{\pi} }[/math]. * Note: This also means that the probability of accepting a point is pi/4.

We will accept a proposed point y with probability f(y)/[cg(y)]. Since [math]\displaystyle{ \frac{f(y)}{cg(y)}=\frac{\frac{2}{\pi R^{2}} \sqrt{R^{2}-y^{2}}}{\frac{4}{\pi} \frac{1}{2R}}=\frac{\frac{2}{\pi R^{2}} \sqrt{R^{2}-R^{2}(2U-1)^{2}}}{\frac{2}{\pi R}} }[/math]

- Note: Y= R(2U-1)

We can also get Y= R(2U-1) by using the formula y = a+(b-a)*u, to transform U~(0,1) to U~(a,b). Letting a=-R and b=R, and substituting it in the formula y = a+(b-a)*u, we get Y= R(2U-1).

Thus, [math]\displaystyle{ \frac{f(y)}{cg(y)}=\sqrt{1-(2U-1)^{2}} }[/math] * this is also the probability of accepting the proposed point y

1. Draw [math]\displaystyle{ \ U }[/math] from [math]\displaystyle{ \ U(0,1) }[/math]

2. Draw [math]\displaystyle{ \ U_{1} }[/math] from [math]\displaystyle{ \ U(0,1) }[/math]

3. If [math]\displaystyle{ U_{1} \leq \sqrt{1-(2U-1)^2}, x = y }[/math]

else return to step 1.

The condition is

[math]\displaystyle{ U_{1} \leq \sqrt{(1-(2U-1)^2)} }[/math]

[math]\displaystyle{ \ U_{1}^2 \leq 1 - (2U -1)^2 }[/math]

[math]\displaystyle{ \ U_{1}^2 - 1 \leq -(2U - 1)^2 }[/math]

[math]\displaystyle{ \ 1 - U_{1}^2 \geq (2U - 1)^2 }[/math]
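As a rough check of the steps and the squared acceptance condition above, here is a minimal Python sketch (the notes use MATLAB). It counts how many proposals are needed, so the empirical acceptance rate can be compared with 1/c = pi/4. The function name, the seed, and the choice R = 2 are illustrative assumptions.

```python
import math
import random

def sample_semicircle(rng, R=1.0):
    """Return (x, number_of_proposals) for one draw from the semicircular density."""
    tries = 0
    while True:
        tries += 1
        u = rng.random()
        u1 = rng.random()
        y = R * (2.0 * u - 1.0)                     # Y ~ U(-R, R)
        if u1 ** 2 <= 1.0 - (2.0 * u - 1.0) ** 2:   # squared form of the condition
            return y, tries

rng = random.Random(1)
n = 10000
results = [sample_semicircle(rng, R=2.0) for _ in range(n)]
xs = [x for x, _ in results]
total_tries = sum(t for _, t in results)
acceptance_rate = n / total_tries                   # should be close to pi/4
second_moment = sum(x * x for x in xs) / n          # semicircle variance is R^2/4
```

With R = 2 the variance R^2/4 equals 1, which gives a second quick sanity check on the accepted points.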

One more example about AR method

(In this example, we will see how to determine the value of c when c is a function with unknown parameters instead of a value)

Let [math]\displaystyle{ f(x)=x*e^{-x}, x\gt 0 }[/math]

Use [math]\displaystyle{ g(x)=a*e^{-a*x} }[/math] as the proposal to generate the random variable

Solution: First of all, we need to find c

[math]\displaystyle{ cg(x)\geq f(x) }[/math]

[math]\displaystyle{ c\geq \frac{f(x)}{g(x)} }[/math]

[math]\displaystyle{ \frac{f(x)}{g(x)}=\frac{x}{a} * e^{-(1-a)x} }[/math]

take derivative with respect to x, and set it to 0 to get the maximum,

[math]\displaystyle{ \frac{1}{a} * e^{-(1-a)x} - \frac{x}{a} * e^{-(1-a)x} * (1-a) = 0 }[/math]

[math]\displaystyle{ x=\frac {1}{1-a} }[/math]

At this point, [math]\displaystyle{ \frac {f(x)}{g(x)} = \frac {e^{-1}}{a*(1-a)} }[/math]

At the endpoints, [math]\displaystyle{ \frac {f(0)}{g(0)} = 0 }[/math]

[math]\displaystyle{ \lim_{x \to \infty} \frac {f(x)}{g(x)} = 0 }[/math] (for 0 < a < 1)

therefore, [math]\displaystyle{ c= \frac {e^{-1}}{a*(1-a)} }[/math]

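In order to minimize c, we need to find the appropriate a.

Minimizing c is the same as maximizing [math]\displaystyle{ a(1-a) }[/math]. Take the derivative with respect to a and set it to zero: [math]\displaystyle{ 1-2a=0 }[/math]

We get [math]\displaystyle{ a= \frac {1}{2} }[/math]

[math]\displaystyle{ c=\frac{e^{-1}}{\frac{1}{2}\cdot\frac{1}{2}}=\frac{4}{e} }[/math]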

Procedure:

1. Generate u, v ~ unif(0,1)

2. Generate y from g. Since g is exponential with rate a = 1/2 (mean 2), let y=-2ln(u)

3. If [math]\displaystyle{ v\lt \frac{f(y)}{c\cdot g(y)} }[/math], output y

Else, go to 1
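The procedure above can be sketched as follows, in Python rather than the MATLAB used in these notes. With a = 1/2 the proposal g is exponential with rate 1/2, so the inverse transform is y = -2 ln(u); the sketch uses 1 - u in place of u, which has the same distribution and avoids log(0). The constant and function names are illustrative assumptions.

```python
import math
import random

A = 0.5                 # rate of the exponential proposal, a = 1/2
C = 4.0 / math.e        # c = e^{-1} / (a (1 - a)) evaluated at a = 1/2

def f(x):
    """Target density f(x) = x e^{-x}, x > 0."""
    return x * math.exp(-x)

def g(x):
    """Proposal density g(x) = a e^{-a x}, x > 0."""
    return A * math.exp(-A * x)

def sample_f(rng):
    """One draw from f by acceptance-rejection."""
    while True:
        u = rng.random()
        v = rng.random()
        y = -math.log(1.0 - u) / A      # Y ~ Exp(rate 1/2), i.e. y = -2 ln(1 - u)
        if v < f(y) / (C * g(y)):
            return y

rng = random.Random(2)
draws = [sample_f(rng) for _ in range(20000)]
sample_mean = sum(draws) / len(draws)   # f is the Gamma(2,1) density, whose mean is 2
```

The bound is tight at x = 1/(1-a) = 2, where f(2) = c g(2), so a point proposed there is always accepted.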

Acknowledgements: The example above is from Stat 340 Winter 2013 notes.

Summary of how to find the value of c

Let [math]\displaystyle{ h(x) = \frac {f(x)}{g(x)} }[/math], and then we have the following:

1. First, take the derivative of h(x) with respect to x and set it to zero to get the critical point x1;

2. Plug x1 into h(x) and get the value (or a function) of c, denoted c1;

3. Check the endpoints of x and sub the endpoints into h(x);

4. (if c1 is a value, then we can ignore this step) Since we want the smallest value of c such that [math]\displaystyle{ f(x) \leq c\cdot g(x) }[/math] for all x, we want the unknown parameter that minimizes c.

So we take the derivative of c1 with respect to the unknown parameter (i.e. k = unknown parameter) and set it to zero to get the value of k.

Then we substitute k back to get the value of c1. (Double check that [math]\displaystyle{ c_1 \geq 1 }[/math].)

5. Pick the maximum value of h(x) to be the value of c.

In the two examples above, we generate y from the proposal distribution g and v from the uniform distribution U(0,1), and figure out [math]\displaystyle{ c=max\frac {f(y)}{g(y)} }[/math]. If [math]\displaystyle{ v\lt \frac {f(y)}{c\cdot g(y)} }[/math], output y.

Summary of when to use the Acceptance-Rejection Method

1) When the inverse cdf cannot be computed or is too difficult to compute.

2) When f(x) can be evaluated, at least up to a normalizing constant.

3) When a constant c with [math]\displaystyle{ f(x)\leq c\cdot g(x) }[/math] for all x can be found.

4) When uniform draws are available.

Interpretation of 'C'

We can use the value of c to calculate the acceptance rate by '1/c'.

For instance, assume c=1.5, then we can tell that 66.7% of the points will be accepted (1/1.5=0.667).

Class 5 - Tuesday, May 21

Recall the semicircular density example from the last lecture. The following code generates the random variable required by that example.

- Code

>>close all

>>clear all

>>ii=1;

>>R=1; % Note: R is a constant that we can change,

% i.e. if we changed R=4 then we would have a density between -4 and 4

>>while ii<=1000 % loop until 1000 points have been accepted

u1 = rand;

u2 = rand;

y = R*(2*u2-1); % y ~ U(-R,R)

if (1-u1^2)>=(2*u2-1)^2 % acceptance condition derived above

x(ii) = y;

ii = ii + 1; % Note for beginner programmers: this step increases

% the ii value for next time through the while loop

end

end

>>hist(x,20)

MATLAB tip: hist(x,n) plots a histogram of the variable x, where n is the number of bars (bins) in the graph.

Discrete Examples

- Example 1

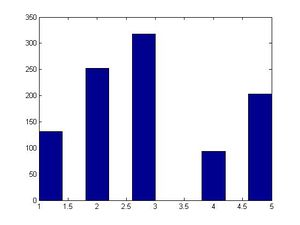

Generate random variable [math]\displaystyle{ X }[/math] according to p.m.f

[math]\displaystyle{ \begin{align}
P(X=1) &= 0.15 \\
P(X=2) &= 0.25 \\
P(X=3) &= 0.3 \\
P(X=4) &= 0.1 \\
P(X=5) &= 0.2
\end{align} }[/math]

The discrete case is analogous to the continuous case. Suppose we want to generate an X that is a discrete random variable with pmf f(x)=P(X=x). Suppose we can already easily generate a discrete random variable Y with pmf g(x)=P(Y=x) such that [math]\displaystyle{ \sup_x \{f(x)/g(x)\} \leq c \lt \infty }[/math]. The following algorithm yields our X:

Step 1. Draw Y from the discrete uniform distribution on 1, 2, 3, 4 and 5, [math]\displaystyle{ Y \sim g }[/math].

Step 2. Draw [math]\displaystyle{ U \sim U(0,1) }[/math].

Step 3. If [math]\displaystyle{ U \leq \frac{f(Y)}{c \cdot g(Y)} }[/math], then X = Y ;

Else return to Step 1.

How do we compute c? Recall that c can be found by maximizing the ratio [math]\displaystyle{ \frac{f(x)}{g(x)} }[/math]. Note that this is different from maximizing [math]\displaystyle{ f(x) }[/math] and [math]\displaystyle{ g(x) }[/math] independently of each other and then taking the ratio to find c.

- [math]\displaystyle{ c = max \frac{f(x)}{g(x)} = \frac {0.3}{0.2} = 1.5 }[/math]

- [math]\displaystyle{ \frac{p(x)}{cg(x)} = \frac{p(x)}{1.5*0.2} = \frac{p(x)}{0.3} }[/math]

Note: The U drawn in Step 2 is independent of the Y drawn in Step 1. The constant c is an indicator of the rejection rate: the larger c is, the more points are rejected.

In this acceptance-rejection example for a pmf, the uniform proposal assigns the same probability to every value, and there are 5 possible values (1,2,3,4,5), so g(x) is 0.2

Remember that we always want to choose [math]\displaystyle{ cg }[/math] to be equal to or greater than [math]\displaystyle{ f }[/math], but as close as possible.

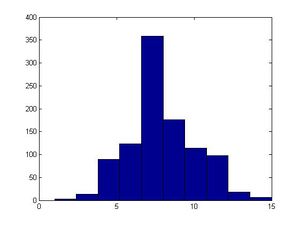

- Code for example 1

>>close all

>>clear all

>>p=[.15 .25 .3 .1 .2]; % This is a vector holding the pmf values

>>ii=1;

>>while ii <= 1000

y=unidrnd(5);

u=rand;

if u<= p(y)/0.3 % c*g(y) = 1.5*0.2 = 0.3

x(ii)=y;

ii=ii+1;

end

end

>>hist(x)

unidrnd(k) draws from the discrete uniform distribution of integers [math]\displaystyle{ 1,2,3,...,k }[/math]. If this function is not built in to your MATLAB, then a simple transformation of rand, such as ceil(k*rand), works like the unidrnd(k) function.

The acceptance rate is [math]\displaystyle{ \frac {1}{c} }[/math], so the lower the c, the more efficient the algorithm. Theoretically, c equals 1 is the best case because all samples would be accepted; however it would only be true when the proposal and target distributions are exactly the same, which would never happen in practice.

For example, if c = 1.5, the acceptance rate would be [math]\displaystyle{ \frac {1}{1.5}=\frac {2}{3} }[/math]. Thus, in order to generate 1000 random values, about 1500 iterations would be required on average.

The histogram shows 1000 random values drawn from f(x); the more random values we generate, the closer the relative frequencies are to the stated probabilities.
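To see the frequencies approach the stated probabilities, Example 1 can also be sketched in Python (an illustration alongside the MATLAB code, not a replacement for it). The dictionary layout, the seed, and the sample size of 50000 are assumptions chosen for this sketch.

```python
import random

p = {1: 0.15, 2: 0.25, 3: 0.30, 4: 0.10, 5: 0.20}   # target pmf f
g = 1.0 / len(p)                                     # uniform proposal on 1..5
c = max(px / g for px in p.values())                 # 0.3 / 0.2 = 1.5

def sample_x(rng):
    """One draw from the pmf p by acceptance-rejection."""
    while True:
        y = rng.randint(1, 5)        # Y ~ discrete uniform on {1,...,5}
        u = rng.random()             # U ~ U(0,1), independent of Y
        if u <= p[y] / (c * g):
            return y

rng = random.Random(3)
n = 50000
counts = {k: 0 for k in p}
for _ in range(n):
    counts[sample_x(rng)] += 1
freq = {k: counts[k] / n for k in p}  # relative frequencies, to compare with p
```

Comparing `freq` with `p` entry by entry plays the same role as reading the histogram.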

- Example 2

p(x=1)=0.1

p(x=2)=0.3

p(x=3)=0.6

Let g be the uniform distribution of 1, 2, or 3

g(x)= 1/3

[math]\displaystyle{ c=max(p_{x}/g(x))=0.6/(1/3)=1.8 }[/math]

1. y~g

2. u~U(0,1)

3. If [math]\displaystyle{ U \leq \frac{f(y)}{cg(y)} }[/math], set x = y. Else go to 1.

- Code for example 2

>>close all

>>clear all

>>p=[.1 .3 .6];

>>ii=1;

>>while ii <= 1000

y=unidrnd(3);

u=rand;

if u<= p(y)/0.6 % c*g(y) = 1.8*(1/3) = 0.6

x(ii)=y;

ii=ii+1;

end

end

>>hist(x)

- Example 3

[math]\displaystyle{ p_{x}=e^{-3}3^{x}/x! , x\geq 0 }[/math]

(Poisson distribution)

Try the first few [math]\displaystyle{ p_{x} }[/math]'s: .0498 .149 .224 .224 .168 .101 .0504 .0216 .0081 .0027

Use the geometric distribution for [math]\displaystyle{ g(x) }[/math];

[math]\displaystyle{ g(x)=p(1-p)^{x} }[/math], choose p=0.25

Look at [math]\displaystyle{ p_{x}/g(x) }[/math] for the first few numbers: .199 .797 1.59 2.12 2.12 1.70 1.13 .647 .324 .144

We want [math]\displaystyle{ c=max(p_{x}/g(x)) }[/math] which is approximately 2.12

1. Generate [math]\displaystyle{ U_{1} \sim U(0,1); U_{2} \sim U(0,1) }[/math]

2. [math]\displaystyle{ j = \lfloor \frac{ln(U_{1})}{ln(.75)} \rfloor; }[/math] (this generates j from the geometric distribution g by the inverse-transform method)

3. if [math]\displaystyle{ U_{2} \lt \frac{p_{j}}{cg(j)} }[/math], set X = j, else go to step 1.
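The three steps above can be sketched in Python (the notes use MATLAB). Here c is computed from the pmfs instead of using the rounded 2.12, so that c g(x) covers p_x exactly. Scanning only x = 0..49 for the maximum is an assumption, reasonable because the ratio shrinks quickly for large x; all names are illustrative.

```python
import math
import random

LAM = 3.0      # Poisson mean from the example
P = 0.25       # geometric success probability from the example

def poisson_pmf(x):
    return math.exp(-LAM) * LAM ** x / math.factorial(x)

def geom_pmf(x):
    return P * (1.0 - P) ** x

# Assumed scan range: the ratio decreases for large x, so x = 0..49 is enough here.
c = max(poisson_pmf(x) / geom_pmf(x) for x in range(50))

def sample_poisson(rng):
    """One Poisson(3) draw by acceptance-rejection with a geometric proposal."""
    while True:
        u1 = 1.0 - rng.random()      # lies in (0, 1], which avoids log(0)
        u2 = rng.random()
        j = math.floor(math.log(u1) / math.log(1.0 - P))   # J ~ geometric on 0,1,2,...
        if u2 < poisson_pmf(j) / (c * geom_pmf(j)):
            return j

rng = random.Random(4)
draws = [sample_poisson(rng) for _ in range(20000)]
sample_mean = sum(draws) / len(draws)   # should be close to the Poisson mean, 3
```

The maximum ratio is attained at both x = 3 and x = 4, which matches the two 2.12 entries in the table of ratios.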

- Example 4 (Hypergeometric & Binomial)

Suppose f(x) is hypergeometrically distributed: given 10 white balls and 5 red balls, select 3 balls without replacement and let X be the number of red balls selected.

Choose g(x) to be the binomial distribution Bin(3, 1/3). Find the rejection constant, c.

Solution:

For hypergeometric: [math]\displaystyle{ P(X=0) =\binom{10}{3}/\binom{15}{3} =0.2637, P(x=1)=\binom{10}{2} * \binom{5}{1} /\binom{15}{3}=0.4945, P(X=2)=\binom{10}{1} * \binom{5}{2} /\binom{15}{3}=0.2198, }[/math]

[math]\displaystyle{ P(X=3)=\binom{5}{3}/\binom{15}{3}= 0.02198 }[/math]

For Binomial g(x): P(X=0) = (2/3)^3=0.2963; P(X=1)= 3*(1/3)*(2/3)^2 = 0.4444, P(X=2)=3*(1/3)^2*(2/3)=0.2222, P(X=3)=(1/3)^3=0.03704

Find the value of f/g for each X

X=0: 0.8901; X=1: 1.1127; X=2: 0.9891; X=3: 0.5934

Choose the maximum which is c=1.1127

Here the maximum of f(x), 0.4945, and the maximum of g(x), 0.4444, both occur at x=1, which is also where the ratio f(x)/g(x) is largest, so c=1.1127. In general the ratio of the two separate maxima need not cover every point of f(x); c must be the maximum of the ratio f(x)/g(x), so that the graph of c*g(x) lies on or above f(x) everywhere while keeping the rejection rate as low as possible.
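The ratios in Example 4 can be recomputed without intermediate rounding. The following Python sketch does this with `math.comb` (available in Python 3.8 and later); the function names and default arguments are assumptions for illustration.

```python
from math import comb

def hypergeom_pmf(x, white=10, red=5, draws=3):
    """P(X = x): number of red balls in `draws` picks without replacement."""
    return comb(red, x) * comb(white, draws - x) / comb(white + red, draws)

def binom_pmf(x, n=3, p=1.0 / 3.0):
    """P(X = x) for the Bin(n, p) proposal."""
    return comb(n, x) * p ** x * (1.0 - p) ** (n - x)

ratios = {x: hypergeom_pmf(x) / binom_pmf(x) for x in range(4)}
c = max(ratios.values())         # rejection constant, attained at x = 1
acceptance_rate = 1.0 / c        # roughly 0.90 for this pair of distributions
```

Computed this way, c is about 1.1126; the 1.1127 quoted above comes from dividing the rounded probabilities and is a slightly larger, still valid, bound.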

Limitation: If the shape of the proposed distribution g is very different from the target distribution f, then the rejection rate will be high (High c value). Computationally, the algorithm is always right; however it is inefficient and requires many iterations.

Here is an example:

In the above example, we need to move c*g(x) to the peak of f to cover the whole f. Thus c will be very large and 1/c will be small.

The higher the rejection rate, the more points will be rejected, which makes the acceptance rate low and leads to very time consuming sampling.

More on rejection/acceptance rate: 1/c is the acceptance rate. As c decreases (note: the minimum value of c is 1), the acceptance rate increases. In Example 1, 1/c=1/1.5≈66.67%. Around 67% of points generated will be accepted.

Acceptance-Rejection Method

Problem: The CDF is not invertible or it is difficult to find the inverse.

Plan:

- Draw y~g(.)

- Draw u~Unif(0,1)

- If [math]\displaystyle{ u\leq \frac{f(y)}{cg(y)} }[/math] then set x=y. Else return to the first step

x will have the desired distribution.

Matlab Example

close all

clear all

ii=1;

R=1;

while ii<=1000

u1 = rand;

u2 = rand;

y = R*(2*u2-1);

if (1-u1^2)>=(2*u2-1)^2

x(ii) = y;

ii = ii + 1;

end

end

hist(x,20)

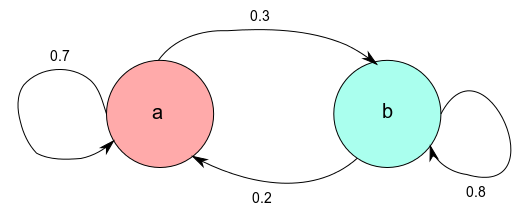

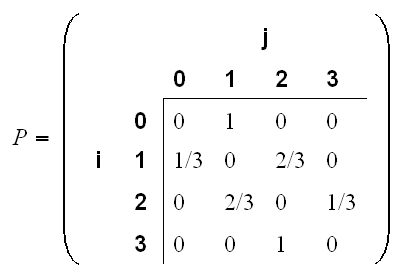

Recall that,