stat340s13

<div style = "align:left; background:#00ffff; font-size: 150%">

If you use ideas, plots, text, code and other intellectual property developed by someone else in your ''wikicoursenote'' contribution, you have to cite the original source. If you copy a sentence or a paragraph from work done by someone else, in addition to citing the original source you have to use quotation marks to identify the scope of the copied material. Evidence of copying or plagiarism will cause a failing mark in the course.

Example of citing the original source:

Assumptions Underlying Principal Component Analysis can be found here<ref>http://support.sas.com/publishing/pubcat/chaps/55129.pdf</ref>

</div>

==Important Notes==

<span style="color:#ff0000;font-size: 200%"> To distinguish between the material covered in class and additional material that you have added to the course, use the following convention. For anything that is not covered in the lecture, write:</span>

<div style = "align:left; background:#F5F5DC; font-size: 120%">

In the news recently was a story that captures some of the ideas behind PCA. Over the past two years, Scott Golder and Michael Macy, researchers from Cornell University, collected 509 million Twitter messages from 2.4 million users in 84 different countries. The data they used were words collected at various times of day, which they classified into two categories: positive emotion words and negative emotion words. They then used these data to evaluate subjects' moods at different times of day, while the subjects were in different parts of the world. They found that the subjects generally exhibited positive emotions in the mornings and late evenings, and negative emotions mid-day. They were able to "project their data onto a smaller dimensional space" using PCA. Their paper, "Diurnal and Seasonal Mood Vary with Work, Sleep, and Daylength Across Diverse Cultures," is available in the journal Science.<ref>http://www.pcworld.com/article/240831/twitter_analysis_reveals_global_human_moodiness.html</ref>

Assumptions Underlying Principal Component Analysis can be found here<ref>http://support.sas.com/publishing/pubcat/chaps/55129.pdf</ref>

</div>

== Introduction, Class 1 - Tuesday, May 7 ==

<!-- br tag for spacing-->

Lecture: <br />

001: T/Th 8:30-9:50am MC1085 <br />

002: T/Th 1:00-2:20pm DC1351 <br />

Tutorial: <br />

2:30-3:20pm Mon M3 1006 <br />

Office Hours: <br />

Friday at 10am, M3 4208 <br />

=== Midterm ===

Monday June 17, 2013 from 2:30pm-3:20pm

=== Final ===

Saturday August 10, 2013 from 7:30pm-10:00pm

=== TA(s): ===

=== Four Fundamental Problems ===

<!-- br tag for spacing-->

1 Classification: Given an input object X, we have a function that takes X and identifies which class (Y) it belongs to (discrete case). <br />

<font size="3">i.e. taking the value of x, we can predict y.</font>

(For example, if you have 40 images of oranges and 60 images of apples (represented by x), you can estimate a function that takes an image and states which type of fruit it shows. Note that Y is discrete in this case.) <br />

2 Regression: Same as classification, except that y is continuous rather than discrete. Results from regression are often used for prediction, forecasting, etc. (Examples: stock prices, height, weight.) <br />

(A simple exercise might be investigating the hypothesis that higher levels of education cause higher levels of income.) <br />

3 Clustering: Use common features of objects to group them into clusters (in this case, x is given and y is unknown; for example, clustering by province to measure the average height of Canadian men). <br />

4 Dimensionality Reduction (also known as Feature extraction, Manifold learning): Used when we have a variable in a high-dimensional space and we want to reduce its dimension. <br />

=== Applications ===

*Other course material on: http://wikicoursenote.com/wiki/

*Log on to both Learn and wikicoursenote frequently.

*Email all questions and concerns to UWStat340@gmail.com. Do not use your personal email address! Do not email the instructor or TAs about the class directly at their personal accounts!

'''Wikicourse note (complete at least 12 contributions to get 10% of final mark):'''

When applying for an account in the wikicourse note, please use your Quest ID as your login name and your uwaterloo email as the registered email. This is important, as the Quest ID will be used to identify the students who make the contributions.

Example:<br/>

User: questid<br/>

'''As a technical/editorial contributor''': Make contributions within 1 week and do not copy the notes on the blackboard.

''All contributions are now considered general contributions; you must contribute to 50% of lectures for full marks.''

*A general contribution can be correctional (fixing mistakes) or technical (expanding content, adding examples, etc.), but at least half of your contributions should be technical for full marks.

Do not submit copyrighted work without permission; cite original sources.

Each time you make a contribution, check mark the table. Marks are calculated on an honour system, although there will be random verifications. If you are caught claiming to contribute but have not, you will not be credited.

'''Wikicoursenote contribution form''' : https://docs.google.com/forms/d/1Sgq0uDztDvtcS5JoBMtWziwH96DrBz2JiURvHPNd-xs/viewform

- you can submit your contributions multiple times.<br />

- you will be able to edit the response right after submitting<br />

- send email to make changes to an old response : uwstat340@gmail.com<br />

- Markov Chain Monte Carlo

==Class 2 - Thursday, May 9==

===Generating Random Numbers===

==== Introduction ====

Simulation is the imitation of a process or system over time. Computational power has made it possible to use simulation studies to analyze the models used to describe a situation.

In order to perform a simulation study, we should:

<br /> 1 Use a computer to generate (pseudo*) random numbers (rand in MATLAB).<br>

2 Use these numbers to generate values of random variables from distributions: for example, express a variable in terms of a uniform u ~ U(0,1).<br>

3 Using the concept of discrete events, show how the random variables can be used to generate the behavior of a stochastic model over time. (Note: a stochastic model is the opposite of a deterministic model; there are several directions in which the process can evolve.)<br>

4 After repeatedly generating the behavior of the system, obtain estimators and other quantities of interest.<br>

The building block of a simulation study is the ability to generate a random number, that is, a value from a random variable uniformly distributed on (0,1). There are many different methods of generating a random number: <br>

<br><font size="3">Physical Method: Roulette wheel, lottery balls, dice rolling, card shuffling, etc. <br>

<br>Numerically/Arithmetically: Use a computer to successively generate pseudorandom numbers. The <br />sequence of numbers can appear to be random; however, the numbers are deterministically calculated by an <br />equation, which is why they are called pseudorandom. <br></font>

(Source: Ross, Sheldon M. Simulation. San Diego: Academic Press, 1997. Print.)

*We use the prefix pseudo because a computer generates random numbers with algorithms, so the generated numbers are not truly random; hence the term pseudo-random numbers.

In general, a deterministic model produces specific results given certain inputs by the model user, in contrast with a '''stochastic''' model, which encapsulates randomness and probabilistic events.

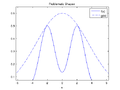

[[File:Det_vs_sto.jpg]] | |||

<br>A computer cannot generate truly random numbers because computers can only run algorithms, which are deterministic in nature. They can, however, generate Pseudo Random Numbers<br> | |||

'''Pseudo Random Numbers''' are the numbers that seem random but are actually determined by a relative set of original values. It is a chain of numbers pre-set by a formula or an algorithm, and the value jump from one to the next, making it look like a series of independent random events. The flaw of this method is that, eventually the chain returns to its initial position and pattern starts to repeat, but if we make the number set large enough we can prevent the numbers from repeating too early. Although the pseudo random numbers are deterministic, these numbers have a sequence of value and all of them have the appearances of being independent uniform random variables. Being deterministic, pseudo random numbers are valuable and beneficial due to the ease to generate and manipulate. | |||

When people | When people repeat the test many times, the results will be the closed express values, which make the trials look deterministic. However, for each trial, the result is random. So, it looks like pseudo random numbers. | ||

So, it looks like pseudo random numbers. | |||
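The deterministic nature of pseudo random numbers is easy to see in practice: re-seeding a generator reproduces exactly the same "random" sequence. Below is a minimal Python sketch. The course itself uses MATLAB, and Python's `random` module appears here only so the snippet is self-contained. The helper name `draws` and the seeds 340/341 are arbitrary illustrative choices.

```python
import random

def draws(seed, n=5):
    """Return n pseudo random numbers in [0, 1) from a generator seeded with `seed`."""
    rng = random.Random(seed)          # a separate generator with its own internal state
    return [rng.random() for _ in range(n)]

first = draws(340)
second = draws(340)   # same seed: exactly the same sequence again
other = draws(341)    # different seed: a different sequence

print(first == second)  # True
print(first == other)   # False
```

Each number looks random on its own, but anyone who knows the seed can regenerate the whole sequence. This is also why simulations are usually run with a fixed seed when results must be reproducible.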

==== Mod ====

Let <math>n \in \N</math> and <math>m \in \N^+</math>, then by the Division Algorithm,

<math>\exists q, \, r \in \N \;\text{with}\; 0\leq r < m, \; \text{s.t.}\; n = mq+r</math>,

where <math>q</math> is called the quotient and <math>r</math> the remainder. Hence we can define a binary function

<math>\mod : \N \times \N^+ \rightarrow \N </math> given by <math>r:=n \mod m</math>, which returns the remainder after division by m.

<br />

Generally, mod means taking the remainder after division by m.

<br />

We say that n is congruent to r mod m if n = mq + r, where q is an integer.

The values of <math>n \mod m</math> are between 0 and m-1. <br />

If y = ax + b with <math>0 \leq b < a</math>, then <math>b:=y \mod a</math>. <br />

'''Example 1:'''<br />

<math>30 = 4 \cdot 7 + 2</math><br />

<math>2 := 30\mod 7</math><br />

<br />

<math>25 = 8 \cdot 3 + 1</math><br />

<math>1 := 25\mod 3</math><br />

<br />

<math>-3=5\cdot (-1)+2</math><br />

<math>2:=-3\mod 5</math><br />

<br />

'''Example 2:'''<br />

If <math>23 = 3 \cdot 6 + 5</math> <br />

Then equivalently, <math>5 := 23\mod 6</math><br />

<br />

If <math>31 = 31 \cdot 1</math> <br />

Then equivalently, <math>0 := 31\mod 31</math><br />

<br />

If <math>-37 = 40\cdot (-1)+ 3</math> <br />

Then equivalently, <math>3 := -37\mod 40</math><br />

'''Example 3:'''<br />

<math>77 = 3 \cdot 25 + 2</math><br />

<math>2 := 77\mod 3</math><br />

<br />

<math>25 = 25 \cdot 1 + 0</math><br />

<math>0 := 25\mod 25</math><br />

<br />

'''Note:''' <math>\mod</math> here is different from the modulo congruence relation in <math>\Z_m</math>, which is an equivalence relation instead of a function.

The modulo operation is useful for determining whether an integer divided by another integer leaves a non-zero remainder. The integers involved must satisfy <math>n = mq + r</math>, where <math>m</math>, <math>r</math>, <math>q</math>, and <math>n</math> are all integers and <math>0 \leq r < m</math>. The same rule applies when <math>n</math> (and hence <math>q</math>) is negative, as long as <math>0 \leq r < m</math>; see the third case of Example 1 and of Example 2.
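The worked examples above can be checked mechanically. The sketch below is in Python and is not part of the course's MATLAB material. It relies on the fact that Python's `%` and `divmod` return a remainder with `0 <= r < m` whenever `m > 0`, which is the same convention as MATLAB's `mod`; MATLAB's `rem(-3,5)`, by contrast, returns -3. The list name `examples` is just an illustrative choice.

```python
# Python's % returns a remainder r with 0 <= r < m whenever m > 0, which matches
# the definition n = m*q + r used above, including the negative cases.
examples = [(30, 7), (25, 3), (-3, 5), (23, 6), (31, 31), (-37, 40), (77, 3), (25, 25)]

for n, m in examples:
    q, r = divmod(n, m)               # floor quotient q and remainder r
    assert n == m * q + r and 0 <= r < m
    print(f"{n} = {m}*({q}) + {r}  ->  {n} mod {m} = {r}")
```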

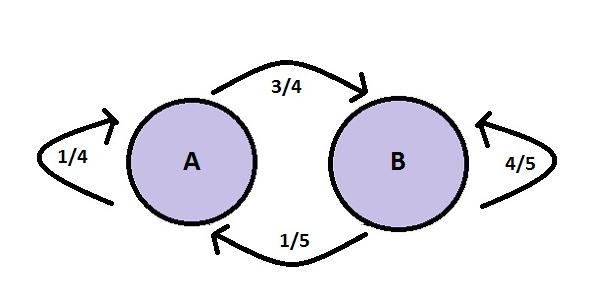

==== Mixed Congruential Algorithm ==== | |||

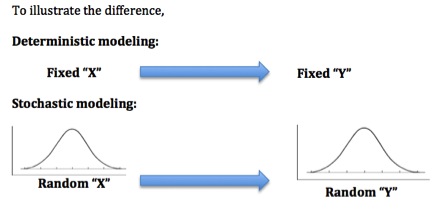

We define the Linear Congruential Method to be <math>x_{k+1}=(ax_k + b) \mod m</math>, where <math>x_k, a, b, m \in \N, \;\text{with}\; a, m \neq 0</math>. Given a '''seed''' (i.e. an initial value <math>x_0 \in \N</math>), we can obtain values for <math>x_1, \, x_2, \, \cdots, x_n</math> inductively. The Multiplicative Congruential Method, invented by Berkeley professor D. H. Lehmer, may also refer to the special case where <math>b=0</math> and the Mixed Congruential Method is case where <math>b \neq 0</math> <br />. Their title as "mixed" arises from the fact that it has both a multiplicative and additive term. | |||

An interesting fact about '''Linear Congruential Method''' is that it is one of the oldest and best-known pseudo random number generator algorithms. It is very fast and requires minimal memory to retain state. However, this method should not be used for applications that require high randomness. They should not be used for Monte Carlo simulation and cryptographic applications. (Monte Carlo simulation will consider possibilities for every choice of consideration, and it shows the extreme possibilities. This method is not precise enough.)<br /> | |||

[[File:Linear_Congruential_Statment.png|600px]] "Source: STAT 340 Spring 2010 Course Notes"

'''First consider the following algorithm'''<br />

<math>x_{k+1}=x_{k} \mod m</math> <br />

Exercise: if <math>x_{0}=5</math> and <math>x_{n}=3x_{n-1} \mod 150</math>, find <math>x_{1},x_{8},x_{9}</math>. <br />

<math>x_{n}=(3^n \cdot 5) \mod 150</math> <br />

<math>x_{1}=15,\ x_{8}=105,\ x_{9}=15</math> <br />
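The exercise can be checked directly. The minimal Python sketch below is illustrative only; the course itself works in MATLAB. It iterates <math>x_n = 3x_{n-1} \mod 150</math> from <math>x_0 = 5</math> and compares the result with the closed form <math>3^n \cdot 5 \mod 150</math>, computed with Python's built-in three-argument `pow`.

```python
# x_n = 3 * x_{n-1} mod 150 with seed x_0 = 5
a, m = 3, 150
xs = [5]                          # xs[n] holds x_n
for n in range(1, 10):
    xs.append((a * xs[-1]) % m)

print(xs[1], xs[8], xs[9])        # 15 105 15

# Closed form x_n = 3^n * 5 mod 150, using Python's built-in modular power pow(base, exp, mod)
assert all(xs[n] == (pow(3, n, m) * 5) % m for n in range(10))
```

Iterating the rule gives <math>x_1 = 3 \cdot 5 = 15</math>. From there the sequence cycles through 15, 45, 135, 105.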

'''Example'''<br />

<math>\text{Let }x_{0}=10,\,m=3</math><br />

:<math>\begin{align}
x_{1} &{}= 10 &{}\mod{3} = 1 \\
x_{2} &{}= 1 &{}\mod{3} = 1 \\
x_{3} &{}= 1 &{}\mod{3} =1 \\
\end{align}</math>

<math>\ldots</math><br />

Excluding <math>x_{0}</math>, this example generates a series of ones. In general, excluding <math>x_{0}</math>, the algorithm above always generates a constant sequence whose value is less than <math>m</math>. Hence, it has a period of 1. The '''period''' is the length of the sequence before it starts repeating. We want a large period with a sequence that looks random. We can modify this algorithm to form the Multiplicative Congruential Algorithm: <br />

<math>x_{k+1}=(a \cdot x_{k} + b) \mod m </math> (a little tip: <math>(a \cdot b)\mod c = \big((a\mod c)\cdot(b\mod c)\big)\mod c</math>)<br/>

'''Example'''<br />

This example generates a sequence with a repeating cycle of two integers.<br />
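The period of any sequence of the form <math>x_{k+1} = f(x_k)</math> can be found by brute force: record the index at which each value first appears, and stop at the first repeat. Below is a minimal Python sketch. `period` is a hypothetical helper, not a MATLAB or course function, and the examples reuse generators that appear in this section.

```python
def period(step, seed, max_steps=100_000):
    """Cycle length eventually reached by x_{k+1} = step(x_k), starting from x_0 = seed."""
    first_seen = {}                  # value -> index k at which it first appeared
    x, k = seed, 0
    while x not in first_seen:
        if k > max_steps:
            raise ValueError("no repeat found within max_steps")
        first_seen[x] = k
        x, k = step(x), k + 1
    return k - first_seen[x]         # distance between the two visits to x

print(period(lambda x: x % 3, 10))          # 1: the sequence 10, 1, 1, 1, ...
print(period(lambda x: (3 * x) % 150, 5))   # 4: cycles through 15, 45, 135, 105
print(period(lambda x: (13 * x) % 31, 1))   # 30
```

Because each state has only <math>m</math> possible values, the loop always terminates within <math>m+1</math> steps for a congruential generator. `max_steps` is only a safety net for other step functions.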

(If we choose the numbers properly, we can get a sequence of "random-looking" numbers. How do we find the values of <math>a,b,</math> and <math>m</math>? At the very least, <math>m</math> should be a very '''large''', preferably prime, number. The larger <math>m</math> is, the longer the sequence can run before repeating. In practice we rarely pick these values ourselves: in MATLAB, the command rand() generates random numbers that are uniformly distributed on the interval (0,1).) Early versions of MATLAB used <math>a=7^5, b=0, m=2^{31}-1</math>, as recommended in the 1988 paper "Random Number Generators: Good Ones Are Hard To Find" by Stephen K. Park and Keith W. Miller. (The important part is that <math>m</math> should be '''large and prime'''.)<br />

Note: <math>\frac {x_{n+1}}{m-1}</math> is an approximation to the value of a U(0,1) random variable.<br />
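Here is a minimal Python sketch of the multiplicative generator with the Park and Miller parameters <math>a = 7^5 = 16807</math>, <math>b = 0</math>, <math>m = 2^{31}-1</math>. It is an illustration, not MATLAB's current `rand`. The same parameters define `std::minstd_rand0` in the C++ standard library, and the C++ standard specifies that its 10000th output from the default seed 1 is 1043618065. That value serves as a convenient correctness check here. The function name `minimal_standard` is our own.

```python
# Multiplicative congruential generator with the Park-Miller parameters:
# x_{k+1} = a * x_k mod m, with a = 7^5 = 16807, b = 0 and m = 2^31 - 1 (a prime)
A = 7 ** 5
M = 2 ** 31 - 1

def minimal_standard(seed, n):
    """Return x_1, ..., x_n generated from the seed x_0."""
    xs, x = [], seed
    for _ in range(n):
        x = (A * x) % M            # Python integers do not overflow, so no tricks needed
        xs.append(x)
    return xs

xs = minimal_standard(seed=1, n=10_000)
print(xs[:3])    # [16807, 282475249, 1622650073]
print(xs[-1])    # 1043618065
u = [x / (M - 1) for x in xs[:5]]   # normalize by m - 1, as in the note above
print(u)
```

In languages with fixed-width integers, the product `A * x` can overflow 32 bits, so implementations there use 64-bit arithmetic or Schrage's decomposition. Python sidesteps the issue.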

'''MatLab Instruction for Multiplicative Congruential Algorithm:'''<br />

''(Note: <br />

1. Keep repeating this command over and over again and you will get random numbers; this is the basic idea behind how a command like rand works in a computer. <br />

2. There is a function in MATLAB called '''rand''' that generates a random number between 0 and 1. <br />

For example, in MATLAB, we can use '''rand(1,1000)''' to generate 1000 numbers between 0 and 1. This is a vector with 1 row and 1000 columns, in which each entry is a random number between 0 and 1.<br />

3. If we would like to generate 1000 or more numbers, we could use a '''for''' loop<br /><br />

''(Note on MATLAB commands: <br />

2. close all: closes all figures.<br />

3. who: displays all defined variables.<br />

4. clc: clears screen.<br />

5. ; : prevents the results from printing.<br />

6. disttool: displays an interactive tool for plotting probability distributions.<br /><br />

<pre style="font-size:16px">

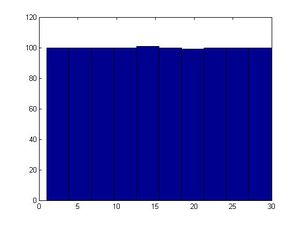

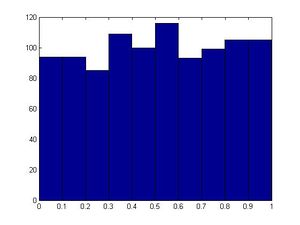

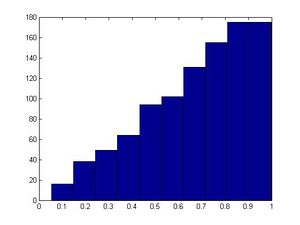

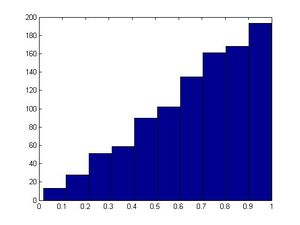

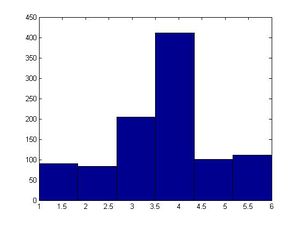

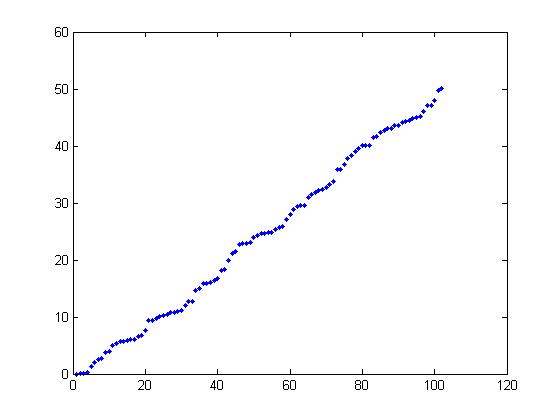

This algorithm involves three integer parameters <math>a, b,</math> and <math>m</math> and an initial value, <math>x_0</math>, called the '''seed'''. A sequence of numbers is defined by <math>x_{k+1} = (ax_k+ b) \mod m</math>. <br />

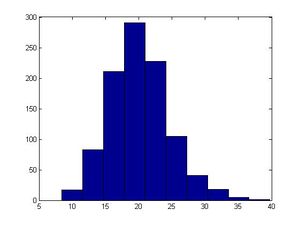

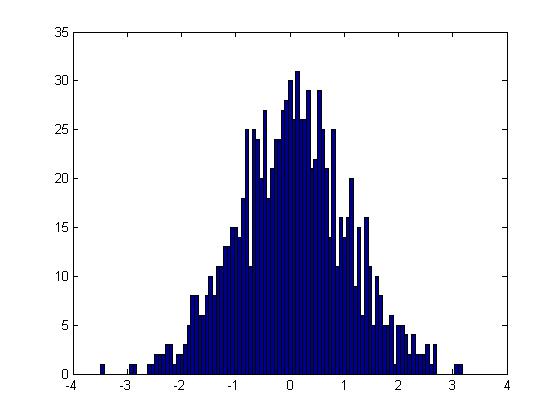

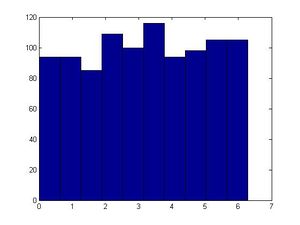

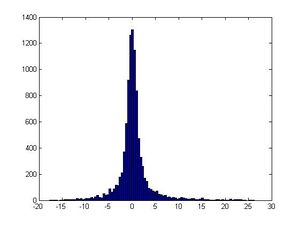

Note: For some poor choices of <math>a</math> and <math>b</math>, the histogram may not look uniformly distributed.<br />

Note: In MATLAB, hist(x) generates a histogram of the values in x. Use this function after you run the code to check the distribution of the generated sample.

'''Example''': <math>a=13, b=0, m=31</math><br />

The first 30 numbers in the sequence are a permutation of the integers from 1 to 30, and then the sequence repeats itself, so '''it is important to choose <math>m</math> large''' so that the sequence does not start repeating too early. Values are between <math>0</math> and <math>m-1</math>. If the values are normalized by dividing by <math>m-1</math>, the results are '''approximately''' uniformly distributed numbers in the interval [0,1]. There is only a finite number of possible values (30 in this case). In MATLAB, you can use the function "hist(x)" to see whether the values look uniformly distributed. We saw that the values 1 to 30 had the same frequency in the histogram, so the generated values look uniformly distributed. <br />
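The claims about the example <math>a=13, b=0, m=31</math> with seed <math>x_0=1</math> can be checked with a small Python sketch. It plays the role of the MATLAB loop plus `hist`. `lcg` is an illustrative helper name, and the bin counts stand in for the histogram.

```python
def lcg(a, b, m, seed, n):
    """Return x_1, ..., x_n from x_{k+1} = (a*x_k + b) mod m with x_0 = seed."""
    xs, x = [], seed
    for _ in range(n):
        x = (a * x + b) % m
        xs.append(x)
    return xs

xs = lcg(a=13, b=0, m=31, seed=1, n=60)
first_period = xs[:30]
print(sorted(first_period) == list(range(1, 31)))  # True: a permutation of 1..30
print(xs[30:] == first_period)                     # True: then the sequence repeats

# Normalize by m - 1 = 30 and count values in the bins (0, 0.1], (0.1, 0.2], ..., (0.9, 1]
u = [x / 30 for x in first_period]
counts = [sum(1 for v in u if i / 10 < v <= (i + 1) / 10) for i in range(10)]
print(counts)  # [3, 3, 3, 3, 3, 3, 3, 3, 3, 3]
```

Over one full period, every value from 1 to 30 appears exactly once, so the histogram is perfectly flat. This is also why only 30 distinct values are possible.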

If <math>x_0=1</math>, then <br />

x_{2} &{}= 3 \times 1 + 2 \mod{4} = 1 \\
\end{align}</math><br />

Another example: <math>a=3, b=2, m=5, x_0=1</math>

etc.
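The notes leave the second example as "etc." The minimal Python sketch below computes both small examples with <math>a=3, b=2, x_0=1</math>. The values are our own computation of <math>x_{k+1} = (3x_k + 2) \mod m</math>, not taken from the lecture, and `lcg` is an illustrative helper name.

```python
def lcg(a, b, m, seed, n):
    """Return x_1, ..., x_n from x_{k+1} = (a*x_k + b) mod m with x_0 = seed."""
    xs, x = [], seed
    for _ in range(n):
        x = (a * x + b) % m
        xs.append(x)
    return xs

print(lcg(3, 2, 4, seed=1, n=6))  # [1, 1, 1, 1, 1, 1]        stuck at 1: period 1
print(lcg(3, 2, 5, seed=1, n=8))  # [0, 2, 3, 1, 0, 2, 3, 1]  period 4
```

With <math>m=4</math> the seed is a fixed point, because <math>3 \cdot 1 + 2 = 5 \equiv 1</math>. With <math>m=5</math> the sequence visits four of the five residues before repeating.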

<hr/>

<p style="color:red;font-size:16px;">FAQ:</p>

1. Why is it 1 to 30 instead of 0 to 30 in the example above?<br>

''<math>b = 0</math>, so in order to have <math>x_k</math> equal to 0, <math>x_{k-1}</math> must be 0 (since <math>a=13</math> is relatively prime to 31). However, the seed is 1. Hence, we will never observe 0 in the sequence.''<br>

Alternatively, {0} and {1,2,...,30} are the two orbits of left multiplication by 13 on <math>\Z_{31}</math>.<br>

'''Examples:[From Textbook]'''<br />

<math>\text{If }x_0=3 \text{ and } x_n=(5x_{n-1}+7)\mod 200</math>, <math>\text{find }x_1,\cdots,x_{10}</math>.<br />

'''Solution:'''<br />

<math>\begin{align}

'''Comments:'''<br />

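Matlab code:

<pre style="font-size:16px">
a = 5;              % multiplier
b = 7;              % increment
m = 200;            % modulus
n = 1000;           % number of values to generate
x = zeros(1, n);    % preallocate the sequence
x(1) = 3;           % seed x_0
for ii = 2:n
    x(ii) = mod(a*x(ii-1) + b, m);   % x_k = (a*x_{k-1} + b) mod m
end
size(x)             % displays 1 1000
hist(x)             % histogram of the generated values
</pre>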

Typically, it is good to choose <math>m</math> such that <math>m</math> is large and prime. Careful selection of the parameters <math>a</math> and <math>b</math> also helps generate relatively "random" output values, in which patterns are harder to identify. For example, when we used a composite (non-prime) number such as 40 for <math>m</math>, our results did not resemble a uniform distribution.<br />

The computed values are between 0 and <math>m-1</math>. If the values are normalized by dividing by '''<math>m-1</math>''', the results are approximately uniformly distributed on the interval <math>\left[0,1\right]</math> (similar to sampling from a uniform distribution).<br />

From the example shown above, if we want to create a large group of random numbers, it is better to have a large, prime <math>m</math> so that the generated values do not repeat after only a few iterations. Note: the period for this example is 8, from <math>x_2</math> to <math>x_9</math>.<br />
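The textbook example <math>x_n = (5x_{n-1}+7) \mod 200</math> with <math>x_0 = 3</math> is small enough to check by hand. The Python sketch below is an illustration alongside the MATLAB code, not a replacement for it. It lists <math>x_1, \ldots, x_{10}</math> and confirms the period of 8.

```python
a, b, m, x = 5, 7, 200, 3          # parameters and seed x_0 = 3 from the textbook example
xs = [x]                            # xs[k] holds x_k
for k in range(1, 11):
    x = (a * x + b) % m
    xs.append(x)

print(xs[1:])   # [22, 117, 192, 167, 42, 17, 92, 67, 142, 117]
first = xs.index(xs[10])            # first index at which x_10's value appeared
print("period:", 10 - first)        # period: 8  (x_2 through x_9 then repeat)
```

Only 8 of the 200 possible values ever appear after <math>x_1</math>. A large <math>m</math> on its own does not guarantee a long period; the choice of <math>a</math> and <math>b</math> matters too.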

There has been research on how to choose parameters that give a sequence that looks uniform. Many programs give you the option to choose the seed; otherwise, the seed is chosen automatically by the computer.<br />

<span style="background:#F5F5DC">Theorem (extra knowledge)</span><br />

Let c (the increment, written b above) be a non-zero constant. Then, for any seed <math>x_0</math>, the LCG <math>x_{k+1}=(ax_k + c) \mod m</math> has the maximal period <math>m</math> if and only if<br />

(i) m and c are coprime;<br />

(ii) a-1 is divisible by every prime factor of m;<br />

(iii) if m is divisible by 4, then a-1 is also divisible by 4.<br />

We want our LCG to have a large cycle.

We call a cycle with m elements the maximal period.

We can make the cycle bigger by making m big and prime.

Recall: every integer greater than 1 can be written as a product of primes.

Define coprime: two numbers X and Y are coprime if they do not share any prime factors.

Example:<br />

<font size="3">X<sub>n</sub>=(15X<sub>n-1</sub> + 4) mod 7</font><br />

(i) m=7 and c=4 are coprime;<br />

(ii) a-1=14, which is divisible by 7 (the only prime factor of m);<br />

(iii) does not apply, since 7 is not divisible by 4.<br />
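This theorem is known as the Hull-Dobell theorem. Its three conditions can be checked mechanically and compared against a brute-force period count. The Python sketch below reads condition (iii) as "if m is divisible by 4, then a-1 is divisible by 4". The helper names `prime_factors`, `hull_dobell` and `period` are our own and are meant for small m only.

```python
from math import gcd

def prime_factors(n):
    """Set of distinct prime factors of n (trial division; fine for small n)."""
    factors, p = set(), 2
    while p * p <= n:
        while n % p == 0:
            factors.add(p)
            n //= p
        p += 1
    if n > 1:
        factors.add(n)
    return factors

def hull_dobell(a, c, m):
    """True if x_{k+1} = (a*x_k + c) mod m (c != 0) satisfies conditions (i)-(iii)."""
    return (
        gcd(m, c) == 1                                        # (i) m and c coprime
        and all((a - 1) % p == 0 for p in prime_factors(m))   # (ii)
        and (m % 4 != 0 or (a - 1) % 4 == 0)                  # (iii)
    )

def period(a, c, m, seed=0):
    """Brute-force cycle length of the LCG starting from the given seed."""
    seen, x, k = {}, seed, 0
    while x not in seen:
        seen[x] = k
        x, k = (a * x + c) % m, k + 1
    return k - seen[x]

print(hull_dobell(15, 4, 7), period(15, 4, 7))             # True 7   (the example above)
print(hull_dobell(5, 7, 200), period(5, 7, 200, seed=3))   # False 8  (the textbook example)
```

For the textbook generator, <math>a-1=4</math> is not divisible by 5, which is a prime factor of 200. Condition (ii) therefore fails, which matches the short period of 8.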

(The extra knowledge stops here)

In this part, I learned how to use R code to figure out the relationship between two integers, their quotient, and their remainder. When we use R to generate values over a range such as 1:1000, the histogram looks like a uniform distribution.

<div style="border:1px solid #cccccc;border-radius:10px;box-shadow: 0 5px 15px 1px rgba(0, 0, 0, 0.6), 0 0 200px 1px rgba(255, 255, 255, 0.5);padding:20px;margin:20px;background:#FFFFAD;">

<h4 style="text-align:center;">Summary of Multiplicative Congruential Algorithm</h4>

<p><b>Problem:</b> generate Pseudo Random Numbers.</p>

<b>Plan:</b>

<ol>

<li>Find integers <i>a</i>, <i>b</i>, <i>m</i> (a large prime) and <i>x<sub>0</sub></i> (the seed).</li>

<li><math>x_{k+1}=(ax_{k}+b) \mod m</math></li>

</ol>

<b>Matlab Instruction:</b>

</pre>

</div>

Another algorithm for generating pseudo random numbers is the multiply with carry (MWC) method. Its simplest form is similar to the linear congruential generator; the difference is that in the MWC algorithm the parameter b changes at every step. It works as follows: <br>

1.) x<sub>k+1</sub> = (ax<sub>k</sub> + b<sub>k</sub>) mod m <br>

2.) b<sub>k+1</sub> = floor((ax<sub>k</sub> + b<sub>k</sub>)/m) <br>

3.) set k to k + 1 and go to step 1

[http://www.javamex.com/tutorials/random_numbers/multiply_with_carry.shtml Source]
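The three steps above translate almost line for line into code. Below is a minimal Python sketch of this simplest MWC form. The parameters <math>a=6</math>, <math>m=10</math>, <math>x_0=3</math>, <math>b_0=1</math> are hypothetical and chosen only so the output can be checked by hand; they say nothing about the parameters used by practical MWC generators.

```python
def multiply_with_carry(a, m, x0, b0, n):
    """Return x_1, ..., x_n from the simple multiply-with-carry recurrence above:
    x_{k+1} = (a*x_k + b_k) mod m,  b_{k+1} = floor((a*x_k + b_k) / m)."""
    xs, x, b = [], x0, b0
    for _ in range(n):
        t = a * x + b          # the common quantity a*x_k + b_k from steps 1 and 2
        x, b = t % m, t // m   # new value and new carry
        xs.append(x)
    return xs

# Small hypothetical parameters, chosen for readability rather than quality
print(multiply_with_carry(a=6, m=10, x0=3, b0=1, n=8))  # [9, 5, 5, 3, 1, 8, 8, 2]
```

For example, the first step computes <math>6 \cdot 3 + 1 = 19</math>, giving <math>x_1 = 9</math> and carry <math>b_1 = 1</math>. Unlike an LCG, the same value of x can be followed by different values, because the carry is part of the state.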

=== Inverse Transform Method ===

Now that we know how to generate random numbers, we use these values to sample from distributions such as the exponential. However, to use this method easily, the probability distribution must have a cumulative distribution function (cdf) <math>F</math> with a tractable (that is, easily found) inverse <math>F^{-1}</math>.<br />

'''Theorem''': <br />

follows the distribution function <math>F\left(\cdot\right)</math>,

where <math>F^{-1}\left(u\right):=\inf F^{-1}\big(\left[u,+\infty\right)\big) = \inf\{x\in\R | F\left(x\right) \geq u\}</math> is the generalized inverse.<br />

'''Note''': <math>F</math> need not be invertible everywhere on the real line, but if it is, then the generalized inverse is the same as the inverse in the usual case. We only need the inverse to be defined on the range of F(x), [0,1].

'''Proof of the theorem:'''<br />

The generalized inverse satisfies the following: <br />

:<math>P(X\leq x)</math> <br />

<math>= P(F^{-1}(U)\leq x)</math> (since <math>X= F^{-1}(U)</math> by the inverse method)<br />

<math>= P(F(F^{-1}(U))\leq F(x))</math> (since <math>F</math> is monotonically increasing) <br />

<math>= P(U\leq F(x))</math> (since <math>F(F^{-1}(u)) = u</math> for continuous <math>F</math>; in general, <math>F^{-1}(u) \leq x \Leftrightarrow u \leq F(x)</math>)<br />

<math>= F(x)</math> (since <math>P(U\leq a)= a</math> for <math>U \sim U(0,1)</math> and <math>a \in [0,1]</math>)<br />

This is the c.d.f. of X. <br />

<br />

That is <math>F^{-1}\left(u\right) \leq x \Leftrightarrow u \leq F\left(x\right)</math><br />

Therefore, in order to generate a random variable X~F, we can generate U according to U(0,1) and then make the transformation x=<math> F^{-1}(U) </math> <br />

Note that we can apply the inverse on both sides in the proof of the inverse transform only because the cdf of X is monotonic. A monotonic function is one that is either non-decreasing for all x, or non-increasing for all x. This holds for all CDFs, since they are non-decreasing by definition. <br />

In short, the theorem tells us that we can use a random number <math>U \sim U(0,1)</math> to randomly sample a point on the CDF of X, then apply the inverse of the CDF to map that probability back to its domain, which gives us the random variable X.<br/>

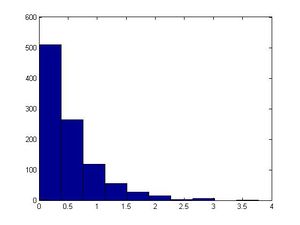

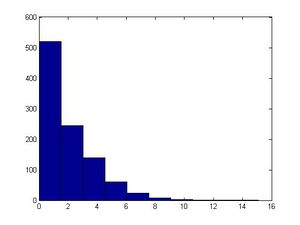

'''Example 1 - Exponential''': <math> f(x) = \lambda e^{-\lambda x}, \quad x \geq 0</math><br/>

Calculate the CDF:<br />

<math> F(x)= \int_0^x f(t)\, dt = \int_0^x \lambda e^{-\lambda t}\, dt</math>

<math> = \frac{\lambda}{-\lambda}\, e^{-\lambda t} \Big|_{0}^{x} </math>

<math> = -e^{-\lambda x} + e^0 =1 - e^{- \lambda x} </math><br />

Solve the inverse:<br />

<math> y=1-e^{- \lambda x} \Rightarrow 1-y=e^{- \lambda x} \Rightarrow x=-\frac {\ln(1-y)}{\lambda}</math><br />

Therefore <math>F^{-1}(y)=-\frac {\ln(1-y)}{\lambda}</math>.<br />

Note that <math>1 - U</math> is also uniform on (0, 1), so <math>-\ln(1 - U)</math> has the same distribution as <math>-\ln U</math>. <br />

Steps: <br />

Step 1: Draw U ~ U[0,1];<br />

Step 2: <math> x=\frac{-\ln(U)}{\lambda} </math> <br /><br />
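The two steps above can be sketched in Python (the notes use MATLAB; Python is used here only so the sketch runs and can be checked on its own). The function name `sample_exponential` and the seed are illustrative assumptions. The sketch uses <math>1 - U</math> inside the log, which by the note above has the same distribution as <math>U</math>, and avoids <math>\ln 0</math> because Python's `random()` can return exactly 0.0.

```python
import math
import random


def sample_exponential(lam, n, rng):
    """Draw n samples from Exp(lam) by the inverse transform X = -ln(1 - U) / lam."""
    # rng.random() returns U in [0, 1), so 1 - U is in (0, 1] and log() never sees 0.
    return [-math.log(1.0 - rng.random()) / lam for _ in range(n)]


rng = random.Random(340)  # seeded generator so the run is reproducible
xs = sample_exponential(2.0, 100_000, rng)
print(sum(xs) / len(xs))  # sample mean; the theoretical mean is 1/lambda = 0.5
```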

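'''Example 2 - Normal distribution''' (distribution of the square of a standard normal): let <math>Z \sim N(0,1)</math> and <math>Y = Z^2</math>. For <math>y > 0</math>,

<math>G(y) = P(Y \leq y) = P(-\sqrt{y} \leq Z \leq \sqrt{y})</math>

<math>= \int_{-\sqrt{y}}^{\sqrt{y}} \frac{1}{\sqrt{2\pi}} e^{-z^2/2}\, dz</math>

<math>= 2 \int_{0}^{\sqrt{y}} \frac{1}{\sqrt{2\pi}} e^{-z^2/2}\, dz</math>

This is the CDF of <math>Y = Z^2</math>.

Its pdf is <math>g(y) = G'(y) = 2 \cdot \frac{1}{\sqrt{2\pi}} e^{-y/2} \cdot \frac{1}{2\sqrt{y}} = \frac{1}{\sqrt{2\pi y}} e^{-y/2}</math>,

which is the pdf of <math>\chi^2(1)</math>.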

'''MatLab Code''' (for Example 1, exponential):<br />

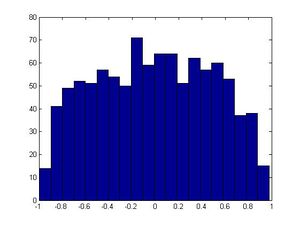

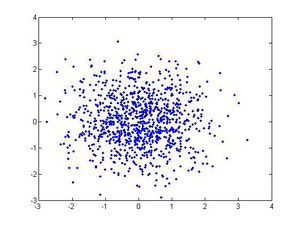

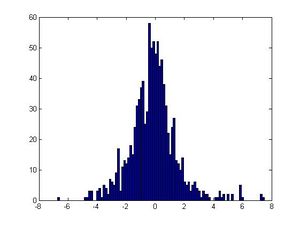

<pre style="font-size:16px"> | <pre style="font-size:16px"> | ||

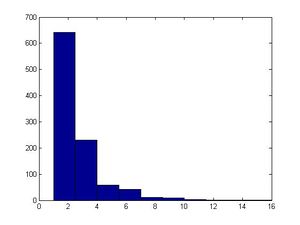

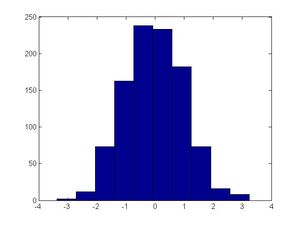

>>u=rand(1,1000); | |||

>>hist(u) # this will generate a fairly uniform diagram | |||

</pre> | </pre> | ||

[[File:ITM_example_hist(u).jpg|300px]] | |||

<pre style="font-size:16px"> | |||

#let λ=2 in this example; however, you can make another value for λ | |||

[[File:ITM_example_hist(u).jpg|300px]] | |||

<pre style="font-size:16px"> | |||

#let λ=2 in this example; however, you can make another value for λ | |||

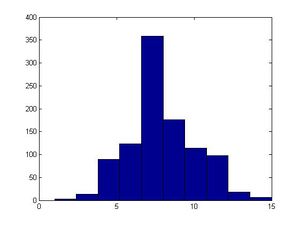

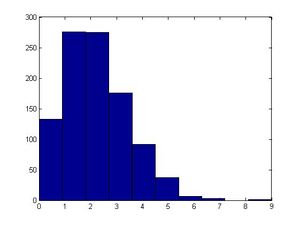

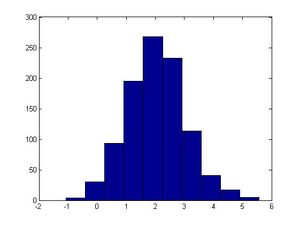

>>x=(-log(1-u))/2; | >>x=(-log(1-u))/2; | ||

>>size(x) #1000 in size | >>size(x) #1000 in size | ||

| Line 516: | Line 543: | ||

[[File:ITM_example_hist(x).jpg|300px]] | [[File:ITM_example_hist(x).jpg|300px]] | ||

'''Example 2 - Continuous Distribution''' (Laplace, or double exponential):<br />

<math> f(x) = \dfrac {\lambda } {2}e^{-\lambda \left| x-\theta \right| }, \quad -\infty < x < \infty, \ \lambda >0 </math><br/>

Calculate the CDF:<br />

<math> F(x)= \frac{1}{2} e^{-\lambda (\theta - x)}, \quad x \le \theta </math><br/>

<math> F(x) = 1 - \frac{1}{2} e^{-\lambda (x - \theta)}, \quad x > \theta </math><br/>

Solve for the inverse:<br />

<math>F^{-1}(y)= \theta + \ln(2y)/\lambda, \quad 0 < y \le 0.5</math><br/>

<math>F^{-1}(y)= \theta - \ln(2(1-y))/\lambda, \quad 0.5 < y < 1</math><br/>

Algorithm:<br />

Step 1: Draw U ~ U[0, 1];<br />

Step 2: Compute <math>X = F^{-1}(U)</math>, i.e. <math>X = \theta + \frac {1}{\lambda} \ln(2U)</math> if U < 0.5, else <math>X = \theta -\frac {1}{\lambda} \ln(2(1-U))</math>
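A Python sketch of this two-branch inverse (Python rather than the notes' MATLAB, so it runs on its own). The names `laplace_inverse` and `sample_laplace`, and the parameter values <math>\theta = 1, \lambda = 2</math>, are illustrative assumptions. A draw of exactly 0 is skipped because <math>\ln(0)</math> is undefined.

```python
import math
import random


def laplace_inverse(u, theta, lam):
    """Inverse CDF of the Laplace(theta, lam) distribution, for 0 < u < 1."""
    if u <= 0.5:
        return theta + math.log(2.0 * u) / lam
    return theta - math.log(2.0 * (1.0 - u)) / lam


def sample_laplace(theta, lam, n, rng):
    out = []
    while len(out) < n:
        u = rng.random()
        if u == 0.0:  # random() can return exactly 0; skip it to avoid log(0)
            continue
        out.append(laplace_inverse(u, theta, lam))
    return out


rng = random.Random(340)
xs = sample_laplace(1.0, 2.0, 100_000, rng)
print(sum(xs) / len(xs))  # the distribution is symmetric about theta = 1.0
```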

'''Example 3 - <math>F(x) = x^5</math>''':<br/>

Given the CDF of X, <math>F(x) = x^5</math> for <math>0 \le x \le 1</math>, generate X by transforming U ~ U[0,1]. <br />

Solution:

Let <math>y=x^5</math> and solve for x: <math>x=y^\frac {1}{5}</math>. Therefore, <math>F^{-1} (y) = y^\frac {1}{5}</math>.<br />

Hence, to obtain a value of x from F(x), we first draw u from the uniform distribution U[0,1], then apply the inverse function of F(x), setting

<math>x= u^\frac{1}{5}</math><br /><br />

Algorithm:<br />

Step 1: Draw U ~ U[0, 1];<br />

Step 2: X=U^(1/5);<br />
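A short Python sketch of this algorithm (Python instead of the notes' MATLAB so it is self-contained). The function name `sample_x5` and the seed are illustrative. The printed checks use <math>E[X] = \int_0^1 x \cdot 5x^4\, dx = 5/6</math> and <math>P(X \le 0.5) = F(0.5) = 1/32</math>.

```python
import random


def sample_x5(n, rng):
    """Inverse transform for F(x) = x**5 on [0, 1]: F^{-1}(u) = u**(1/5)."""
    return [rng.random() ** (1.0 / 5.0) for _ in range(n)]


rng = random.Random(340)
xs = sample_x5(100_000, rng)
# E[X] = 5/6 and P(X <= 0.5) = 1/32 for this distribution
print(sum(xs) / len(xs), sum(x <= 0.5 for x in xs) / len(xs))
```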

'''Example 4 - BETA(1,β)''':<br/>

Given u ~ U[0,1], generate x from BETA(1,β).<br />

Solution:

<math>F(x)= 1-(1-x)^\beta</math>, for <math>0 \le x \le 1</math>

<math>u= 1-(1-x)^\beta</math><br />

Solve for x:

<math>(1-x)^\beta = 1-u</math>,

<math>1-x = (1-u)^\frac {1}{\beta}</math>,

<math>x = 1-(1-u)^\frac {1}{\beta}</math><br />

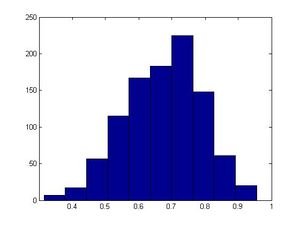

Let β = 3, and use MATLAB to construct N = 1000 observations from Beta(1,3).<br />

'''MatLab Code''':<br />

<pre style="font-size:16px">
>> u = rand(1,1000);
>> x = 1-(1-u).^(1/3);   % elementwise power (.^), since u is a vector
>> hist(x,50)
>> mean(x)               % should be close to 1/(1+3) = 0.25
</pre>
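For comparison, the same Beta(1,3) sampler as a Python sketch (the notes use MATLAB; this version is only meant to be self-contained and testable). The function name `sample_beta_1` and the seed are illustrative assumptions; the mean check uses the standard fact that Beta(1, β) has mean <math>1/(1+\beta)</math>.

```python
import random


def sample_beta_1(beta, n, rng):
    """Inverse transform for Beta(1, beta): x = 1 - (1 - u)**(1/beta)."""
    return [1.0 - (1.0 - rng.random()) ** (1.0 / beta) for _ in range(n)]


rng = random.Random(340)
xs = sample_beta_1(3, 100_000, rng)
print(sum(xs) / len(xs))  # Beta(1, 3) has mean 1 / (1 + 3) = 0.25
```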

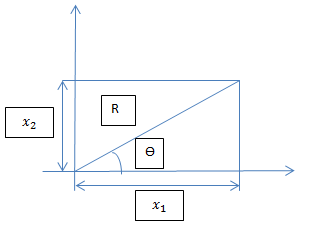

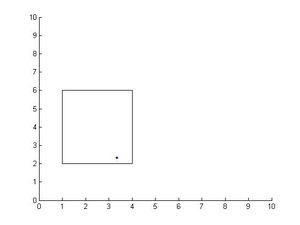

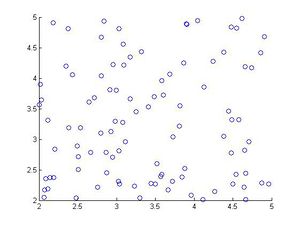

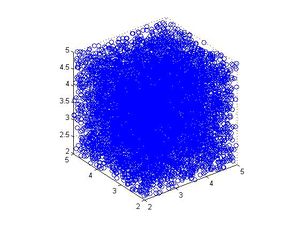

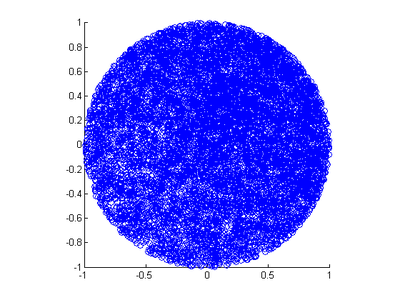

'''Example 5 - Estimating <math>\pi</math>''':<br/>

Let's use rand() and the Monte Carlo method to estimate <math>\pi</math>: draw points (x, y) uniformly in a square and count how many fall inside the inscribed circle. <br />

N = total number of points <br />

N<sub>c</sub> = total number of points inside the circle<br />

P((x,y) lies in the circle) = <math>\frac {Area(circle)}{Area(square)}</math><br />

If we take a square of side 2, the square has area 4 and the inscribed circle has area <math>\pi (\frac {2}{2})^2 =\pi</math>, so this probability is <math>\pi/4</math>, which we estimate by <math>N_c/N</math>.<br />

Thus <math>\pi \approx 4(\frac {N_c}{N})</math><br />
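A minimal Python sketch of this estimator (Python in place of MATLAB's rand() so it is self-contained). The function name `estimate_pi`, the square <math>[-1,1]\times[-1,1]</math> and the sample size are illustrative choices; the estimate fluctuates around <math>\pi</math> with error shrinking roughly like <math>1/\sqrt{N}</math>.

```python
import random


def estimate_pi(n, rng):
    """Monte Carlo estimate of pi from n uniform points in the square [-1, 1] x [-1, 1]."""
    inside = 0  # N_c: points that land inside the unit circle
    for _ in range(n):
        x = 2.0 * rng.random() - 1.0  # uniform on [-1, 1]
        y = 2.0 * rng.random() - 1.0
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / n  # pi is approximately 4 * N_c / N


rng = random.Random(340)
pi_hat = estimate_pi(200_000, rng)
print(pi_hat)
```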

<font size="3">For example, '''UNIF(a,b)'''<br />

<math>y = F(x) = (x - a)/ (b - a) </math>

<math>x = (b - a ) y + a</math>

<math>X = a + ( b - a) U</math><br />

where U is UNIF(0,1)</font>

'''Limitations:'''<br />

1. Not all CDFs are strictly increasing or have an explicit inverse; in such cases we must work with the generalized inverse, which can be hard to compute.<br />

2. It may be impractical when the CDF or its inverse has no closed form, as for the Gaussian distribution.<br />

We learned how to prove that the inverse transform produces a random variable with the desired CDF, and how to use the uniform distribution to obtain a value of x from F(x).

We can also use the uniform distribution in the inverse method to generate samples from other distributions.

In the <math>\pi</math> example, the points are uniformly distributed over the square, so every point in the square, including those inside the circle, is equally likely. Then, we can look at a histogram of the generated values to determine what kind of distribution it resembles.

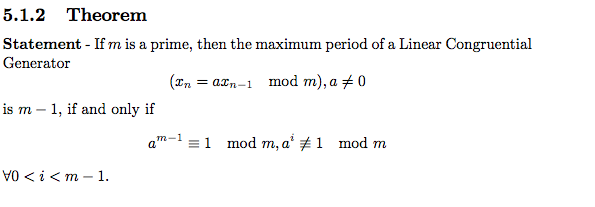

==== Probability Distribution Function Tool in MATLAB ====

<pre style="font-size:16px"> | |||

disttool #shows different distributions | |||

</pre> | |||

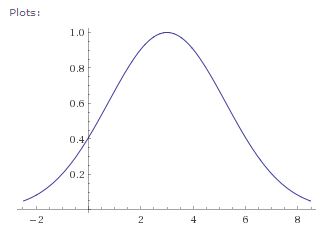

This command allows users to explore different types of distribution and see how the changes affect the parameters on the plot of either a CDF or PDF. | |||

: | [[File:Disttool.jpg|450px]] | ||

change the value of mu and sigma can change the graph skew side. | |||

== Class 3 - Tuesday, May 14 ==

=== Recall the Inverse Transform Method ===

Let U ~ Unif(0,1); then the random variable X = F<sup>-1</sup>(U) has distribution F. <br />

To sample X with CDF F(x): <br />

1) Draw <math>U \sim Unif[0,1]</math><br />

2) X = F<sup>-1</sup>(U)<br />

<br />

'''Note''': the CDF of a U(a,b) random variable is:

:<math>
F(x)= \begin{cases}
0 & \text{for }x < a \\[8pt]
\frac{x-a}{b-a} & \text{for }a \le x < b \\[8pt]
1 & \text{for }x \ge b
\end{cases}
</math>

Thus, for <math> U </math> ~ <math>U(0,1) </math>, we have <math>P(U\leq 1) = 1</math> and <math>P(U\leq 1/2) = 1/2</math>.<br />

More generally, <math>P(U\leq a) = a</math> for <math>a \in [0,1]</math>.<br />

For this reason, <math>P(U\leq F(x)) = F(x)</math>.<br />

'''Reminder: ''' <br /> | |||

'''This is only for uniform distribution <math> U~ \sim~ Unif [0,1] </math> '''<br /> | |||

<math> P (U \le 1) = 1 </math> <br /> | |||

<math> P (U \le 0.5) = 0.5 </math> <br /> | |||

<math> P (U \le a) = a </math> <br /> | |||

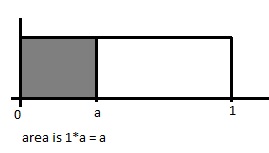

[[File:2.jpg]] <math>P(U\leq a)=a</math> | |||

< | |||

</ | |||

Note | Note that on a single point there is no mass probability (i.e. <math>u</math> <= 0.5, is the same as <math> u </math> < 0.5) | ||

More formally, this is saying that <math> P(X = x) = F(x)- \lim_{s \to x^-}F(x)</math> , which equals zero for any continuous random variable | |||

====Limitations of the Inverse Transform Method====

Though this method is very easy to use and apply, it has a major limitation:

* We need to find the inverse CDF <math> F^{-1}(\cdot) </math>. In some cases the inverse function does not exist in closed form, or is difficult to find because it requires a closed-form expression for F(x).

For example, the inverse CDF of the Gaussian distribution has no closed form, so we must find another method to sample from the Gaussian distribution.

In conclusion, we need another way of sampling from more complicated distributions.

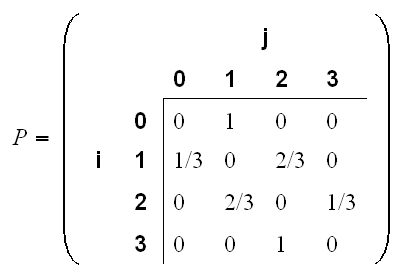

=== Discrete Case ===

The same technique can be used for the discrete case. We want to generate a discrete random variable X that has probability mass function: <br/>

:<math>\begin{align}P(X = x_i) &{}= p_i \end{align}</math>

:<math>x_0 < x_1 < x_2 < \dots < x_n</math>

:<math>\sum_i p_i = 1</math>

Algorithm for applying the Inverse Transform Method in the discrete case (procedure):<br>

1. Define a probability mass function for <math>x_{i}</math>, where i = 0,...,k. Note: k may be infinite. <br>

2. Generate a uniform random number U, <math>U \sim Unif [0,1]</math><br>

3. If <math>U\leq p_{0}</math>, deliver <math>X = x_{0}</math><br>

4. Else, if <math>U\leq p_{0} + p_{1} </math>, deliver <math>X = x_{1}</math><br>

5. Repeat this process until <math>U\leq p_{0} + p_{1} + \dots + p_{k}</math>, and deliver <math>X = x_{k}</math><br>

Note that after generating U, the value of X is determined by finding the interval <math>(F(x_{j-1}),F(x_{j})]</math> in which U lies, and delivering <math>X = x_j</math>. <br />

In summary:

Generate a discrete r.v. X that has pmf:<br />

<math>P(X=x_i)=p_i, \quad x_0<x_1<x_2<\dots</math> <br />

1. Draw U ~ U(0,1);<br />

2. If <math>F(x_{i-1}) < U \leq F(x_i)</math>, set <math>X = x_i</math>.<br />
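A Python sketch of this summary (Python instead of MATLAB, so it runs on its own). Rather than checking the intervals one at a time, it locates the interval <math>(F(x_{i-1}), F(x_i)]</math> by binary search over the cumulative sums with `bisect.bisect_left`; this is a swapped-in search strategy that delivers the same distribution. The helper name `make_discrete_sampler` is hypothetical, and the usage reuses the pmf of Example 3.2 below.

```python
import bisect
import itertools
import random


def make_discrete_sampler(values, probs):
    """Return a function that draws X with P(X = values[i]) = probs[i]."""
    cdf = list(itertools.accumulate(probs))  # F(x_0), F(x_1), ..., F(x_k)

    def sample(rng):
        u = rng.random()
        # bisect_left finds the smallest i with u <= F(x_i),
        # i.e. the interval F(x_{i-1}) < u <= F(x_i).
        i = bisect.bisect_left(cdf, u)
        # Guard: if rounding left F(x_k) slightly below 1, u could exceed it.
        return values[min(i, len(values) - 1)]

    return sample


# Usage with the pmf of Example 3.2: P(0) = 0.3, P(1) = 0.2, P(2) = 0.5
sample = make_discrete_sampler([0, 1, 2], [0.3, 0.2, 0.5])
rng = random.Random(340)
draws = [sample(rng) for _ in range(100_000)]
print([draws.count(v) / len(draws) for v in (0, 1, 2)])
```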

'''Example 3.0:''' <br />

Generate a random variable from the following probability function:<br />

{| class="wikitable"
|-
| x
| -2
| -1
| 0
| 1
| 2
|-
| f(x)
| 0.1
| 0.5
| 0.07
| 0.03
| 0.3
|}

Answer:<br />

1. Generate U ~ U(0,1)<br />

2. If U < 0.5 then output -1<br />

else if U < 0.8 then output 2<br />

else if U < 0.9 then output -2<br />

else if U < 0.97 then output 0, else output 1<br />

Here the values are checked in decreasing order of probability, which reduces the expected number of comparisons.
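The answer above can be sketched in Python (the notes use MATLAB; Python keeps the sketch self-contained). The names `sample_example_30` and `expected_comparisons` are hypothetical helpers. The second function quantifies the ordering argument: checking values in decreasing order of probability needs about 1.8 comparisons on average, versus about 2.63 when testing x = -2, -1, 0, 1, 2 in natural order.

```python
import random


def sample_example_30(rng):
    """The if/else chain from the answer above, checking the most likely value first."""
    u = rng.random()
    if u < 0.5:
        return -1
    elif u < 0.8:
        return 2
    elif u < 0.9:
        return -2
    elif u < 0.97:
        return 0
    return 1


def expected_comparisons(ordered_probs):
    """Expected comparisons for an if/else chain testing values in the given order.

    The last value is reached by the final 'else', so it costs the same number of
    comparisons as the second-to-last one.
    """
    k = len(ordered_probs)
    return sum(p * min(i, k - 1) for i, p in enumerate(ordered_probs, start=1))


rng = random.Random(340)
draws = [sample_example_30(rng) for _ in range(100_000)]
print({v: draws.count(v) / len(draws) for v in (-2, -1, 0, 1, 2)})
print(expected_comparisons([0.5, 0.3, 0.1, 0.07, 0.03]))  # order used above
print(expected_comparisons([0.1, 0.5, 0.07, 0.03, 0.3]))  # order x = -2, -1, 0, 1, 2
```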

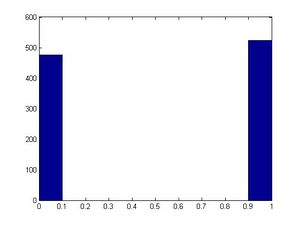

'''Example 3.1 (from class):''' (Coin Flipping Example)<br />

We want to simulate a coin flip. We have U ~ U(0,1) and X = 0 or X = 1.

We can define X in terms of U so that:

If <math>U\leq 0.5</math>, then X = 0,

and if <math>0.5 < U\leq 1</math>, then X = 1.

This gives each outcome probability 0.5, so it simulates a fair coin flip.

<math>U \sim Unif [0,1]</math>

:<math>\begin{align}
P(X = 0) &{}= 0.5\\
P(X = 1) &{}= 0.5
\end{align}</math>

The answer is:

:<math>
x = \begin{cases}
0, & \text{if } U\leq 0.5 \\
1, & \text{if } 0.5 < U \leq 1
\end{cases}</math>

* '''Code'''<br />

<pre style="font-size:16px">
>>for ii=1:1000
    u=rand;
    if u<=0.5
        x(ii)=0;
    else
        x(ii)=1;
    end
end
>>hist(x)
</pre>

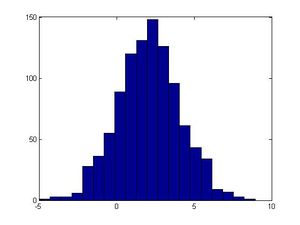

[[File:Coin_example.jpg|300px]]

Note: in MATLAB, ending a line with a semicolon suppresses printing of its result; a line without a semicolon displays its result.

'''Example 3.2 (From class):'''

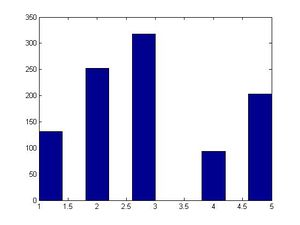

Suppose we have the following discrete distribution:

:<math>\begin{align}
P(X = 0) &{}= 0.3 \\
P(X = 1) &{}= 0.2 \\
P(X = 2) &{}= 0.5
\end{align}</math>

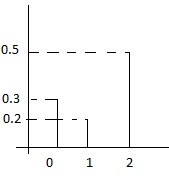

[[File:33.jpg]]

The cumulative distribution function (CDF) for this distribution is then:

:<math>
F(x) = \begin{cases}
0, & \text{if } x < 0 \\
0.3, & \text{if } 0 \le x < 1 \\
0.5, & \text{if } 1 \le x < 2 \\
1, & \text{if } x \ge 2
\end{cases}</math>

Then we can generate numbers from this distribution like this, given <math>U \sim Unif[0, 1]</math>:

:<math>
x = \begin{cases}
0, & \text{if } U\leq 0.3 \\
1, & \text{if } 0.3 < U \leq 0.5 \\
2, & \text{if } 0.5 <U\leq 1
\end{cases}</math>

'''Procedure'''<br />

1. Draw U ~ U(0,1)<br />

2. If U ≤ 0.3, deliver x = 0<br />

3. Else if 0.3 < U ≤ 0.5, deliver x = 1<br />

4. Else (0.5 < U ≤ 1), deliver x = 2

Can you find a faster way to run this algorithm? Consider:

:<math>
x = \begin{cases}
2, & \text{if } U\leq 0.5 \\
1, & \text{if } 0.5 < U \leq 0.7 \\
0, & \text{if } 0.7 <U\leq 1
\end{cases}</math>

The logic is that U is most likely to fall into the largest range. By checking the largest range first (in this case <math>U \leq 0.5</math>, which delivers x = 2), we reduce the expected number of comparisons and improve the run time of the algorithm. Could this algorithm be improved further using the same logic?

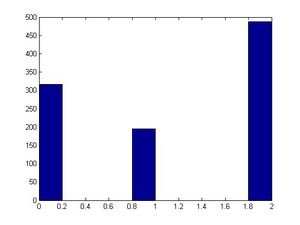

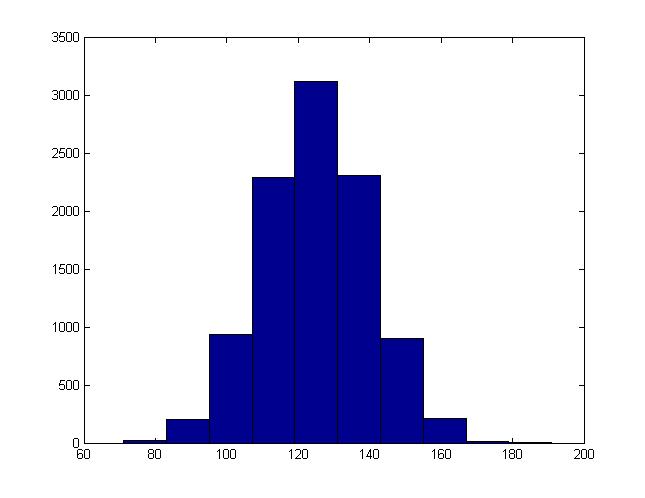

* '''Code''' (as shown in class)<br />

Use the Editor window to edit the code <br />

<pre style="font-size:16px">
>>close all
>>clear all
>>for ii=1:1000
    u=rand;
    if u<=0.3
        x(ii)=0;
    elseif u<=0.5
        x(ii)=1;
    else
        x(ii)=2;
    end
end
>>size(x)
>>hist(x)
</pre>

[[File:Discrete_example.jpg|300px]]

The code above generates a 1×1000 vector containing 0's, 1's and 2's. Because each value is delivered with its respective probability, the proportions of 0, 1 and 2 in the vector approximate 0.3, 0.2 and 0.5. So plotting the histogram does not give us the pmf itself, but a frequency histogram of the proportions of each value, whose shape closely resembles the pmf.

'''Example 3.3''': Generating a random variable from the pdf <br>

:<math>
f_{X}(x) = \begin{cases}
2x, & \text{if } 0\leq x \leq 1 \\
0, & \text{otherwise}
\end{cases}</math>

:<math>
F_{X}(x) = \begin{cases}
0, & \text{if } x < 0 \\
\int_{0}^{x}2s\,ds = x^{2}, & \text{if } 0\leq x \leq 1 \\
1, & \text{if } x > 1
\end{cases}</math>

:<math>\begin{align} U = x^{2}, \quad X = F_X^{-1}(U)= U^{\frac{1}{2}}\end{align}</math>
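A Python sketch of <math>X = U^{1/2}</math> (Python rather than MATLAB, so it is self-contained). The function name `sample_example_33` is hypothetical. The printed checks use <math>E[X] = \int_0^1 x \cdot 2x\, dx = 2/3</math> and <math>P(X \le 0.5) = 0.5^2 = 0.25</math>.

```python
import math
import random


def sample_example_33(n, rng):
    """Inverse transform for f(x) = 2x on [0, 1]: F(x) = x**2, so X = sqrt(U)."""
    return [math.sqrt(rng.random()) for _ in range(n)]


rng = random.Random(340)
xs = sample_example_33(100_000, rng)
# E[X] = 2/3 and P(X <= 0.5) = 0.25 for this density
print(sum(xs) / len(xs), sum(x <= 0.5 for x in xs) / len(xs))
```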

'''Example 3.4''': Generating a Bernoulli random variable <br>

:<math>\begin{align} P(X = 1) = p, \quad P(X = 0) = 1 - p\end{align}</math>

:<math>
F(x) = \begin{cases}
0, & \text{if } x < 0 \\
1-p, & \text{if } 0 \le x < 1 \\
1, & \text{if } x \ge 1
\end{cases}</math>

1. Draw <math>U \sim Unif [0,1]</math><br>

2. <math>
X = \begin{cases}
0, & \text{if } 0 < U < 1-p \\
1, & \text{if } 1-p \le U < 1
\end{cases}</math>

'''Example 3.5''': Generating a Binomial(n,p) random variable<br>

Use the recursion <math>P(X=i+1) =\dfrac {n-i} {i+1}\dfrac {p} {1-p}P(X=i)</math>.

Step 1: Generate a random number <math>U</math>.<br>

Step 2: <math>c = \frac {p}{(1-p)}</math>, <math>i = 0</math>, <math>pr = (1-p)^n</math>, <math>F = pr</math><br>

Step 3: If U < F, set X = i and stop.<br>

Step 4: <math> pr = \, {\frac {c(n-i)}{(i+1)}} {pr}, F = F +pr, i = i+1</math><br>

Step 5: Go to step 3.<br>

*Note: These steps can be found in Simulation, 5th Ed., by Sheldon Ross.

*Note: Another method views the Binomial as a sum of n independent Bernoulli random variables: generate n random numbers U<sub>1</sub>, ..., U<sub>n</sub> and set X equal to the number of U<sub>i</sub> that are less than or equal to p. This method needs n random numbers and n comparisons. The inverse transform method above is simpler in that only one random number needs to be generated, and it makes on average 1 + np comparisons.<br>

Step 1: Generate n uniform numbers U<sub>1</sub>, ..., U<sub>n</sub>.<br>

Step 2: <math>X = \sum_{i=1}^{n} I(U_i \leq p)</math>, where p is the probability of success and <math>I(\cdot)</math> is the indicator function.
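A Python sketch of Steps 1-5 of the inverse transform method for the Binomial (Python instead of MATLAB so it runs on its own; the function name `sample_binomial` and the parameters n = 10, p = 0.3 are illustrative). The extra `i < n` guard is an assumption added for floating-point safety: it stops the loop if rounding leaves F slightly below 1.

```python
import random


def sample_binomial(n, p, rng):
    """Inverse transform for Binomial(n, p) following Steps 1-5 (assumes 0 < p < 1)."""
    u = rng.random()                       # Step 1
    c = p / (1.0 - p)                      # Step 2
    i = 0
    pr = (1.0 - p) ** n                    # P(X = 0)
    F = pr
    while u >= F and i < n:                # Step 3: stop as soon as U < F
        pr = c * (n - i) / (i + 1) * pr    # Step 4: P(X = i + 1) from P(X = i)
        F += pr
        i += 1                             # Step 5: go back to the test in Step 3
    return i


rng = random.Random(340)
draws = [sample_binomial(10, 0.3, rng) for _ in range(100_000)]
print(sum(draws) / len(draws))  # Binomial(10, 0.3) has mean np = 3
```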

'''Example 3.6''': Generating a Poisson random variable <br>

"Let X ~ Poi(u). Write an algorithm to generate X.

The pmf of a Poisson random variable is:

:<math>\begin{align} f(x) = \frac {\, e^{-u} u^x}{x!} \end{align}</math>

We know that

:<math>\begin{align} P_{x+1} = \frac {\, e^{-u} u^{x+1}}{(x+1)!} \end{align}</math>

The ratio is <math>\begin{align} \frac {P_{x+1}}{P_x} = ... = \frac {u}{{x+1}} \end{align}</math>

Therefore, <math>\begin{align} P_{x+1} = \, {\frac {u}{x+1}} P_x\end{align}</math>

Algorithm: <br>

1) Generate U ~ U(0,1) <br>

2) <math>\begin{align} X = 0 \end{align}</math>

<math>\begin{align} F = P(X = 0) = e^{-u} u^0/{0!} = e^{-u} = p \end{align}</math>

3) If U < F, output X <br>

<font size="3">Else,</font> <math>\begin{align} p = \frac{u}{X+1}\, p \end{align}</math> <br>

<math>\begin{align} F = F + p \end{align}</math> <br>

<math>\begin{align} X = X + 1 \end{align}</math> <br>

4) Go to 3)" <br>

Acknowledgements: This is an example from Stat 340 Winter 2013
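A Python sketch of this algorithm (Python rather than MATLAB, so it is self-contained). The Poisson mean is called `mu` here to avoid clashing with the uniform U; the function name, the value mu = 4 and the underflow guard are illustrative assumptions. Note that U is generated only once: the loop returns to step 3, not step 1.

```python
import math
import random


def sample_poisson(mu, rng):
    """Inverse transform for Poisson(mu); mu plays the role of u in the notes."""
    u = rng.random()          # 1) generate U once
    x = 0                     # 2) X = 0
    p = math.exp(-mu)         #    p = P(X = 0)
    F = p
    while u >= F:             # 3) if U < F, output X
        p = p * mu / (x + 1)  #    p = P(X = x + 1) = mu/(x + 1) * P(X = x)
        F += p
        x += 1
        if p == 0.0:          #    guard: p has underflowed, so F can no longer grow
            break
    return x                  # 4) the while loop plays the role of "go to 3)"


rng = random.Random(340)
draws = [sample_poisson(4.0, rng) for _ in range(100_000)]
print(sum(draws) / len(draws))  # Poisson(4) has mean 4
```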

'''Example 3.7''': Generating a Geometric random variable:

Consider Geo(p), where p is the probability of success, and define the random variable X as the total number of trials required to achieve the first success, x = 1, 2, 3, .... We have pmf:

<math>P(X=x_i) = \, p (1-p)^{x_{i}-1}</math>

We have CDF:

<math>F(x)=P(X \leq x)=1-P(X>x) = 1-(1-p)^x</math>, since P(X > x) is the probability that the first x trials are all failures.

Now consider the inverse transform:

:<math>
x = \begin{cases}
1, & \text{if } U\leq p \\
2, & \text{if } p < U \leq 1-(1-p)^2 \\
3, & \text{if } 1-(1-p)^2 <U\leq 1-(1-p)^3 \\
\vdots & \\
k, & \text{if } 1-(1-p)^{k-1} <U\leq 1-(1-p)^k \\
\vdots &
\end{cases}</math>
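Instead of walking through these intervals one at a time, the condition can be solved for k directly: X is the smallest k with <math>1-(1-p)^k \geq U</math>, i.e. <math>(1-p)^k \leq 1-U</math>, which gives <math>X = \lceil \ln(1-U)/\ln(1-p) \rceil</math>. The Python sketch below uses this closed form (a derived shortcut, not a step stated in the notes); the function name and p = 0.25 are illustrative.

```python
import math
import random


def sample_geometric(p, rng):
    """Trials until the first success for Geo(p), 0 < p < 1, by inverse transform.

    X is the smallest k with (1 - p)**k <= 1 - U, i.e. k = ceil(ln(1 - U) / ln(1 - p)).
    """
    u = rng.random()  # U in [0, 1), so 1 - U is in (0, 1]
    k = math.ceil(math.log(1.0 - u) / math.log(1.0 - p))
    return max(k, 1)  # U = 0 would give k = 0; X is at least 1


rng = random.Random(340)
draws = [sample_geometric(0.25, rng) for _ in range(100_000)]
print(sum(draws) / len(draws))  # Geo(p) counting trials has mean 1/p = 4
```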

'''Note''': Unlike the continuous case, the discrete inverse-transform method can always be used for any discrete distribution (but it may not be the most efficient approach) <br>

'''General Procedure'''<br />

1. Draw U ~ U(0,1)<br />

2. If <math>U \leq P_{0}</math> deliver <math>x = x_{0}</math><br />

3. Else if <math>U \leq P_{0} + P_{1}</math> deliver <math>x = x_{1}</math><br />

4. Else if <math>U \leq P_{0} + P_{1} + P_{2} </math> deliver <math>x = x_{2}</math><br />

...

<font size="3">Else if</font> <math>U \leq P_{0} + ... + P_{k} </math> <font size="3">deliver</font> <math>x = x_{k}</math><br />

=== Inverse Transform Algorithm for Generating a Binomial(n,p) Random Variable (from textbook) ===

step 1: Generate a random number U.<br />

step 2: c = p/(1-p), i = 0, pr = (1-p)<sup>n</sup>, F = pr.<br />

step 3: If U < F, set X = i and stop.<br />

step 4: pr = [c(n-i)/(i+1)]pr, F = F + pr, i = i + 1.<br />

step 5: Go to step 3.


Flipping a coin is a simple discrete example; the coin-flipping code below flips a coin 1000 times, and the proportion of 1's is close to the expected value 0.5.<br>

Example 3.2, another discrete distribution, shows that we can sample the values 0, 1 and 2 even when they have different probabilities.<br>

Example 3.3 uses the inverse method to determine which value of the random variable each U maps to.

<div style="border:1px solid #cccccc;border-radius:10px;box-shadow: 0 5px 15px 1px rgba(0, 0, 0, 0.6), 0 0 200px 1px rgba(255, 255, 255, 0.5);padding:20px;margin:20px;background:#FFFFAD;">

<h2 style="text-align:center;">Summary of Inverse Transform Method</h2>

<p><b>Problem:</b> generate samples from a given distribution.</p>

<p><b>Plan:</b></p>

<b style='color:lightblue;'>Continuous case:</b>

<ol>
<li>find the CDF F</li>
<li>find the inverse F<sup>-1</sup></li>
<li>generate a list of uniformly distributed numbers {u}</li>
<li>{F<sup>-1</sup>(u)} is what we want</li>
</ol>

<b>Matlab Instruction</b>

<pre style="font-size:16px">>>u=rand(1,1000);
>>hist(u)
>>x=(-log(1-u))/2;
>>size(x)
>>figure
>>hist(x)
</pre>

<br>

<b style='color:lightblue'>Discrete case:</b>

<ol>
<li>generate a list of uniformly distributed numbers {u}</li>
<li>set d<sub>i</sub> = x<sub>i</sub> if <math>F(x_{i-1})<u\leq F(x_i)</math></li>
<li>{d<sub>i</sub>} is what we want</li>
</ol>

<b>Matlab Instruction</b>

<pre style="font-size:16px">>>for ii=1:1000
    u=rand;
    if u<0.5
        x(ii)=0;
    else
        x(ii)=1;
    end
end
>>hist(x)
</pre>

</div>

=== Generalized Inverse-Transform Method ===

Valid for any CDF F(x): return <math>X = \min\{x : F(x) \geq U\}</math>, where U ~ U(0,1). This covers CDFs that are:

1. Continuous, possibly with flat spots (i.e. not strictly increasing)

2. Discrete

3. Mixed continuous-discrete
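To illustrate the generalized inverse on a mixed CDF, here is a Python sketch (Python instead of MATLAB, so it runs on its own) that finds <math>\min\{x : F(x) \geq u\}</math> numerically by bisection. The CDF `F_mixed` (a point mass of 0.3 at 0 plus 0.7 times an Exp(1) part) and the bracket [-1, 50] are hypothetical choices for the example; bisection works here because F is non-decreasing and right-continuous.

```python
import math
import random


def F_mixed(x):
    """Hypothetical mixed CDF: point mass 0.3 at 0 plus 0.7 * Exp(1) on (0, inf)."""
    if x < 0:
        return 0.0
    return 0.3 + 0.7 * (1.0 - math.exp(-x))


def generalized_inverse(F, u, lo=-1.0, hi=50.0, iters=100):
    """Numerically find min{x : F(x) >= u} by bisection, assuming F(lo) < u <= F(hi)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if F(mid) >= u:
            hi = mid  # F(mid) >= u, so the answer is at or left of mid
        else:
            lo = mid  # F(mid) < u, so the answer is right of mid
    return hi


rng = random.Random(340)
# 1 - random() lies in (0, 1], which keeps u > F(lo) = 0
xs = [generalized_inverse(F_mixed, 1.0 - rng.random()) for _ in range(10_000)]
print(sum(x < 1e-9 for x in xs) / len(xs))  # about 0.3: the point mass at 0
```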

'''Advantages of Inverse-Transform Method'''

The inverse transform method preserves monotonicity and correlation (X is a non-decreasing function of U),

which helps in

1. Variance reduction methods ...

2. Generating truncated distributions ...

3. Order statistics ...

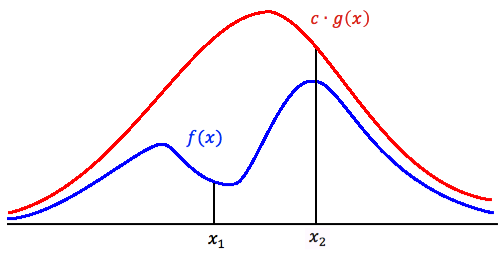

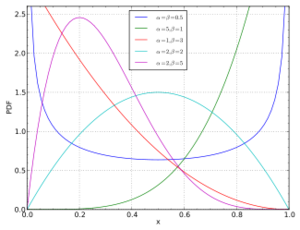

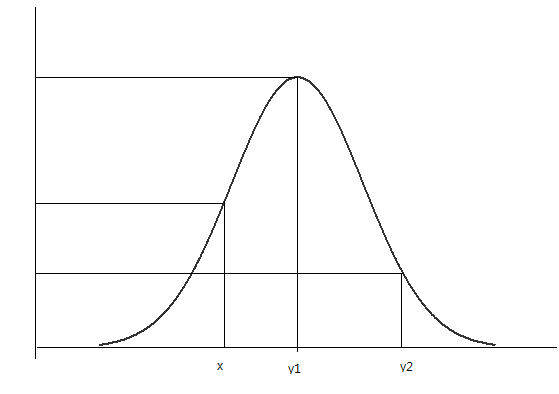

===Acceptance-Rejection Method===

Although the inverse transform method lets us transform uniform random numbers into samples from other distributions, it has two limitations:

# Not all CDFs have an inverse function (for example, a CDF with flat spots is not one-to-one)
# For some distributions, such as the Gaussian, it is too difficult to find the inverse

To generate random samples from such distributions, we use other methods, such as the '''Acceptance-Rejection Method''', which does not require inverting the CDF. The basic idea is to find an alternative probability distribution, with density function g(x), that we already know how to sample from.

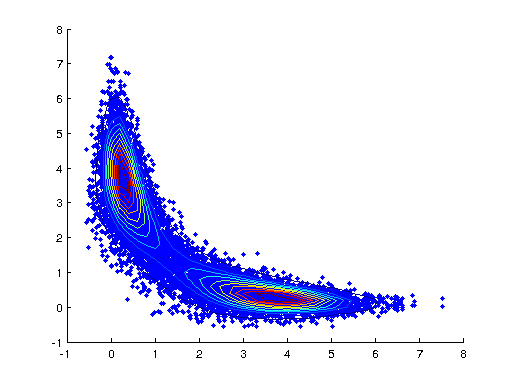

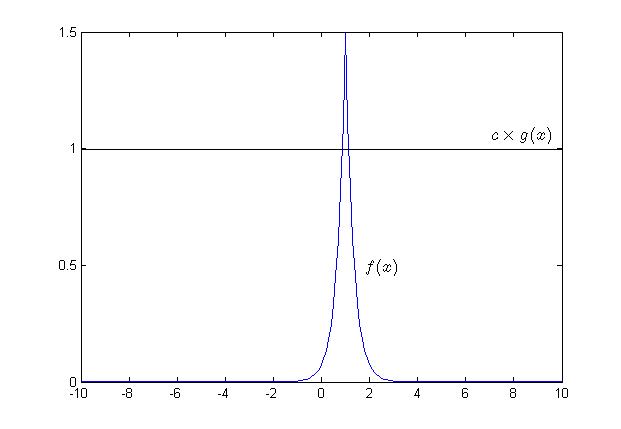

Suppose we want to draw a random sample from a target density function ''f(x)'', ''x∈S<sub>x</sub>'', where ''S<sub>x</sub>'' is the support of ''f(x)''. If we can find some constant ''c'' (≥1; in practice, we prefer c as close to 1 as possible) and a density function ''g(x)'' having the same support ''S<sub>x</sub>'' such that ''f(x)≤cg(x), ∀x∈S<sub>x</sub>'', then we can apply the Acceptance-Rejection Method. Typically we choose for ''g(x)'' a density function that we already know how to sample from.

[[File:AR_Method.png]]

The main logic behind the Acceptance-Rejection Method is:<br>

1. We want to generate sample points from a target distribution f(x) that we cannot sample from directly.<br>

2. We use <math>\,cg(x)</math> to generate points so that, for every x, we have at least as many points as f(x) requires (where c is a constant and g(x) is a known distribution).<br>

3. For each value of x, we accept or reject points with a certain probability, which is discussed below.<br>

Note: If the red line were only g(x) as opposed to <math>\,c g(x)</math> (i.e. c=1), then <math>g(x) \geq f(x)</math> for all values of x if and only if g and f are the same function. This is because both densities integrate to 1: if g(x) ≥ f(x) everywhere and g(x) > f(x) on a set of positive length, then g would integrate to more than 1. Hence, for <math>g \neq f</math>, <math>g(x) \geq f(x)</math> cannot hold for all x. <br>

Also remember that <math>\,c g(x)</math> always generates more points than we need, so we need a way to keep only the right proportion of them.<br><br>

c must be chosen so that <math>f(x)\leqslant c g(x)</math> for all values of x; c can only equal 1 when f and g are the same distribution. To choose c:<br>

Either use a software package to test whether <math>f(x)\leqslant c g(x)</math> for a chosen c > 0, or:<br>

1. Find the first and second derivatives of f(x) and g(x).<br>

2. Identify and classify all local and absolute maxima and minima, using the First and Second Derivative Tests, as well as all inflection points.<br>

3. Verify that <math>f(x)\leqslant c g(x)</math> at all the local maxima as well as the absolute maxima.<br>

4. Verify that <math>f(x)\leqslant c g(x)</math> at the tails by calculating <math>\lim_{x \to +\infty} \frac{f(x)}{\, c g(x)}</math> and <math>\lim_{x \to -\infty} \frac{f(x)}{\, c g(x)}</math> and checking that both are < 1. L'Hôpital's Rule often helps here, since both f and g are pdfs that approach 0 in the tails.<br>

5. Efficiency: the number N of times steps 1 and 2 of the procedure need to be run (i.e. the number of iterations needed to successfully generate X) is a random variable with a geometric distribution with success probability <math>p=P(U \leq f(Y)/(cg(Y))) = 1/c</math>, so <math>P(N=n)=(1-p)^{n-1}p, \ n \geq 1</math>. Thus on average the number of iterations required is <math> E(N)=\frac{1}{p}</math>.

c should be close to the maximum of f(x)/g(x), not an arbitrarily large number. Otherwise, the Acceptance-Rejection method will reject more points (since the acceptance probability <math>\frac{f(x)}{c g(x)}</math> will be close to zero), which makes the algorithm inefficient.

The expected number of iterations required to generate one X is c.

<br>

'''Note:''' <br>

1. Values around x<sub>1</sub> will be sampled more often under cg(x) than under f(x). There will be more samples than we actually need: since <math>\frac{f(y)}{\, c g(y)}</math> is small there, the acceptance-rejection step removes most of these points to get the right amount. In the region above x<sub>1</sub>, we should accept less and reject more. <br>

2. Values around x<sub>2</sub>: the number of samples drawn and the number we need are much closer, so in the region above x<sub>2</sub> we accept more, because cg(x) and f(x) are comparable there.<br>

3. The constant c is needed to raise the height of g(x) so that it lies above f(x). Beyond that, c should be kept as small as possible so that the number of rejected samples stays small, for maximum efficiency. <br>

Another way to understand why the acceptance probability is <math>\frac{f(y)}{\, c g(y)}</math> is by thinking of areas. From the graph above, we see that the target function lies under the proposal function c g(y). Therefore, <math>\frac{f(y)}{\, c g(y)}</math> is the proportion of the height of c g(y) at y that also lies under f(y). We accept sample points for which u is less than <math>\frac{f(y)}{\, c g(y)}</math>, because those are exactly the points that fall in the part of the area under c g(y) that also lies under f(y). <br>

<br>

'''There are 2 cases that are possible:''' <br>

- More than enough sample points: <math>c g(x) > f(x)</math> <br>

- Similar or the same amount of points: <math>c g(x) \approx f(x)</math> (still with <math>c g(x) \geq f(x)</math>) <br>

'''There is 1 case that is not possible:''' <br>

- Fewer points than needed, i.e. <math>c g(x) < f(x)</math> for some x <br>

<br>

'''Procedure'''

#Draw Y ~ g(.)
#Draw U ~ U(0,1) (Note: U and Y are independent)
#If <math>u\leq \frac{f(y)}{cg(y)}</math> (which is <math>P(accepted|y)</math>) then x = y; else return to Step 1<br>
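The three-step procedure can be sketched in Python (Python rather than MATLAB, so it is self-contained). The target <math>f(x) = 6x(1-x)</math> (the Beta(2,2) density), the U(0,1) proposal g and <math>c = 1.5 = \max f/g</math> are hypothetical choices for illustration. The sketch also counts proposals per accepted draw; by the efficiency argument above, their average should be close to c.

```python
import random


def f(x):
    """Hypothetical target density: Beta(2, 2), f(x) = 6x(1 - x) on [0, 1]."""
    return 6.0 * x * (1.0 - x)


def g(x):
    """Proposal density: U(0, 1), which we can sample directly with rng.random()."""
    return 1.0


C = 1.5  # max of f(x)/g(x) on [0, 1], attained at x = 0.5, so f <= C*g everywhere


def accept_reject(rng):
    """Return one draw from f and the number of proposals it needed."""
    tries = 0
    while True:
        tries += 1
        y = rng.random()             # Step 1: Y ~ g
        u = rng.random()             # Step 2: U ~ U(0, 1), independent of Y
        if u <= f(y) / (C * g(y)):   # Step 3: accept with probability f(y)/(C g(y))
            return y, tries


rng = random.Random(340)
results = [accept_reject(rng) for _ in range(50_000)]
xs = [x for x, _ in results]
print(sum(xs) / len(xs))                          # Beta(2, 2) has mean 0.5
print(sum(t for _, t in results) / len(results))  # average proposals per draw, close to C
```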

Note: Recall that <math>P(U\leq a)=a</math>. Thus by comparing u and <math>\frac{f(y)}{\, c g(y)}</math>, we accept y with exactly that probability. For instance, at points where cg(x) is much larger than f(x), the probability of accepting x = y is quite small.<br>

i.e. at X<sub>1</sub>, there is a low probability of accepting the point, since f(x) is much smaller than cg(x).<br>

At X<sub>2</sub>, there is a high probability of accepting the point, since <math>P(U\leq a)=a</math> for the uniform distribution.

Note: since U lies between 0 and 1, the acceptance condition <math>U\leq \frac{f(Y)}{cg(Y)}</math> can equivalently be written as <math>c\,U \leq \frac{f(Y)}{g(Y)}</math>, which shows how the condition depends on the constant c.

In summary, we introduced the relationship between cg(x) and f(x), explained why cg(x) must lie above f(x), and showed how this lets us reject some of the proposed points.

We also saw how to read the graph to judge where points above a value x are likely to be rejected or accepted.

In the example, x<sub>1</sub> is a point where most proposals are rejected and x<sub>2</sub> is a point where most are accepted.

'''Some notes on the constant c'''<br>

1. c is chosen such that <math> c g(y)\geq f(y)</math>, that is, <math> c g(y)</math> always dominates <math>f(y)</math>. Because of this,

c is always greater than or equal to one, and equals one if and only if the proposal distribution and the target distribution are the same. It is normally best to choose c as small as possible, i.e. <math>c = \max_y \frac{f(y)}{g(y)}</math>, so that c g(y) touches f(y) at one or more points.<br>

2. <math> \frac {1}{c} </math> is the area under <math> f(y)</math> divided by the area under <math> c g(y)</math>, and is the acceptance rate of the generated points. For example, if <math> \frac {1}{c} = 0.7</math> then on average 70 percent of all generated points are accepted.<br>

3. c is the average number of times Y must be generated from g to obtain one accepted sample.

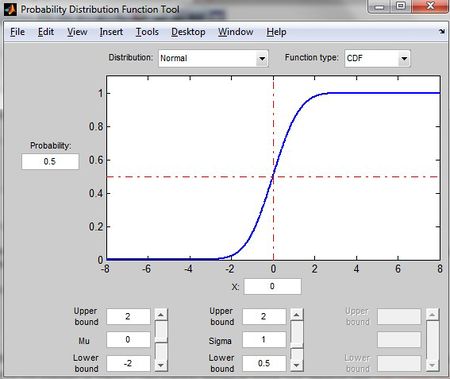

=== Theorem ===

Let <math>f: \R \rightarrow [0,+\infty]</math> be a well-defined pdf, and let <math>\displaystyle Y</math> be a random variable with pdf <math>g: \R \rightarrow [0,+\infty]</math> such that <math>\exists c \in \R^+</math> with <math>f \leq c \cdot g</math>. If <math>\displaystyle U \sim~ U(0,1)</math> is independent of <math>\displaystyle Y</math>, then the random variable defined as <math>X := Y \vert U \leq \frac{f(Y)}{c \cdot g(Y)}</math> has pdf <math>\displaystyle f</math>, and the condition <math>U \leq \frac{f(Y)}{c \cdot g(Y)}</math> is denoted by "Accepted".

=== Proof === | |||

Recall the conditional probability formulas:<br /> | |||

<math>\begin{align} | |||

P(A|B)=\frac{P(A \cap B)}{P(B)}, \text{ or }P(A|B)=\frac{P(B|A)P(A)}{P(B)} \text{ for pmf} | |||

\end{align}</math><br /> | |||

<math>P(y|accepted)=f(y)=\frac{P(accepted|y)P(y)}{P(accepted)}</math><br /> | |||

<br />based on the concept from '''procedure-step1''':<br /> | |||

<math>P(y)=g(y)</math><br /> | |||

<math>P(accepted|y)=\frac{f(y)}{cg(y)}</math> <br /> | |||

(the larger the value is, the larger the chance it will be selected) <br /><br /> | |||

<math> | |||

\begin{align} | |||

P(accepted)&=\int_y\ P(accepted|y)P(y)\\ | |||

&=\int_y\ \frac{f(s)}{cg(s)}g(s)ds\\ | |||

&=\frac{1}{c} \int_y\ f(s) ds\\ | |||

&=\frac{1}{c} | |||

\end{align}</math><br /> | |||

Therefore:<br />

<math>\begin{align}
f_X(y)&=P(y|\text{accepted})\\
&=\frac{\frac{f(y)}{cg(y)}g(y)}{1/c}\\
&=\frac{f(y)/c}{1/c}\\
&=f(y)\end{align}</math><br /><br /><br />
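As an informal numerical check of the two facts proved above (a sketch, not part of the original notes), the simulation below assumes an illustrative target <math>f(x)=6x(1-x)</math> on (0,1), chosen here only as an example, with proposal <math>g=U(0,1)</math> and <math>c=1.5</math> (the maximum of <math>f/g</math>, at <math>x=1/2</math>). Python's <code>random</code> module stands in for U(0,1). It estimates <math>P(\text{accepted})</math>, which should be near <math>1/c=2/3</math>, and the empirical CDF of the accepted values at 0.25, which should be near the exact <math>F(0.25)=3(0.25)^2-2(0.25)^3=0.15625</math>.

```python
import random

def check_theorem(n_proposals=200_000, seed=1):
    """Acceptance-rejection for the illustrative target f(x) = 6x(1-x)
    with proposal g = U(0,1) and c = 1.5.
    Returns (fraction of proposals accepted, empirical CDF at 0.25)."""
    rng = random.Random(seed)
    c = 1.5
    accepted = []
    for _ in range(n_proposals):
        y = rng.random()              # Y ~ g = U(0,1)
        u = rng.random()              # U ~ U(0,1), independent of Y
        f_y = 6 * y * (1 - y)         # target density at Y
        g_y = 1.0                     # proposal density at Y
        if u <= f_y / (c * g_y):      # the "Accepted" event
            accepted.append(y)
    acceptance_rate = len(accepted) / n_proposals
    ecdf_025 = sum(1 for x in accepted if x <= 0.25) / len(accepted)
    return acceptance_rate, ecdf_025

rate, ecdf = check_theorem()
print(f"P(accepted) ~ {rate:.3f} (theory 1/c = {1 / 1.5:.3f})")
print(f"F(0.25) ~ {ecdf:.3f} (theory 0.156)")
```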

'''''Here is an alternative introduction to the Acceptance-Rejection Method'''''

'''Comments:'''

-The Acceptance-Rejection Method is not good for all cases. Its main limitation is that sometimes many points will be rejected. Another disadvantage is that in some cases it can be very hard to pick <math>g(y)</math> and the constant <math>c</math>. We have to pick the SMALLEST <math>c</math> such that <math>cg(x) \geq f(x)</math>, otherwise the algorithm will not be efficient: a larger <math>c</math> makes <math>f(x)/(cg(x))</math> smaller, so the probability that <math>u \leq f(x)/(cg(x))</math> goes down, many points are rejected, and the algorithm becomes inefficient.

-'''Note:''' When <math>f(y)</math> is very different from <math>g(y)</math>, a point is less likely to be accepted, because the ratio <math>f(y)/(cg(y))</math> is very small for most <math>y</math> and it is unlikely that <math>U</math> falls below it. <br/>An example is a target density <math>f</math> with one or several sharp spikes in its domain: <math>c \cdot g</math> must be at least as high as every spike, which makes <math>c</math> larger than desired. As a result, the algorithm would be highly inefficient.

'''Acceptance-Rejection Method'''<br/>

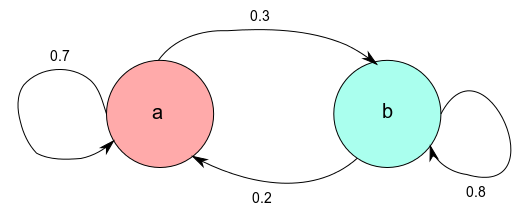

'''Example 1''' (discrete case)<br/>

We wish to generate <math>X \sim Bi(2,0.5)</math>, assuming that we cannot generate it directly.<br/>

We use the discrete uniform distribution DU[0,2] as the proposal distribution <math>g</math>.<br/>

<math>f(x)=Pr(X=x)=\binom{2}{x}(0.5)^2, \quad x=0,1,2</math><br/>

{| class=wikitable align=left
|<math>x</math>||0||1||2
|-
|<math>f(x)</math>||1/4||1/2||1/4
|-
|<math>g(x)</math>||1/3||1/3||1/3
|-
|<math>f(x)/g(x)</math>||3/4||3/2||3/4
|-
|<math>f(x)/(cg(x))</math>, with <math>c=3/2</math>||1/2||1||1/2
|}

Since we need <math>c \geq f(x)/g(x)</math> for every <math>x</math>,<br/>

the smallest valid choice is <math>c=\max_x f(x)/g(x)=3/2</math>.<br/>

Therefore, the algorithm is:<br/>

1. Generate <math>u,v \sim U(0,1)</math>.<br/>

2. Set <math>y= \lfloor 3u \rfloor</math> (this uses the uniform distribution to generate DU[0,2]).<br/>

3. If <math>y=0</math> and <math>v<\tfrac{1}{2}</math>, output 0.<br/>

If <math>y=2</math> and <math>v<\tfrac{1}{2}</math>, output 2.<br/>

Else if <math>y=1</math>, output 1; otherwise, return to step 1.<br/>
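The algorithm above can be sketched in Python as follows. This is an illustration only: the function name is hypothetical and Python's <code>random</code> module stands in for U(0,1). Checking <math>y=1</math> first is equivalent to the order in the steps, since <math>f(1)/(cg(1))=1</math> means <math>y=1</math> is always accepted.

```python
import random
from collections import Counter

def sample_binomial_2_half(rng):
    """Draw one value of X ~ Bi(2, 0.5) by acceptance-rejection with
    proposal DU[0,2] and c = 3/2, following steps 1-3 above."""
    while True:
        u, v = rng.random(), rng.random()   # step 1: u, v ~ U(0,1)
        y = int(3 * u)                      # step 2: floor(3u) is DU[0,2]
        if y == 1:                          # f/(cg) = 1, always accepted
            return 1
        if v < 0.5:                         # y is 0 or 2, f/(cg) = 1/2
            return y
        # otherwise reject and return to step 1

rng = random.Random(340)
counts = Counter(sample_binomial_2_half(rng) for _ in range(100_000))
freqs = {x: counts[x] / 100_000 for x in (0, 1, 2)}
print(freqs)  # frequencies should be close to 0.25, 0.5, 0.25
```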

An elaboration of “c”<br/>

<math>c</math> is the expected number of times the code runs to output one random variable. Remember that when <math>u < \tfrac{f(x)}{cg(x)}</math> is not satisfied, we need to go through the code again.<br/>

Proof<br/>

Let <math>f(x)</math> be the pdf (or pmf) we wish to generate from, assuming we cannot use the inverse transform method to generate from it directly.<br/>

Let <math>g(x)</math> be the helper (proposal) function.<br/>

Let <math>cg(x) \geq f(x)</math> for all <math>x</math>.<br/>

Since we generate <math>y</math> from <math>g(x)</math>,<br/>

<math>Pr(\text{select } y)=g(y)</math><br/>

<math>Pr(\text{output } y|\text{selected } y)=Pr\left(u<\frac{f(y)}{cg(y)}\right)= \frac{f(y)}{cg(y)}</math> (since <math>u \sim Unif(0,1)</math>)<br/>

Summing over all possible values <math>y_1,\ldots,y_n</math> of <math>y</math>: <math>Pr(\text{output})=\sum_{i=1}^{n} Pr(\text{output } y_i|\text{selected } y_i)\,Pr(\text{select } y_i)=\sum_{i=1}^{n}\frac{f(y_i)}{cg(y_i)}g(y_i)=\frac{1}{c}\sum_{i=1}^{n}f(y_i)=\frac{1}{c}</math> <br/>

Let <math>X</math> be the number of runs until the first output. Since each run succeeds independently with probability <math>1/c</math>, <math>X</math> follows a geometric distribution with probability of success <math>1/c</math>.<br/>

Therefore, <math>E(X)=\frac{1}{1/c}=c</math>.<br/>
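To see <math>E(X)=c</math> numerically, the sketch below (an illustration with a hypothetical function name, using Python's <code>random</code> module for U(0,1)) reruns Example 1 and counts how many passes through the loop are needed per output. Each pass accepts with probability <math>\tfrac{1}{3}+\tfrac{2}{3}\cdot\tfrac{1}{2}=\tfrac{2}{3}=1/c</math>, so the average count should be close to <math>c=3/2</math>.

```python
import random

def runs_until_output(rng):
    """Count how many times Example 1's loop runs before it outputs a value."""
    runs = 0
    while True:
        runs += 1
        u, v = rng.random(), rng.random()
        y = int(3 * u)               # y ~ DU[0,2]
        if y == 1 or v < 0.5:        # accept with probability f(y)/(c g(y))
            return runs

rng = random.Random(2013)
trials = [runs_until_output(rng) for _ in range(100_000)]
mean_runs = sum(trials) / len(trials)
print(f"average runs per output: {mean_runs:.3f} (c = 1.5)")
```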

Acknowledgements: Some material has been borrowed from the Stat340 notes of Winter 2013.

In summary, conditional probability is used to prove that, given acceptance, the output has exactly the target pdf.

The example shows how to choose <math>c</math> for a given pair of functions <math>g(x)</math> and <math>f(x)</math>.

=== Example of Acceptance-Rejection Method===

Generate a random variable with pdf <br />

<math>\displaystyle f(x) = 20x(1 - x)^3, 0< x <1 </math><br />

Since this random variable (which is beta with parameters (2,4)) is concentrated in the interval (0, 1), let us consider the acceptance-rejection method with<br />

<math>\displaystyle g(x) = 1,0<x<1</math><br />

To determine the constant c such that <math>f(x)/g(x) \leq c</math>, we use calculus to determine the maximum value of<br />

<math>\displaystyle f(x)/g(x) = 20x(1 - x)^3 </math><br />

Differentiating this quantity yields <br />

<math>\displaystyle \frac{d}{dx}\left[\frac{f(x)}{g(x)}\right]=20[(1-x)^3-3x(1-x)^2]=20(1-x)^2(1-4x)</math><br />

Setting this equal to 0 gives <math>x=1/4</math> or <math>x=1</math>; since <math>f(1)/g(1)=0</math>, the maximal value is attained at <math>x = 1/4</math>,

and thus, <br />

<math>\displaystyle \frac{f(x)}{g(x)} \leq 20 \cdot \frac{1}{4} \cdot \left(\frac{3}{4}\right)^3=\frac{135}{64}=c </math><br />

Hence,<br />

<math>\displaystyle \frac{f(x)}{cg(x)}=\frac{256}{27}x(1-x)^3</math><br />

and thus the simulation procedure is as follows:

1) Generate two independent random numbers <math>U_1, U_2 \sim U(0,1)</math>.

2) If U<sub>2</sub> < (256/27)·U<sub>1</sub>(1-U<sub>1</sub>)<sup>3</sup>, set X=U<sub>1</sub> and stop.

Otherwise return to step 1).

The average number of times that step 1) will be performed is c = 135/64 ≈ 2.11.
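The two-step procedure above translates directly into a short Python sketch (illustrative only; the function name is hypothetical and <code>random.Random.random()</code> stands in for U(0,1)). It also records how many times step 1) runs, so the sample mean can be compared with the Beta(2,4) mean <math>2/(2+4)=1/3</math> and the average iteration count with <math>c=135/64</math>.

```python
import random

C = 135 / 64  # maximum of f(x)/g(x) = 20x(1-x)^3, attained at x = 1/4

def sample_beta_2_4(rng):
    """Return one draw from f(x) = 20x(1-x)^3 and the number of iterations used."""
    iterations = 0
    while True:
        iterations += 1
        u1, u2 = rng.random(), rng.random()          # step 1)
        if u2 < (256 / 27) * u1 * (1 - u1) ** 3:     # step 2): U2 < f(U1)/(c g(U1))
            return u1, iterations

rng = random.Random(42)
draws = [sample_beta_2_4(rng) for _ in range(50_000)]
mean_x = sum(x for x, _ in draws) / len(draws)
mean_iters = sum(k for _, k in draws) / len(draws)
print(f"sample mean {mean_x:.3f} (Beta(2,4) mean = 1/3)")
print(f"average iterations {mean_iters:.3f} (c = {C:.3f})")
```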

(The above example is from http://www.cs.bgu.ac.il/~mps042/acceptance.htm, example 2.)

In summary, we use the derivative to find the maximum of f(x)/g(x), which gives the best (smallest valid) constant c.

===Another Example of Acceptance-Rejection Method===

Generate a random variable from:<br />

<math>\displaystyle f(x)=3x^2, 0<x<1 </math><br />

Take <math>g(x)</math> to be the uniform density on the interval (0,1), i.e. <math>g(x)=1</math> for <math>0<x<1</math>.<br />

Therefore:<br />

<math>\displaystyle c = \max \frac{f(x)}{g(x)}= 3</math><br />

The best constant <math>c</math> is <math>\max \frac{f(x)}{g(x)}</math>; this choice makes the area above <math>f(x)</math> and below <math>cg(x)</math> as small as possible.

Because <math>g</math> is the uniform density, <math>g(x)=1</math> on (0,1), so<br />

<math>\displaystyle \frac{f(x)}{cg(x)}= x^2</math><br />
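Following the general procedure, a draw <math>Y \sim U(0,1)</math> is accepted when <math>U \leq Y^2</math>. A minimal Python sketch of this sampler, assuming <code>random.Random.random()</code> as the U(0,1) source and a hypothetical function name, is below; the sample mean should be close to <math>E[X]=\int_0^1 3x^3\,dx = 3/4</math>.

```python
import random

def sample_3x2(rng):
    """Acceptance-rejection for f(x) = 3x^2 on (0,1) with g = U(0,1), c = 3.
    Accept Y when U <= f(Y)/(c g(Y)) = Y^2."""
    while True:
        y = rng.random()   # Y ~ U(0,1)
        u = rng.random()   # U ~ U(0,1)
        if u <= y * y:
            return y

rng = random.Random(7)
xs = [sample_3x2(rng) for _ in range(50_000)]
sample_mean = sum(xs) / len(xs)
print(sample_mean)  # should be close to E[X] = 3/4
```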

Acknowledgement: this is example 1 from http://www.cs.bgu.ac.il/~mps042/acceptance.htm

== Class 4 - Thursday, May 16 ==


'''Goals'''<br>

*To sample from a target distribution <math>f(x)</math>, we first need to find a proposal distribution <math>g(x)</math> that is easy to sample from. <br>

*The relationship between the proposal distribution and the target distribution is <math> c \cdot g(x) \geq f(x) </math>, where c is a constant. This means that the graph of f(x) lies entirely under the graph of <math> c \cdot g(x)</math>. <br>

*The chance of acceptance is smaller when the gap between <math>f(x)</math> and <math> c \cdot g(x)</math> is large, and vice versa. We use <math> c </math> to keep <math> \frac {f(x)}{c \cdot g(x)} </math> at most 1 (so <math>f(x) \leq c \cdot g(x)</math>). Therefore, we must find a constant <math> c </math> that achieves this.<br />

*In other words, <math>c</math> is chosen to make sure that <math> c \cdot g(x) \geq f(x) </math>. However, it does not make sense to choose <math>c</math> arbitrarily large. We need to choose <math>c</math> so that <math>c \cdot g(x)</math> fits <math>f(x)</math> as tightly as possible, i.e. we must find the minimum c such that the graph of f(x) lies under the graph of c·g(x). <br />

*The constant c cannot be negative; in fact <math>c \geq 1</math>, since <math>f</math> and <math>g</math> both integrate to 1.<br />

'''How to find c''':<br />

<math>\begin{align}
&c \cdot g(x) \geq f(x)\\
&c\geq \frac{f(x)}{g(x)} \\
&c= \max_x \left(\frac{f(x)}{g(x)}\right)
\end{align}</math><br>

If <math>f</math> and <math> g </math> are differentiable, we can find the maximum by taking the derivative and solving for <math>x_0</math> such that:<br/>

<math> 0=\frac{d}{dx}\frac{f(x)}{g(x)}\Big|_{x=x_0}</math> <br/>

Thus <math> c = \frac{f(x_0)}{g(x_0)} </math>. The endpoints of the support should also be checked, since the maximum can occur there without the derivative being zero.<br/>
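When the derivative is messy, <math>c</math> can be approximated numerically. The sketch below is a swapped-in technique (a plain grid search, not the calculus method above), and the helper name <code>find_c</code> is hypothetical. It evaluates <math>f/g</math> on an evenly spaced grid that includes both endpoints, so a maximum at an endpoint is also found. A grid can miss a very narrow spike, so in practice one might add a small safety margin to the result.

```python
def find_c(f, g, lo, hi, n_grid=100_000):
    """Approximate c = max f(x)/g(x) on [lo, hi] by evaluating the ratio on
    an evenly spaced grid (both endpoints included). Returns (c, argmax)."""
    best_x, best_ratio = lo, f(lo) / g(lo)
    for i in range(1, n_grid + 1):
        x = lo + (hi - lo) * i / n_grid
        ratio = f(x) / g(x)
        if ratio > best_ratio:
            best_x, best_ratio = x, ratio
    return best_ratio, best_x

# Beta(2,4) example: f(x) = 20x(1-x)^3 with g = U(0,1)
c, x0 = find_c(lambda x: 20 * x * (1 - x) ** 3, lambda x: 1.0, 0.0, 1.0)
print(c, x0)  # about 135/64 = 2.109375, attained near x0 = 0.25
```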

Note: finding c in this way is a key step of the Acceptance-Rejection Method.<br>

'''The Acceptance-Rejection method''' involves finding a distribution that we know how to sample from, g(x), and multiplying g(x) by a constant c so that <math>c \cdot g(x)</math> is always greater than or equal to f(x). Mathematically, we want <math> c \cdot g(x) \geq f(x) </math>.

This means that c has to be greater than or equal to <math>\frac{f(x)}{g(x)}</math> for every x, so the smallest possible c that satisfies the condition is the maximum value of <math>\frac{f(x)}{g(x)}</math>.<br/>

If c is too large, however, the chance of accepting generated values will be small, and the algorithm loses efficiency. Therefore, it is best to use the smallest possible c such that <math> c g(x) \geq f(x)</math>. <br>

'''Important points:'''<br>

*For this method to be efficient, the constant c must be selected so that the rejection rate is low. (The efficiency of this method is <math>\left ( \frac{1}{c} \right )</math>.)<br>

*It is easy to show that the expected number of accepted values among a given number of trials is <math> \frac{\text{Total number of trials}} {c} </math>. <br>

*Recall that the '''acceptance rate is 1/c''' (not the rejection rate, which is <math>1-1/c</math>).

:Let <math>X</math> be the number of trials needed for one acceptance; then <math> X \sim~ Geo(\frac{1}{c})</math><br>

:<math>\mathbb{E}[X] = \frac{1}{\frac{1}{c}} = c </math>

*The number of trials needed to generate a sample of size <math>N</math> follows a negative binomial distribution. The expected number of trials needed is then <math>cN</math>.<br>

*So far, the only distribution we know how to sample from is the '''UNIFORM''' distribution. <br>

'''Procedure''': <br>


1. Choose <math>g(x)</math> (a simple density function that we know how to sample from; so far, this means the Uniform). <br>

The easiest case is <math>U \sim~ Unif [0,1] </math>. In other cases, however, we need to generate from Unif(a,b), which we can obtain from a <math>U \sim~ Unif [0,1] </math> variable by the linear transformation <math>a+(b-a)U</math>. <br>
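A minimal Python sketch of this linear transformation follows (the function name is hypothetical; <code>random.Random.random()</code> plays the role of <math>U</math>). Python's standard library also provides <code>random.uniform(a, b)</code>, which is documented as computing the same expression.

```python
import random

def unif_ab(rng, a, b):
    """Draw from Unif(a, b) via the linear transformation a + (b - a) U."""
    return a + (b - a) * rng.random()

rng = random.Random(0)
xs = [unif_ab(rng, 2.0, 5.0) for _ in range(10_000)]
print(min(xs), max(xs), sum(xs) / len(xs))  # values lie in [2, 5], mean near 3.5
```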

2. Find a constant c such that <math> c \cdot g(x) \geq f(x) </math>; otherwise return to step 1.


'''Recall the general procedure of the Acceptance-Rejection Method'''

#Generate <math>Y \sim~ g(y)</math>
#Generate <math>U \sim~ Unif [0,1] </math>
#If <math>U \leq \frac{f(Y)}{c \cdot g(Y)}</math>, then set X=Y; else return to step 1. (This is not the way to find c; it is the general procedure.)
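The three steps can be written as one generic Python function. This is a sketch under stated assumptions: the name <code>accept_reject</code> and its parameters are hypothetical, Python's <code>random</code> module stands in for U[0,1], and the caller must supply a valid <math>c</math> with <math>f \leq c \cdot g</math>. It also counts proposals, so the average number of trials per accepted value should be close to <math>c</math> (the expected total for <math>N</math> samples is <math>cN</math>).

```python
import random

def accept_reject(f, g_sample, g_pdf, c, n, rng):
    """Draw n values from density f by acceptance-rejection.

    f:        target density (only evaluated, never sampled from)
    g_sample: function taking rng and returning one draw Y from the proposal g
    g_pdf:    proposal density g
    c:        constant with f(x) <= c * g(x) for all x
    Returns the accepted values and the total number of proposals generated.
    """
    samples, trials = [], 0
    while len(samples) < n:
        trials += 1
        y = g_sample(rng)                 # step 1: Y ~ g
        u = rng.random()                  # step 2: U ~ Unif[0,1)
        if u <= f(y) / (c * g_pdf(y)):    # step 3: accept and set X = Y
            samples.append(y)
        # otherwise the loop returns to step 1
    return samples, trials

# Usage: the Beta(2,4) target f(x) = 20x(1-x)^3 with g = U(0,1), c = 135/64
rng = random.Random(16)
xs, trials = accept_reject(
    f=lambda x: 20 * x * (1 - x) ** 3,
    g_sample=lambda r: r.random(),
    g_pdf=lambda x: 1.0,
    c=135 / 64,
    n=20_000,
    rng=rng,
)
print(trials / len(xs))  # should be close to c = 135/64 (about 2.11)
```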

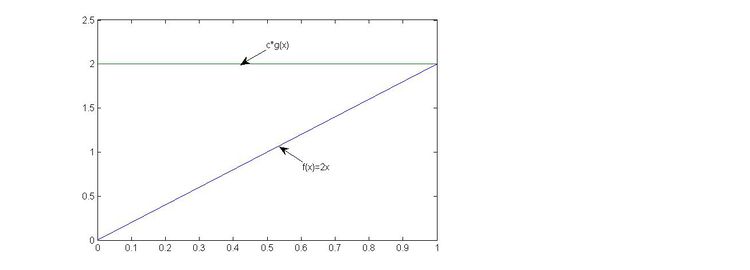

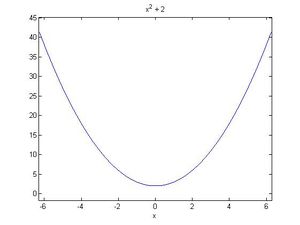

<hr><b>Example: <br>

Generate a random variable from the pdf</b><br>

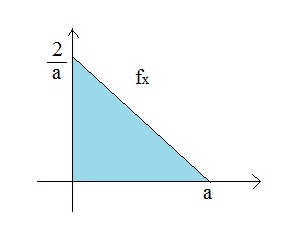

<math> f(x) =
\begin{cases}
2x, & \mbox{if }0 \leqslant x \leqslant 1 \\
0, & \mbox{otherwise}
\end{cases} </math>

We note that this is a special case of Beta(2,1), where

<math>beta(a,b)=\frac{\Gamma(a+b)}{\Gamma(a)\Gamma(b)}x^{(a-1)}(1-x)^{(b-1)}</math><br>

where <math>\Gamma(n) = (n - 1)!</math> if n is a positive integer, and in general

<math>\Gamma(z)=\int _{0}^{\infty }t^{z-1}e^{-t}\,dt</math>

Aside: Beta function

In mathematics, the beta function, also called the Euler integral of the first kind, is a special function defined by

<math>B(x,y)=\int_0^1 \! {t^{(x-1)}}{(1-t)^{(y-1)}}\,dt</math>. It satisfies <math>B(a,b)=\frac{\Gamma(a)\Gamma(b)}{\Gamma(a+b)}</math>, the reciprocal of the normalizing constant above.<br>

<math>beta(2,1)= \frac{\Gamma(3)}{\Gamma(2)\Gamma(1)}x^1 (1-x)^0 = 2x</math><br>
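The normalizing constant can be checked with Python's <code>math.gamma</code>. The helper <code>beta_pdf</code> below is a hypothetical illustration of the formula above, not a library function; it reproduces <math>beta(2,1)=2x</math> and the constant 20 of the earlier Beta(2,4) example.

```python
import math

def beta_pdf(x, a, b):
    """Beta(a, b) density: Gamma(a+b) / (Gamma(a) Gamma(b)) * x^(a-1) (1-x)^(b-1)."""
    const = math.gamma(a + b) / (math.gamma(a) * math.gamma(b))
    return const * x ** (a - 1) * (1 - x) ** (b - 1)

print(beta_pdf(0.3, 2, 1))   # should equal 2x = 0.6 up to rounding
print(beta_pdf(0.25, 2, 4))  # should equal 20 * 0.25 * 0.75**3 = 135/64
```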

<hr>

<math>g(x)=1, 0 \leq x \leq 1</math> (the U(0,1) density)<br>

<math>y \sim g</math><br>

<math>f(x)\leq c\cdot g(x)</math><br>

<math>c\geq \frac{f(x)}{g(x)}</math><br>

<math>c = \max \frac{f(x)}{g(x)} </math><br>

<br><math>c = \max_{0 \leq x \leq 1} \frac{2x}{1}</math><br>

The maximum of <math>2x</math> on [0,1] is attained at the endpoint x = 1, so c = 2.

<br />Note that c is a scalar greater than or equal to 1; here c = 2.

<br />g(x) is the proposal distribution (scaled up to <math>c\cdot g(x)</math>), and f(x) is the target distribution.

[[File:Beta(2,1)_example.jpg|750x750px]]

'''Note:''' g is the uniform density, so its graph only reaches height 1 on the y-axis for x from 0 to 1, whereas f(x)=2x reaches height 2. Thus we need to multiply g by c to ensure that <math>c\cdot g</math> covers the entire area under f(x). In this case c=2, so <math>c\cdot g</math> runs from 0 to 2 on the y-axis, which covers f(x).

'''Comment:'''<br>

From the picture above, we can observe that the area under f(x)=2x is half of the area under <math>c\cdot g(x)</math> (1 versus 2). This is why, in order to sample 1000 points from f(x), we need to generate approximately 2000 proposal points from UNIF(0,1).

And in general, if we want to sample n points from a distribution with pdf f(x), we need to generate approximately <math>n\cdot c</math> points in total from the proposal distribution g(x). <br>
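The 1000-versus-2000 claim can be checked with a short Python sketch (illustrative only; the function name is hypothetical and <code>random.Random.random()</code> stands in for U(0,1)). Since <math>f(y)/(cg(y))=2y/2=y</math>, a proposal <math>y</math> is accepted when <math>u \leq y</math>, and the number of proposals needed for 1000 samples should be near <math>n \cdot c = 2000</math>.

```python
import random

def sample_beta_2_1(n, rng):
    """Draw n values from f(x) = 2x on [0,1] by acceptance-rejection with
    g = U(0,1) and c = 2; also return how many proposals y were generated."""
    samples, proposals = [], 0
    while len(samples) < n:
        y = rng.random()     # draw y ~ U(0,1)
        u = rng.random()     # draw u ~ U(0,1)
        proposals += 1
        if u <= y:           # f(y)/(c g(y)) = 2y/2 = y
            samples.append(y)
    return samples, proposals

rng = random.Random(1000)
xs, proposals = sample_beta_2_1(1000, rng)
print(proposals)  # typically close to n * c = 2000
```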

<b>Steps</b>

<ol>

<li>Draw <math>y \sim U(0,1)</math></li>

<li>Draw <math>u \sim U(0,1)</math></li>

<li>If <math>u \leq \frac{2y}{2\cdot 1}</math>, i.e. <math>u \leq y</math>, then <math> x=y</math><br>