Show, Attend and Tell: Neural Image Caption Generation with Visual Attention


Revision as of 23:20, 19 November 2015

Introduction

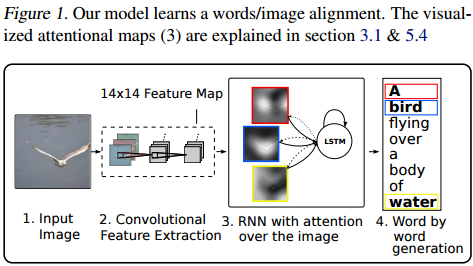

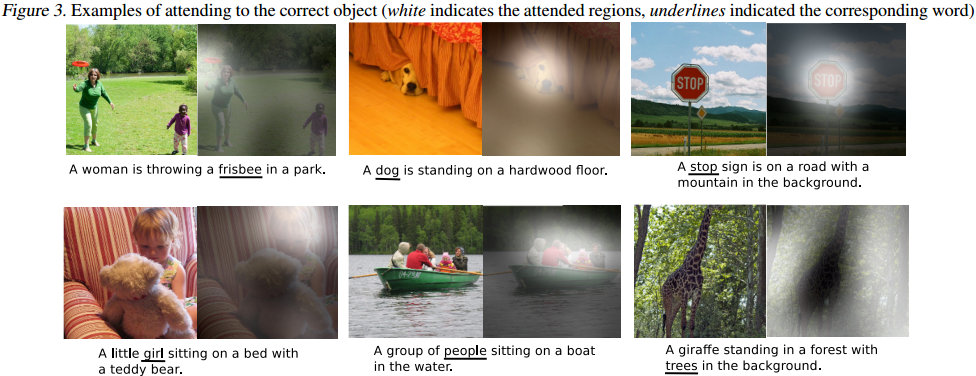

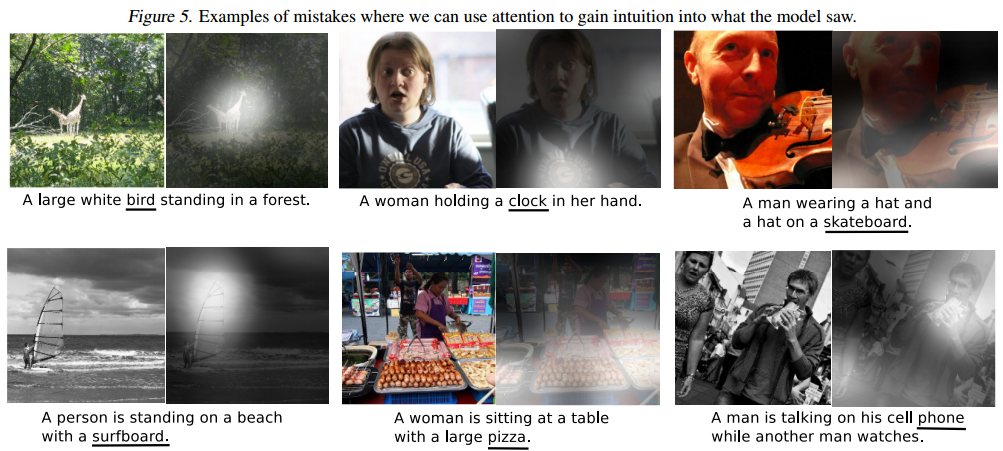

This paper<ref> Xu, Kelvin, et al. "Show, attend and tell: Neural image caption generation with visual attention." arXiv preprint arXiv:1502.03044 (2015). </ref> introduces an attention-based model that automatically learns to describe the content of images. It is able to focus on salient parts of the image while generating the corresponding word in the output sentence. A visualization shows which part of the image the model attended to when generating each word of the output. This gives a sense of what is going on inside the model and is especially useful for understanding the kinds of mistakes it makes. The model is tested on three datasets: Flickr8k, Flickr30k, and MS COCO.

Motivation

Caption generation, which requires compressing huge amounts of salient visual information into descriptive language, was recently improved by combining convolutional neural networks with recurrent neural networks. However, using representations from the top layer of a convolutional network, which distill the information in an image down to the most salient objects, can lose information that would be useful for richer, more descriptive captions. Retaining this information by using lower-level representations was the motivation for the current work.

Contributions

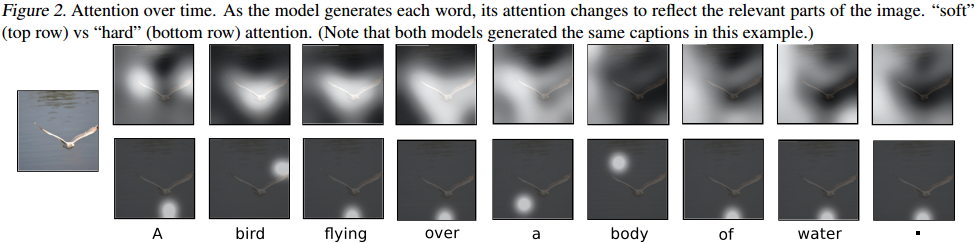

- Two attention-based image caption generators under a common framework: a "soft" deterministic attention mechanism and a "hard" stochastic attention mechanism.

- A demonstration of how to gain insight into and interpret the results of this framework by visualizing "where" and "what" the attention focused on.

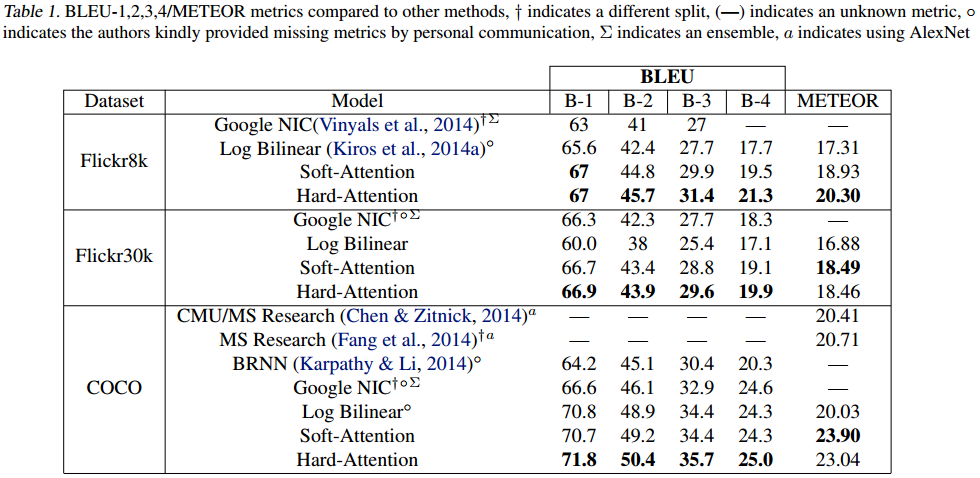

- Quantitative validation of the usefulness of attention in caption generation, with state-of-the-art performance on three datasets (Flickr8k, Flickr30k, and MS COCO).

Model

The model takes in a single image and generates a caption of arbitrary length. The caption is a sequence of one-hot encoded words (binary vector) from a given vocabulary.

Encoder: Convolutional Features

Feature vectors are extracted from a convolutional neural network and used as input to the attention mechanism. The extractor produces L vectors, each D-dimensional and each corresponding to a part of the image.

Unlike previous work, features are extracted from a lower convolutional layer instead of a fully connected layer. This allows the feature vectors to have a correspondence with portions of the 2D image.
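The correspondence between feature vectors and image regions can be illustrated with a minimal NumPy sketch. The 14 x 14 x 512 feature-map shape and the helper name grid_position are assumptions made for illustration (they are not stated in this summary), and random values stand in for the output of a real convolutional network.

```python
import numpy as np

# Assumed shape of a lower convolutional layer's output: a 14 x 14 spatial
# grid with 512 channels (illustrative values, not taken from this summary).
H, W, D = 14, 14, 512
rng = np.random.default_rng(0)
feature_map = rng.standard_normal((H, W, D))  # stand-in for real CNN output

# Flatten the spatial grid: each of the L = H * W rows is one annotation
# vector a_i of dimension D, tied to one cell of the 2D grid.
a = feature_map.reshape(H * W, D)
L = a.shape[0]


def grid_position(i, width=W):
    """Map annotation index i back to its (row, column) cell in the grid."""
    return divmod(i, width)
```

Because the reshape keeps row-major order, a[i] is exactly the feature stored at grid_position(i), which is what lets attention weights be drawn back onto the image.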

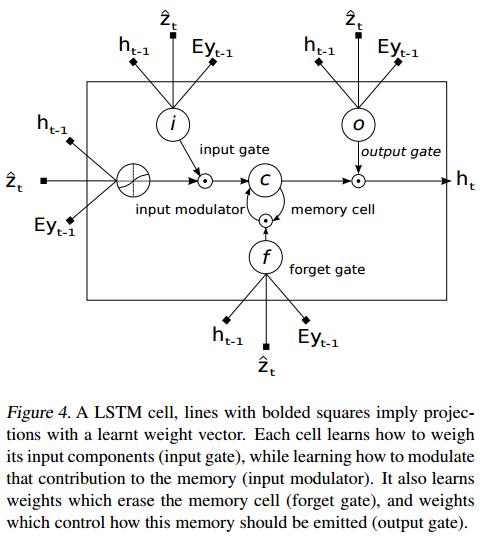

Decoder: Long Short-Term Memory Network

The purpose of the LSTM is to output a sequence of 1-of-K encodings represented as:

[math]\displaystyle{ y=\{y_1,\dots,y_C\},\; y_i\in\mathbb{R}^K }[/math], where C is the length of the caption and K is the vocabulary size.

To generate this sequence of outputs, a set of feature vectors was extracted from the image using a convolutional neural network and represented as:

[math]\displaystyle{ a=\{a_1,\dots,a_L\},\; a_i\in\mathbb{R}^D }[/math], where L is the number of feature vectors and D is the dimension of each feature vector extracted by the convolutional neural network.

Let [math]\displaystyle{ T_{s,t} : \mathbb{R}^s \to \mathbb{R}^t }[/math] be a simple affine transformation, i.e. [math]\displaystyle{ \,Wx + b }[/math] for some projection weight matrix W and some bias vector b learned as parameters of the LSTM.

The equations for the LSTM can then be simplified as:

[math]\displaystyle{ \begin{pmatrix}i_t\\f_t\\o_t\\g_t\end{pmatrix}=\begin{pmatrix}\sigma\\\sigma\\\sigma\\\tanh\end{pmatrix}T_{D+m+n,n}\begin{pmatrix}Ey_{t-1}\\h_{t-1}\\\hat z_{t}\end{pmatrix} }[/math]

[math]\displaystyle{ c_t=f_t\odot c_{t-1} + i_t \odot g_t }[/math]

[math]\displaystyle{ h_t=o_t \odot \tanh(c_t) }[/math]

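where [math]\displaystyle{ \,i_t,f_t,o_t,g_t,c_t,h_t }[/math] correspond to the values and gate labels in the diagram (input gate, forget gate, output gate, candidate input, memory cell and hidden state, respectively), and m and n denote the embedding and LSTM dimensionality. Additionally, [math]\displaystyle{ \,\sigma }[/math] is the logistic sigmoid function, and both it and [math]\displaystyle{ \,\tanh }[/math] are applied element-wise in the first equation.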
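A single step of these equations can be sketched in NumPy as follows. This is an illustrative sketch rather than the authors' implementation: the function name lstm_step, the stacking of the four gate transformations into one matrix W of shape (4n, m+n+D), and the toy dimensions are all assumptions.

```python
import numpy as np


def sigmoid(x):
    """Logistic sigmoid, applied element-wise."""
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(Ey_prev, h_prev, c_prev, z_hat, W, b):
    """One LSTM step with a context vector as extra input.

    Ey_prev: embedded previous word, shape (m,)
    h_prev, c_prev: previous hidden state and memory cell, shape (n,)
    z_hat: context vector, shape (D,)
    W, b: the four gate affine maps stacked together (an assumed layout),
          shapes (4n, m + n + D) and (4n,)
    """
    n = h_prev.shape[0]
    x = np.concatenate([Ey_prev, h_prev, z_hat])
    pre = W @ x + b                      # affine transformation T
    i = sigmoid(pre[0:n])                # input gate
    f = sigmoid(pre[n:2 * n])            # forget gate
    o = sigmoid(pre[2 * n:3 * n])        # output gate
    g = np.tanh(pre[3 * n:4 * n])        # candidate input
    c = f * c_prev + i * g               # new memory cell
    h = o * np.tanh(c)                   # new hidden state
    return h, c


# Toy dimensions chosen only for illustration.
m, n, D = 4, 3, 5
rng = np.random.default_rng(0)
W = 0.1 * rng.standard_normal((4 * n, m + n + D))
b = np.zeros(4 * n)
h, c = lstm_step(rng.standard_normal(m), np.zeros(n), np.zeros(n),
                 rng.standard_normal(D), W, b)
```

The only difference from a standard LSTM step in this sketch is that the context vector is concatenated with the word embedding and the previous hidden state before the affine transformation.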

At each time step, the LSTM outputs the relative probability of every word in the vocabulary given the context vector, the previous hidden state and the previously generated word. This is done through additional feedforward layers between the LSTM and the output layer, known as a deep output layer setup, which take the LSTM state [math]\displaystyle{ \,h_t }[/math] and apply additional transformations to obtain the relative probability:

[math]\displaystyle{ p(y_t \mid a,y_1^{t-1})\propto \exp(L_o(Ey_{t-1}+L_hh_t+L_z\hat z_t)) }[/math]

where [math]\displaystyle{ L_o\in\mathbb{R}^{K\times m},L_h\in\mathbb{R}^{m\times n},L_z\in\mathbb{R}^{m\times D},E\in\mathbb{R}^{m\times K} }[/math] are randomly initialized parameters that are learned through training the LSTM. This series of matrix and vector multiplications results in a vector of dimension K in which each element represents the relative probability that the word indexed by that element comes next in the output sequence.

[math]\displaystyle{ \hat{z} }[/math] is the context vector, which is a function of the feature vectors [math]\displaystyle{ a=\{a_1,\dots,a_L\} }[/math] and the attention model, as discussed in the next section.
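The deep output layer can be sketched in NumPy as below. The function name word_distribution, the toy dimensions and the random parameter values are assumptions for illustration; the normalization step turns the relative probabilities into a proper distribution over the K words.

```python
import numpy as np


def word_distribution(y_prev, h, z_hat, E, L_o, L_h, L_z):
    """Normalized p(y_t | a, y_1^{t-1}) from the deep output layer.

    y_prev: one-hot previous word, shape (K,)
    h: LSTM hidden state, shape (n,);  z_hat: context vector, shape (D,)
    E: (m, K), L_o: (K, m), L_h: (m, n), L_z: (m, D)
    """
    logits = L_o @ (E @ y_prev + L_h @ h + L_z @ z_hat)
    logits = logits - logits.max()       # shift for numerical stability
    p = np.exp(logits)
    return p / p.sum()


# Toy dimensions and random parameters, chosen only for illustration.
K, m, n, D = 6, 4, 3, 5
rng = np.random.default_rng(0)
E = rng.standard_normal((m, K))
L_o = rng.standard_normal((K, m))
L_h = rng.standard_normal((m, n))
L_z = rng.standard_normal((m, D))

y_prev = np.zeros(K)
y_prev[2] = 1.0                          # previous word has index 2
h = rng.standard_normal(n)
z_hat = rng.standard_normal(D)

p = word_distribution(y_prev, h, z_hat, E, L_o, L_h, L_z)
next_word = int(np.argmax(p))            # greedy choice of the next word
```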

Attention: Two Variants

The output of the attention algorithm is one of the inputs that influence the state of the LSTM. Two variants of the attention algorithm are used: stochastic "hard" attention and deterministic "soft" attention. The visual differences between the two can be seen in the "Properties" section.

Stochastic "hard" attention forms the context vector [math]\displaystyle{ \hat{z}_t }[/math] from a combination of a one-hot encoded variable [math]\displaystyle{ s_{t,i} }[/math] and the extracted features [math]\displaystyle{ a_{i} }[/math], i.e. [math]\displaystyle{ \hat{z}_t = \sum_i s_{t,i} a_i }[/math]. It is called "hard" attention because a hard choice is made at each feature; it is stochastic because [math]\displaystyle{ s_{t,i} }[/math] is sampled from a multinoulli distribution [http://cs.brown.edu/courses/cs195-5/spring2012/lectures/2012-01-31_probabilityDecisions.pdf (see page 11 of this link for an explanation of the distribution)]. In this approach the location variable [math]\displaystyle{ s_t }[/math] indicates where the model decides to focus attention when generating the [math]\displaystyle{ t^{th} }[/math] word.

Learning stochastic attention requires sampling the attention location [math]\displaystyle{ s_t }[/math] each time. Instead, we can take the expectation of the context vector [math]\displaystyle{ \hat{z}_t }[/math] directly and formulate a deterministic attention model by computing a soft attention-weighted annotation vector. Deterministic "soft" attention therefore means using the expectation of the context vector rather than a sampled one. It is deterministic because [math]\displaystyle{ s_{t,i} }[/math] is not sampled from a distribution, and it is soft because the whole distribution is optimized rather than the individual choices.
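The difference between the two variants can be illustrated with a small NumPy sketch. The attention weights alpha are taken as given here (how they are computed is not covered in this summary), and the function names and toy values are assumptions for illustration only.

```python
import numpy as np


def hard_context(a, alpha, rng):
    """Hard attention: sample one location and return its feature vector."""
    i = rng.choice(len(alpha), p=alpha)  # location drawn from a multinoulli
    s = np.zeros(len(alpha))
    s[i] = 1.0                           # one-hot location variable s_t
    return s @ a                         # equals a[i]


def soft_context(a, alpha):
    """Soft attention: expected context vector, sum_i alpha_i * a_i."""
    return alpha @ a


# Toy example with L = 4 feature vectors of dimension D = 3.
a = np.arange(12.0).reshape(4, 3)
alpha = np.array([0.1, 0.2, 0.3, 0.4])   # assumed attention weights, sum to 1
rng = np.random.default_rng(0)

z_hard = hard_context(a, alpha, rng)     # one of the rows of a, chosen at random
z_soft = soft_context(a, alpha)          # weighted average of all rows
```

In this sketch the soft context vector is the average that the hard variant would produce over many samples, which is why it can be computed without any sampling.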

The actual optimization methods for both of these attention methods are outside the scope of this summary.

Properties

"Where" the network looks next depends on the sequence of words that has already been generated.

The attention framework learns latent alignments from scratch instead of explicitly using object detectors. This allows the model to go beyond "objectness" and learn to attend to abstract concepts.

Training

Each mini-batch used in training contained captions of similar length. This is because the implementation requires time proportional to the length of the longest sentence per update, so keeping all the sentences in an update at a similar length improved the convergence speed dramatically.
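One simple way to build such mini-batches is to sort captions by length and cut the sorted list into chunks, as in the sketch below. This is an assumed approach for illustration, not necessarily the procedure used by the authors, and the function name and toy data are hypothetical.

```python
import random


def length_bucketed_batches(captions, batch_size, seed=0):
    """Group tokenized captions into mini-batches of similar length."""
    # Sort indices by caption length so that neighbours have similar lengths.
    order = sorted(range(len(captions)), key=lambda i: len(captions[i]))
    batches = [order[k:k + batch_size]
               for k in range(0, len(order), batch_size)]
    # Shuffle the batch order so short captions are not always seen first.
    random.Random(seed).shuffle(batches)
    return [[captions[i] for i in batch] for batch in batches]


# Toy captions: lists of placeholder tokens with the given lengths.
captions = [["w"] * length for length in [3, 9, 4, 10, 3, 9, 4, 10]]
batches = length_bucketed_batches(captions, batch_size=4)
spreads = [max(map(len, b)) - min(map(len, b)) for b in batches]
```

Each update then costs time proportional to the longest caption in its own batch rather than to the longest caption in the whole dataset.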

Two regularization techniques were used: dropout and early stopping on BLEU score. Since BLEU is the more commonly reported metric, it is used on the validation set for model selection.

The MS COCO dataset has more than 5 reference sentences for some of the images, while the Flickr datasets have exactly 5. For consistency, the reference sentences for all images in the MS COCO dataset were truncated to 5. Some basic tokenization was also applied to the MS COCO dataset to be consistent with the tokenization in the Flickr datasets.

On the largest dataset (MS COCO), the attention model took less than 3 days to train on an NVIDIA Titan Black GPU.

Results

Results are reported with the BLEU and METEOR metrics. BLEU is one of the most common metrics for translation tasks, but because the metric has drawn some criticism, METEOR is reported as well. Both metrics were designed for evaluating machine translation, which is typically from one language to another. Caption generation can be thought of as analogous to translation, where the image is a sentence in the original 'language' and the caption is its translation into English (or another language, but in this case the captions are only in English).

References

<references />