discLDA: Discriminative Learning for Dimensionality Reduction and Classification

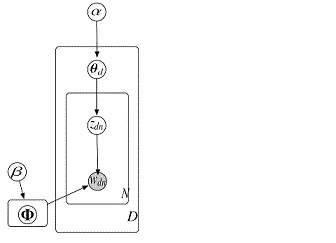

Revision as of 18:24, 9 November 2010

Introduction

Dimensionality reduction is a common and often necessary step in most machine learning applications and high-dimensional data analyses. Linear methods for dimensionality reduction include principal component analysis (PCA) and Fisher discriminant analysis (FDA); nonlinear procedures include kernelized versions of PCA and FDA as well as manifold learning algorithms.
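For reference, the first of the linear methods mentioned above can be sketched in a few lines. The block below is a minimal, dependency-free PCA that keeps only the first principal component, found by power iteration on the sample covariance matrix. The function names are hypothetical, and libraries typically compute PCA with an SVD instead, so treat this as an illustration of the idea rather than a reference implementation.

```python
import math
import random


def mean_center(X):
    """Subtract the per-feature mean from each row of X (a list of equal-length lists)."""
    n, d = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    return [[row[j] - means[j] for j in range(d)] for row in X]


def covariance(Xc):
    """Sample covariance matrix (d x d) of already-centred data."""
    n, d = len(Xc), len(Xc[0])
    return [[sum(r[i] * r[j] for r in Xc) / (n - 1) for j in range(d)]
            for i in range(d)]


def top_component(C, iters=200, seed=0):
    """Leading eigenvector of the symmetric matrix C, found by power iteration."""
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(len(C))]  # arbitrary non-zero start
    for _ in range(iters):
        w = [sum(c * x for c, x in zip(row, v)) for row in C]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # C is the zero matrix: there is no dominant direction
            return v
        v = [x / norm for x in w]
    return v


def pca_1d(X):
    """Reduce each row of X to one number: its projection on the first principal component."""
    Xc = mean_center(X)
    v = top_component(covariance(Xc))
    scores = [sum(a * b for a, b in zip(row, v)) for row in Xc]
    return scores, v
```

On points lying on the line y = 2x, the recovered direction is (1, 2)/√5 up to sign, and each 2-D point is reduced to a single coordinate along that line.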

A recent trend in dimensionality reduction is to focus on probabilistic models. These models, which include generative topographic mapping, factor analysis, independent component analysis and probabilistic latent semantic analysis (pLSA), are generally specified in terms of an underlying independence assumption or a low-rank assumption. They are usually fit by maximum likelihood, although Bayesian methods are sometimes used.

LDA

Latent Dirichlet Allocation (LDA) is a Bayesian model in the spirit of probabilistic latent semantic analysis (pLSA) that models each data point (e.g., a document) as a collection of draws from a mixture model in which each mixture component is known as a topic.
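To make the mixture view concrete: if [math]\displaystyle{ \theta_d }[/math] denotes a document's topic proportions and [math]\displaystyle{ \phi_k }[/math] the word distribution of topic k (the notation of the generative process below), then every word of document d is effectively drawn from the weighted combination [math]\displaystyle{ p(w \mid \theta_d, \Phi) = \sum_k \theta_{dk} \phi_{k,w} }[/math]. The small sketch below computes that combined distribution; the function name and the list-of-lists layout are illustrative choices, not taken from the original.

```python
def word_distribution(theta, phi):
    """Mixture over the vocabulary: p(w | theta, Phi) = sum_k theta[k] * phi[k][w].

    theta: K topic proportions for one document (non-negative, summing to 1).
    phi:   K rows, each a word distribution over a vocabulary of size V.
    """
    vocab_size = len(phi[0])
    return [sum(t * topic[w] for t, topic in zip(theta, phi))
            for w in range(vocab_size)]


# Usage: two topics over a three-word vocabulary.
p = word_distribution([0.4, 0.6], [[0.5, 0.5, 0.0], [0.0, 0.2, 0.8]])
```

Here `p` is approximately `[0.2, 0.32, 0.48]`: each mixture component (topic) contributes its word probabilities in proportion to its weight in the document.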

Figure 1 shows the generative process for the vector [math]\displaystyle{ w_d }[/math], the bag-of-words representation of document d. The process has three steps:

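1) [math]\displaystyle{ \theta_d \sim \mathrm{Dir}(\alpha) }[/math]: draw the topic proportions of document d.

2) [math]\displaystyle{ z_{dn} \sim \mathrm{Multi}(\theta_d) }[/math]: for each word position n in document d, choose a topic.

3) [math]\displaystyle{ w_{dn} \sim \mathrm{Multi}(\phi_{z_{dn}}) }[/math]: draw the n-th word from the word distribution of the chosen topic.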
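The three steps above can be sketched as a sampler for a single document. This is a minimal illustration using only Python's `random` module: the Dirichlet draw is obtained by normalising independent Gamma(α_k, 1) variates (a standard construction), and each "Multi" draw is a single categorical draw via `random.choices`. The function names, the `n_words` argument and the toy parameters are hypothetical.

```python
import random
from collections import Counter


def sample_dirichlet(alpha, rng):
    """theta ~ Dir(alpha), via normalised independent Gamma(alpha_k, 1) draws."""
    g = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(g)
    return [x / total for x in g]


def generate_document(alpha, phi, n_words, rng):
    """Sample one document of n_words tokens following steps 1-3.

    alpha: Dirichlet hyperparameter, length K (all entries > 0).
    phi:   K x V topic-word distributions; phi[k] is the word distribution of topic k.
    Returns (theta_d, z_d, w_d), where z_d and w_d hold topic and word indices.
    """
    theta = sample_dirichlet(alpha, rng)                  # step 1
    topics, vocab = range(len(phi)), range(len(phi[0]))
    z, w = [], []
    for _ in range(n_words):
        z_n = rng.choices(topics, weights=theta)[0]       # step 2
        w_n = rng.choices(vocab, weights=phi[z_n])[0]     # step 3
        z.append(z_n)
        w.append(w_n)
    return theta, z, w


# Usage: two topics over a three-word vocabulary.
rng = random.Random(0)
theta_d, z_d, w_d = generate_document(
    [0.5, 0.5], [[0.7, 0.3, 0.0], [0.0, 0.3, 0.7]], 10, rng)
bag_of_words = Counter(w_d)  # word index -> count, i.e. the bag-of-words vector
```

Only `w_d` (or its counts) is observed; `theta_d` and `z_d` are the latent variables that LDA has to reason about.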

Given a set of documents [math]\displaystyle{ \{w_d\}_{d=1}^{D} }[/math], the principal task is to estimate the topic parameters [math]\displaystyle{ \Phi = \{\phi_k\}_{k=1}^{K} }[/math], where K is the number of topics. This is done by maximum likelihood: [math]\displaystyle{ \Phi^* = \arg\max_{\Phi} \, p(\{w_d\}_{d=1}^{D}; \Phi) }[/math].
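The likelihood in this objective is obtained by integrating out each document's topic proportions, [math]\displaystyle{ p(w_d; \Phi) = \int \mathrm{Dir}(\theta_d; \alpha) \prod_{n=1}^{N_d} \sum_{k=1}^{K} \theta_{dk} \phi_{k, w_{dn}} \, d\theta_d }[/math], where N_d is the length of document d, and documents are independent given the parameters, so the corpus log-likelihood is a sum over d. This integral has no closed form in general. The sketch below only evaluates the objective for a given Φ, using a naive Monte Carlo average over draws of θ_d from the prior; it does not compute the argmax, which for LDA is usually approached with approximate inference such as variational EM or Gibbs sampling. It assumes α is a fixed, known hyperparameter, and all names are hypothetical.

```python
import math
import random


def sample_dirichlet(alpha, rng):
    """theta ~ Dir(alpha), via normalised independent Gamma(alpha_k, 1) draws."""
    g = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(g)
    return [x / total for x in g]


def log_likelihood_mc(words, alpha, phi, n_samples=2000, seed=0):
    """Naive Monte Carlo estimate of log p(w_d; alpha, Phi) for one document.

    Averages prod_n sum_k theta_k * phi[k][w_n] over theta ~ Dir(alpha),
    working in log space and combining the samples with log-sum-exp.
    """
    rng = random.Random(seed)
    log_terms = []
    for _ in range(n_samples):
        theta = sample_dirichlet(alpha, rng)
        lt = 0.0
        for w in words:
            p = sum(t * topic[w] for t, topic in zip(theta, phi))
            if p == 0.0:  # this word is impossible under this theta
                lt = -math.inf
                break
            lt += math.log(p)
        log_terms.append(lt)
    m = max(log_terms)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(t - m) for t in log_terms)) - math.log(n_samples)


def corpus_log_likelihood(docs, alpha, phi, **kwargs):
    """Documents are independent given the parameters, so log-likelihoods add up."""
    return sum(log_likelihood_mc(d, alpha, phi, **kwargs) for d in docs)
```

Sampling θ_d from the prior is simple but its variance grows quickly with document length, so this estimator is only practical for short toy documents. When all topics share the same word distribution, the estimate reduces exactly to the product of word probabilities, which makes a convenient sanity check.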