A Neural Representation of Sketch Drawings

Introduction

In this paper, the authors presented sketch-rnn, a recurrent neural network (RNN) able to construct stroke-based drawings of common objects. They described new, robust training methods for generating coherent sketches in a vector format. They also outlined a framework for both conditional and unconditional sketch generation.

Neural networks have been heavily used as image generation tools, for example Generative Adversarial Networks, Variational Inference and autoregressive models. Most of these models focus on modelling the pixels of an image. People, however, learn from a very young age to draw with sequences of strokes rather than pixels. The authors used this observation to build a model that generates images stroke by stroke. The model offers a new approach to vector image generation and to the generalization of abstract concepts.
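To make the contrast between pixels and strokes concrete, here is a minimal sketch of how a drawing can be stored as a sequence of pen movements instead of a pixel grid. It assumes the stroke-5 layout used by sketch-rnn. In that layout each point is an offset (dx, dy) from the previous point plus a one-hot pen state: p1 means the pen stays on the paper and a line is drawn to the next point, p2 means the pen is lifted after this point, and p3 means the drawing has ended. The helper `to_stroke5` and the sample drawing are hypothetical. The sketch also leaves out details such as start tokens and padding to a fixed length.

```python
from typing import List, Tuple

Point = Tuple[int, int]
Stroke5 = Tuple[int, int, int, int, int]


def to_stroke5(strokes: List[List[Point]]) -> List[Stroke5]:
    """Hypothetical helper: convert a drawing given as absolute-coordinate
    polylines into a stroke-5 style list of (dx, dy, p1, p2, p3) tuples.

    Assumes every polyline contains at least one point.
    """
    seq: List[Stroke5] = []
    prev_x, prev_y = 0, 0  # the first offset is measured from the origin
    for stroke in strokes:
        for i, (x, y) in enumerate(stroke):
            dx, dy = x - prev_x, y - prev_y
            prev_x, prev_y = x, y
            last_in_stroke = i == len(stroke) - 1
            # p1 = keep drawing to the next point, p2 = lift the pen after this point
            p1, p2 = (0, 1) if last_in_stroke else (1, 0)
            seq.append((dx, dy, p1, p2, 0))
    # p3 = 1 marks the end of the drawing
    seq.append((0, 0, 0, 0, 1))
    return seq


if __name__ == "__main__":
    # an "L" shape followed by a separate short horizontal line
    drawing = [[(0, 0), (10, 0), (10, 10)], [(20, 5), (25, 5)]]
    for row in to_stroke5(drawing):
        print(row)
```

Storing offsets rather than absolute positions keeps each step small and local. The pen-state bits let a sequence model such as an RNN decide when to lift the pen and when to stop drawing, which a pixel grid cannot express directly.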

Related Work

Methodology

Dataset

Sketch-RNN

Unconditional Generation

Training

Experiments

Conditional Reconstruction

Latent Space Interpolation

Sketch Drawing Analogies

Predicting Different Endings of Incomplete Sketches

Applications and Future Work

Conclusion


Fonts and Examples

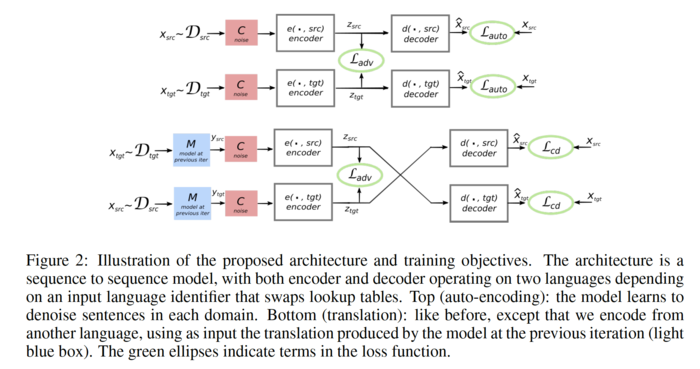

The unsupervised translation scheme has the following outline:

- The word-vector embeddings of the source and target languages are aligned in an unsupervised manner.

- Sentences from the source and target language are mapped to a common latent vector space by an encoder, and then mapped to probability distributions over

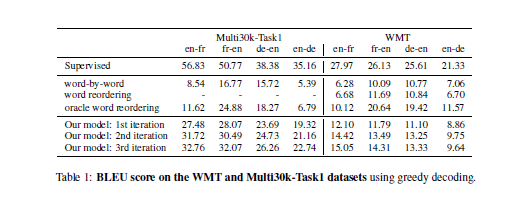

The objective function is the sum of:

- The de-noising auto-encoder loss,

I shall describe these in the following sections.
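A de-noising auto-encoder is trained to reconstruct a sentence from a corrupted copy of it. The text above does not say how sentences are corrupted, so what follows is a minimal sketch under an assumed noise model that is commonly used for this purpose. The model first drops each word with probability `p_drop`. It then shuffles the remaining words locally so that no word moves more than `k` positions. The function `add_noise` and its parameters are hypothetical.

```python
import random
from typing import List, Optional, Sequence


def add_noise(words: Sequence[str], p_drop: float = 0.1, k: int = 3,
              rng: Optional[random.Random] = None) -> List[str]:
    """Hypothetical corruption function for a de-noising auto-encoder.

    Assumed noise model: word dropout followed by a bounded local shuffle.
    """
    rng = rng or random.Random()
    # 1) word dropout: keep each word with probability 1 - p_drop
    kept = [w for w in words if rng.random() >= p_drop]
    if not kept:
        # avoid returning an empty sentence; keep the first word (if any)
        kept = list(words[:1])
    # 2) local shuffle: sort positions perturbed by U(0, k + 1) noise,
    #    which moves every word by at most k slots
    keys = [i + rng.uniform(0, k + 1) for i in range(len(kept))]
    order = sorted(range(len(kept)), key=lambda i: keys[i])
    return [kept[i] for i in order]


if __name__ == "__main__":
    sentence = "the cat sat on the mat".split()
    print(add_noise(sentence, p_drop=0.1, k=3, rng=random.Random(0)))
```

Sorting positions perturbed by uniform noise in [0, k + 1) is a cheap way to draw a random permutation that displaces each word by at most k slots. The corrupted sentence therefore stays close enough to the original that learning to reconstruct it remains feasible.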