A Neural Representation of Sketch Drawings

Revision as of 17:49, 16 November 2018

Introduction

In this paper, the authors present sketch-rnn, a recurrent neural network that constructs stroke-based drawings. Besides new robust training methods, they also outline a framework for conditional and unconditional sketch generation.

Neural networks have been heavily used as image generation tools, for example Generative Adversarial Networks, Variational Inference, and Autoregressive models. Most of these models focus on modelling the pixels of an image. However, people learn to draw with sequences of strokes from a very young age. The authors use this characteristic to build a model that works on the strokes of an image, as a new approach to vector image generation and abstract concept generalization.

The model is trained on hand-drawn sketches as input sequences and produces sketches in vector format. For the conditional generation model, the authors also explore the latent space representation of vector images and discuss a few future applications of the model. The model and dataset are available as an open source project.

Related Work

Some earlier works used a similar approach to generate images, such as Portrait Drawing by Paul the Robot and some reinforcement learning approaches. These work more by mimicking digitized photographs. There are some neural network based approaches too, but they mostly deal with pixel images. Little work has been done on vector image generation. There are models that use Hidden Markov Models or Mixture Density Networks to generate human sketches, continuous data points, or vectorized Kanji characters.

The model also allows us to explore the latent space representation of vector images. Previous works have achieved similar functions, such as combining Sequence-to-Sequence models with a Variational Autoencoder to map sentences into a latent space, and using probabilistic program induction to model the Omniglot dataset.

The dataset the authors use contains 50 million vector sketches. Before this paper, there were the Sketch dataset with 20k vector sketches, the Sketchy dataset with 70k vector sketches along with pixel images, and the ShadowDraw system, which used 30k raster images along with extracted vectorized features. These are all comparatively small.

Methodology

Dataset

QuickDraw is a dataset of 50 million vector drawings collected through the game Quick, Draw!. It contains hundreds of classes, and each class has 70k training samples, 2.5k validation samples, and 2.5k test samples.

Each sample is represented as a sequence of pen stroke actions. The origin is the initial coordinate of the drawing, and the sketch is a list of points. Each point consists of 5 elements $(x, y, p_1, p_2, p_3)$, where $x$ and $y$ are the offsets in the x and y directions from the previous point. $p_1$, $p_2$ and $p_3$ form a binary one-hot vector over three possible pen states: $p_1$ indicates that the pen is touching the paper, $p_2$ indicates that the pen will be lifted after this point, and $p_3$ indicates that the drawing has ended.
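To make the 5-element format concrete, here is a minimal sketch of how a drawing could be converted into it. It assumes the input is a list of strokes, each a list of absolute (x, y) coordinates. That input layout, the function name `to_stroke5`, and the choice to emit a single final end-of-drawing point with zero offset are all assumptions made for illustration, not the authors' preprocessing code.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Stroke5 = Tuple[float, float, int, int, int]

# Hypothetical helper: the name and input layout are illustrative assumptions.
def to_stroke5(strokes: List[List[Point]]) -> List[Stroke5]:
    """Convert strokes given in absolute coordinates to (x, y, p1, p2, p3) points."""
    strokes = [s for s in strokes if s]  # ignore empty strokes
    result: List[Stroke5] = []
    if strokes:
        # The origin is the first point of the drawing, so its offset is (0, 0).
        prev_x, prev_y = strokes[0][0]
        for stroke in strokes:
            for i, (x, y) in enumerate(stroke):
                is_last = i == len(stroke) - 1
                # p1 = pen touching the paper, p2 = pen lifted after this point.
                pen = (0, 1, 0) if is_last else (1, 0, 0)
                result.append((x - prev_x, y - prev_y, *pen))
                prev_x, prev_y = x, y
    # p3 = 1 marks the end of the drawing (assumed here to carry zero offset).
    result.append((0.0, 0.0, 0, 0, 1))
    return result


if __name__ == "__main__":
    drawing = [[(0, 0), (3, 4)], [(3, 0), (5, 0)]]
    for point in to_stroke5(drawing):
        print(point)
```

Storing offsets rather than absolute positions means every point after the origin is expressed relative to its predecessor, and the one-hot pen state guarantees that exactly one of $p_1$, $p_2$, $p_3$ is set at each step.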

Sketch-RNN

Unconditional Generation

Training

Experiments

Conditional Reconstruction

Latent Space Interpolation

Sketch Drawing Analogies

Predicting Different Endings of Incomplete Sketches

Applications and Future Work

Conclusion

References

- Bahdanau, Dzmitry, Kyunghyun Cho, and Yoshua Bengio. "Neural machine translation by jointly learning to align and translate." arXiv preprint arXiv:1409.0473 (2014).

fonts and examples

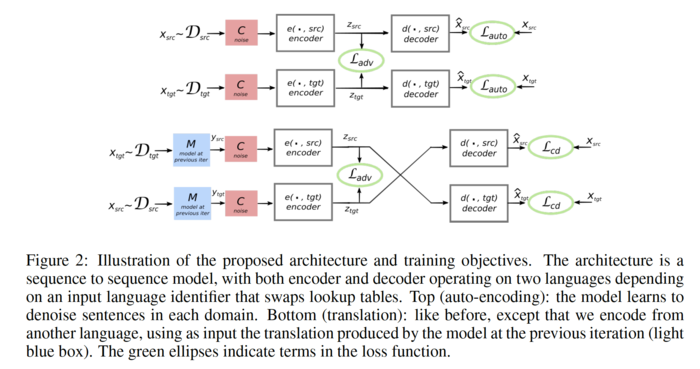

The unsupervised translation scheme has the following outline:

- The word-vector embeddings of the source and target languages are aligned in an unsupervised manner.

- Sentences from the source and target language are mapped to a common latent vector space by an encoder, and then mapped to probability distributions over

The objective function is the sum of:

- The de-noising auto-encoder loss,

I shall describe these in the following sections.