A Neural Representation of Sketch Drawings

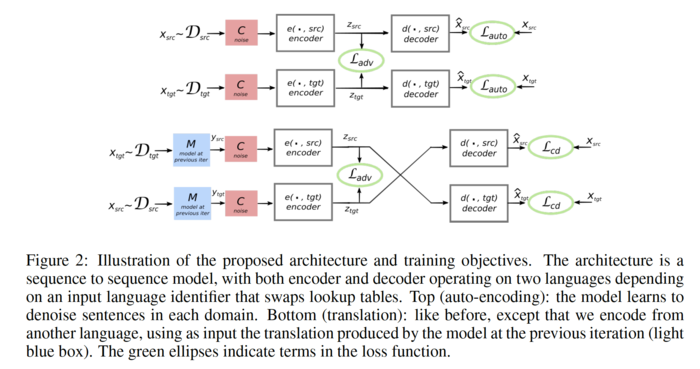


[[File:paper4_fig2.png|700px]]

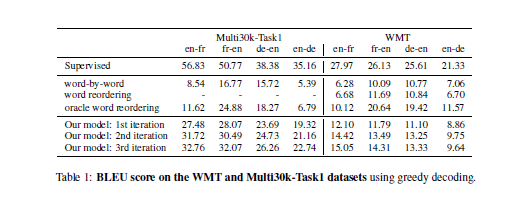

[[File:MC_Translation Results.png]]

[[File:MC_Alignment_Results.png|frame|none|alt=Alt text|From Conneau et al. (2017). The final row shows the performance of the alignment method used in the present paper. Note the degradation in performance for more distant languages.]]

[[File:MC_Translation_Ablation.png|frame|none|alt=Alt text|From the present paper. Results of an ablation study. Of note are the first, third, and fourth rows, which demonstrate that while the translation component of the loss is relatively unimportant, the word-vector alignment scheme and the de-noising auto-encoder matter a great deal.]]

Revision as of 17:16, 15 November 2018

Introduction


- To provide a strong lower bound on the performance that any semi-supervised machine translation system is expected to yield

Related Work

The authors offer two motivations for their work:

- To translate between languages for which large parallel corpora do not exist

Methodology

Dataset

Sketch-RNN

Unconditional Generation

Training

Experiments

Conditional Reconstruction

Latent Space Interpolation

Sketch Drawing Analogies

Predicting Different Endings of Incomplete Sketches

Applications and Future Work

Conclusion


fonts and examples

The unsupervised translation scheme has the following outline:

- The word-vector embeddings of the source and target languages are aligned in an unsupervised manner.

- Sentences from the source and target languages are mapped to a common latent vector space by an encoder, and then mapped to probability distributions over sentences in the source or target language by a decoder.
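The alignment in the first step can be illustrated with a toy orthogonal Procrustes solve, which finds the rotation that best maps source vectors onto their target counterparts. This is a minimal sketch under strong assumptions: the embeddings are 2-dimensional, the map is restricted to a rotation, and a few anchor word pairs are already known. In the fully unsupervised setting those anchors are not given; Conneau et al. (2017) first learn a mapping adversarially and then refine it with Procrustes, so only that refinement step is mirrored here. The function names and all words and vectors below are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vec = Tuple[float, float]


def procrustes_rotation(src: List[Vec], tgt: List[Vec]) -> float:
    """Angle of the 2-D rotation R minimising sum ||R x_i - y_i||^2 over anchor pairs."""
    # Expanding the squared error leaves -2 * sum(y_i . R x_i) as the only
    # theta-dependent term, which equals cos(theta) * dot + sin(theta) * cross.
    dot = sum(x[0] * y[0] + x[1] * y[1] for x, y in zip(src, tgt))
    cross = sum(x[0] * y[1] - x[1] * y[0] for x, y in zip(src, tgt))
    return math.atan2(cross, dot)


def rotate(v: Vec, theta: float) -> Vec:
    # Apply the rotation matrix [[cos, -sin], [sin, cos]] to v.
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])


def cosine(a: Vec, b: Vec) -> float:
    return (a[0] * b[0] + a[1] * b[1]) / (math.hypot(*a) * math.hypot(*b))


def nearest(v: Vec, vocab: Dict[str, Vec]) -> str:
    # Nearest neighbour by cosine similarity in the target space.
    return max(vocab, key=lambda w: cosine(v, vocab[w]))


if __name__ == "__main__":
    # Hypothetical toy embeddings: the English space is the French one rotated 90 degrees.
    fr = {"chat": (1.0, 0.0), "chien": (0.0, 1.0), "maison": (0.7, 0.7)}
    en = {"cat": (0.0, 1.0), "dog": (-1.0, 0.0), "house": (-0.7, 0.7)}
    anchors = [("chat", "cat"), ("chien", "dog")]  # assumed seed dictionary
    theta = procrustes_rotation([fr[a] for a, _ in anchors], [en[b] for _, b in anchors])
    print(round(theta, 4))                           # 1.5708
    print(nearest(rotate(fr["maison"], theta), en))  # house
```

Once the spaces are aligned, the nearest target neighbour of a mapped source vector acts as a word-by-word translation, as the `maison` lookup shows. Real embeddings have hundreds of dimensions, where the same orthogonal Procrustes problem is solved with a singular value decomposition rather than a single angle.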

The objective function is the sum of:

- The de-noising auto-encoder loss,

I shall describe these in the following sections.
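The de-noising auto-encoder loss trains the model to reconstruct a sentence from a corrupted copy of itself, so it depends on a corruption function. This page does not specify one; the sketch below assumes word dropout followed by a local shuffle in which no word moves more than `k` positions. The name `add_noise` and the defaults `p_drop=0.1` and `k=3` are illustrative assumptions.

```python
import random
from typing import List, Optional


def add_noise(tokens: List[str], p_drop: float = 0.1, k: int = 3,
              rng: Optional[random.Random] = None) -> List[str]:
    """Corrupt a tokenised sentence for a de-noising auto-encoder (hypothetical helper)."""
    if rng is None:
        rng = random.Random()
    # Word dropout: keep each token independently with probability 1 - p_drop.
    kept = [t for t in tokens if rng.random() >= p_drop]
    # Local shuffle: perturb each position by U(0, k + 1) and sort on the
    # perturbed keys; two tokens can only swap if they start at most k apart.
    keys = [i + rng.uniform(0, k + 1) for i in range(len(kept))]
    order = sorted(range(len(kept)), key=lambda i: keys[i])
    return [kept[i] for i in order]


if __name__ == "__main__":
    rng = random.Random(0)
    sentence = "the cat sat on the mat".split()
    noisy = add_noise(sentence, rng=rng)
    # Training would ask the decoder to recover `sentence` from `noisy`.
    print(noisy)
```

Sorting on `i + U(0, k + 1)` is what bounds the displacement: two tokens can change relative order only if their original positions differ by at most `k`, so a small `k` scrambles word order locally without destroying the sentence.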