a Rank Minimization Heuristic with Application to Minimum Order System Approximation: Difference between revisions

| Line 31: | Line 31: | ||

According to the definition of the nuclear norm we have <math>\|X\|_*=\sum_{i=1}^{\min\{m,n\} }\sigma_i(X)</math> where <math> \sigma_i(X) = \sqrt{\lambda_i (X^TX)}</math>. | According to the definition of the nuclear norm we have <math>\|X\|_*=\sum_{i=1}^{\min\{m,n\} }\sigma_i(X)</math> where <math> \sigma_i(X) = \sqrt{\lambda_i (X^TX)}</math>. | ||

The nuclear norm is dual of the spectrial norm | |||

<math>\|X\|_* =\sup \{ \mbox{Tr } Y^T X | \|Y\| \leq 1 \}</math>. So the relaxed version of the rank minimization problem is a convex optimization problem. | |||

When the matrix variable <math>X</math> is symmetric and positive semidefinite, then its singular values are the same as its eigenvalues, and therefore the nuclear norm reduces to <math>\mbox{Tr } X</math>, and that means the heuristic reduces to the trace minimization heuristic. | When the matrix variable <math>X</math> is symmetric and positive semidefinite, then its singular values are the same as its eigenvalues, and therefore the nuclear norm reduces to <math>\mbox{Tr } X</math>, and that means the heuristic reduces to the trace minimization heuristic. | ||

Revision as of 23:41, 23 November 2010

Rank Minimization Problem (RMP) has application in a variety of areas such as control, system identification, statistics and signal processing. Except in some special cases RMP is known to be computationaly hard. [math]\displaystyle{ \begin{array}{ l l } \mbox{minimize} & \mbox{Rank } X \\ \mbox{subject to: } & X \in C \end{array} }[/math]

If the matrix is symmetric and positive semidifinite, trace minimization is a very effective heuristic for rank minimization problem. The trace minimization results in a semidefinite problem which can be easily solved. [math]\displaystyle{ \begin{array}{ l l } \mbox{minimize} & \mbox{Tr } X \\ \mbox{subject to: } & X \in C \end{array} }[/math]

This paper focuses on the following problems:

- Describing a generalization of the trace heuristic for genaral non-square matrices.

- Showing that the new heuristic can be reduced to an SDP, and hence effictively solved.

- Applying the mothod on the minimum order system approximation.

A Generalization Of The Trace Heuristic

This heurisitic minimizes the sum of the singular values of the matrix [math]\displaystyle{ X\in \real^{m\times n} }[/math], which is the nuclear norm of [math]\displaystyle{ X }[/math] denoted by [math]\displaystyle{ \|X\|_* }[/math].

[math]\displaystyle{ \begin{array}{ l l } \mbox{minimize} & \|X\|_* \\ \mbox{subject to: } & X \in C \end{array} }[/math]

According to the definition of the nuclear norm we have [math]\displaystyle{ \|X\|_*=\sum_{i=1}^{\min\{m,n\} }\sigma_i(X) }[/math] where [math]\displaystyle{ \sigma_i(X) = \sqrt{\lambda_i (X^TX)} }[/math].

The nuclear norm is dual of the spectrial norm [math]\displaystyle{ \|X\|_* =\sup \{ \mbox{Tr } Y^T X | \|Y\| \leq 1 \} }[/math]. So the relaxed version of the rank minimization problem is a convex optimization problem.

When the matrix variable [math]\displaystyle{ X }[/math] is symmetric and positive semidefinite, then its singular values are the same as its eigenvalues, and therefore the nuclear norm reduces to [math]\displaystyle{ \mbox{Tr } X }[/math], and that means the heuristic reduces to the trace minimization heuristic.

Nuclear Norm Minimization vs. Rank Minimization

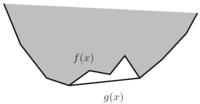

Definition: Let [math]\displaystyle{ f:C \rightarrow\real }[/math] where [math]\displaystyle{ C\subseteq \real^n }[/math]. The convex envelope of [math]\displaystyle{ f }[/math] (on [math]\displaystyle{ C }[/math]) is defined as the largest convex function [math]\displaystyle{ g }[/math] such that [math]\displaystyle{ g(x)\leq f(x) }[/math] for all [math]\displaystyle{ x\in X }[/math].

Theorem 1 The convex envelope of the function [math]\displaystyle{ \phi(X)=\mbox{Rank }(X) }[/math], on [math]\displaystyle{ C=\{X\in \real^{m\times n} | \|X\|\leq 1\} }[/math] is [math]\displaystyle{ \phi_{\mbox{env}}(X) = \|X\|_* }[/math].

Suppose [math]\displaystyle{ X\in C }[/math] is bounded by [math]\displaystyle{ M }[/math] that is [math]\displaystyle{ \|X\|\leq M }[/math], then the convex envelope of [math]\displaystyle{ \mbox{Rank }X }[/math] on [math]\displaystyle{ \{X | \|X\|\leq M\} }[/math] is given by [math]\displaystyle{ \frac{1}{M}\|X\|_* }[/math].

[math]\displaystyle{ \mbox{Rank } X \geq \frac{1}{M} \|X\|_* }[/math] That means if [math]\displaystyle{ p_{\mbox{rank}} }[/math] and [math]\displaystyle{ p_{*} }[/math] are the optimal values of the rank minimization problem and dual spectrial norm minimization problem then we have [math]\displaystyle{ p_{\mbox{rank}}\geq \frac{1}{M} p_{*} }[/math]

References

<references />