Wasserstein Auto-encoders: Difference between revisions


The first version of this work was published in 2017, and this version (the third revision) was presented at ICLR 2018. Source code for the first version is available [https://github.com/tolstikhin/wae here].

=Introduction=

Early successes in the field of representation learning were based on supervised approaches, which used large labeled datasets to achieve impressive results. On the other hand, popular unsupervised generative modeling methods mainly consisted of probabilistic approaches focusing on low dimensional data. In recent years, models have been proposed which try to combine these two approaches. One such popular method is the variational auto-encoder (VAE). VAEs are theoretically elegant but have the major drawback of generating blurry samples when used for modeling natural images. In comparison, generative adversarial networks (GANs) produce much sharper samples but have their own list of problems, which include the lack of an encoder, being harder to train, and the "mode collapse" problem. Mode collapse refers to the inability of the model to capture all the variability in the true data distribution. Currently, there is a lot of activity around finding and evaluating numerous GAN architectures and combining VAEs and GANs, but a model which combines the best of both GANs and VAEs is yet to be discovered.

The work in this paper builds upon the theoretical work of Bousquet et al. (2017) [4]. The authors tackle generative modeling using optimal transport (OT). The OT cost is a measure of distance between probability distributions.

To be more specific on the OT:

Given a cost function <math>c : X \times Y \to \mathbb{R}</math> and probability measures <math>\mu</math> on <math>X</math> and <math>\nu</math> on <math>Y</math>, they seek a minimizer of <math> C(\mu, \nu) := \underset{\pi \in \Pi(\mu, \nu)}{\inf} \int_{X \times Y}{c(x, y)d\pi(x, y)}</math>, where <math>\Pi(\mu, \nu)</math> is the set of joint measures on <math>X \times Y</math> with marginals <math>\mu</math> and <math>\nu</math>.

The measures <math>\pi \in \Pi(\mu, \nu)</math> are called transport plans or transference plans. The measures <math>\pi \in \Pi(\mu, \nu)</math> achieving the infimum are called optimal transport plans. The classical interpretation of this problem is that of minimizing the total cost <math>C(\mu, \nu)</math> of transporting the mass distribution <math>\mu</math> to the mass distribution <math>\nu</math>, where the cost of transporting one unit of mass from the point <math>x \in X</math> to the point <math>y \in Y</math> is given by the cost function <math>c(x, y)</math>.

One beneficial feature of the OT cost is that it induces a much weaker topology than other costs, including the f-divergences associated with the original GAN algorithms.

This feature is crucial in applications where the data is usually supported on low dimensional manifolds in the input space. The problem with stronger notions of distance such as f-divergences is that they often max out and provide no useful gradients for training. In comparison, the OT cost has been claimed to behave much more nicely [5, 8]. Despite this claim, the implementation, which is similar to GANs, still requires the addition of a constraint or a regularization term to the objective function.

==Original Contributions==

The main contributions are given below:

* A new class of auto-encoders called Wasserstein Auto-Encoders (WAE). WAEs minimize the optimal transport cost <math>W_c(P_X, P_G)</math> for any cost function <math>c</math>. As is the case with VAEs, the WAE objective function is made up of two terms: the c-reconstruction cost and a regularizer term <math>\mathcal{D}_Z(P_Z, Q_Z)</math> which penalizes the discrepancy between two distributions in <math>\mathcal{Z}: P_Z \text{ and } Q_Z</math>. <math>Q_Z</math> is the distribution of encoded points, i.e. <math>Q_Z := \mathbb{E}_{P_X}[Q(Z|X)]</math>. Note that when <math>c</math> is the squared cost and the regularizer term is the GAN objective, WAE is equivalent to the adversarial auto-encoders described in [2].

* Experimental results of using WAE on the MNIST and CelebA datasets with squared cost <math>c(x, y) = ||x - y||_2^2</math>. The results of these experiments show that WAEs have the good features of VAEs, such as stable training, an encoder-decoder architecture, and a nice latent manifold structure, while simultaneously improving the quality of the generated samples.

* Two different regularizers. One is based on GANs and adversarial training in the latent space <math>\mathcal{Z}</math>. The other is based on "Maximum Mean Discrepancy", which is known to perform well when matching high dimensional standard normal distributions. This second regularizer also makes training a fully adversary-free min-min optimization problem and removes the need to tune a GAN. During GAN training, mode collapse can often occur, the model is sensitive to hyper-parameters, and the loss is hard to interpret since it fluctuates during training. WAE avoids such problems and is much more developer-friendly. Most importantly, the loss in WAE is interpretable, making it easier to decide when to terminate the training.

* The final contribution is the mathematical analysis used to derive the WAE objective function. In particular, the analysis shows that in the case of generative models, the primal form of <math>W_c(P_X, P_G)</math> is equivalent to a problem which deals with the optimization of a probabilistic encoder <math>Q(Z|X)</math>.

The paper provides an ostensibly simple recipe for implementing a non-blurry, generative auto-encoder in the style of a VAE. It offers what looks like an elegant and logical way to use the Wasserstein distance to set up the VAE/GAN problem.

The paper gives three instructive comparisons with related VAE-GAN models, unifying them thematically: Adversarial Autoencoders (AAE), Adversarial Variational Bayes (AVB), and the original Variational Autoencoders (VAE). These generalizations arise in the case of random decoders (the paper introduces the idea with deterministic decoders and then extends it to random decoders), with a play on the regularizer of the VAE, which these papers replace with a GAN.

=Proposed Method=

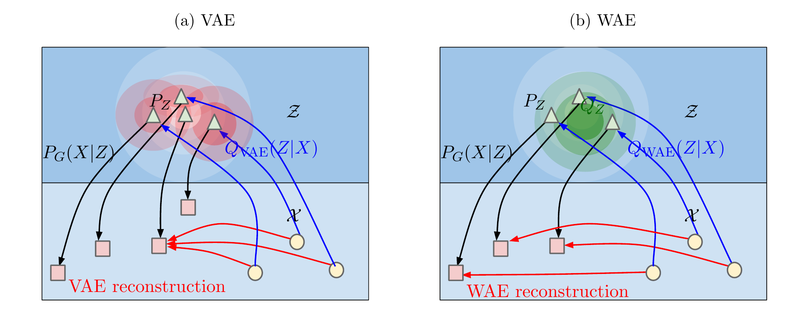

The method proposed by the authors uses a novel auto-encoder architecture to minimize the optimal transport cost <math>W_c(P_X, P_G)</math>. In the optimization problem that follows, the decoder tries to accurately reconstruct the data points as measured by the cost function <math>c</math>. The encoder tries to achieve the following two conflicting goals at the same time: (1) match the distribution of the encoded data points <math>Q_Z := \mathbb{E}_{P_X}[Q(Z|X)]</math> to the prior distribution <math>P_Z</math> as measured by the divergence <math>\mathcal{D}_Z(P_Z, Q_Z)</math>, and (2) make sure that the encoded latent vectors contain enough information for the reconstructions of the data points to be of high quality. The figure below illustrates this:

==Preliminaries and Notations==

The authors use calligraphic letters to denote sets (for example, <math>\mathcal{X}</math>), capital letters for random variables (for example, <math>X</math>), and lower case letters for their values (for example, <math>x</math>). Probability distributions are also denoted with capital letters (for example, <math>P(X)</math>) and the corresponding densities with lowercase letters (for example, <math>p(x)</math>).

A rich class of distance measures between probability distributions is motivated by the optimal transport problem. One formulation of the optimal transport problem is Kantorovich's formulation, given by:

<center><math>
W_c(P_X, P_G) := \underset{\Gamma \in \mathcal{P}(X \sim P_X ,Y \sim P_G)}{\inf} \mathbb{E}_{(X,Y) \sim \Gamma}[c(X,Y)],
</math></center>

where <math>c(x, y): \mathcal{X} \times \mathcal{X} \to \mathcal{R}_{+}</math> is any measurable cost function, and <math>\mathcal{P}(X \sim P_X, Y \sim P_G)</math> is the set of all joint distributions of <math>(X, Y)</math> with marginals <math>P_X\ \text{and}\ P_G</math> respectively.

A particularly interesting case is when <math>(\mathcal{X}, d)</math> is a metric space and <math>c(x, y) = d^p(x, y)\ \text{for}\ p \geq 1</math>. In this case <math>W_p</math>, the <math>p</math>-th root of <math>W_c</math>, is called the p-Wasserstein distance.
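To make the definition concrete, here is a minimal sketch for the special case of two equal-size empirical distributions on the real line with <math>d(x, y) = |x - y|</math>. In that case the optimal coupling is known to pair the sorted samples, so no optimization is needed. The function name <code>wasserstein_1d</code> is only illustrative and does not come from the paper.

```python
def wasserstein_1d(xs, ys, p=1):
    """p-Wasserstein distance between two equal-size 1-D empirical distributions.

    Assumes the cost c(x, y) = |x - y|**p with p >= 1. On the real line the
    optimal coupling then pairs the i-th smallest x with the i-th smallest y.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("samples must be non-empty and of equal size")
    if p < 1:
        raise ValueError("p must be >= 1")
    pairs = zip(sorted(xs), sorted(ys))
    w_c = sum(abs(x - y) ** p for x, y in pairs) / len(xs)  # OT cost W_c
    return w_c ** (1.0 / p)  # the p-th root of W_c is W_p


# Every unit of mass has to travel a distance of 2, so both calls give 2.0.
print(wasserstein_1d([0.0, 1.0, 3.0], [2.0, 3.0, 5.0], p=1))
print(wasserstein_1d([0.0, 1.0, 3.0], [5.0, 2.0, 3.0], p=2))
```

In higher dimensions there is no such sorting shortcut, which is part of why the paper works with a reformulated objective instead of solving the coupling problem directly.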

The intuition behind modern generative models like VAEs and GANs is that they try to minimize specific distance measures between the data distribution <math>P_X</math> and the model <math>P_G</math>. Unfortunately, with current knowledge and tools, it is usually very hard or even impossible to calculate most of the standard discrepancy measures, especially when <math>P_X</math> is not known and <math>P_G</math> is parametrized by deep neural networks. Having said that, certain tricks can be employed to get around this difficulty.

For KL-divergence <math>D_{KL}(P_X, P_G)</math> minimization, or equivalently marginal log-likelihood <math>\mathbb{E}_{P_X}[\log p_G(X)]</math> maximization, one can use the famous variational lower bound, which provides a theoretically grounded framework. This has been used quite successfully by VAEs. In the general case of minimizing an f-divergence <math>D_f(P_X, P_G)</math>, using its dual formulation along with f-GANs and adversarial training is viable. Finally, the OT cost <math>W_c(P_X, P_G)</math> can be minimized by using the Kantorovich-Rubinstein duality expressed as an adversarial objective. The Wasserstein-GAN implements this idea.

In this paper, the authors focus on latent variable models <math>P_G</math> defined by a two-step procedure. First, a code <math>Z</math> is sampled from a fixed distribution <math>P_Z</math> on a latent space <math>\mathcal{Z}</math>. Second, <math>Z</math> is mapped to the image <math>X \in \mathcal{X} = \mathcal{R}^d</math> with a (possibly random) transformation. This gives a density of the form

<center><math>
p_G(x) := \int\limits_{\mathcal{Z}}{p_G(x|z)p_z(z)}dz,\ \forall x \in \mathcal{X},
</math></center>

provided all the probabilities involved are properly defined. To keep things simple, the authors focus on non-random decoders, i.e., the generative models <math>P_G(X|Z)</math> deterministically map <math>Z</math> to <math>X = G(Z)</math> using a fixed map <math>G: \mathcal{Z} \to \mathcal{X}</math>. Similar results hold for random decoders, as shown by the authors in Appendix B.1.
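The two-step procedure with a deterministic decoder can be sketched as follows. This is only an illustration: the standard Gaussian prior, the two-dimensional latent space, and the fixed affine map used as <code>decoder</code> are assumptions made for the example, whereas the paper parameterizes <math>G</math> with a neural network.

```python
import random


def sample_prior(dim, rng):
    """Draw a code z from the prior P_Z (assumed here: standard Gaussian)."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def decoder(z):
    """Hypothetical deterministic map G: Z -> X, a fixed affine map from R^2 to R^3."""
    weights = [[1.0, 0.5], [-0.5, 2.0], [0.0, 1.0]]
    bias = [0.1, -0.2, 0.3]
    return [sum(w * zi for w, zi in zip(row, z)) + b
            for row, b in zip(weights, bias)]


def sample_model(n, rng, latent_dim=2):
    """Two-step sampling from P_G: first z ~ P_Z, then x = G(z)."""
    return [decoder(sample_prior(latent_dim, rng)) for _ in range(n)]


rng = random.Random(0)  # seeded only to make the example reproducible
samples = sample_model(5, rng)
print(len(samples), len(samples[0]))
```

All the randomness of the model lives in the prior draw; once <code>z</code> is fixed, <code>decoder(z)</code> always returns the same point.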

For deterministic decoders, the main theoretical result of the paper (Theorem 1) rewrites the OT cost as an optimization over encoders:

<center><math>
W_c(P_X, P_G) = \underset{Q:\ Q_Z = P_Z}{\inf} \mathbb{E}_{P_X} \mathbb{E}_{Q(Z|X)}[c(X, G(Z))],
</math></center>

where <math>Q_Z</math> is the marginal distribution of <math>Z</math> when <math>X \sim P_X</math> and <math>Z \sim Q(Z|X)</math>.

According to the authors, the result above allows optimization over random encoders <math>Q(Z|X)</math> instead of optimizing over all couplings of <math>X</math> and <math>Y</math>. Both problems are still constrained. To find a numerical solution, the authors relax the constraint on <math>Q_Z</math> by adding a regularizer term to the objective. This gives them the WAE objective:

<math>
D_{WAE}(P_X, P_G) := \underset{Q(Z|X) \in \mathcal{Q}}{\inf} \mathbb{E}_{P_X} \mathbb{E}_{Q(Z|X)}[c(X, G(Z))] + \lambda \cdot \mathcal{D}_Z(Q_Z, P_Z),
</math>

where <math>\mathcal{Q}</math> is any nonparametric set of probabilistic encoders, <math>\mathcal{D}_Z</math> is an arbitrary measure of distance between <math>Q_Z</math> and <math>P_Z</math>, and <math>\lambda > 0</math> is a hyperparameter. As is the case with VAEs, the authors propose using deep neural networks to parameterize both the encoders <math>Q</math> and the decoders <math>G</math>. Note that, unlike VAEs, WAE allows for non-random encoders that deterministically map inputs to their latent codes.
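A mini-batch estimate of an objective with this shape, a reconstruction cost plus <math>\lambda</math> times a latent penalty, can be sketched as below for a deterministic encoder and the squared cost. The helper <code>mean_gap_penalty</code> is a deliberately crude, hypothetical stand-in for <math>\mathcal{D}_Z</math> (it only compares sample means) and is not one of the paper's regularizers; the toy <code>encode</code> and <code>decode</code> functions are likewise assumptions.

```python
def squared_cost(x, y):
    """Squared Euclidean cost c(x, y) = ||x - y||_2^2."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def mean_gap_penalty(codes, prior_codes):
    """Hypothetical stand-in for D_Z: squared distance between the sample means."""
    gap = 0.0
    for d in range(len(codes[0])):
        mean_q = sum(z[d] for z in codes) / len(codes)
        mean_p = sum(z[d] for z in prior_codes) / len(prior_codes)
        gap += (mean_q - mean_p) ** 2
    return gap


def wae_objective(batch, encode, decode, prior_codes, lam, penalty=mean_gap_penalty):
    """Estimate E[c(X, G(Z))] + lam * D_Z(Q_Z, P_Z) on one mini-batch."""
    codes = [encode(x) for x in batch]  # deterministic encoder: z = encode(x)
    recon = sum(squared_cost(x, decode(z)) for x, z in zip(batch, codes)) / len(batch)
    return recon + lam * penalty(codes, prior_codes)


# Toy encoder keeps the first coordinate; toy decoder pads it with a zero.
batch = [[1.0, 2.0], [3.0, 4.0]]
prior_codes = [[0.0], [0.0]]
value = wae_objective(batch, lambda x: [x[0]], lambda z: [z[0], 0.0],
                      prior_codes, lam=10.0)
print(value)  # 50.0 = reconstruction 10.0 + 10.0 * penalty 4.0
```

Swapping <code>penalty</code> for a GAN-based or MMD-based estimate gives the two variants discussed in the following sections.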

The authors propose two different regularizers <math>\mathcal{D}_Z(Q_Z, P_Z)</math>:

===GAN-based <math>\mathcal{D}_Z</math>===

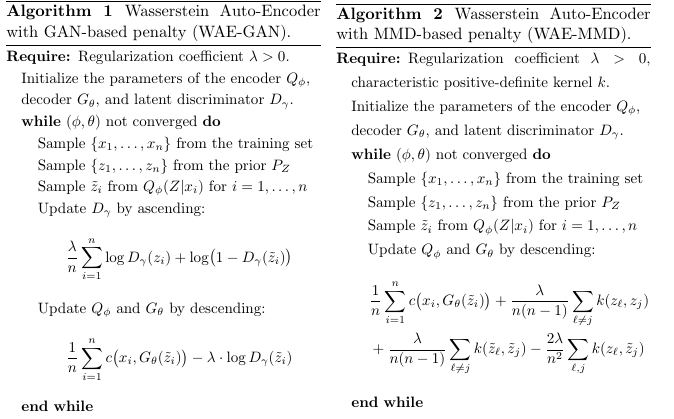

One option is to use the Jensen-Shannon divergence, <math>\mathcal{D}_Z(Q_Z, P_Z) = \mathcal{D}_{JS}(Q_Z, P_Z)</math>, along with adversarial training for estimation. In particular, a discriminator (adversary) is used in the latent space <math>\mathcal{Z}</math> to classify "true" points sampled from <math>P_Z</math> and "fake" ones sampled from <math>Q_Z</math>. This leads to the WAE-GAN as described in Algorithm 1 listed below. Even though WAE-GAN still uses max-min optimization, one positive feature is that it moves the adversary from the input (pixel) space <math>\mathcal{X}</math> to the latent space <math>\mathcal{Z}</math>. Additionally, the true latent space distribution <math>P_Z</math> might have a nice shape with a single mode (for a Gaussian prior), making the task of matching much easier than matching an unknown, complex, and possibly multi-modal distribution, as is usually the case in GANs. This motivates the second penalty.

===MMD-based <math>\mathcal{D}_Z</math>===

For a positive-definite reproducing kernel <math>k: \mathcal{Z} \times \mathcal{Z} \to \mathcal{R}</math>, the maximum mean discrepancy (MMD) is defined as:

<center><math>
MMD_k(P_Z, Q_Z) = \left \Vert \int \limits_{\mathcal{Z}} {k(z, \cdot)dP_Z(z)} - \int \limits_{\mathcal{Z}} {k(z, \cdot)dQ_Z(z)} \right \Vert_{\mathcal{H}_k},
</math></center>

where <math>\mathcal{H}_k</math> is the RKHS (reproducing kernel Hilbert space) of real-valued functions mapping <math>\mathcal{Z}</math> to <math>\mathcal{R}</math>. If <math>k</math> is characteristic, then <math>MMD_k</math> defines a metric and can be used as a distance measure. The authors propose to use <math>\mathcal{D}_Z(P_Z, Q_Z) = MMD_k(P_Z, Q_Z)</math>. MMD also has an unbiased U-statistic estimator which can be used along with stochastic gradient descent (SGD) methods. This gives WAE-MMD as described in Algorithm 2 listed below. Note that MMD is known to perform well when matching high dimensional standard normal distributions, so it is expected that this penalty will work well when the prior <math>P_Z</math> is Gaussian.
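The unbiased U-statistic estimator mentioned above can be sketched in a few lines. It estimates the squared MMD from one sample of each distribution by averaging the kernel over distinct within-sample pairs and over all cross pairs. The Gaussian RBF kernel and its bandwidth are assumptions chosen for illustration (the RBF kernel is characteristic); the text above does not say which kernel the authors used.

```python
import math


def rbf_kernel(a, b, bandwidth=1.0):
    """Gaussian RBF kernel (an assumed choice of characteristic kernel)."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd2_unbiased(p_samples, q_samples, kernel=rbf_kernel):
    """Unbiased U-statistic estimate of MMD_k(P, Q)**2 from two samples."""
    n, m = len(p_samples), len(q_samples)
    if n < 2 or m < 2:
        raise ValueError("need at least two samples from each distribution")
    # Within-sample terms exclude i == j, which is what removes the bias.
    within_p = sum(kernel(p_samples[i], p_samples[j])
                   for i in range(n) for j in range(n) if i != j) / (n * (n - 1))
    within_q = sum(kernel(q_samples[i], q_samples[j])
                   for i in range(m) for j in range(m) if i != j) / (m * (m - 1))
    cross = sum(kernel(a, b) for a in p_samples for b in q_samples) / (n * m)
    return within_p + within_q - 2.0 * cross


near = mmd2_unbiased([[0.0], [1.0]], [[0.0], [1.0]])
far = mmd2_unbiased([[0.0], [0.1]], [[10.0], [10.1]])
print(near, far)
```

Because the estimate is unbiased rather than non-negative, it can come out slightly below zero when the two samples are very similar, as in the <code>near</code> case; well-separated samples, as in the <code>far</code> case, give a clearly positive value.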

[[File:ka2khan_figure_2.png|800px|thumb|center|Algorithms: WAE-GAN on the left and WAE-MMD on the right]]

=Related Work=

==Literature on auto-encoders==

Classical unregularized auto-encoders have an objective function which only tries to minimize the reconstruction cost. This results in distinct data points being encoded into distinct zones distributed chaotically across the latent space <math>\mathcal{Z}</math>. The latent space <math>\mathcal{Z}</math> in this scenario contains huge "holes" for which the decoder <math>P_G(X|Z)</math> has never been trained. In general, encoders trained this way do not provide very useful representations, and sampling from the latent space <math>\mathcal{Z}</math> becomes a difficult task [12].

VAEs [1] minimize a variational upper bound on the KL-divergence <math>D_{KL}(P_X, P_G)</math>, which consists of the reconstruction cost and the regularizer <math>\mathbb{E}_{P_X}[D_{KL}(Q(Z|X), P_Z)]</math>. The regularizer penalizes the difference between the encoded training images and the prior <math>P_Z</math>. However, unlike the WAE penalty, it does not guarantee that the overall encoded distribution matches the prior. In addition, VAEs require non-degenerate (i.e. non-deterministic) Gaussian encoders along with random decoders. A later paper [11] proposed a method which allows the use of non-Gaussian encoders with VAEs. WAE, by contrast, minimizes <math>W_{c}(P_X, P_G)</math> and allows both probabilistic and deterministic encoder-decoder pairs.

When its parameters are chosen appropriately, WAE generalizes AAE in two ways: it can use any cost function in the input space, and it can use any discrepancy measure <math>\mathcal{D}_Z</math> in the latent space <math>\mathcal{Z}</math>, not only the adversarial one.

There has also been work on regularized auto-encoders called InfoVAE [14], which has an objective similar to [4] but uses different motivations and arguments.

WAEs explicitly define the cost function <math>c(x,y)</math>, whereas VAEs rely on one implicitly through a negative log-likelihood term. In theory this term can induce any arbitrary cost function, but in practice it can require estimating a normalizing constant that may differ for different values of <math>z</math>.

==Literature on Optimal Transport (OT)==

[15] provides methods for computing the OT cost for large-scale data using SGD and sampling. The WGAN [5] proposes a generative model which minimizes the 1-Wasserstein distance <math>W_1(P_X, P_G)</math>. The WGAN algorithm does not provide an encoder and cannot be easily applied to an arbitrary cost <math>W_c</math>. The model proposed in [5] uses the dual form, whereas the model proposed in this paper uses the primal form. The primal form allows the use of any cost function <math>c</math> and naturally comes with an encoder.

In order to compute <math>W_c(P_X, P_G)</math> or <math>W_1(P_X, P_G)</math>, the model needs to handle various non-trivial constraints. Various methods have been proposed in the literature ([5], [2], [8], [16], [15], [17], [18]) to avoid this difficulty.

==Literature on GANs==

Many of the GAN variations proposed in the literature come without an encoder. Examples include WGAN and f-GAN. These models are deficient in cases where a reconstruction of the latent space is needed to use the learned manifold.

Numerous models proposed in the literature try to combine the adversarial training of GANs with auto-encoder architectures. Some examples are [19], [20], [21], and [22]. There has also been work in which reproducing kernels are used in the context of GANs ([23], [24]).

=Experiments=

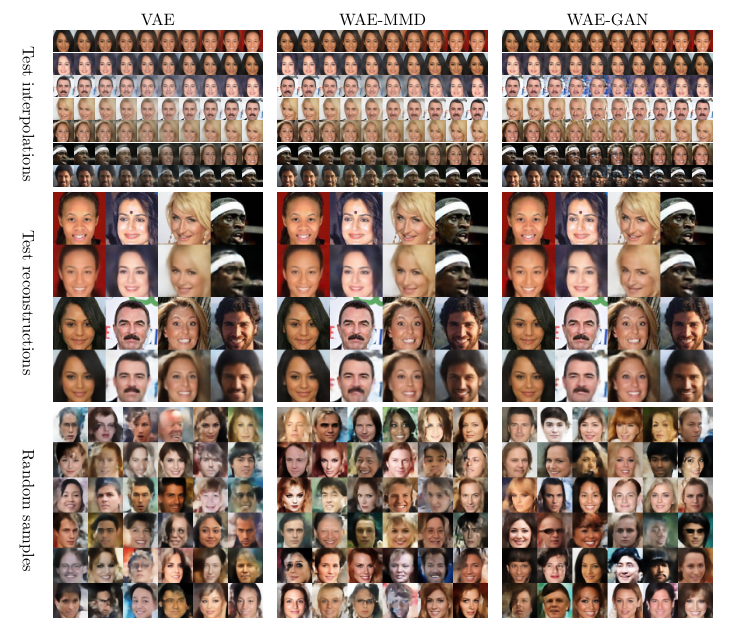

Experiments were used to empirically evaluate the proposed WAE model.

'''Experimental setup'''

For the experimental setup, the authors used a Gaussian prior <math>P_Z</math> and the squared cost function <math>c(x,y) = ||x - y||_2^2</math> for data points.

Deterministic encoder-decoder pairs were used. The authors conducted experiments using the following two real-world datasets: (1) MNIST [27], made up of 70k images, and (2) CelebA [28], consisting of approximately 203k images. For test reconstructions and interpolations, a pair of held-out images <math>(x,y)</math> from the test set is auto-encoded (separately) to produce <math>(z_x, z_y)</math> in the latent space.

'''Training Details - MNIST'''

The authors used mini-batches of size 100 and trained the models for 100 epochs. They used λ = 10 and a variance of 1 for the prior <math>P_Z</math>. For the encoder-decoder pair, they initially set the Adam learning rate to α = 0.01, and for the adversary in WAE-GAN to α = 0.005. After 30 epochs they decreased both by a factor of 2, and after the first 50 epochs further by a factor of 5. Both the encoder and the decoder used fully convolutional architectures with 4x4 convolutional filters.

'''Training Details - CelebA'''

The authors took 140x140 center crops of the CelebA images and then resized them to 64x64 resolution. They again used mini-batches of size 100 and trained the models for up to 250 epochs. All reported WAE models were trained for 55 epochs and the VAE for 68 epochs. For WAE-MMD they used λ = 100 and for WAE-GAN λ = 1. Both used a variance of 2 for the prior <math>P_Z</math>.

For WAE-MMD the learning rate of Adam was initially set to α = 0.01. For WAE-GAN the learning rate of Adam for the encoder-decoder pair was initially set to α = 0.003 and for the adversary to 0.01. All learning rates were decreased by a factor of 2 after 30 epochs, further by a factor of 5 after the first 50 epochs, and finally by an additional factor of 10 after the first 100 epochs.
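The step schedule described above can be written as a small function. This sketch assumes that the factors accumulate (so the rate is divided by 2, then 10, then 100 in total) and that "after 30 epochs" means from the zero-based epoch index 30 onward; the function name is illustrative.

```python
def celeba_learning_rate(epoch, base_lr):
    """Step schedule: /2 after 30 epochs, a further /5 after 50, a further /10 after 100.

    Assumes zero-based epoch indices and cumulative decay factors.
    """
    lr = base_lr
    if epoch >= 30:
        lr /= 2.0
    if epoch >= 50:
        lr /= 5.0
    if epoch >= 100:
        lr /= 10.0
    return lr


# Initial rate for WAE-MMD as quoted in the text above.
for epoch in (0, 30, 50, 100):
    print(epoch, celeba_learning_rate(epoch, base_lr=0.01))
```

Since the reported WAE models were trained for 55 epochs, only the first two decay steps would have been reached for them under this reading.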

The main evaluation criteria were whether the WAE model can simultaneously achieve:

<ol>

<li>accurate reconstruction of the data points</li>

<li>reasonable geometry of the latent manifold</li>

<li>generation of high quality random samples</li>

</ol>

For the model to generalize well, (1) and (2) should be met on both the training and test data sets.

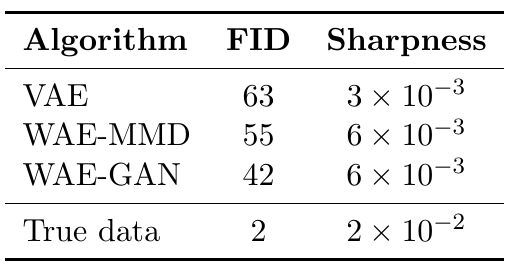

The proposed model achieves reasonably good results, as highlighted in the figures given below:

[[File:ka2khan_figure_3.png|800px|thumb|center|Using CelebA dataset]]

[[File:ka2khan_figure_4.png|800px|thumb|center|Using CelebA dataset, FID (Fréchet Inception Distance [32]): smaller is better, sharpness: larger is better]]

=Conclusion=

The authors proposed a new class of algorithms for building generative models, called Wasserstein Autoencoders, based on the optimal transport cost. They related the newly proposed model to existing probabilistic modeling techniques and evaluated it empirically on two real-world datasets. They compared the results with those obtained using VAEs on the same datasets to show that the proposed models generate samples of higher quality, in addition to being easy to train and reconstructing the data points well.

The authors state that in future work they will further explore the criteria for matching the encoding distribution <math>Q_Z</math> to the prior distribution <math>P_Z</math>, evaluate whether it is feasible to adversarially train the cost function <math>c</math> in the input space <math>\mathcal{X}</math>, and carry out a theoretical analysis of the dual formulations for WAE-GAN and WAE-MMD.

=Future Work=

Following this paper, another generative model based on the concept of optimal transport was introduced in [34]. Optimal transport measures the distance between probability distributions as the cost of transporting one distribution onto the other (hence the name). The resulting model, called "Sliced-Wasserstein Autoencoders" (SWAE), is simple to implement and provides the capabilities of Wasserstein Autoencoders.

([https://openreview.net/forum?id=HkL7n1-0b]) The results on the MNIST and CelebA datasets look convincing, though the paper could include additional evaluation comparing the adversarial loss with the straightforward MMD metric and discuss their pros and cons. Given the challenges in evaluating and comparing closely related auto-encoder solutions, the authors could also design demonstrative experiments for cases where the Wasserstein distance helps, and perhaps for its potential limitations.

=Critique=

Although this paper presents empirical tests that explain its method appropriately, it would benefit from clearer notation and from details of the architectures used in the experiments. Furthermore, the authors could compare their results with those of other similar works. As pointed out by a reviewer, the closest work to this paper is the adversarial variational Bayes framework by Mescheder et al., which also attempts to unify VAEs and GANs. Although the authors describe the conceptual differences and advantages over that approach, it would be beneficial to include actual comparisons in the results section.

Moreover, the performance of the algorithm is not a significant improvement over the previous VAE algorithm. The performance could be characterized better if the authors ran empirical tests on a wider variety of datasets. However, the methodology is flexible and unifies other types of algorithms, which is a major benefit, and it does so without compromising the stability of training.

=References=

[33] B. Poole, A. Alemi, J. Sohl-Dickstein, and A. Angelova. Improved generator objectives for GANs, 2016.

[34] S. Kolouri, C. E. Martin, and G. K. Rohde. Sliced-wasserstein autoencoder: An embarrassingly simple generative model. arXiv preprint arXiv:1804.01947, 2018.

Latest revision as of 19:25, 10 December 2018

The first version of this work was published in 2017 and this version (which is the third revision) is presented in ICLR 2018. Source code for the first version is available here

Introduction

Early successes in the field of representation learning were based on supervised approaches, which used large labeled datasets to achieve impressive results. On the other hand, popular unsupervised generative modeling methods mainly consisted of probabilistic approaches focusing on low dimensional data. In recent years, models have been proposed which try to combine these two approaches. One such popular method is the variational auto-encoder (VAE). VAEs are theoretically elegant but have the major drawback of generating blurry samples when used for modeling natural images. In comparison, generative adversarial networks (GANs) produce much sharper samples but have their own problems, which include the lack of an encoder, being harder to train, and "mode collapse". Mode collapse refers to the inability of the model to capture all the variability in the true data distribution. Currently, there is a lot of activity around finding and evaluating numerous GAN architectures and combining VAEs and GANs, but a model which combines the best of both GANs and VAEs is yet to be discovered.

The work done in this paper builds upon the theoretical work done in Bousquet et al. [2017] [4]. The authors tackle generative modeling using optimal transport (OT). The OT cost is a measure of distance between probability distributions.

To be more specific on the OT:

Given a function [math]\displaystyle{ c : X × Y → R }[/math], they seek a minimizer of [math]\displaystyle{ C(µ, ν) := \underset{π ∈ Π(µ, ν)}{inf} \int_{X×Y}{c(x, y)dπ(x, y)} }[/math]

The measures [math]\displaystyle{ π ∈ Π(µ, ν) }[/math] are called transport plans or transference plans. The measures [math]\displaystyle{ π ∈ Π(µ, ν) }[/math] achieving the infimum are called optimal transport plans. The classical interpretation of this problem is the problem of minimizing the total cost [math]\displaystyle{ C(µ, ν) }[/math] of transporting the mass distribution [math]\displaystyle{ µ }[/math] to the mass distribution [math]\displaystyle{ ν }[/math], where the cost of transporting one unit of mass at the point [math]\displaystyle{ x ∈ X }[/math] to one unit of mass at the point [math]\displaystyle{ y ∈ Y }[/math] is given by the cost function [math]\displaystyle{ c(x, y) }[/math].
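The transport problem above can be made concrete on a tiny discrete example. The following is a minimal sketch, not code from the paper: it assumes both measures are uniform over the same number of points, in which case an optimal plan can be taken to be a one-to-one matching, so all matchings can simply be enumerated. The function names `ot_cost_uniform` and `squared_cost` are hypothetical.

```python
from itertools import permutations


def squared_cost(x, y):
    """Cost of moving one unit of mass from x to y (squared distance)."""
    return (x - y) ** 2


def ot_cost_uniform(xs, ys, cost):
    """Exact OT cost between two uniform empirical measures of equal size.

    Each point carries mass 1/n. For such measures an optimal transport plan
    can be chosen to be a permutation, so for tiny n we enumerate all n!
    matchings and keep the cheapest one.
    """
    n = len(xs)
    assert n == len(ys), "this sketch assumes equally many points on both sides"
    best = float("inf")
    for perm in permutations(range(n)):
        total = sum(cost(xs[i], ys[perm[i]]) for i in range(n)) / n
        best = min(best, total)
    return best


# Each point of xs is matched to the point of ys that is 0.5 away.
print(ot_cost_uniform([0.0, 1.0, 2.0], [2.5, 0.5, 1.5], squared_cost))  # 0.25
```

Brute-force enumeration is only feasible for a handful of points; it is used here purely to illustrate the definition.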

One beneficial feature of the OT cost is that it induces a much weaker topology than other costs, including the f-divergences associated with the original GAN algorithms. This feature is crucial in applications where the data is usually supported on low dimensional manifolds in the input space. The problem with stronger notions of distance such as f-divergences is that they often max out and provide no useful gradients for training. In comparison, the OT cost has been claimed to behave much more nicely [5, 8]. Despite the preceding claim, the implementation, which is similar to GANs, still requires the addition of a constraint or a regularization term to the objective function.

Original Contributions

Let [math]\displaystyle{ P_X }[/math] be the true but unknown data distribution, and let [math]\displaystyle{ P_G }[/math] be the latent variable model specified by the prior distribution [math]\displaystyle{ P_Z }[/math] of latent codes [math]\displaystyle{ Z \in \mathcal{Z} }[/math] and the generative model [math]\displaystyle{ P_G(X|Z) }[/math] of the data points [math]\displaystyle{ X \in \mathcal{X} }[/math] given [math]\displaystyle{ Z }[/math]. The goal in this paper is to minimize the OT cost [math]\displaystyle{ W_c(P_X, P_G) }[/math].

The main contributions are given below:

- A new class of auto-encoders called Wasserstein Auto-Encoders (WAE). WAEs minimize the optimal transport cost [math]\displaystyle{ W_c(P_X, P_G) }[/math] for any cost function [math]\displaystyle{ c }[/math]. As is the case with VAEs, the WAE objective function is made up of two terms: the c-reconstruction cost and a regularizer term [math]\displaystyle{ \mathcal{D}_Z(P_Z, Q_Z) }[/math] which penalizes the discrepancy between two distributions in [math]\displaystyle{ \mathcal{Z}: P_Z \text{ and } Q_Z }[/math]. [math]\displaystyle{ Q_Z }[/math] is the distribution of encoded points, i.e. [math]\displaystyle{ Q_Z := \mathbb{E}_{P_X}[Q(Z|X)] }[/math]. Note that when [math]\displaystyle{ c }[/math] is the squared cost and the regularizer term is the GAN objective, WAE is equivalent to the adversarial auto-encoders described in [2].

- Experimental results of using WAE on MNIST and CelebA datasets with squared cost [math]\displaystyle{ c(x, y) = ||x - y||_2^2 }[/math]. The results of these experiments show that WAEs have the good features of VAEs such as stable training, encoder-decoder architecture, and a nice latent manifold structure while simultaneously improving the quality of the generated samples.

- Two different regularizers. One is based on GANs and adversarial training in the latent space [math]\displaystyle{ \mathcal{Z} }[/math]. The other is based on the "Maximum Mean Discrepancy" (MMD), which is known to perform well when matching high dimensional standard normal distributions. This second regularizer also makes training a fully adversary-free min-min optimization problem and removes the need to tune a GAN. GAN training often suffers from mode collapse, is sensitive to hyper-parameters, and has a loss that is hard to interpret since it fluctuates during training. WAE solves such problems and is much more developer-friendly. Most importantly, the loss in WAE is interpretable, making it easier to decide when to terminate the training.

- The final contribution is the mathematical analysis used to derive the WAE objective function. In particular, the mathematical analysis shows that in the case of generative models, the primal form of [math]\displaystyle{ W_c(P_X, P_G) }[/math] is equivalent to a problem which deals with the optimization of a probabilistic encoder [math]\displaystyle{ Q(Z|X) }[/math]

The paper provides an ostensibly simple recipe to implement a non-blurry VAE (it is generative). It provides what looks like an elegant and logical way to use the Wasserstein distance to set up the VAE/GAN problem.

The paper gives three instructive VAE/GAN model comparisons, unifying them thematically: Adversarial Autoencoders (AAE), Adversarial Variational Bayes (AVB), and the original Variational Autoencoders (VAE). These generalizations arise for the case with random decoders (the paper introduces the idea with deterministic decoders and then extends it to random decoders), with a play on the regularizer of the VAE, which these papers replace with a GAN.

Proposed Method

The method proposed by the authors uses a novel auto-encoder architecture to minimize the optimal transport cost [math]\displaystyle{ W_c(P_X, P_G) }[/math]. In the optimization problem that follows, the decoder tries to accurately reconstruct the data points as measured by the cost function [math]\displaystyle{ c }[/math]. The encoder tries to achieve the following two conflicting goals at the same time: (1) match the distribution of the encoded data points [math]\displaystyle{ Q_Z := \mathbb{E}_{P_X}[Q(Z|X)] }[/math] to the prior distribution [math]\displaystyle{ P_Z }[/math] as measured by the divergence [math]\displaystyle{ \mathcal{D}_Z(P_Z, Q_Z) }[/math], and (2) make sure that the encoded latent vectors contain enough information for the reconstructions of the data points to be of high quality. The figure below illustrates this:

Figure 1: Both VAE and WAE have objectives which are composed of two terms. The two terms are the reconstruction cost and the regularizer term which penalizes the divergence between [math]\displaystyle{ P_Z }[/math] and [math]\displaystyle{ Q_Z }[/math]. VAE forces [math]\displaystyle{ Q(Z|X = x) }[/math] to match [math]\displaystyle{ P_Z }[/math] for each of the different training examples drawn from [math]\displaystyle{ P_X }[/math]. As shown in the figure, every red ball representing [math]\displaystyle{ Q_z }[/math] is forced to match [math]\displaystyle{ P_Z }[/math], depicted as whitish triangles. This causes the red balls to intersect and results in reconstruction problems. On the other hand, WAE coerces the mixture [math]\displaystyle{ Q_Z := \int{Q(Z|X)\ dP_X} }[/math] to match [math]\displaystyle{ P_Z }[/math]. This gives the encoded latent codes a better chance of staying apart from each other. As a consequence, higher reconstruction quality is achieved.

Preliminaries and Notations

The authors use calligraphic letters to denote sets (for example, [math]\displaystyle{ \mathcal{X} }[/math]), capital letters for random variables (for example, [math]\displaystyle{ X }[/math]), and lower case letters for their values (for example, [math]\displaystyle{ x }[/math]). Probability distributions are also denoted with capital letters (for example, [math]\displaystyle{ P(X) }[/math]) and the corresponding densities are denoted with lowercase letters (for example, [math]\displaystyle{ p(x) }[/math]).

Several measures of difference between probability distributions are also used by the authors. These include f-divergences given by [math]\displaystyle{ D_f(p_X||p_G) := \int{f(\frac{p_X(x)}{p_G(x)})p_G(x)}dx\ \text{where}\ f:(0, \infty) → \mathcal{R} }[/math] is any convex function satisfying [math]\displaystyle{ f(1) = 0 }[/math]. Other divergences used include the KL divergence ([math]\displaystyle{ D_{KL} }[/math]) and the Jensen-Shannon divergence ([math]\displaystyle{ D_{JS} }[/math]).
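To illustrate how the KL and Jensen-Shannon divergences fit the f-divergence template, here is a small sketch for discrete distributions, where the integral becomes a sum. The generator functions `f_kl` and `f_js` are standard textbook choices rather than something stated in the summary above, the natural logarithm is assumed, and all function names are hypothetical.

```python
import math


def f_divergence(p, q, f):
    """D_f(p || q) = sum_x f(p(x) / q(x)) * q(x), assuming q(x) > 0 everywhere."""
    return sum(f(pi / qi) * qi for pi, qi in zip(p, q))


def f_kl(t):
    """f(t) = t log t generates the KL divergence; f is convex and f(1) = 0."""
    return t * math.log(t) if t > 0 else 0.0


def f_js(t):
    """A convex f with f(1) = 0 that generates the Jensen-Shannon divergence."""
    if t == 0:
        return 0.5 * math.log(2.0)
    return 0.5 * (t * math.log(2 * t / (t + 1)) + math.log(2 / (t + 1)))


p = [0.5, 0.5]
q = [0.25, 0.75]
print(f_divergence(p, q, f_kl))  # KL(p || q)
print(f_divergence(p, q, f_js))  # JS(p, q); symmetric in p and q
```

Identical distributions give a ratio of 1 everywhere, so the condition [math]\displaystyle{ f(1) = 0 }[/math] is what makes the divergence vanish in that case.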

Optimal Transport and its Dual Formulations

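A rich class of distance measures between probability distributions is motivated by the optimal transport problem. One formulation of the optimal transport problem is Kantorovich's formulation, given by:

[math]\displaystyle{ W_c(P_X, P_G) := \underset{\Gamma \in \mathcal{P}(X \sim P_X ,Y \sim P_G)}{inf} \mathbb{E}_{(X,Y) \sim \Gamma}[c(X,Y)] }[/math]

where [math]\displaystyle{ c(x, y): \mathcal{X} \times \mathcal{X} → \mathcal{R}_+ }[/math] is any measurable cost function, and [math]\displaystyle{ \mathcal{P}(X \sim P_X, Y \sim P_G) }[/math] is the set of all joint distributions of (X, Y) with marginals [math]\displaystyle{ P_X\ \text{and}\ P_G }[/math] respectively.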

A particularly interesting case is when [math]\displaystyle{ (\mathcal{X}, d) }[/math] is a metric space and [math]\displaystyle{ c(x, y) = d^p(x, y)\ \text{for}\ p ≥ 1 }[/math]. In this case [math]\displaystyle{ W_p }[/math], the [math]\displaystyle{ p }[/math]-th root of [math]\displaystyle{ W_c }[/math], is called the p-Wasserstein distance.
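For intuition, the p-Wasserstein distance has a simple closed form in one special case that the paper does not rely on: two empirical measures on the real line with the same number of equally weighted samples. There the optimal coupling matches the sorted samples in order. The sketch below uses that known fact; the function name `wasserstein_1d` is hypothetical.

```python
def wasserstein_1d(xs, ys, p=1):
    """p-Wasserstein distance between two equal-size 1D empirical measures.

    With cost c(x, y) = |x - y|^p on the real line, the optimal coupling pairs
    the i-th smallest x with the i-th smallest y. W_c is the average cost of
    that pairing, and W_p is its p-th root.
    """
    assert len(xs) == len(ys) and p >= 1
    n = len(xs)
    w_c = sum(abs(a - b) ** p for a, b in zip(sorted(xs), sorted(ys))) / n
    return w_c ** (1.0 / p)


# Shifting every sample by 1 moves the distribution a distance of exactly 1.
print(wasserstein_1d([0.0, 1.0, 2.0], [3.0, 1.0, 2.0], p=1))  # 1.0
print(wasserstein_1d([0.0, 1.0, 2.0], [3.0, 1.0, 2.0], p=2))  # 1.0
```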

When [math]\displaystyle{ c(x, y) = d(x, y) }[/math] the following Kantorovich-Rubinstein duality holds:

[math]\displaystyle{ W_1(P_X, P_G) = \underset{f \in \mathcal{F}_L}{sup} \mathbb{E}_{X \sim P_X}[f(X)] - \mathbb{E}_{Y \sim P_G}[f(Y)] }[/math] where [math]\displaystyle{ \mathcal{F}_L }[/math] is the class of all bounded 1-Lipschitz functions on [math]\displaystyle{ (\mathcal{X}, d) }[/math].

Application to Generative Models: Wasserstein auto-encoders

The intuition behind modern generative models like VAEs and GANs is that they try to minimize specific distance measures between the data distribution [math]\displaystyle{ P_X }[/math] and the model [math]\displaystyle{ P_G }[/math]. Unfortunately, with the current knowledge and tools, it is usually really hard or even impossible to calculate most of the standard discrepancy measures especially when [math]\displaystyle{ P_X }[/math] is not known and [math]\displaystyle{ P_G }[/math] is parametrized by deep neural networks. Having said that, there are certain tricks available which can be employed to get around that difficulty.

For KL-divergence [math]\displaystyle{ D_{KL}(P_X, P_G) }[/math] minimization, or equivalently marginal log-likelihood [math]\displaystyle{ \mathbb{E}_{P_X}[\log P_G(X)] }[/math] maximization, one can use the famous variational lower bound, which provides a theoretically grounded framework. This has been used quite successfully by VAEs. In the general case of minimizing an f-divergence [math]\displaystyle{ D_f(P_X, P_G) }[/math], using its dual formulation along with f-GANs and adversarial training is viable. Finally, the OT cost [math]\displaystyle{ W_c(P_X, P_G) }[/math] can be minimized by using the Kantorovich-Rubinstein duality expressed as an adversarial objective. The Wasserstein-GAN implements this idea.

In this paper, the authors focus on latent variable models [math]\displaystyle{ P_G }[/math] given by a two step procedure. First, a code [math]\displaystyle{ Z }[/math] is sampled from a fixed distribution [math]\displaystyle{ P_Z }[/math] on a latent space [math]\displaystyle{ \mathcal{Z} }[/math]. Second, [math]\displaystyle{ Z }[/math] is mapped to the image [math]\displaystyle{ X \in \mathcal{X} = \mathcal{R}^d }[/math] with a (possibly random) transformation. This gives a density of the form

[math]\displaystyle{ p_G(x) := \int_{\mathcal{Z}} p_G(x|z)\, p_Z(z)\, dz, \quad \forall x \in \mathcal{X} }[/math]

provided all the probabilities involved are properly defined. In order to keep things simple, the authors focus on non-random decoders, i.e., the generative models [math]\displaystyle{ P_G(X|Z) }[/math] deterministically map [math]\displaystyle{ Z }[/math] to [math]\displaystyle{ X = G(Z) }[/math] using a fixed map [math]\displaystyle{ G: \mathcal{Z} → \mathcal{X} }[/math]. Similar results hold for random decoders, as shown by the authors in Appendix B.1.

Working under the model defined in the preceding paragraph, the authors find that the OT cost takes a much simpler form because the transportation plan factors through the map [math]\displaystyle{ G }[/math]: instead of finding a coupling [math]\displaystyle{ \Gamma }[/math] between two random variables in the [math]\displaystyle{ \mathcal{X} }[/math] space, one given by the distribution [math]\displaystyle{ P_X }[/math] and the other by the distribution [math]\displaystyle{ P_G }[/math], it is enough to find a conditional distribution [math]\displaystyle{ Q(Z|X) }[/math] such that its [math]\displaystyle{ Z }[/math] marginal, [math]\displaystyle{ Q_Z(Z) := \mathbb{E}_{X \sim P_X}[Q(Z|X)] }[/math], is the same as the prior distribution [math]\displaystyle{ P_Z }[/math]. This is formalized by the theorem given below, which was proven by the authors in [4].

Theorem 1. For [math]\displaystyle{ P_G }[/math] defined as above with deterministic [math]\displaystyle{ P_G(X|Z) }[/math] and any function [math]\displaystyle{ G:\mathcal{Z} → \mathcal{X} }[/math]

[math]\displaystyle{ \underset{\Gamma \in \mathcal{P}(X \sim P_X ,Y \sim P_G)}{inf} \mathbb{E}_{(X,Y) \sim \Gamma}[c(X,Y)] = \underset{Q: Q_Z = P_Z}{inf} \mathbb{E}_{P_X} \mathbb{E}_{Q(Z|X)}[c(X, G(Z))] }[/math]

where [math]\displaystyle{ Q_Z }[/math] is the marginal distribution of [math]\displaystyle{ Z }[/math] when [math]\displaystyle{ X \sim P_X }[/math] and [math]\displaystyle{ Z \sim Q(Z|X) }[/math].
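One direction of Theorem 1 can be checked directly. The following is only a sketch for the deterministic decoder case and is not the full proof given in [4]. Take any encoder [math]\displaystyle{ Q(Z|X) }[/math] with [math]\displaystyle{ Q_Z = P_Z }[/math], sample [math]\displaystyle{ X \sim P_X }[/math] and [math]\displaystyle{ Z \sim Q(Z|X) }[/math], and set [math]\displaystyle{ Y = G(Z) }[/math]. Because the marginal of [math]\displaystyle{ Z }[/math] is [math]\displaystyle{ P_Z }[/math], the variable [math]\displaystyle{ Y }[/math] is distributed according to [math]\displaystyle{ P_G }[/math], so the pair [math]\displaystyle{ (X, Y) }[/math] is a valid coupling of [math]\displaystyle{ P_X }[/math] and [math]\displaystyle{ P_G }[/math]. Hence

[math]\displaystyle{ \underset{\Gamma \in \mathcal{P}(X \sim P_X ,Y \sim P_G)}{inf} \mathbb{E}_{(X,Y) \sim \Gamma}[c(X,Y)] \le \mathbb{E}_{P_X} \mathbb{E}_{Q(Z|X)}[c(X, G(Z))] }[/math]

and taking the infimum over all such [math]\displaystyle{ Q }[/math] shows that the left-hand side of the theorem is at most the right-hand side. The reverse inequality, which requires building a suitable encoder from an arbitrary coupling, is the less obvious part and is covered in [4].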

According to the authors, the result above allows optimization over random encoders [math]\displaystyle{ Q(Z|X) }[/math] instead of optimizing over all couplings of [math]\displaystyle{ X }[/math] and [math]\displaystyle{ Y }[/math]. Both problems are still constrained. To find a numerical solution, the authors relax the constraint on [math]\displaystyle{ Q_Z }[/math] by adding a regularizer term to the objective. This gives the WAE objective:

[math]\displaystyle{ D_{WAE}(P_X, P_G) := \underset{Q(Z|X) \in \mathcal{Q}}{inf} \mathbb{E}_{P_X} \mathbb{E}_{Q(Z|X)}[c(X, G(Z))] + \lambda \cdot \mathcal{D}_Z(Q_Z, P_Z) }[/math]

where [math]\displaystyle{ \mathcal{Q} }[/math] is any nonparametric set of probabilistic encoders, [math]\displaystyle{ \mathcal{D}_Z }[/math] is an arbitrary measure of distance between [math]\displaystyle{ Q_Z }[/math] and [math]\displaystyle{ P_Z }[/math], and [math]\displaystyle{ \lambda > 0 }[/math] is a hyperparameter. As is the case with the VAEs, the authors propose using deep neural networks to parameterize both encoders [math]\displaystyle{ Q }[/math] and decoders [math]\displaystyle{ G }[/math]. Note that, unlike VAEs, WAE allows for non-random encoders deterministically mapping their inputs to their latent codes.
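The structure of this objective on a mini-batch can be sketched in a few lines. This is an illustration under simplifying assumptions, not the authors' implementation: the encoder and decoder are plain deterministic Python functions rather than neural networks, and the names `wae_objective` and `mean_gap` are hypothetical. The toy penalty `mean_gap` merely stands in for [math]\displaystyle{ \mathcal{D}_Z }[/math] and is far too weak to be used in practice.

```python
def wae_objective(batch, encode, decode, prior_samples, cost, penalty, lam):
    """Mini-batch estimate of: reconstruction cost + lam * D_Z(Q_Z, P_Z).

    `encode` and `decode` are deterministic maps; `penalty` compares the
    encoded codes (samples from Q_Z) with samples drawn from the prior P_Z.
    """
    codes = [encode(x) for x in batch]
    reconstruction = sum(cost(x, decode(z)) for x, z in zip(batch, codes)) / len(batch)
    return reconstruction + lam * penalty(codes, prior_samples)


def mean_gap(codes, prior_samples):
    """Toy stand-in for D_Z: absolute difference between the sample means."""
    mean_q = sum(codes) / len(codes)
    mean_p = sum(prior_samples) / len(prior_samples)
    return abs(mean_q - mean_p)


# Scalar toy problem: perfect reconstruction, but codes are off-center.
value = wae_objective(
    batch=[2.0, 4.0],
    encode=lambda x: x / 2,
    decode=lambda z: 2 * z,
    prior_samples=[0.0, 1.0],
    cost=lambda x, y: (x - y) ** 2,
    penalty=mean_gap,
    lam=10.0,
)
print(value)  # 10.0: zero reconstruction cost plus 10 * |1.5 - 0.5|
```

The example shows the trade-off controlled by [math]\displaystyle{ \lambda }[/math]: an encoder can reconstruct perfectly and still be penalized when its codes do not look like samples from the prior.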

The authors propose two different regularizers [math]\displaystyle{ \mathcal{D}_Z(Q_Z, P_Z) }[/math]

GAN-based [math]\displaystyle{ \mathcal{D}_z }[/math]

One option is to use [math]\displaystyle{ \mathcal{D}_Z(Q_Z, P_Z) = \mathcal{D}_{JS}(Q_Z, P_Z) }[/math] along with adversarial training for estimation. In particular, a discriminator (adversary) is used in the latent space [math]\displaystyle{ \mathcal{Z} }[/math] to separate "true" points sampled from [math]\displaystyle{ P_Z }[/math] from "fake" ones sampled from [math]\displaystyle{ Q_Z }[/math]. This leads to the WAE-GAN as described in Algorithm 1 listed below. Even though WAE-GAN still uses max-min optimization, one positive feature is that it moves the adversary from the input (pixel) space [math]\displaystyle{ \mathcal{X} }[/math] to the latent space [math]\displaystyle{ \mathcal{Z} }[/math]. Additionally, the true latent space distribution [math]\displaystyle{ P_Z }[/math] might have a nice shape with a single mode (for a Gaussian prior), making the task of matching much easier than matching an unknown, complex, and possibly multi-modal distribution, which is usually the case in GANs. This leads to the second penalty.
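The two losses involved in such a latent-space adversary can be sketched as follows. This is a hedged illustration using the standard GAN cross-entropy losses, with samples from the prior treated as "true" and encoded points as "fake"; it is an assumption that this matches the exact form used in Algorithm 1. The inputs are discriminator outputs in (0, 1), and both function names are hypothetical.

```python
import math


def discriminator_loss(d_prior, d_encoded):
    """Binary cross-entropy for the latent discriminator.

    d_prior[i]   = D(z_i)       for z_i sampled from the prior P_Z ("true")
    d_encoded[i] = D(z_tilde_i) for encoded points from Q_Z       ("fake")
    """
    n = len(d_prior)
    return -sum(math.log(a) + math.log(1.0 - b) for a, b in zip(d_prior, d_encoded)) / n


def encoder_penalty(d_encoded):
    """Penalty for the encoder: small when encoded points fool the discriminator."""
    return -sum(math.log(b) for b in d_encoded) / len(d_encoded)


# A discriminator that cannot tell the two sets apart outputs 0.5 everywhere.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # 2 * log(2)
print(encoder_penalty([0.5, 0.5]))                 # log(2)
```

In training, the discriminator would be updated to decrease `discriminator_loss`, while the encoder would be updated to decrease the reconstruction cost plus [math]\displaystyle{ \lambda }[/math] times `encoder_penalty`.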

MMD-based [math]\displaystyle{ \mathcal{D}_z }[/math]

For a positive-definite reproducing kernel [math]\displaystyle{ k: \mathcal{Z} \times \mathcal{Z} → \mathcal{R} }[/math], the maximum mean discrepancy (MMD) is defined as:

[math]\displaystyle{ MMD_k(P_Z, Q_Z) = \left\| \int_{\mathcal{Z}} k(z, \cdot)\, dP_Z(z) - \int_{\mathcal{Z}} k(z, \cdot)\, dQ_Z(z) \right\|_{\mathcal{H}_k} }[/math]

where [math]\displaystyle{ \mathcal{H}_k }[/math] is the RKHS (reproducing kernel Hilbert space) of real-valued functions mapping [math]\displaystyle{ \mathcal{Z} }[/math] to [math]\displaystyle{ \mathcal{R} }[/math]. If [math]\displaystyle{ k }[/math] is characteristic, then [math]\displaystyle{ MMD_k }[/math] defines a metric and can be used as a distance measure. The authors propose to use [math]\displaystyle{ \mathcal{D}_Z(P_Z, Q_Z) = MMD_k(P_Z, Q_Z) }[/math]. MMD also has an unbiased U-statistic estimator which can be used along with stochastic gradient descent (SGD) methods. This gives WAE-MMD as described in Algorithm 2 listed below. Note that MMD is known to perform well when matching high dimensional standard normal distributions, so it is expected that this penalty will work well when the prior [math]\displaystyle{ P_Z }[/math] is Gaussian.
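A minimal sketch of the unbiased U-statistic estimator of the squared MMD is given below. The Gaussian (RBF) kernel and its bandwidth are assumptions made for illustration only; the summary above does not specify which kernel the authors use. The function names `rbf_kernel` and `mmd2_unbiased` are hypothetical.

```python
import math


def rbf_kernel(a, b, bandwidth=1.0):
    """Gaussian kernel between two latent vectors (an assumed kernel choice)."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd2_unbiased(zs_prior, zs_encoded, kernel=rbf_kernel):
    """Unbiased estimate of MMD_k^2 between samples of P_Z and Q_Z.

    Within-sample sums skip the diagonal (i == j), which is what makes the
    estimator unbiased. As a consequence the estimate can be slightly negative.
    """
    n, m = len(zs_prior), len(zs_encoded)
    assert n > 1 and m > 1
    k_pp = sum(kernel(zs_prior[i], zs_prior[j])
               for i in range(n) for j in range(n) if i != j)
    k_qq = sum(kernel(zs_encoded[i], zs_encoded[j])
               for i in range(m) for j in range(m) if i != j)
    k_pq = sum(kernel(a, b) for a in zs_prior for b in zs_encoded)
    return k_pp / (n * (n - 1)) + k_qq / (m * (m - 1)) - 2.0 * k_pq / (n * m)


prior = [[0.0], [0.1]]
far_codes = [[5.0], [5.1]]
near_codes = [[0.05], [0.15]]
print(mmd2_unbiased(prior, far_codes))   # large: the two samples are far apart
print(mmd2_unbiased(prior, near_codes))  # close to zero
```

Because the estimator is a plain sum of kernel evaluations, it is differentiable with respect to the encoded points whenever the kernel is, which is what allows it to be used as a penalty with SGD.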

Related Work

Literature on auto-encoders

Classical unregularized auto-encoders have an objective function which only tries to minimize the reconstruction cost. This results in distinct data points being encoded into distinct zones distributed chaotically across the latent space [math]\displaystyle{ \mathcal{Z} }[/math]. The latent space [math]\displaystyle{ \mathcal{Z} }[/math] in this scenario contains huge "holes" for which the decoder [math]\displaystyle{ P_G(X|Z) }[/math] has never been trained. In general, encoders trained this way do not provide very useful representations, and sampling from the latent space [math]\displaystyle{ \mathcal{Z} }[/math] becomes a difficult task [12].

VAEs [1] minimize a variational bound on the KL-divergence [math]\displaystyle{ D_{KL}(P_X, P_G) }[/math], which consists of the reconstruction cost and the regularizer [math]\displaystyle{ \mathbb{E}_{P_X}[D_{KL}(Q(Z|X), P_Z)] }[/math]. The regularizer penalizes the difference between the encoding of each training image and the prior [math]\displaystyle{ P_Z }[/math]. But this penalty still does not guarantee that the overall encoded distribution matches the prior distribution, as the WAE penalty does. In addition, VAEs require non-degenerate (i.e. non-deterministic) Gaussian encoders along with random decoders. A later paper [11] proposed a method which allows the use of non-Gaussian encoders with VAEs. In contrast, WAE minimizes [math]\displaystyle{ W_{c}(P_X, P_G) }[/math] and allows both probabilistic and deterministic encoder and decoder pairs.

When parameters are appropriately defined, WAE is able to generalize AAE in two ways: it can use any cost function in the input space and use any discrepancy measure [math]\displaystyle{ D_Z }[/math] in latent space [math]\displaystyle{ Z }[/math] other than the adversarial one.

There has been work on regularized auto-encoders called InfoVAE [14], which has an objective similar to that of [4] but uses different motivations and arguments.

WAEs explicitly define the cost function [math]\displaystyle{ c(x,y) }[/math], whereas VAEs rely on an implicit cost defined through a negative log-likelihood term. The VAE approach can theoretically induce any arbitrary cost function, but in practice it can require an estimation of the normalizing constant, which can be different for different values of [math]\displaystyle{ z }[/math].

Literature on Optimal Transport (OT)

[15] provides methods for computing the OT cost for large-scale data using SGD and sampling. The WGAN [5] proposes a generative model which minimizes the 1-Wasserstein distance [math]\displaystyle{ W_1(P_X, P_G) }[/math]. The WGAN algorithm does not provide an encoder and cannot be easily applied to an arbitrary cost [math]\displaystyle{ W_C }[/math]. The model proposed in [5] uses the dual form, whereas the model proposed in this paper uses the primal form. The primal form allows the use of any cost function [math]\displaystyle{ c }[/math] and naturally comes with an encoder.

In order to compute [math]\displaystyle{ W_c(P_X, P_G) }[/math] or [math]\displaystyle{ W_1(P_X, P_G) }[/math], the model needs to handle various non-trivial constraints. Various methods have been proposed in the literature ([5], [2], [8], [16], [15], [17], [18]) to avoid this difficulty.

Literature on GANs

A lot of the GAN variations which have been proposed in the literature come without an encoder. Examples include WGAN and f-GAN. These models are deficient in cases where a reconstruction of latent space is needed to use the learned manifold.

There have been numerous models proposed in the literature which try to combine the adversarial training of GANs with auto-encoder architectures. Some examples are [19], [20], [21], and [22]. There has also been work in which reproducing kernels have been used in the context of GANs ([23], [24]).

Experiments

Experiments were used to empirically evaluate the proposed WAE model.

Experimental setup

For the experimental setup, the authors used a Gaussian prior [math]\displaystyle{ \small P_Z }[/math] and the squared cost function [math]\displaystyle{ \small c(x,y) }[/math] for data points. Deterministic encoder-decoder pairs were used. The authors conducted experiments using the following two real-world datasets: (1) MNIST [27], made up of 70k images, and (2) CelebA [28], consisting of approximately 203k images. For test reconstructions and interpolations, a pair of held-out images [math]\displaystyle{ (x,y) }[/math] from the test set are auto-encoded (separately) to produce [math]\displaystyle{ (z_x, z_y) }[/math] in the latent space.
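Once the two latent codes are available, an interpolation path between them can be produced and decoded point by point. The sketch below assumes plain linear interpolation in the latent space, which is an assumption on our part since the summary does not state the interpolation scheme; the function name `interpolate_codes` is hypothetical.

```python
def interpolate_codes(z_x, z_y, steps):
    """Return `steps` latent codes evenly spaced on the segment from z_x to z_y.

    The first code equals z_x and the last equals z_y. Each intermediate code
    would then be passed through the decoder G to obtain an image.
    """
    assert steps >= 2 and len(z_x) == len(z_y)
    path = []
    for k in range(steps):
        t = k / (steps - 1)  # runs from 0.0 to 1.0 inclusive
        path.append([(1 - t) * a + t * b for a, b in zip(z_x, z_y)])
    return path


print(interpolate_codes([0.0, 0.0], [1.0, 2.0], steps=3))
# [[0.0, 0.0], [0.5, 1.0], [1.0, 2.0]]
```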

Training Details - MNIST

The authors used mini-batches of size 100 and trained the models for 100 epochs. They used λ = 10 and a variance of 1. For the encoder-decoder pair, they initially set the Adam learning rate to α = 0.01, and for the adversary in WAE-GAN to α = 0.005. After 30 epochs they decreased both by a factor of 2, and after the first 50 epochs further by a factor of 5. Both the encoder and the decoder used fully convolutional architectures with 4x4 convolutional filters.

Training Details - CelebA

The authors took 140x140 center crops of the CelebA images and then resized them to 64x64 resolution. They again used mini-batches of size 100 and trained the models for up to 250 epochs. All reported WAE models were trained for 55 epochs and the VAE for 68 epochs. For WAE-MMD the authors used λ = 100 and for WAE-GAN λ = 1. Both used a variance of 2.

For WAE-MMD the learning rate of Adam was initially set to α = 0.01. For WAE-GAN the learning rate of Adam for the encoder-decoder pair was initially set to α = 0.003 and for the adversary to 0.01. All learning rates were decreased by a factor of 2 after 30 epochs, further by a factor of 5 after the first 50 epochs, and finally by an additional factor of 10 after the first 100 epochs.
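The step-wise learning rate decay described in the training details can be written as a small helper. This is a sketch only: it assumes epochs are counted from 0 and that "after N epochs" means from epoch index N onward, and the names `learning_rate`, `MNIST_SCHEDULE`, and `CELEBA_SCHEDULE` are hypothetical.

```python
# Each entry is (epoch at which the decay starts, factor to divide by).
MNIST_SCHEDULE = [(30, 2), (50, 5)]
CELEBA_SCHEDULE = [(30, 2), (50, 5), (100, 10)]


def learning_rate(epoch, base_lr, schedule):
    """Learning rate at a given epoch under cumulative step-wise decay."""
    lr = base_lr
    for start_epoch, factor in schedule:
        if epoch >= start_epoch:
            lr /= factor
    return lr


# With the CelebA schedule, the rate is divided by 2, then 10, then 100 overall.
for epoch in (0, 30, 50, 100):
    print(epoch, learning_rate(epoch, base_lr=1.0, schedule=CELEBA_SCHEDULE))
```

Passing the initial rate as `base_lr` lets the same helper cover the encoder-decoder pair and the adversary, which start from different values but share the decay steps.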

The main evaluation criteria were to see if the WAE model can simultaneously achieve:

- accurate reconstruction of the data points

- reasonable geometry of the latent manifold

- generation of high quality random samples

For the model to generalize well (1) and (2) should be met on both the training and test data set.

The proposed model achieves reasonably good results, as highlighted in the figures given below:

Conclusion

The authors proposed a new class of algorithms for building a generative model called Wasserstein Autoencoders based on optimal transport cost. They related the newly proposed model to the existing probabilistic modeling techniques. They empirically evaluated the proposed models using two real-world datasets. They compared the results obtained using their proposed model with the results obtained using VAEs on the same dataset to show that the proposed models generate sample images of higher quality in addition to being easier to train and having good reconstruction quality of the data points.

The authors state that in future work they will further explore the criteria for matching the encoding distribution [math]\displaystyle{ Q_Z }[/math] to the prior distribution [math]\displaystyle{ P_Z }[/math], evaluate whether it is feasible to adversarially train the cost function [math]\displaystyle{ c }[/math] in the input space [math]\displaystyle{ \mathcal{X} }[/math], and provide a theoretical analysis of the dual formulations for WAE-GAN and WAE-MMD.

Future Work

Following the work of this paper, another generative model was introduced by [34] that is based on the concept of optimal transport. Optimal transport is basically the distance between probability distributions by transporting one of the distributions to the other (and hence the name of optimal transport). Then, a new simple model called "Sliced-Wasserstein Autoencoders" (SWAE) is presented, which is easily implemented, and provides the capabilities of Wasserstein Autoencoders.

([1]) The results from the MNIST and CelebA datasets look convincing, though the paper could include additional evaluation comparing the adversarial loss with the straightforward MMD metric and discuss their pros and cons. Given the challenges in evaluating and comparing closely related auto-encoder solutions, the authors could also design demonstrative experiments for cases where the Wasserstein distance helps, and perhaps for its potential limitations.

Critique

Although this paper presents some empirical tests to support its method, it would benefit from clearer notation and from details of the architectures used in the experiments. Furthermore, the authors could compare their results with those of other similar works. As pointed out by a reviewer, the closest work to this paper is the adversarial variational Bayes framework by Mescheder et al., which also attempts to unify VAEs and GANs. Although the authors describe the conceptual differences and advantages over that approach, it would be beneficial to include actual comparisons in the results section. Moreover, the performance of the algorithm is not a significant improvement over the previous VAE algorithm. The performance could be better characterized if the authors performed empirical tests on a wider variety of datasets. However, the methodology is flexible and unifies other types of algorithms, which is a huge benefit, and it does so without compromising the stability of training.

References

[1] D. P. Kingma and M. Welling. Auto-encoding variational Bayes. In ICLR, 2014.

[2] A. Makhzani, J. Shlens, N. Jaitly, and I. Goodfellow. Adversarial autoencoders. In ICLR, 2016.

[3] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. In NIPS, pages 2672–2680, 2014.

[4] O. Bousquet, S. Gelly, I. Tolstikhin, C. J. Simon-Gabriel, and B. Schölkopf. From optimal transport to generative modeling: the VEGAN cookbook, 2017.

[5] M. Arjovsky, S. Chintala, and L. Bottou. Wasserstein GAN, 2017.

[6] C. Villani. Topics in Optimal Transportation. AMS Graduate Studies in Mathematics, 2003.

[7] Sebastian Nowozin, Botond Cseke, and Ryota Tomioka. f-GAN: Training generative neural samplers using variational divergence minimization. In NIPS, 2016.

[8] I. Gulrajani, F. Ahmed, M. Arjovsky, V. Dumoulin, and A. Courville. Improved training of Wasserstein GANs, 2017.

[9] A. Gretton, K. M. Borgwardt, M. J. Rasch, B. Schölkopf, and A. J. Smola. A kernel two-sample test. Journal of Machine Learning Research, 13:723–773, 2012.

[10] F. Liese and K.-J. Miescke. Statistical Decision Theory. Springer, 2008.

[11] L. Mescheder, S. Nowozin, and A. Geiger. Adversarial variational bayes: Unifying variational autoencoders and generative adversarial networks, 2017.

[12] Y. Bengio, A. Courville, and P. Vincent. Representation learning: A review and new perspectives. Pattern Analysis and Machine Intelligence, 35, 2013.

[13] M. D. Hoffman and M. Johnson. Elbo surgery: yet another way to carve up the variational evidence lower bound. In NIPS Workshop on Advances in Approximate Bayesian Inference, 2016.

[14] S. Zhao, J. Song, and S. Ermon. InfoVAE: Information maximizing variational autoencoders, 2017.

[15] A. Genevay, M. Cuturi, G. Peyré, and F. R. Bach. Stochastic optimization for large-scale optimal transport. In Advances in Neural Information Processing Systems, pages 3432–3440, 2016.

[16] M. Cuturi. Sinkhorn distances: Lightspeed computation of optimal transport. In Advances in Neural Information Processing Systems, pages 2292–2300, 2013.

[17] Lenaic Chizat, Gabriel Peyré, Bernhard Schmitzer, and François-Xavier Vialard. Unbalanced optimal transport: geometry and kantorovich formulation. arXiv preprint arXiv:1508.05216, 2015.

[18] Matthias Liero, Alexander Mielke, and Giuseppe Savaré. Optimal entropy-transport problems and a new hellinger-kantorovich distance between positive measures. arXiv preprint arXiv:1508.07941, 2015.

[19] J. Zhao, M. Mathieu, and Y. LeCun. Energy-based generative adversarial network. In ICLR, 2017.

[20] V. Dumoulin, I. Belghazi, B. Poole, A. Lamb, M. Arjovsky, O. Mastropietro, and A. Courville. Adversarially learned inference. In ICLR, 2017.

[21] D. Ulyanov, A. Vedaldi, and V. Lempitsky. It takes (only) two: Adversarial generator-encoder networks, 2017.

[22] D. Berthelot, T. Schumm, and L. Metz. Began: Boundary equilibrium generative adversarial networks, 2017.

[23] Y. Li, K. Swersky, and R. Zemel. Generative moment matching networks. In ICML, 2015.

[24] G. K. Dziugaite, D. M. Roy, and Z. Ghahramani. Training generative neural networks via maximum mean discrepancy optimization. In UAI, 2015.

[25] R. Reddi, A. Ramdas, A. Singh, B. Poczos, and L. Wasserman. On the high-dimensional power of a linear-time two sample test under mean-shift alternatives. In AISTATS, 2015.

[26] C. L. Li, W. C. Chang, Y. Cheng, Y. Yang, and B. Poczos. Mmd gan: Towards deeper understanding of moment matching network, 2017.

[27] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner. Gradient-based learning applied to document recognition. In Proceedings of the IEEE, volume 86(11), pages 2278–2324, 1998.

[28] Ziwei Liu, Ping Luo, Xiaogang Wang, and Xiaoou Tang. Deep learning face attributes in the wild. In Proceedings of International Conference on Computer Vision (ICCV), 2015.

[29] D. P. Kingma and J. Ba. Adam: A method for stochastic optimization, 2014.

[30] A. Radford, L. Metz, and S. Chintala. Unsupervised representation learning with deep convolutional generative adversarial networks. In ICLR, 2016.

[31] S. Ioffe and C. Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift, 2015.

[32] Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, Günter Klambauer, and Sepp Hochreiter. GANs trained by a two time-scale update rule converge to a nash equilibrium. arXiv preprint arXiv:1706.08500, 2017.

[33] B. Poole, A. Alemi, J. Sohl-Dickstein, and A. Angelova. Improved generator objectives for GANs, 2016.

[34] S. Kolouri, C. E. Martin, and G. K. Rohde. Sliced-wasserstein autoencoder: An embarrassingly simple generative model. arXiv preprint arXiv:1804.01947, 2018.