STAT946F20/BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

Presented by

Wenyu Shen

Introduction

This paper introduces the structure of the BERT model. BERT stands for Bidirectional Encoder Representations from Transformers, and this language model set new state-of-the-art results on eleven natural language processing tasks. BERT advanced the state of the art for pre-training of contextual representations. One novel feature, compared to Word2Vec or GloVe, is BERT's ability to produce different representations for the same word in different contexts. To elaborate, Word2Vec always creates the same embedding for a given word regardless of the words that precede and follow it. BERT, however, generates different embeddings based on what precedes and follows the word. This is useful because words can be homonyms: "bank", for example, could refer to a "financial institution" or to the "land alongside or sloping down to a river or lake".

Transformer and BERT

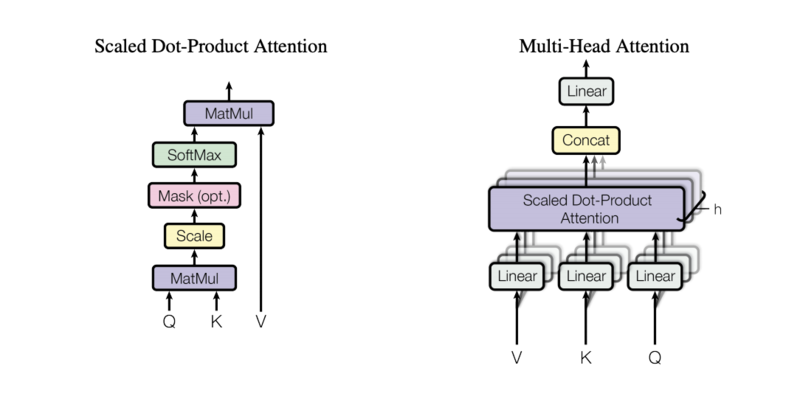

Let us start with an introduction to the encoder and decoder. As covered in class, the encoder-decoder model is applied to seq2seq problems. In a seq2seq problem, given an input sequence x, the encoder-decoder model generates an output sequence y based on x (for example, translation or question answering). However, when an RNN or a similar model is used as the basic architecture of the encoder-decoder, performance can degrade when the input sequence is too long. Although an encoder-decoder with attention avoids merging all the encoder outputs into a single context vector, the paper Attention Is All You Need [1] introduces a framework, the Transformer, that uses only attention in the encoder-decoder to perform machine translation. The Transformer uses Scaled Dot-Product Attention and a sequential mask in the decoder, and it usually performs Multi-head attention to derive features from different representation subspaces for each token. To capture the order of the inputs, the Transformer adds a positional encoding, which has the same dimension as the word embedding, to each token embedding (fixed sinusoids in [1], whereas BERT learns its position embeddings). BERT is built by stacking N Transformer encoder units.
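The Scaled Dot-Product Attention mentioned above can be sketched in a few lines. This is a minimal, single-head illustration in plain Python, assuming list-of-lists inputs and a boolean mask where `True` means "may attend"; the function names and the toy input are illustrative assumptions, not code from the paper.

```python
import math

def softmax(row):
    # Subtract the max for numerical stability before exponentiating.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values, mask=None):
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V.

    queries, keys: lists of d_k-dimensional vectors; values: list of vectors.
    mask[i][j] is True when position i may attend to position j (assumed convention).
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        if mask is not None:
            # Blocked positions get a very negative score, so their weight becomes 0.
            scores = [s if mask[i][j] else -1e9 for j, s in enumerate(scores)]
        weights = softmax(scores)
        out = [sum(w * v[c] for w, v in zip(weights, values))
               for c in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy self-attention over three 2-dimensional token vectors.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = scaled_dot_product_attention(x, x, x)
```

Multi-head attention would run several such heads on different learned projections of the input and concatenate the results; that part is omitted here for brevity.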

BERT

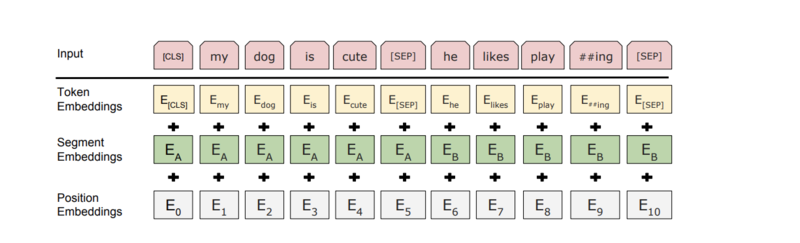

BERT works well in both the feature-based and the fine-tuning approaches. Both approaches start with unsupervised learning on a source A. The feature-based approach keeps the pre-trained parameters fixed, uses the labeled source B to train a task-specific model, and treats the pre-trained representations as additional features; the fine-tuning approach tunes all parameters when training on the downstream task. This paper improves the fine-tuning approach by proposing BERT.

Standard language models, such as OpenAI GPT, learn from left to right. A deep bidirectional model is strictly more powerful than a left-to-right model or even the concatenation of left-to-right and right-to-left models. However, bidirectional conditioning would allow each word to see itself indirectly, which makes the prediction problem trivial. Therefore, BERT uses the MLM (masked language model) objective to pre-train deep bidirectional Transformers. In this pre-training method, some random tokens are masked each time, and the model's objective is to predict the vocabulary id of each masked token based on both its left and its right context. BERT also performs the Next Sentence Prediction (NSP) task so that the model learns the relationship between sentences. In the NSP task, two sentences, A and B, are fed to the network, which predicts whether B follows A. In the training data, B is the actual next sentence 50% of the time (labeled IsNext) and a random sentence from the corpus 50% of the time (labeled NotNext). In addition, the input representation combines Token Embeddings, Segment Embeddings, and Position Embeddings so that BERT can handle a variety of downstream tasks.

Finally, the tokens selected in MLM are not always replaced with [MASK], because the [MASK] token never appears during fine-tuning and always masking would create a mismatch between pre-training and fine-tuning. To reduce this mismatch, the 15% of tokens selected for prediction are replaced with [MASK] 80% of the time, replaced with a random token 10% of the time, and left unchanged 10% of the time.
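The pre-training inputs described above can be sketched as follows. This is a simplified illustration, not the authors' data pipeline: the helper names (`make_nsp_pair`, `pack_pair`, `mask_tokens`), the toy sentences, and the choice to select each token independently with probability 0.15 are all assumptions made for the example.

```python
import random

CLS, SEP, MASK = "[CLS]", "[SEP]", "[MASK]"

def make_nsp_pair(sentences, i, rng):
    """Pair sentence i with its true successor (IsNext) or a random other one (NotNext)."""
    if rng.random() < 0.5:
        return sentences[i], sentences[i + 1], "IsNext"
    candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
    return sentences[i], rng.choice(candidates), "NotNext"

def pack_pair(tokens_a, tokens_b):
    """Join two tokenized sentences into one input and mark each token's segment."""
    tokens = [CLS] + tokens_a + [SEP] + tokens_b + [SEP]
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids

def mask_tokens(tokens, vocab, rng, select_prob=0.15):
    """Apply the 80/10/10 rule; labels[i] holds the original token at predicted positions."""
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        # Special tokens are never predicted; other tokens are selected at random.
        if tok in (CLS, SEP) or rng.random() >= select_prob:
            continue
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: leave the token unchanged
    return corrupted, labels

rng = random.Random(0)
sentences = [
    ["the", "man", "went", "to", "the", "store"],
    ["he", "bought", "milk"],
    ["penguins", "are", "flightless", "birds"],
]
vocab = sorted({tok for sent in sentences for tok in sent})
sent_a, sent_b, nsp_label = make_nsp_pair(sentences, 0, rng)
tokens, segment_ids = pack_pair(sent_a, sent_b)
corrupted, labels = mask_tokens(tokens, vocab, rng)
print(nsp_label, corrupted, labels, sep="\n")
```

The model would be trained to recover the non-`None` entries of `labels` from `corrupted` and to predict `nsp_label` from the pair; the segment ids are what the Segment Embeddings are looked up from.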

Applications

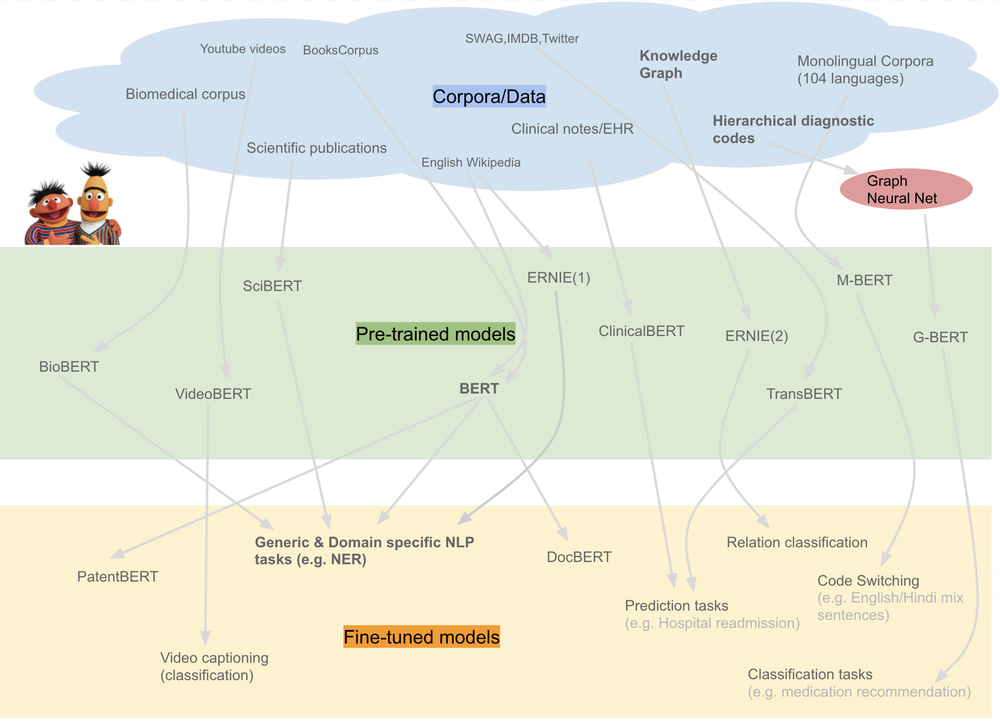

As previously mentioned, BERT has achieved state-of-the-art performance on eleven NLP tasks. BERT can also be trained on different corpora, as seen in figure 1, and different pre-training and fine-tuning procedures can then be applied downstream; the landscape shown is not exhaustive. This illustrates the wide range of applications for which BERT can be retrained.

Comparison between ELMo, GPT, and BERT

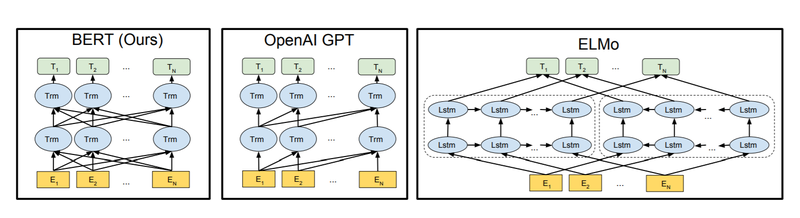

In this section, we will compare BERT with previous language models, particularly ELMo and GPT. These three models are among the biggest advancements in NLP. ELMo is a bi-directional LSTM model and is able to capture context information from both directions. It's a feature-based approach, which means the pre-trained representations are used as features. GPT and BERT are both transformer-based models. GPT only uses transformer decoders and is unidirectional. This means information only flows from the left to the right in GPT. In contrast, BERT only uses transformer encoders and is bidirectional. Therefore, it can capture more context information than GPT and tends to perform better when context information from both sides is important. GPT and BERT are fine-tuning-based approaches. Users can use the models on downstream tasks by simply fine-tuning model parameters.

By looking at the above picture, we can better understand the comparison between these three models. As mentioned above, GPT is unidirectional, which means that each position is connected only to positions on its left (the layers are not densely connected). BERT is bidirectional in the sense that connections from left to right and from right to left are both present (the layers are dense). ELMo is also bidirectional, but not in the same way as BERT: it uses a concatenation of independently trained left-to-right and right-to-left LSTMs. Note that among these three models, only BERT's representations are jointly conditioned on both left and right context in all layers.
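One way to picture the difference between the two Transformer-based models is through the attention mask each one effectively uses. The sketch below is only an illustration under that assumption; the helper names and the example sentence are hypothetical, and ELMo is left out because it is not attention-based.

```python
def causal_mask(n):
    # GPT-style: position i may attend only to positions j <= i (left context).
    return [[j <= i for j in range(n)] for i in range(n)]

def bidirectional_mask(n):
    # BERT-style: every position may attend to every other position.
    return [[True] * n for _ in range(n)]

def visible_context(tokens, mask, position):
    # Tokens that the given position is allowed to attend to.
    return [tok for j, tok in enumerate(tokens) if mask[position][j]]

tokens = ["the", "river", "bank", "was", "muddy"]
gpt_view = visible_context(tokens, causal_mask(len(tokens)), 2)
bert_view = visible_context(tokens, bidirectional_mask(len(tokens)), 2)
print(gpt_view)   # ['the', 'river', 'bank']
print(bert_view)  # ['the', 'river', 'bank', 'was', 'muddy']
```

With the causal mask, the representation of "bank" cannot use "was muddy"; with the full mask it can draw on both sides, which is the sense in which BERT is jointly conditioned on left and right context.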

Conclusion

In conclusion, BERT is a powerful model pre-trained on a large amount of unlabeled data, and it is especially helpful when we want to perform NLP tasks with only a small amount of labeled data.

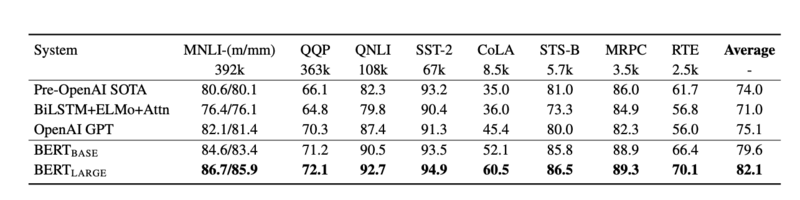

From Table 3 it can be observed that [math]\displaystyle{ BERT_{LARGE} }[/math] and [math]\displaystyle{ BERT_{BASE} }[/math] perform significantly better than the previous state-of-the-art models, with 7% and 4.5% improvements, respectively, in average accuracy over the previous best model (OpenAI GPT). It is also noteworthy that OpenAI GPT and [math]\displaystyle{ BERT_{BASE} }[/math] have similar architectures; the main difference is the attention masking ([math]\displaystyle{ BERT_{BASE} }[/math] attends in both directions), and [math]\displaystyle{ BERT_{BASE} }[/math] still gets an improvement of 4.5%. It can also be seen that [math]\displaystyle{ BERT_{LARGE} }[/math] outperforms [math]\displaystyle{ BERT_{BASE} }[/math] across all the datasets, and the difference is significant when there is less training data available.

Critique

BERT showed that Transformers are a good architecture for NLP downstream tasks, but the authors did not carefully explore the choice of hyper-parameters or the training and pre-training choices. As ALBERT [3] and RoBERTa [4] showed, choosing better hyper-parameters or training choices can yield similar or even better performance with less time and training data.

Repository

A GitHub repository for BERT is available at "official repository".

Fun facts

A collection of BERT-related papers published in 2019. The y-axis is the log of the citation count (based on Google Scholar).

References

[1] Ashish Vaswani and Noam Shazeer and Niki Parmar and Jakob Uszkoreit and Llion Jones and Aidan N. Gomez and Lukasz Kaiser and Illia Polosukhin. "Attention Is All You Need". (2017)

[2] Jacob Devlin and Ming-Wei Chang and Kenton Lee and Kristina Toutanova. "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding". (2019)

[3] Lan, Zhenzhong, et al. "ALBERT: A lite BERT for self-supervised learning of language representations." arXiv preprint arXiv:1909.11942 (2019).

[4] Liu, Yinhan, et al. "RoBERTa: A robustly optimized BERT pretraining approach." arXiv preprint arXiv:1907.11692 (2019).