Towards Deep Learning Models Resistant to Adversarial Attacks

Revision as of 22:25, 21 November 2018

Presented by

- Yongqi Dong

- Aden Grant

- Andrew McMurry

- Jameson Ngo

- Baizhi Song

- Yu Hao Wang

- Amy Xu

1. Introduction

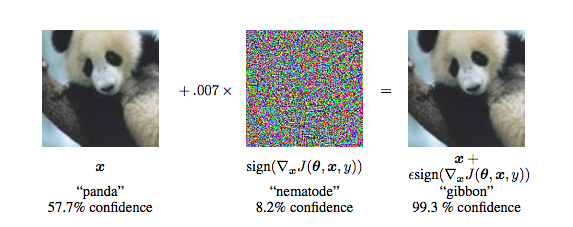

Classifiers, including state-of-the-art neural networks, can be tricked into giving incorrect results. An input that has been deliberately crafted to cause a misclassification is called an adversarial example, and producing one is called an adversarial attack. Such inputs are typically built by adding a small, carefully chosen perturbation to a legitimate input. These attacks are a prominent challenge for classifiers used in image processing and security systems, because changes to the input values that are imperceptible to the human eye can easily fool high-performing neural networks. As a result, resistance to adversarial attacks has become an increasingly important property for classifiers, and methods that make models robust to adversarial inputs need to be developed.
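
To illustrate how a perturbation that is tiny in every coordinate can still change a prediction, here is a minimal sketch assuming a hypothetical linear classifier sign(w · x + b) over 1000 input coordinates ("pixels"). It applies the worst-case perturbation whose size is at most ε per coordinate, which for a linear model is the sign-of-gradient step known as the fast gradient sign method (FGSM). The weights, the input, and ε = 0.03 are made up for illustration and do not come from the paper.

```python
import math

def predict(w, b, x):
    """Label (+1 or -1) assigned by the linear classifier sign(w . x + b)."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1

def worst_case_perturbation(w, y, eps):
    """l_inf-bounded perturbation that most decreases y * (w . x + b).

    Each coordinate moves by eps against the sign of y * w_i, so the margin
    drops by exactly eps * sum(|w_i|). This is the sign-of-gradient (FGSM) step.
    """
    return [0.0 if wi == 0 else -eps * y * math.copysign(1.0, wi) for wi in w]

# Hypothetical 1000-"pixel" input and weights (illustrative only).
d = 1000
w = [0.01 if i % 2 == 0 else -0.01 for i in range(d)]
b = 0.0
x = [0.52 if i % 2 == 0 else 0.50 for i in range(d)]
y = predict(w, b, x)                      # clean score is about 0.1, so y = +1

eps = 0.03                                # each pixel changes by at most 0.03
delta = worst_case_perturbation(w, y, eps)
x_adv = [xi + di for xi, di in zip(x, delta)]

print(y, predict(w, b, x_adv))            # 1 -1
```

Each coordinate moves by only 0.03, but the changes add up across all 1000 coordinates: the score drops by ε · ‖w‖₁ = 0.03 × 10 = 0.3, which exceeds the clean margin of 0.1, so the prediction flips from 1 to -1.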

While many approaches have been proposed to defend against adversarial attacks, we usually cannot be certain that a given defense will be robust against a broad range of adversaries. Furthermore, these defenses can often be evaded by stronger, adaptive adversaries.

Contributions

This paper uses robust optimization to study the adversarial robustness of neural networks. The authors conduct an experimental study of the saddle-point formulation that underlies adversarial training. They argue that, despite the problem's non-convexity and non-concavity, the saddle-point optimization problem can be reliably solved using first-order methods, in particular projected gradient descent (PGD) for the inner maximization and stochastic gradient descent (SGD) for the outer minimization. They explore how network capacity affects adversarial robustness and conclude that networks need larger capacity in order to be resistant to strong adversaries. Lastly, they train adversarially robust networks on MNIST and CIFAR10 using the saddle-point formulation.
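
The saddle-point problem is: minimize over the parameters θ the expected value, over data (x, y), of the maximum over perturbations δ with ‖δ‖∞ ≤ ε of the loss L(θ, x + δ, y). The sketch below shows the structure of the two nested loops, assuming a toy logistic-regression model on hypothetical 2-D data rather than the MNIST/CIFAR10 networks the authors train. The inner maximization is approximated by PGD (repeated sign-of-gradient ascent steps, each followed by projection back onto the ε-ball around the clean input), and the outer minimization is plain SGD on the loss evaluated at the PGD points. All function names and hyperparameters (eps, alpha, steps, lr, epochs) are illustrative assumptions, and the attack starts from the clean point rather than from a random start.

```python
import math
import random

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def sign(v):
    return (v > 0) - (v < 0)

def loss_grad_coef(w, b, x, y):
    """dL/ds for the logistic loss L = log(1 + exp(-y * s)) at s = w . x + b."""
    s = sum(wi * xi for wi, xi in zip(w, x)) + b
    return -y * sigmoid(-y * s)

def pgd_attack(w, b, x, y, eps, alpha, steps):
    """Inner maximization: projected sign-gradient ascent on L over the l_inf ball."""
    x_adv = list(x)
    for _ in range(steps):
        c = loss_grad_coef(w, b, x_adv, y)          # grad_x L = c * w
        x_adv = [xa + alpha * sign(c * wi) for xa, wi in zip(x_adv, w)]
        # Project back onto {x' : |x'_i - x_i| <= eps}.
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
    return x_adv

def adversarial_train(data, eps, alpha, steps, lr, epochs):
    """Outer minimization: SGD on the loss at the PGD adversarial examples."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = pgd_attack(w, b, x, y, eps, alpha, steps)
            c = loss_grad_coef(w, b, x_adv, y)      # grad_w L = c * x_adv, grad_b L = c
            w = [wi - lr * c * xi for wi, xi in zip(w, x_adv)]
            b -= lr * c
    return w, b

def robust_accuracy(w, b, data, eps, alpha, steps):
    """Fraction of points still classified correctly after a PGD attack."""
    hits = 0
    for x, y in data:
        x_adv = pgd_attack(w, b, x, y, eps, alpha, steps)
        s = sum(wi * xi for wi, xi in zip(w, x_adv)) + b
        hits += (1 if s >= 0 else -1) == y
    return hits / len(data)

# Hypothetical toy data: two Gaussian blobs centred at (1, 1) and (-1, -1).
rng = random.Random(0)
data = [([y + rng.gauss(0, 0.2), y + rng.gauss(0, 0.2)], y) for y in [1, -1] * 20]

w, b = adversarial_train(data, eps=0.3, alpha=0.1, steps=7, lr=0.1, epochs=20)
print(robust_accuracy(w, b, data, eps=0.3, alpha=0.1, steps=7))
```

For a linear model the inner maximum is already reached by a single sign step, so PGD is more than this toy needs; the example only illustrates how the attack sits inside the training loop, which is the structure the saddle-point view prescribes for deep networks.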

2. An Optimization View of Adversarial Robustness

Amy

4. Experiments: Adversarially Robust Deep Learning Models?

Amy

6. Related Works

Amy