A Brief Survey of Text Mining: Classification, Clustering and Extraction Techniques

Text mining is the process of extracting meaningful information from text that is either structured (ie. databases), semi-structured (ie. XML and JSON files) or unstructured (ie. word documents, videos and images). This paper discusses the various methods essential for the text mining field, from preprocessing and classification to clustering and extraction techniques, and also touches on applications of text mining in the biomedical and health domains.

Text preprocessing is a key component in various text mining algorithms and can affect the resulting accuracy of classification models. It encodes the text in a numerical way so that various classification models and clustering methods can be used on the data. The most common method of text representation is the vector space model, and while this is a simple model, it enables efficient analysis of large collections of documents.

The classification models used in the context of text mining aims to assign predefined classes to text documents, and some of the various models used include Naive Bayes, Nearest Neighbour, Decision Tree and Support Vector Machines (SVM). Clustering also has a wide range of applications in the context of text mining, including classification, visualization and document organization. Naive clustering methods usually do not work well for text data because it has distinct characteristics which require algorithms designed specifically for text data. The most popular text clustering algorithms used are hierarchical clustering, k-means clustering and probabilistic clustering (ie. topic modelling), but there are always tradeoffs between effectiveness and efficiency.

Information extraction (IE) is another critical aspect of text mining as it automatically extracts structured information from unstructured or semi-structured text. It is essentially a kind of "supervised" form of natural language processing where the information we are looking for is known beforehand. The first part of information extraction is named entity recognition (NER) which locates and classifies named entities in free text into predefined categories, and the second part is relation extraction which seeks and locates the semantic relations between entities in text documents. Common models used for NER include Hidden Markov model (HMM) and Conditional random fields (CRF).

One of the domains where text mining is frequently used is biomedical sciences. Due to the exponential growth in biomedical literature, it is difficult for biomedical scientists to keep up with relevant publications in their own research area. Therefore, text mining methods and machine learning algorithms are widely used to overcome the information overload.

Presented by

Qi Chu, Xiaoran Huang, Di Sang, Amanda Lam, Yan Jiao, Shuyue Wang, Yutong Wu

Background

There is a tremendous amount of text data in various forms created from social networks, patient records, healthcare insurance data, news outlets and more. Unstructured text is easily processed and analyzed by humans, but it is significantly harder for machines to understand. However, the large volume of text produced is still an invaluable source of information, so there is a desperate need for text mining algorithms in a wide variety of applications and domains that can effectively automate the processing of large amounts of text. Academics is a key domain where text mining has becoming increasingly important - the National Centre for Text Mining, operated by the University of Manchester, is the first publicly-funded centre that provides text mining services for the UK academic community (NaCTeM). Some of the earliest known papers of applying already known or new methods to textual data for text mining include research on a cluster-based approach to browsing large document collections (1992)[1], indexing by latent semantic analysis (1990) [2], and knowledge discovery in textual databases (1995)[3]. While text mining uses similar techniques as traditional data mining, the characteristics and nature of textual data requires some specific differences.

Text Representation And Encoding

Preprocessing is one of the key components in many text mining algorithms. It usually consists of the tasks such as tokenization, filtering, lemmatization and stemming. These four techniques are widely used in NLP.

Tokenization: In a paper in 1992, Webster et al. defined Tokenization as the initial step of NLP. the goal is to identify tokens, aka, the basic units that do not need to be further decomposed in the future processes[4]. It is the task of breaking a character sequence up into pieces (words/phrases) called tokens, and perhaps at the same time throw away certain characters such as punctuation marks. The list of tokens then is used to further processing.

Filtering: Filtering is usually done on documents to remove some of the words. A common filtering is stop-words removal. Stop words are the words frequently appear in the text without having much content information (e.g. prepositions, conjunctions, etc). Similarly words occurring quite often in the text said to have little information to distinguish different documents and also words occurring very rarely are also possibly of no significant relevance and can be removed from the documents.

Lemmatization: Lemmatization is the task that considers the morphological analysis of the words, i.e. grouping together the various inflected forms of a word so they can be analyzed as a single item. In other words lemmatization methods try to map verb forms to infinite tense and nouns to a single form. In order to lemmatize the documents we first must specify the POS of each word of the documents and because POS is tedious and error prone, in practice stemming methods are preferred.

e.g. operations, operate, operates, operational, operative => operate

Stemming: Stemming methods aim at obtaining stem (root) of derived words. Stemming algorithms are indeed language dependent. The first stemming algorithm introduced in 1968. The most widely stemming method used in English is introduced in 1980.

e.g. beautiful, beautifully => beauti

Lemmatization and stemming seem to have similar functions. Note that lemmatization follows a vocabulary, or a dictionary. Stemming is more of a heuristics that chops off prefixes and suffixes to obtain a root. The root is not necessarily a word in the dictionary.

Text preprocessing is language-specific. Though English and German both descended from the same root language, Proto-Germanic, German obtained an aglutinative morphology, which means words are glued together for a phrase. Meanwhile, for some Asian languages like Chinese, there is no alphabet. There is also no whitespace between characters. So how to preprocess the text really depends on the language and the application.

English: insurance companies providing legal protection

Chinese: 提供法律保护的保险公司

German: Kaftfahrzeug-Haftpflichtversicherung

Thai: บริษัท ประกันภัยที่ให้การคุ้มครองตามกฎหมาย

Vector Space Model

The most common way to represent documents is to convert them into numeric vectors. This representation is called "Vector Space Model" (VSM). VSM is broadly used in various text mining algorithms and IR systems and enables efficient analysis of large collection of documents. In order to allow for more formal descriptions of the algorithms, we first define some terms and variables that will be frequently used in the following: Given a collection of documents [math]\displaystyle{ D = \{ d_1 ,d_2 , \dotsc ,d_D \} }[/math], let [math]\displaystyle{ V = \{ w_1 ,w_2 , \dotsc ,w_v \} }[/math] be the set of distinct words/terms in the collection. Then [math]\displaystyle{ V }[/math] is called the vocabulary. The frequency of the term [math]\displaystyle{ w ∈V }[/math] in document [math]\displaystyle{ d ∈D }[/math] is shown by [math]\displaystyle{ f_d(w) }[/math] and the number of documents having the word [math]\displaystyle{ w }[/math] is represented by [math]\displaystyle{ f_D(w) }[/math]. The term vector for document [math]\displaystyle{ d }[/math] is denoted by [math]\displaystyle{ \vec t_d\ = (f_d(w_1), f_d(w_2), \dotsc, f_d(w_v)) }[/math].

In VSM each word is represented by a variable having a numeric value indicating the weight (importance) of the word in the document. There are two main term weight models: 1)Boolean model : In this model a weight [math]\displaystyle{ w_{ij}\gt 0 }[/math] is assigned to each term [math]\displaystyle{ w_i ∈d_j }[/math]. For any term that does not appear in [math]\displaystyle{ d_j, w_{ij} = 0 }[/math]. 2)Term frequency-inverse document frequency (TF-IDF):The most popular term weighting schemes is TF-IDF. Let [math]\displaystyle{ q }[/math] be this term weighting scheme, then the weight of each word [math]\displaystyle{ w_i ∈d }[/math] is computed as follows:

[math]\displaystyle{ q(w) = f_d(w)*log\frac{\left\vert D \right\vert}{f_D(w)} }[/math]

where [math]\displaystyle{ \left\vert D \right\vert }[/math] is the number of documents in the collection [math]\displaystyle{ D }[/math].

In TF-IDF the term frequency is normalized by inverse document frequency, IDF. This normalization decreases theweight of the terms occurring more frequently in the document collection, making sure that the matching of documents be more effected by distinctive words which have relatively low frequencies in the collection.

Based on the term weighting scheme, each document is represented by a vector of term weights [math]\displaystyle{ w(d) = (w(d,w_1), w(d,w_2), \dotsc, w(d,w_v)) }[/math]. We can compute the similarity between two documents [math]\displaystyle{ d_1 }[/math] and [math]\displaystyle{ d_2 }[/math]. One of the most widely used similarity measures is cosine similarity and is computed as follows:

[math]\displaystyle{ S(d_1,d_2) = \cos \theta = \frac{\displaystyle d_1 · d_2}{{\displaystyle \sqrt {\sum_{i=1}^v w_{1i}^2}} · {\displaystyle \sqrt {\sum_{i=1}^v w_{2i}^2}}} }[/math]

Classification

Problem Statement

The problem of classification is defined as follows. We have a training set [math]\displaystyle{ D = {d_1,d_2, . . . ,d_n } }[/math] of documents, such that each document di is labeled with a label ℓi from the set [math]\displaystyle{ L = {ℓ_1, ℓ_2, . . . , ℓ_k } }[/math]. The task is to find a classification model (classifier) [math]\displaystyle{ f }[/math] where [math]\displaystyle{ f : D → L, f (d) = ℓ }[/math].

To evaluate the performance of the classification model, we set aside a test set. After training the classifier with training set, we classify the test set and the portion of correctly classified documents to the total number of documents is called accuracy.

The common evaluation metrics for text classification are precision, recall and F-1 scores. Charu C Aggarwal and others defines these metrics as follows:

Precision: The fraction of the correct instances among the identified positive instances.

Recall: The percentage of correct instances among all the positive instances.

F-1 score: The geometric mean of precision and recall. [math]\displaystyle{ F1 = 2 × \frac{precision × recall}{precision + recall} }[/math]

Naive Bayes Classifier

Definition: The Naive Bayes classifier is a simple yet widely used classifier. It makes assumptions about how the data (in our case words in documents) are generated and propose a probabilistic model based on these assumptions. Then use a set of training examples to estimate the parameters of the model. Bayes rule is used to classify new examples and select the class that is most likely has generated the example. There are two main models commonly used for naive Bayes classifications: Multi-variate Bernoulli Model and Multinomial Model. Both models try to find the posterior probability of a class, based on the distribution of the words in the document. However, Multinomial Model takes into account the frequency of the words whereas the Multi-variate Bernoulli Model does not.

Nearest Neighbor Classifier

The main idea is that documents which belong to the same class are more likely “similar” or close to each other based on the similarity measures. The classification of the test document is inferred from the class labels of the similar documents in the training set. If we consider the k-nearest neighbor in the training data set, the approach is called k-nearest neighbor classification and the most common class from these k neighbors is reported as the class label.

Decision Tree Classifiers

Decision tree is basically a hierarchical tree of the training instances, in which a condition on the attribute value is used to divide the data hierarchically.

In case of text data, the conditions on the decision tree nodes are commonly defined in terms of terms in the text documents. For instance a node may be subdivided to its children relying on the presence or absence of a particular term in the document.

Support Vector Machines

Support Vector Machines (SVM) are a form of Linear Classifiers. In the context of text documents, Linear Classifiers are models that make a classification decision based on the value of the linear combinations of the documents features. The output of a linear predictor is defined to be [math]\displaystyle{ y = \vec{a} \cdot \vec{x} + b }[/math], where [math]\displaystyle{ \vec{x} = (x1, x2, . . . , xn) }[/math] is the normalized document word frequency vector, [math]\displaystyle{ \vec{a} = (a1, a2, . . . , an) }[/math] is vector of coefficients and b is a scalar. We can interpret the predictor [math]\displaystyle{ y = \vec{a} \cdot \vec{x} + b }[/math] in the categorical class labels as a separating hyperplane between different classes.

One advantage of the SVM method is that, it is quite robust to high dimensionality, i.e. learning is almost independent of the dimensionality of the feature space. It rarely needs feature selection since it selects data points (support vectors) required for the classification. According to Joachims and others, text data is an ideal choice for SVM classification due to sparse high dimensional nature of the text with few irrelevant features. SVM methods have been widely used in many application domains such as pattern recognition, face detection and spam filtering.

Clustering

Clustering is the task of grouping objects in a collection using a similarity function. Clustering in text mining can be in different levels such as documents, paragraphs, sentences or terms. Clustering helps enhance retrieval and browsing and is applied in many fields including classification, visualization and document organization. For example, clustering can be used to produce a table of contents or to construct a context-based retrieval systems. Various software programs such as Lemur and BOW have implementations of common clustering algorithms.

A simple algorithm of clustering is representing text documents as binary vectors using the presence or absence of words. However, algorithms like this are insufficient for text representing due to documents having the following unique properties: Text representation usually has a large dimensionality but sparse underlying data; Word correlation in each text piece is generally very strong and thus should be taken into account in the clustering algorithm; Normalizing should also be included in the clustering step since documents are often of very different sizes.

Common algorithms of clustering include:

Hierarchical Clustering algorithms

Top-down(divisive) hierarchical clustering begins with one cluster and recursively split it into sub-clusters. Bottom-up(agglomerative) hierarchical starts with each data point as a single cluster and successively merge clusters until all data points are in a single cluster.

K-means Clustering

Given a document set D and a similarity measure S, first select k clusters and randomly select their centroids, then recursively assign documents to clusters with the closest centroids and recalculate the centroids by taking the mean of all documents in each cluster.

Probabilistic Clustering and Topic Models

Topic modeling constructs a probabilistic generative model over text corpora. Latent Dirichlet Allocation is a state of the art unsupervised model that is a three-level hierarchical Bayesian model in which documents are represented as random mixtures over latent topics and each topic is characterized by a distribution over words.

Information Extraction

What is information extraction

Information extraction is the kind of NLP task where the information we are looking for are known beforehand

Two fundamental tasks

1. Named Entity Recognition(NER)

Locate and classify named entity in free text into predefined categories

2. Relation Extraction

Categorize the relation between two entities into one of the fixed relation types

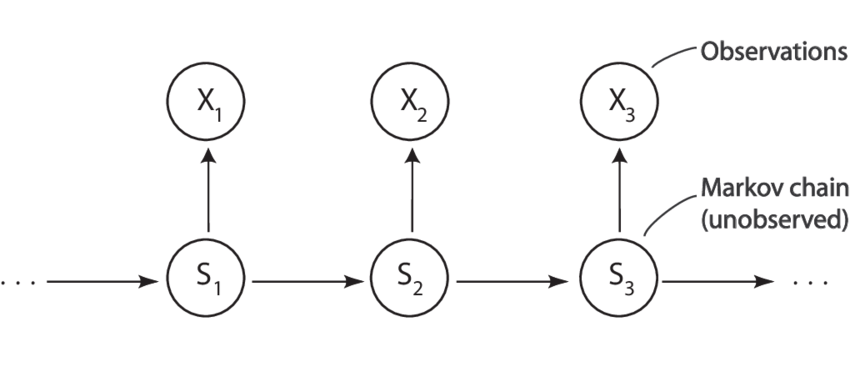

Hidden Markov Model for NER

Why HMM:

HMM consider the predicted labels of the neighboring words

Problems with Markov Model:

1. Hard to capture true state of the system

2. Noise exists in the real world problem

HIdden Markov Model setting

Observations depends on the states of the system, and the current state only depends on the last state

Parameters of HMM:

transition probability: T(i,j) = p(Zk+1 = j| Zk = i)

emission probability: E(x) = p(Xk = x | Zk = i)

initial distribution:π(i) = π(Z1 = i)

Text Mining in Biomedical Domain

The amount of biomedical literature is growing dramatically, which disturbs biomedical scientists with following up new discoveries and dealing with biomedical experimental data. For example, there is thousands of millions of academic terms in biomedical domain, and recognizing these long terms and relating them to real entity are necessary when considering using machine-learning method to facilitate biomedical researches. Therefore, applications of text mining in biomedical domain become inevitable. Recently, text mining methods have been utilized in a variety of biomedical domains such as protein structure prediction, gene clustering, biomedical hypothesis and clinical diagnosis, to name a few.

Information Extraction, aforementioned, is a useful preprocessing step to extract structured information from scientific articles in biomedical literature. For instance, Named-Entity Recognition(NER) often used to link entities to formal terms in biomedical domain. Also, Relation Extraction makes it possible for several entities to set complex but clear relationships with each other. In particular, NER methods are usually grouped into several approaches: dictionary-based approach, rule-based approach and statistical approaches.

Summarization is a common biomedical text mining task using information extraction method. It aims at identifying the significant aspects of one or more documents and represent them in a coherent fashion automatically. In addition, question answering is another biomedical text mining task where significantly exploits information extraction methods. It is defined as the process of producing accurate answers to questions posed by humans in a natural language.

Conclusion

The paper gave a great overall introduction into the vast field of text mining. Each method has its own tradeoffs between effectiveness and efficiency, and choosing a particular method very much depends on the textual data that is being dealt with. The paper also discussed some of the important domains and applications of text mining, particularly in the biomedical domain, including information extraction, summarizing and question answering. Information will continue to grow exponentially as data has become an integral part of our world. For scientists, having a growing amount of resources at their fingertips is very useful; however, the amount of papers being published online are growing significantly which makes it difficult for them to keep up. Text mining is also inevitably important for social media and data companies like Facebook and Google due to the large amounts of data that they possess. In addition, it is useful for other industries that deal with a lot of textual data, such as law and media.

References

- [5] Jonathan J. Webster, Chunyu Kit, Tokenization as the initial phase in NLP

- [6] Douglass R. Cutting, David R. Karger, Jan O. Pedersen, and John W. Tukey. 1992. Scatter/Gather: a cluster-based approach to browsing large document collections. In Proceedings of the 15th annual international ACM SIGIR conference on Research and development in information retrieval (SIGIR '92), Nicholas Belkin, Peter Ingwersen, and Annelise Mark Pejtersen (Eds.). ACM, New York, NY, USA, 318-329. DOI: https://doi.org/10.1145/133160.133214

- [7] Deerwester, S. , Dumais, S. T., Furnas, G. W., Landauer, T. K. and Harshman, R. (1990), Indexing by latent semantic analysis. J. Am. Soc. Inf. Sci., 41: 391-407. doi:10.1002/(SICI)1097-4571(199009)41:6<391::AID-ASI1>3.0.CO;2-9

- [8] Ronen Feldman and Ido Dagan. 1995. Knowledge discovery in Textual Databases (KDT). In Proceedings of the First International Conference on Knowledge Discovery and Data Mining (KDD'95), Usama Fayyad and Ramasamy Uthurusamy (Eds.). AAAI Press 112-117.

- [9] Allahyari, M., Pouriyeh, S., Assefi, M., Safaei, S., Trippe, E. D., Gutierrez, J. B., & Kochut, K. (2017). A brief survey of text mining: Classification, clustering and extraction techniques. arXiv preprint arXiv:1707.02919.