stat441w18/Saliency-based Sequential Image Attention with Multiset Prediction

Presented by

1. Yiqun Wang

2. Robert Huang

3. Yufeng Wang

4. Renato Ferreira

5. Being Fan

6. Xiaoni Lang

7. Xukun Liu

8. Handi Gao

Introduction

Current techniques achieve high performance in image classification. However, they often exhibit unexpected and unintuitive behaviour: minor perturbations can cause a complete misclassification. In addition, a classifier may label an image correctly while completely missing the object in question (for example, classifying an image containing a polar bear correctly because of the snowy setting).

To remedy this, we can either isolate the object and its surroundings and re-evaluate whether the classifier still performs adequately, or we can apply a saliency detection method to determine the focus of the classifier, and to understand how the classifier makes its decisions.

A commonly used method for saliency detection takes an image, then recursively removes sections of the image and evaluates the impact on the accuracy of the classification. The smallest region that causes the biggest impact on the classification score makes up our saliency map. However, this iterative method is computationally intensive and thus time-consuming.
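The cost of this kind of approach can be seen in a minimal sketch. It is a simplified, single-pass occlusion variant rather than the recursive procedure described above, and `toy_score` is a hypothetical stand-in for a real classifier's class score. Note that the classifier is called once per patch, which is what makes such methods slow on real images.

```python
import numpy as np

def occlusion_saliency(image, score_fn, patch=4):
    """Score drop caused by replacing each patch with the image's mean colour."""
    h, w = image.shape[:2]
    base = score_fn(image)
    fill = image.mean(axis=(0, 1))  # mean colour (a scalar for greyscale images)
    saliency = np.zeros((h, w))
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = fill
            # One classifier call per patch: a large drop marks a salient patch.
            saliency[top:top + patch, left:left + patch] = base - score_fn(occluded)
    return saliency

def toy_score(img):
    """Hypothetical classifier: the class score is the mean brightness of the centre."""
    return float(img[4:8, 4:8].mean())

image = np.zeros((12, 12))
image[4:8, 4:8] = 1.0  # bright object in the centre
sal = occlusion_saliency(image, toy_score, patch=4)
```

In this toy case only the central patch changes the score, so it is the only region with non-zero saliency.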

This paper proposes a new saliency detection method that uses a trained model to predict the saliency map in a single feed-forward pass. The resulting saliency detection is not only orders of magnitude faster; benchmarks against standard saliency detection methods also show that it produces higher-quality saliency masks and achieves better localization results.

Related Works

Numerous methods for saliency detection have been proposed since the rise of CNNs in 2012. One such method uses gradient calculations to find the region with the greatest gradient magnitude, under the assumption that such a region is a valid salient region. Others include guided back-propagation (Springenberg et al., 2014), which takes into account the error signal for gradient adjustment, and excitation back-propagation (Zhang et al., 2016), which uses non-negatively weighted connections to find meaningful saliency regions. However, these gradient-based methods, while fast, produce sub-par saliency maps that are difficult to interpret, adjust, or improve upon.

An alternative approach (Zhou et al., 2014) takes an image, then iteratively removes patches (setting their colour to the mean) such that the class score is preserved. This gives an excellent explanatory map that is easily human-interpretable. However, the iterative method is time-consuming and unsuited to real-time saliency applications.

Another technique (Cao et al., 2015) that has been proposed is to selectively ignore network activations, such that the resulting subset of the activations provides us with the highest class score. This method, again, uses an iterative process that is unsuited for real-time application.

Fong and Vedaldi (2017) propose a similar solution, but instead of preserving the class score, they aim to maximally reduce the class score by removing minimal sections of the image. In doing so, they obtain a saliency map that is easily human-interpretable and model-agnostic (on account of performing the optimization in image space and not on the model itself).

Image Saliency and Introduced Evidence

No single metric can measure the quality of a produced saliency map.

A saliency map is defined as a summarised explanation of where the classifier “looks” to make its prediction.

There are two saliency definitions:

1. Smallest sufficient region (SSR)

2. Smallest destroying region (SDR)

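The SSR is the smallest region of the image that, on its own, allows a confident classification. It can be small and hard for a human to recognize, yet still contain enough information for the classifier to reach about 90% confidence. The SDR is the smallest region that, when removed, prevents a confident classification; it is usually larger.

There are several ways to remove evidence from an image, such as setting the region to a constant colour, adding noise, or cropping it out completely. However, all of them have side effects: the edit itself can introduce new evidence and cause misclassification.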

Fighting the Introduced Evidence

Consider applying a mask M to an image X to obtain the edited image E.

[math]\displaystyle{ E = X \odot M }[/math]

This operation sets the colour of the masked-out parts of the image to 0.

However, the mask M can generate adversarial artifacts. Adversarial artifacts are very small and imperceptible to people, but they can ruin the classifier's prediction. This phenomenon should be avoided.

There are a few ways to make the introduction of artifacts harder. One is to fill the masked-out region with an alternative image rather than zeros, which reduces the amount of unwanted evidence:

[math]\displaystyle{ E = X \odot M + A \odot (1 - M) }[/math]

where A is an alternative image. A can be chosen as a blurred version of X, which makes it harder to generate high-frequency, high-evidence artifacts. However, blurring does not eliminate all of the existing evidence.

Another choice of A is a random colour with high-frequency noise. This makes E more unpredictable in regions where M is low.
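A minimal NumPy sketch of the blending formula, using a blurred copy of the image as A. The `box_blur` helper is an assumption made for illustration (a simple mean filter on a greyscale image standing in for whatever blur is actually used), and the toy image and mask are made up.

```python
import numpy as np

def box_blur(image, radius=1):
    """Mean filter with edge padding on a 2-D image; a crude stand-in for a real blur."""
    k = 2 * radius + 1
    padded = np.pad(image, radius, mode="edge")
    out = np.zeros_like(image, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + image.shape[0], dx:dx + image.shape[1]]
    return out / (k * k)

def apply_mask(x, m, a):
    """E = X * M + A * (1 - M), computed elementwise."""
    return x * m + a * (1.0 - m)

x = np.zeros((6, 6))
x[2:4, 2:4] = 1.0   # bright square on a dark background
m = np.zeros((6, 6))
m[:, :3] = 1.0      # keep the left half, replace the right half
e = apply_mask(x, m, box_blur(x))
```

Where the mask is 1 the original pixels survive unchanged; where it is 0 the pixels come from the blurred image, so part of the square's evidence is weakened rather than replaced by a hard black edge.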

Adversarial artifacts can still occur, however. It is therefore necessary to encourage smoothness of M via a total variation (TV) penalty. We can also generate a smaller mask and resize it to the required size, since resizing acts as a smoothing mechanism.
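To illustrate what a total variation penalty measures, here is a small sketch. It uses the sum of absolute differences between neighbouring mask values, which is one common form of TV; the exact form used in the paper may differ (for example, squared differences), so treat this as an assumption.

```python
import numpy as np

def total_variation(mask):
    """Sum of absolute differences between vertically and horizontally adjacent values."""
    vertical = np.abs(np.diff(mask, axis=0)).sum()
    horizontal = np.abs(np.diff(mask, axis=1)).sum()
    return float(vertical + horizontal)

smooth = np.zeros((8, 8))
smooth[:, 4:] = 1.0                         # one clean vertical edge
noisy = np.indices((8, 8)).sum(axis=0) % 2  # checkerboard of 0s and 1s

tv_smooth = total_variation(smooth)
tv_noisy = total_variation(noisy)
```

Both masks cover half of the image, but the checkerboard has a far higher TV, so a TV penalty steers the optimisation towards the smooth mask.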

A New Saliency Metric

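A new saliency measure is introduced in place of the traditional measures. Specifically, the metric is defined as the difference between the log of the area of the rectangular crop and the log of the probability that the classifier assigns to the correct class when given that crop, i.e.

[math]\displaystyle{ s(a,p) = \log(\tilde{a}) - \log(p) }[/math]

where a is the area of the crop as a fraction of the image, p is the probability of the correct class, and [math]\displaystyle{ \tilde{a} }[/math] is a with a lower bound applied (the paper uses [math]\displaystyle{ \tilde{a} = \max(a, 0.05) }[/math]). This metric is a direct translation of the SSR objective: a low value means that a small crop is enough for a confident, correct classification.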
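The metric is simple enough to compute directly. The sketch below assumes the area is given as a fraction of the whole image and clamps it from below at 0.05, following the paper's definition of the clamped area; the function and parameter names are illustrative.

```python
import math

def saliency_metric(area_fraction, p_correct, min_area=0.05):
    """s(a, p) = log(max(a, min_area)) - log(p); lower is better."""
    a_tilde = max(area_fraction, min_area)
    return math.log(a_tilde) - math.log(p_correct)

tight_crop = saliency_metric(0.1, 0.9)   # small crop, confident prediction
whole_image = saliency_metric(1.0, 0.9)  # no cropping at all
tiny_crop = saliency_metric(0.01, 0.5)   # area is clamped to min_area
```

A tight crop that keeps the classifier confident scores lower (better) than the uncropped image, while the clamp prevents arbitrarily small crops from being rewarded without limit.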

The Saliency Objective

With the previous conditions defined, the saliency objective is to find a mask M that is smooth and performs well on both SSR and SDR. The authors derive an objective function that enforces mask smoothness, encourages a small salient region, and separates the probability of correct prediction on the masked image from that on the leftover part.

Masking Model

The masking model aims to transform an image, together with a class selector, into a mask by optimising the objective function. It depends on three main elements: the masking objective function, a black-box classification model, and the architecture of the masking model itself.

The objective function is a trade-off that tries to achieve, at the same time: lower total variation of the mask (so as to prevent brittle, high-frequency masks); lower average mask value (so as to make the mask small); higher output class probability (the masked image should be highly relevant); and lower “inverse-masked” output probability (the dropped part should not be very relevant). However, finding a mask for each image by direct optimisation takes too many iterations and tends to overfit. A trainable masking model is therefore developed to produce the masks.
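The four-way trade-off can be sketched as a single scalar function. This is an illustration under stated assumptions, not the paper's exact formula: the weights `w_tv`, `w_area` and `w_inverse` are hypothetical, TV is taken as a sum of absolute differences, and the two probabilities are passed in as numbers rather than computed by a real classifier.

```python
import numpy as np

def masking_objective(mask, p_masked, p_inverse,
                      w_tv=0.01, w_area=1.0, w_inverse=1.0):
    """Lower is better. p_masked and p_inverse are the class probabilities for the
    masked image and the inverse-masked image; p_masked must be > 0."""
    tv = np.abs(np.diff(mask, axis=0)).sum() + np.abs(np.diff(mask, axis=1)).sum()
    area = mask.mean()
    return float(w_tv * tv            # smooth mask
                 + w_area * area      # small mask
                 - np.log(p_masked)   # masked image still classified correctly
                 + w_inverse * p_inverse)  # leftover part carries little evidence

small = np.zeros((8, 8))
small[2:5, 2:5] = 1.0

good = masking_objective(small, p_masked=0.9, p_inverse=0.05)
whole = masking_objective(np.ones((8, 8)), p_masked=0.95, p_inverse=0.01)
missed = masking_objective(small, p_masked=0.1, p_inverse=0.9)
```

With these made-up numbers, a small mask that covers the object scores best, keeping the whole image is penalised by the area term, and a small mask that misses the object is penalised most heavily by the two probability terms.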

Any classification model can be used as the black-box element; examples are GoogleNet and ResNet. This model is used to judge how good the masking model is in selecting the salient region of images.

A masking model has the following architecture. First, to speed up training and prediction, a downsampling stage built on a pre-trained model takes the pixel representation of an image through progressively coarser feature representations, halving the resolution at each step. The coarsest feature representation is then given to the feature filter, which applies a sigmoid nonlinearity to decide, for each coarse-grained position, whether to keep or drop it for the selected class. Note that the feature filter has access to the true label, so it can select the relevant portions of the image. Next, the filtered low-resolution features are upsampled in steps of a factor of two using transposed convolutions, together with the features captured in the downsampling stage, to fine-tune the saliency map (e.g., to fit edges and other details of the image). Finally, a convolution is applied to the resulting feature map to produce the output mask of the masking model.
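The feature filter step can be sketched as a per-position gate. The sketch assumes that the gate is the sigmoid of a dot product between each position's feature vector and a class embedding vector; that particular form, the tiny feature map, and the nearest-neighbour upsampling (standing in for the learned transposed convolutions) are assumptions made for illustration only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def feature_filter(features, class_embedding):
    """features: (H, W, C); class_embedding: (C,).
    Returns the gated features and the (H, W) gate with values in (0, 1)."""
    gate = sigmoid(features @ class_embedding)
    return features * gate[..., None], gate

def upsample2x(gate):
    """Nearest-neighbour upsampling by a factor of two in each dimension."""
    return np.repeat(np.repeat(gate, 2, axis=0), 2, axis=1)

features = np.zeros((2, 2, 3))
features[0, 0] = [4.0, 0.0, 0.0]   # position that matches the selected class
features[1, 1] = [-4.0, 0.0, 0.0]  # position that contradicts it
class_embedding = np.array([1.0, 0.0, 0.0])

filtered, gate = feature_filter(features, class_embedding)
coarse_mask = upsample2x(gate)
```

Positions whose features agree with the class embedding get a gate close to 1 and are kept; positions that disagree are suppressed before upsampling.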

When training the model, the objective function needs to be minimized. The black-box model, which is pre-trained, provides the classification probabilities, and the output mask is learned by the masking model. This ensures that a small and simple mask which selects the salient regions for classification is learned.

To help the model fit properly, two refinements are important. First, the model sometimes focuses on the dominant object in the image even when it is given a “fake”, incorrect label. The way to solve this is to penalize any selection made under a wrong label, so that the only way to reduce the objective function is to drop the entire image. This encourages the model to select a salient region only when it sees the true label. For example, it should not select a dog from the image if the label is “cat”. Second, to prevent the model from making assumptions about the image, the alternative image is chosen at random to be either a blurred version of the image or a random colour with Gaussian noise. This ensures that the image given to the classifier is “unpredictable” outside the masked region, reducing the possibility of adversarial issues and improving the quality of the saliency mask.
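The random choice of fill for the masked-out region might look like the sketch below. The 50/50 split, the noise level, and the crude neighbour-averaging blur (which wraps around the image edges) are all assumptions made for illustration; only the idea of randomly picking between a blurred image and a noisy random colour comes from the text above.

```python
import numpy as np

def sample_alternative(image, rng, noise_std=0.1):
    """image: (H, W, 3) floats in [0, 1]. Returns an alternative image of the same shape."""
    if rng.random() < 0.5:
        # Crude blur: average each pixel with its four neighbours (wraps at the edges).
        return (image
                + np.roll(image, 1, axis=0) + np.roll(image, -1, axis=0)
                + np.roll(image, 1, axis=1) + np.roll(image, -1, axis=1)) / 5.0
    # Otherwise: one random colour for the whole image plus per-pixel Gaussian noise.
    colour = rng.random(3)
    noise = rng.normal(0.0, noise_std, size=image.shape)
    return np.clip(colour + noise, 0.0, 1.0)

rng = np.random.default_rng(0)
image = rng.random((8, 8, 3))
alternatives = [sample_alternative(image, rng) for _ in range(10)]
```

Because the fill changes from one training step to the next, the masking model cannot rely on any fixed pattern appearing outside the mask.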

Experiments

The experiments compare the masks generated by the masking model, trained using GoogleNet as the black-box classifier, with those of other existing methods. Three evaluation measurements are used to assess them.

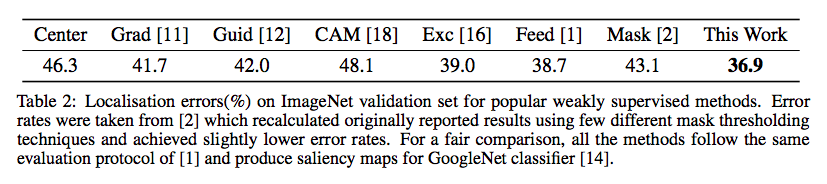

Weakly Supervised Object Localisation Error

Object localisation accuracy is a standard way to evaluate produced saliency maps. The table below shows the localisation errors on the ImageNet validation set for different weakly supervised methods, including the one proposed in this paper. Note that, for comparison purposes, all the saliency maps are produced for the GoogleNet classifier.

It can be seen that this model outperforms the other approaches. It also performs significantly better than the baseline (a centrally placed box) and than iteratively optimised saliency masks.
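To make the localisation measurement concrete, here is a minimal sketch of how a saliency mask can be turned into a bounding box and compared with a ground-truth box. The 0.5 mask threshold and the toy boxes are assumptions; the intersection-over-union (IoU) test with a 0.5 cut-off is the usual ImageNet localisation criterion.

```python
import numpy as np

def mask_to_box(mask, threshold=0.5):
    """Tightest box (x0, y0, x1, y1) around mask values above the threshold, or None."""
    ys, xs = np.nonzero(mask > threshold)
    if ys.size == 0:
        return None
    return (int(xs.min()), int(ys.min()), int(xs.max()) + 1, int(ys.max()) + 1)

def iou(a, b):
    """Intersection over union of two (x0, y0, x1, y1) boxes."""
    iw = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

mask = np.zeros((10, 10))
mask[2:6, 3:8] = 0.9             # salient region found by the masking model
predicted = mask_to_box(mask)
ground_truth = (3, 2, 9, 6)      # hypothetical annotated box
overlap = iou(predicted, ground_truth)
localised = overlap >= 0.5       # counted as a correct localisation
```

The localisation error reported in such a table is then the fraction of validation images for which this check fails.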

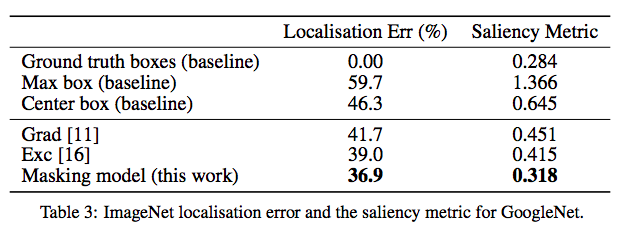

Saliency Metric

The authors also evaluate the methods with the saliency metric introduced in this paper; the results are shown in the following table.

The masking model achieves a considerably better saliency metric than the other saliency approaches. In particular, it significantly outperforms the max box and center box baselines. The masking model is also very close to the ground-truth boxes, which supports the claim that the localisation boxes it generates are about as interpretable as the ground-truth boxes.

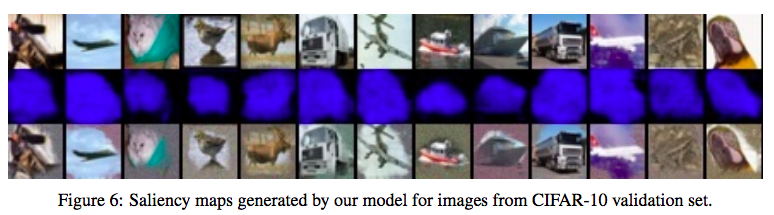

Detecting Saliency of CIFAR-10

Finally, the authors test their model on CIFAR-10, a completely different dataset. The purpose of this experiment is to confirm the performance of the model on low-resolution images. To accommodate this change, they use a FitNet that they trained to 92% validation accuracy (so it is not an off-the-shelf pre-trained model) as the black-box classifier for training the masking model, and they modify the architecture slightly. The saliency maps generated by the masking model are shown here.

In the produced maps, the original objects are still recognizable, which confirms that the masking model works even at low resolution and can be applied to a model that was not pre-trained (FitNet).

Conclusion

This work presents a fast and accurate saliency detection model that can be applied to any black-box image classifier to produce its saliency masks. The model outperforms other weakly supervised techniques on the ImageNet localization task and, under the new saliency metric developed in the paper, is on par with ground-truth bounding boxes. The technique can be extended and improved by changing the masking network or the objective function to achieve desired properties of the output. It could also be applied to video, since the model is able to produce 100 saliency masks per second and can therefore be used for real-time problems.