STAT946F17/ Learning a Probabilistic Latent Space of Object Shapes via 3D GAN

No edit summary |

No edit summary |

||

| Line 8: | Line 8: | ||

= Methodology = | = Methodology = | ||

Let us first review GANs... | Let us first review Generative Adversarial Networks (GANs). As proposed in Goodfellow et al. [2014], GANs consist of a generator and a discriminator, where the discriminator tries to classify real objects and objects synthesized by the generator, while the generator attempts to confuse the discriminator. With proper guidance, this adversarial game will result in a generator the is able to synthesize fake samples very similar to the real training samples that the discriminator cannot distinguish. | ||

=== 3D-GANs === | === 3D-GANs === | ||

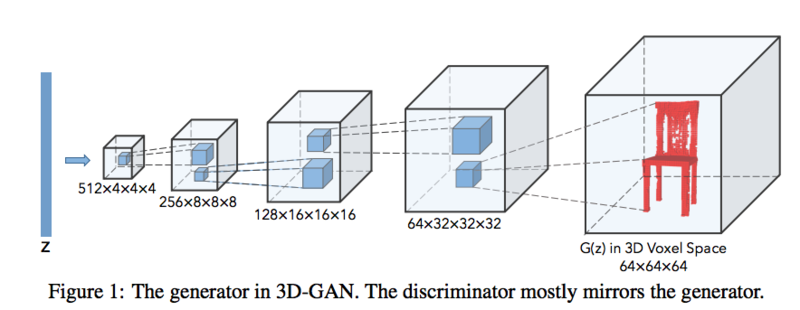

3D-GANs are a simple extension of GANs for 2D imagery. Here, the model is composed of a | In this paper, 3D Generative Adversarial Networks (3D-GANs) are presented as a simple extension of GANs for 2D imagery. Here, the model is composed of i) a generator (G) which maps a 200-dimensional latent vector z, randomly sampled from a probabilistic latent space (Uniform [0,1]), to a $64 \times 64 \times 64$ cube, representing the object $G(z)$ in voxel space, and ii) a discriminator (D) which outputs a confidence value $D(x)$ of whether a 3D object input x input is real or synthetic. Following Goodfellow et al. [2014], the classification loss used at the end of the discriminator is binary cross-entropy as | ||

$L_{3D-GAN} = \log D(x) + \log( 1 - D( G(z) ) )$ | |||

% \[ | |||

% L_{3D-GAN} = \log D(x) + \log( 1 - D( G(z) ) ) | |||

% \] | |||

% \begin{align*} | |||

% L_{3D-GAN} = \log D(x) + \log( 1 - D( G(z) ) ) | |||

% \end{align*} | |||

=== 3D-VAE-GANs === | === 3D-VAE-GANs === | ||

| Line 25: | Line 32: | ||

===== Generator ===== | ===== Generator ===== | ||

[[File: | [[File:amirhk_network_arch.png|800px]] | ||

===== Discriminator ===== | ===== Discriminator ===== | ||

Revision as of 15:25, 17 October 2017

Introduction

Related Work

Existing methods

- Borrow parts from objects in existing CAD model libraries → realistic but not novel

- Learn deep object representations from voxelized objects → fail to capture highly structured differences between 3D objects

- Mostly trained with a supervised criterion

Methodology

Let us first review Generative Adversarial Networks (GANs). As proposed in Goodfellow et al. [2014], a GAN consists of a generator and a discriminator: the discriminator tries to distinguish real objects from objects synthesized by the generator, while the generator attempts to fool the discriminator. When training is well balanced, this adversarial game yields a generator that synthesizes samples so similar to the real training samples that the discriminator cannot tell them apart.

3D-GANs

In this paper, 3D Generative Adversarial Networks (3D-GANs) are presented as a simple extension of 2D image GANs to 3D. The model is composed of i) a generator (G), which maps a 200-dimensional latent vector z, randomly sampled from a probabilistic latent space (Uniform[0, 1]), to a $64 \times 64 \times 64$ cube representing the object $G(z)$ in voxel space, and ii) a discriminator (D), which outputs a confidence value $D(x)$ of whether a 3D object input x is real or synthetic. Following Goodfellow et al. [2014], the discriminator is trained with a binary cross-entropy classification loss, giving the objective

$L_{3D-GAN} = \log D(x) + \log( 1 - D( G(z) ) )$
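As a minimal sketch (plain Python, assuming D's outputs are already available as probabilities in (0, 1)), the objective above can be averaged over a batch and checked against the binary cross-entropy it comes from. D maximizes the objective, which is the same as minimizing its BCE with label 1 for real inputs and 0 for synthetic ones. The function names are hypothetical.

```python
import math


def gan_objective(d_real, d_fake, eps=1e-12):
    """Batch-averaged log D(x) + log(1 - D(G(z))).

    d_real: confidences D(x) for real voxel grids, each in (0, 1).
    d_fake: confidences D(G(z)) for generated voxel grids, each in (0, 1).
    eps guards against log(0).
    """
    real_term = sum(math.log(max(p, eps)) for p in d_real) / len(d_real)
    fake_term = sum(math.log(max(1.0 - p, eps)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def bce(p, label, eps=1e-12):
    """Binary cross-entropy of one prediction p against a 0/1 label."""
    p = min(max(p, eps), 1.0 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


def discriminator_bce(d_real, d_fake):
    """D's BCE loss (real -> 1, fake -> 0); equals -gan_objective for p in (0, 1)."""
    loss_real = sum(bce(p, 1) for p in d_real) / len(d_real)
    loss_fake = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return loss_real + loss_fake
```

A perfectly confused discriminator (all outputs 0.5) gives $2 \log 0.5$, while a confident, correct one pushes the objective toward its maximum of 0; the generator is trained to move it the other way by pushing $D(G(z))$ toward 1.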


3D-VAE-GANs

An extension of ...

Training and Results

Network Architecture

Generator
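The 3D-GANs section states that G maps a 200-dimensional z to a $64 \times 64 \times 64$ voxel cube. One way a stack of 3D transposed convolutions could reach that size is sketched below; the per-axis output size uses $(n-1)s - 2p + k$, the formula PyTorch documents for `ConvTranspose3d` with dilation 1 and no output padding. The layer list, channel widths, strides and paddings are hypothetical choices for illustration, not a reproduction of the paper's code.

```python
LATENT_DIM = 200

# Hypothetical layers: (out_channels, kernel, stride, padding).
# Strides/paddings are assumptions chosen so a 1x1x1 input grows to 64x64x64.
GENERATOR_LAYERS = [
    (512, 4, 1, 0),
    (256, 4, 2, 1),
    (128, 4, 2, 1),
    (64, 4, 2, 1),
    (1, 4, 2, 1),
]


def conv_transpose_out(size, kernel, stride, padding):
    """Output length of a transposed convolution along one spatial axis."""
    return (size - 1) * stride - 2 * padding + kernel


def generator_shapes(latent_dim=LATENT_DIM, layers=GENERATOR_LAYERS):
    """(channels, depth, height, width) of the input and of each layer's output.

    z is treated as a latent_dim-channel 1x1x1 volume.
    """
    size = 1
    shapes = [(latent_dim, size, size, size)]
    for out_channels, k, s, p in layers:
        size = conv_transpose_out(size, k, s, p)
        shapes.append((out_channels, size, size, size))
    return shapes
```

Under these assumptions the spatial size goes 1 → 4 → 8 → 16 → 32 → 64, ending in a single-channel $64 \times 64 \times 64$ occupancy grid.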

Discriminator

Encoder

Coupled Generator-Discriminator Training

Training GANs is tricky because, in practice, learning to generate objects is more difficult than learning to distinguish between real and fake samples; in other words, training the generator is harder than training the discriminator. Intuitively, once the discriminator is far ahead, the generator has difficulty extracting a useful signal for improvement, because every example it generates is correctly identified as synthetic with high confidence. This problem is compounded for generated 3D objects (compared to 2D images) due to their higher dimensionality. There exist different strategies to overcome this challenge, some of which we saw in class:

- One discriminator (D) update for every N generator (G) updates

- Capped gradient updates for the discriminator, where the gradient propagated back through D is limited to a maximum magnitude, essentially capping how fast D can learn
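The two strategies above can be sketched in plain Python. The helper names are hypothetical, and "capping" is implemented here as L2-norm gradient clipping, which is one common interpretation; clipping each gradient element to a fixed range would be another.

```python
import math


def d_update_steps(total_steps, n):
    """Steps at which D is updated when it gets one update per n G updates.

    G is assumed to be updated at every step.
    """
    return [step for step in range(total_steps) if step % n == 0]


def clip_by_norm(grad, max_norm):
    """Rescale a gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm or norm == 0.0:
        return list(grad)
    scale = max_norm / norm
    return [g * scale for g in grad]
```

With norm clipping the gradient's direction is preserved and only its length is bounded, so D still moves toward lower loss, just more slowly when its gradients are large.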

The approach used in this paper is interesting in that it adaptively decides whether to update the discriminator at all: for each batch, D is only updated if its accuracy on the last batch was at most 80%. Additionally, the generator learning rate is set to $2.5 \times 10^{-3}$, whereas the discriminator learning rate is set to $10^{-5}$, which further slows the discriminator's training relative to the generator's.
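A minimal sketch of the adaptive rule, assuming D's accuracy on a batch is measured by thresholding its confidence at 0.5 (real if $D(x) > 0.5$) over both the real and the generated samples. The helper names, and the choice to always update D on the very first batch (which has no previous accuracy), are assumptions.

```python
def batch_accuracy(d_real, d_fake, threshold=0.5):
    """Fraction of the batch D classifies correctly (real iff D(x) > threshold)."""
    correct = sum(1 for p in d_real if p > threshold)
    correct += sum(1 for p in d_fake if p <= threshold)
    return correct / (len(d_real) + len(d_fake))


def should_update_discriminator(last_accuracy, cap=0.8):
    """Update D only if its accuracy on the previous batch was at most cap."""
    return last_accuracy <= cap


def schedule(accuracies, cap=0.8):
    """Per-batch update decisions, given D's accuracy on each batch in order.

    The decision for batch i uses the accuracy measured on batch i - 1;
    batch 0 has no history, so D is updated (an assumption).
    """
    decisions = [True]
    for acc in accuracies[:-1]:
        decisions.append(should_update_discriminator(acc, cap))
    return decisions
```

For example, accuracies of 0.6, 0.9, 0.8 and 0.95 on four consecutive batches lead to D being skipped only on the third batch. Separately, the stated learning rates make G's step size 250 times D's.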

Evaluation

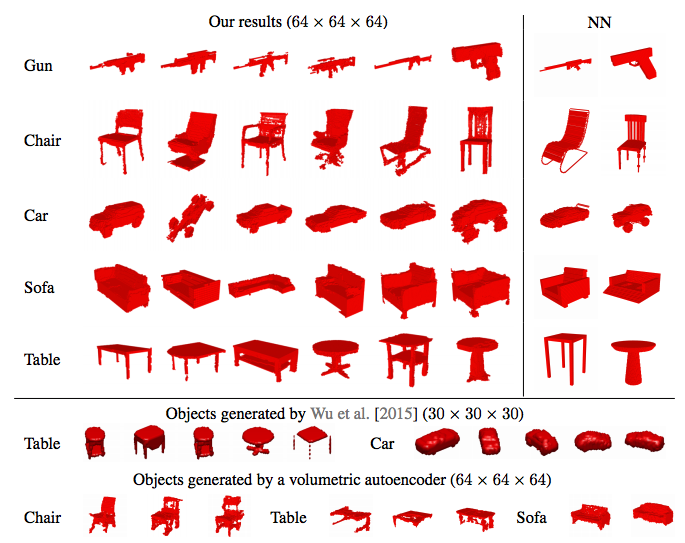

Qualitative results for generated 3D objects

For generation:

- Sample a 200-dimensional latent vector z with i.i.d. entries drawn from Uniform[0, 1]

- Render only the largest connected component of the generated voxel grid
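The two generation steps can be sketched in plain Python. Binarizing G's output at 0.5 and defining connected components by 6-connectivity (voxels sharing a face) are assumptions for illustration; the function names are hypothetical.

```python
import random
from collections import deque

LATENT_DIM = 200
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def sample_latent(rng, dim=LATENT_DIM):
    """i.i.d. uniform entries (random.random() draws from [0, 1))."""
    return [rng.random() for _ in range(dim)]


def occupied_voxels(grid, threshold=0.5):
    """Coordinates (i, j, k) of voxels above threshold in a nested D x H x W list."""
    return {(i, j, k)
            for i, plane in enumerate(grid)
            for j, row in enumerate(plane)
            for k, v in enumerate(row) if v > threshold}


def largest_connected_component(occupied):
    """Largest 6-connected component of a set of voxel coordinates (BFS)."""
    remaining = set(occupied)
    best = set()
    while remaining:
        start = remaining.pop()
        component = {start}
        queue = deque([start])
        while queue:
            i, j, k = queue.popleft()
            for di, dj, dk in NEIGHBOURS:
                nb = (i + di, j + dj, k + dk)
                if nb in remaining:
                    remaining.remove(nb)
                    component.add(nb)
                    queue.append(nb)
        if len(component) > len(best):
            best = component
    return best
```

In use, `sample_latent` would feed G, and the voxels returned by `largest_connected_component(occupied_voxels(G(z)))` would be the ones rendered, discarding small floating fragments.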

Compared against:

- 3D object synthesis from a probabilistic space [Wu et al., 2015]

- Volumetric auto-encoders (since their latent space is not restricted to a known prior, a Gaussian is fitted to the empirical distribution of their latent codes before sampling)

Observations:

- 3D-GAN is able to synthesize high-resolution 3D objects with detailed geometries

- Generated objects are similar, but not identical, to training samples → the model is not simply memorizing the training set
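For the volumetric auto-encoder baseline, fitting a Gaussian to the empirical latent distribution yields a prior that can be sampled, much like 3D-GAN's uniform one. A minimal sketch, assuming a diagonal covariance (a full covariance would also capture correlations between latent dimensions); the function names are hypothetical.

```python
import random
import statistics


def fit_diagonal_gaussian(codes):
    """Per-dimension mean and population std of a list of latent codes."""
    dims = list(zip(*codes))
    means = [statistics.fmean(d) for d in dims]
    stds = [statistics.pstdev(d) for d in dims]
    return means, stds


def sample_code(means, stds, rng):
    """Draw one latent code from the fitted diagonal Gaussian."""
    return [rng.gauss(m, s) for m, s in zip(means, stds)]
```

Sampled codes would then be passed through the auto-encoder's decoder to synthesize shapes for comparison.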

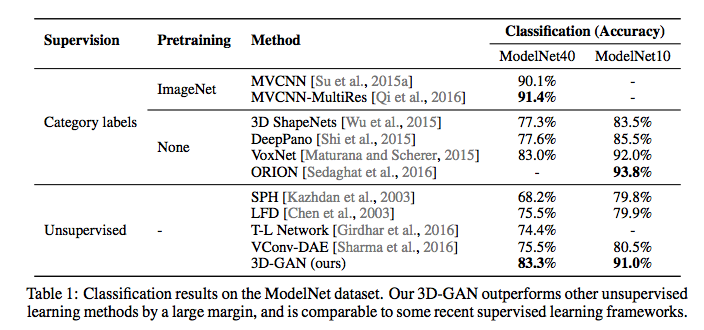

Classification performance of learned representations w/o supervision

A typical way to evaluate representations learned without supervision is to use them as features for classification:

- Input: a 3D object

- Output: a feature vector, obtained by applying max-pooling with kernel sizes {8, 4, 2} to the responses of the 2nd, 3rd, and 4th convolutional layers of the discriminator, respectively, and concatenating the results

- Classifier: linear SVM

Training data: ShapeNet; test data: ModelNet10 and ModelNet40.
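To make the feature construction concrete, the sketch below computes the feature length and shows non-overlapping 3D max-pooling on one channel. The layer shapes assume a discriminator that mirrors a $64 \times 64 \times 64$ generator, so conv layers 2 to 4 produce 128 maps of $16^3$, 256 maps of $8^3$ and 512 maps of $4^3$; these shapes are assumptions, not values stated in this summary. Under them, every pooled map is $2 \times 2 \times 2$.

```python
# Assumed (channels, spatial size) of discriminator conv layers 2-4.
ASSUMED_LAYER_SHAPES = {2: (128, 16), 3: (256, 8), 4: (512, 4)}
POOL_SIZES = {2: 8, 3: 4, 4: 2}


def pooled_feature_length(layer_shapes=ASSUMED_LAYER_SHAPES, pool_sizes=POOL_SIZES):
    """Length of the concatenated feature vector after per-layer max-pooling."""
    total = 0
    for layer, (channels, size) in layer_shapes.items():
        out = size // pool_sizes[layer]  # non-overlapping pooling: stride = kernel
        total += channels * out ** 3
    return total


def max_pool3d(volume, k):
    """Non-overlapping k x k x k max-pooling of one cubic channel (nested lists)."""
    n = len(volume) // k
    return [[[max(volume[a * k + i][b * k + j][c * k + m]
                  for i in range(k) for j in range(k) for m in range(k))
              for c in range(n)]
             for b in range(n)]
            for a in range(n)]


def concat_features(pooled_volumes):
    """Flatten pooled channel volumes (across channels and layers) into one vector."""
    features = []
    for vol in pooled_volumes:
        features.extend(v for plane in vol for row in plane for v in row)
    return features
```

Under these assumptions the concatenated vector has 7168 entries (1024 + 2048 + 4096), which would then be passed to the linear SVM (not shown).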

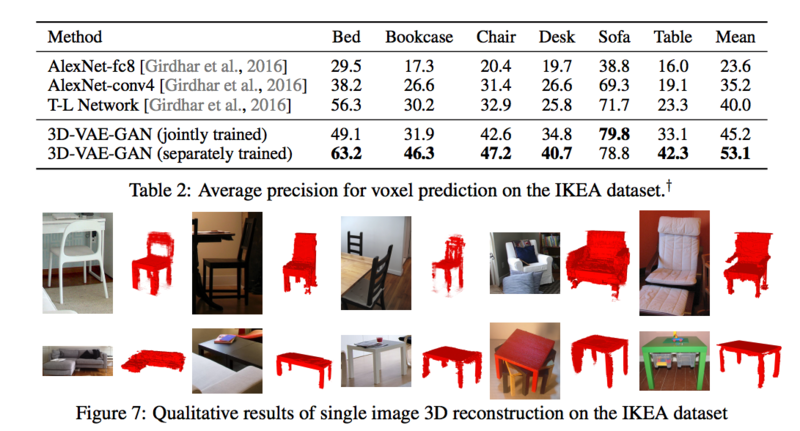

3D object reconstruction from a single image

Following previous work [Girdhar et al., 2016], the performance of 3D-VAE-GAN was evaluated on the IKEA dataset:

- 1039 objects centre-cropped from 759 images (supplied by the author)

- Images captured in the wild, often with cluttered backgrounds and occlusions

- 6 categories: bed, bookcase, chair, desk, sofa, table

Performance was reported for:

- A single 3D-VAE-GAN trained on all 6 categories

- Multiple 3D-VAE-GANs, each trained on 1 category

Each prediction is aligned with the ground truth over permutations, flips, and translations of up to 10%.
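The alignment step can be sketched as a search for the best intersection-over-union (IoU) between a predicted and a ground-truth voxel set. This assumes that "permutations" means permutations of the three grid axes, that flips mirror individual axes, and that a 10% translation means integer shifts of up to 10% of the grid size along each axis; using IoU as the score and all function names are assumptions for illustration.

```python
from itertools import permutations, product


def iou(a, b):
    """Intersection over union of two sets of occupied voxel coordinates."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def transform(voxels, perm, flips, shift, n):
    """Permute axes, flip selected axes, translate; drop voxels leaving the n^3 grid."""
    out = set()
    for v in voxels:
        p = [v[perm[0]], v[perm[1]], v[perm[2]]]
        p = [n - 1 - x if f else x for x, f in zip(p, flips)]
        p = [x + s for x, s in zip(p, shift)]
        if all(0 <= x < n for x in p):
            out.add(tuple(p))
    return out


def best_aligned_iou(pred, gt, n, max_shift_frac=0.1):
    """Max IoU over 6 axis permutations, 8 flip patterns and small shifts."""
    r = int(max_shift_frac * n)
    shifts = range(-r, r + 1)
    best = 0.0
    for perm in permutations(range(3)):
        for flips in product([False, True], repeat=3):
            for shift in product(shifts, repeat=3):
                best = max(best, iou(transform(pred, perm, flips, shift, n), gt))
    return best
```

The exhaustive search is simple but slow for $64^3$ grids (48 axis configurations times $13^3$ shifts); it is meant only to make the alignment protocol explicit.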

Future Work and Open Questions
