STAT946F17/ Dance Dance Convolution: Difference between revisions

| Line 70: | Line 70: | ||

* LSTM | * LSTM | ||

$i_t = σ(W_i·[h_{t−1},x_t]+b_i)$ | $i_t = σ(W_i·[h_{t−1},x_t]+b_i)$ | ||

$f_t = σ(W_f·[h_{t−1},x_t]+b_f)$ | |||

$f_t = σ(W_f·[h_{t−1},x_t]+b_f)$ | |||

$q_t = tanh(W_q·[h_{t−1},x_t]+b_q)$ | $q_t = tanh(W_q·[h_{t−1},x_t]+b_q)$ | ||

$o_t = σ(W_o·[h_{t−1},x_t]+b_o)$ | $o_t = σ(W_o·[h_{t−1},x_t]+b_o)$ | ||

$c_t = f_t⊙c_{t−1}+i_t⊙q_t$ | $c_t = f_t⊙c_{t−1}+i_t⊙q_t$ | ||

$h_t = o_t⊙tanh(c_t)$ | $h_t = o_t⊙tanh(c_t)$ | ||

Revision as of 23:03, 23 November 2017

Introduction

- Dance Dance Revolution (DDR)

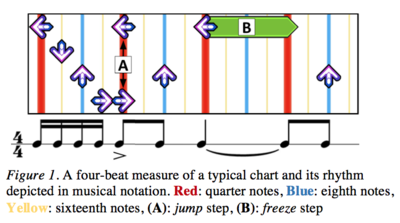

Dance Dance Revolution (DDR) is a rhythm-based video game. Players perform steps on a dance platform in synchronization with music as directed by on-screen step charts. The dance pad contains up, down, left, and right arrows, each of which can be in one of four states: on, off, hold, or release. There are $4^4 = 256$ possible step combinations at any instant since the four arrows can be in any of the four states independently. The terms jump: hitting two or more arrows at once; freeze: holding on one arrow for some duration. (Figure 1 (A) & (B))

Step charts exhibit complex semantics and tend to mirror musical structure: particular sequences of steps correspond to different motifs and reoccur as sections of the song are repeated. (Figure 1) The DDR community uses simulators, such as the opensource StepMania, which allow fans to create their own charts. Typically, for each song, packs containing one chart for five difficulty levels are created.

- Learning to Choreograph

While players may grow tired of existing charts in standardized packs or creating charts can be really time-consuming for players, the authors introduce the task of learning to choreograph, which learns to produce new step charts given raw audio tracks. This task has been approached via ad-hoc method, it is the first time to be casted as a learning task to mimic the semantics of human-generated charts. The learning task is broke into two subtasks: First, step placement task decides when to place steps conditioning on various diffculty levels. Second, step selection task decides which steps to select at each timestamp.

- Music Information Retrieval (MIR)

Music information retrieval (MIR) may be involved in the progress on learning to choreograph. 1) step placement task closely resembles onset detection, a well-studied MIR problem. Onset detection identifies the times of all musically salient events, such as melody notes or drum strikes and each DDR step corresponds to an onset.

2) DDR packs specify a metronome click track for each song. For songs with changing tempos, the location of change and the new tempo are annotated. This click data could help for beat tracking and tempo detection.

- Contributions

1) Defining learning to choreograph, a new task with real-world usefulness and strong connections to fundamental problems in MIR.

2) Introducing two large, curated datasets for benchmarking DDR choreography algorithms.

3) Presenting an effective pipeline for learning to choreograph with deep neural networks.

Data

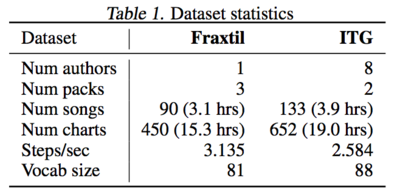

Basic statistics of the two datasets are shown in Table 1.

- Notes from Table 1:

1) Fraxtil: single author vs. In The Groove (ITG): multi-author

2) Each dataset contains five charts per song corresponding to increasing difficulty levels except that ITG dataset has 13 songs that lack charts for the highest difficulty.

3) The two datasets have similar vocabulary sizes (81 and 88 distinct step combinations, respectively).

4) The datasets contain around 35 hours of annotations and 350,000 steps considering all charts across all songs.

- Data Augmentation:

Four instances of each chart, by mirroring left/right, up/down (or both), are generated to augment the amount of data available for training.

- (beat, time, step) tuples:

To make the data easier to work with, the authors convert the useful data correspoding to the metadata to a canonical form consisting of (beat, time, step) tuples.

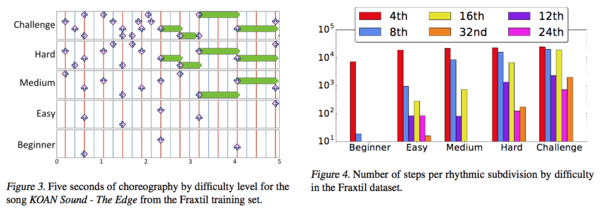

- Number of steps per rhythmic subdivision by difficulty (Figure4)

An increase in difficulty corresponds to increasing trend for steps to appear at finer rhythmic subdivisions.

Methods

Pipeline:

1) extract an audio feature representation

2) feed the representation into a step placement algorithm estimating probabilities that a step lies within that frame

3) use a peak-picking process on the sequence of probabilities to identify the timestamps at which to place steps

4) given a sequence of timestamps, use a step selection algorithm to choose which steps to place at each time. Note the approach to this problem involves modeling the probability distribution $\mathbb{P}(m_n|m_{n-1},...,m_1)$, where $m_{n}$ is the $n^{th}$ step in the sequence.

Audio Representation

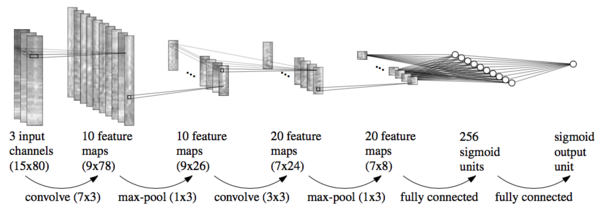

The final audio representation is a 15×80×3 tensor. These correspond to the temporal width of 15 representing 150ms of audio context, 80 frequency bands, and 3 different window lengths of 23ms, 46ms, and 93ms from short-time Fourier transform (STFT), where shorter window sizes preserve low-level features such as pitch and timbre while larger window sizes provide more context for high-level features such as melody and rhythm (Hamel et al., 2012)[1]. Their approach to acoustic feature representation follows the work of Schlüter & Böck (2014)[2], who develop similar representations to perform onset detection with CNNs. (Figure 5)

Step Placement

- LSTM

$i_t = σ(W_i·[h_{t−1},x_t]+b_i)$

$f_t = σ(W_f·[h_{t−1},x_t]+b_f)$ $q_t = tanh(W_q·[h_{t−1},x_t]+b_q)$

$o_t = σ(W_o·[h_{t−1},x_t]+b_o)$

$c_t = f_t⊙c_{t−1}+i_t⊙q_t$

$h_t = o_t⊙tanh(c_t)$

- C-LSTM

For step placement, all models considered have output consisting a single sigmoid unit which estimates the probability that a step is placed. Also, the authors augment the audio features with a one-hot representation of difficulty.

First, they use CNN model with the same structure as the CNN for onset detection (Figure 5) except for replacing 7) with another fully connected layer of 128 nodes.

Second...

Peak Picking

Step Selection

Experiments

Step Placement

Step Selection

References

1. Hamel, Philippe, Bengio, Yoshua, and Eck, Douglas. Building musically-relevant audio features through multiple timescale representations. In ISMIR, 2012.

2. Improved musical onset detection with CNN Schlüter & Böck (2014)

3.