Robot Learning in Homes: Improving Generalization and Reducing Dataset Bias

Introduction

The use of data-driven approaches in robotics has increased over the last decade. Instead of relying on hand-designed models, these approaches work on large-scale datasets and learn policies that map high-dimensional observations to actions. Because collecting data with a physical robot is very expensive, most data-driven approaches in robotics collect their data in simulators. The concern is whether policies trained this way are robust enough to domain shift to be used on real-world data, since there is a wide reality gap between simulators and the real world.

On the other hand, the declining cost of hardware has pushed the robotics community toward collecting real-world physical data for a variety of tasks. This approach has been quite successful at tasks such as grasping, pushing, poking and imitation learning. However, the major problem is that the performance of these learned models is still not good enough and tends to plateau quickly. Furthermore, robotic action data has not led to gains similar to those seen in other areas such as computer vision and natural language processing. The paper claims that the solution to these obstacles is using “real data”: current robotic datasets lack diversity of environment, so learning-based approaches need to move out of simulators and labs and into real environments such as real homes, where they can learn from real datasets.

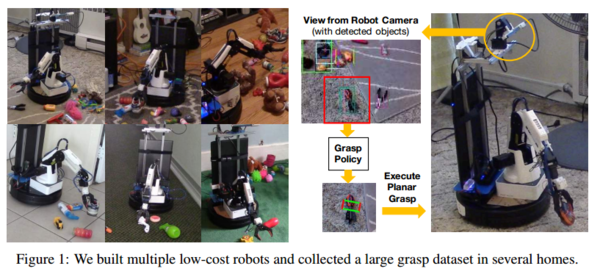

Collecting real data and working with it poses several challenges. First, collecting data in homes requires cheap and compact robots, but current industrial robots (e.g. Sawyer and Baxter) are too expensive. Second, cheap robots are not accurate enough to collect reliable data. Third, data collection in homes cannot be supervised at all times. These challenges, together with other external factors, result in noisy data. The paper presents a first systematic effort at collecting a dataset inside homes, which consists of the following parts:

- A cheap robot that is suitable for use in homes

- Training data collected in 6 different homes and test data collected in 3 homes

- An approach for modeling the noise in the labeled data

Overview

This paper emphasizes the importance of diversifying the data used for robotic learning in order to achieve better generalization. A diverse dataset also helps remove biases in the data. The paper argues that even for simple tasks like grasping, datasets collected in labs suffer from strong biases such as simple backgrounds and identical environment dynamics. Hence, models trained on them do not generalize and do not work well on real-world data.

Collecting large-scale data inside a large number of homes in the future would require a low-cost robot. For this reason, the authors introduce a customized mobile manipulator: a Dobot Magician robotic arm mounted on a Kobuki, a low-cost mobile base. The resulting robot arm has five degrees of freedom (DOF). They also add an Intel R200 RGBD camera, mounted at a height of 1m above the ground. An on-board laptop with an Intel Core i5 processor performs all the processing. The whole system can run for 1.5 hours on a single charge.

There is a trade-off, however: a low-cost robot is less accurate to control. Because it is built from cheaper components than expensive setups such as Baxter and Sawyer, it suffers from higher calibration and execution errors. As a result, the dataset collected with this approach is large and diverse, but its labels are noisy. To illustrate, suppose the robot intends to grasp at location (x, y). Because of noise in the execution, the robot may actually perform the grasp at location (x + δ_x, y + δ_y), so the success or failure label of the action is assigned to the wrong location. To address this problem, the authors use an approach for learning from noisy data. They model the noise as a latent variable and use two networks, one for predicting the noise and one for predicting the action to execute.
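To make the latent-variable idea concrete, here is a minimal sketch in Python with NumPy. It is an illustration under stated assumptions, not the authors' implementation. It assumes the execution offset (δ_x, δ_y) is restricted to a small discrete set of candidates, that the noise network outputs one logit per candidate offset, and that the grasp network outputs a success probability for each shifted location. The function names `marginal_success_probability` and `noisy_label_loss`, and the use of a binary cross-entropy loss, are hypothetical choices made for this example.

```python
import numpy as np


def softmax(logits):
    """Turn raw noise-network scores into a probability distribution."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()


def marginal_success_probability(noise_logits, grasp_success_probs):
    """Marginalize the latent offset z out of the grasp prediction.

    noise_logits[i]        : assumed noise-network score for candidate offset i
    grasp_success_probs[i] : assumed grasp-network success probability at the
                             intended location shifted by candidate offset i

    Returns sum_i P(z_i) * P(success | location + z_i).
    """
    noise_probs = softmax(np.asarray(noise_logits, dtype=float))
    grasp_success_probs = np.asarray(grasp_success_probs, dtype=float)
    if noise_probs.shape != grasp_success_probs.shape:
        raise ValueError("need one success probability per candidate offset")
    return float(np.sum(noise_probs * grasp_success_probs))


def noisy_label_loss(noise_logits, grasp_success_probs, label):
    """Binary cross-entropy between the marginal prediction and the noisy label."""
    p = marginal_success_probability(noise_logits, grasp_success_probs)
    p = min(max(p, 1e-7), 1.0 - 1e-7)  # keep the logarithms finite
    return float(-(label * np.log(p) + (1 - label) * np.log(1.0 - p)))


# Hypothetical example: a 3x3 grid of candidate offsets, flattened to 9 entries.
noise_logits = np.zeros(9)  # the noise network is unsure which offset occurred
grasp_probs = np.array([0.1, 0.2, 0.1, 0.2, 0.9, 0.2, 0.1, 0.2, 0.1])
print(marginal_success_probability(noise_logits, grasp_probs))
print(noisy_label_loss(noise_logits, grasp_probs, label=1))
```

In this sketch the recorded label is compared against the marginal prediction rather than against the prediction at the intended location alone. A grasp that succeeded because the arm landed slightly off-target can therefore still be explained by the shifted location the noise distribution favors.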