Pre-Training Tasks For Embedding-Based Large-Scale Retrieval


==Main Contributors== | |||

Pierre McWhannel wrote this summary with editorial and technical contributions from STAT 946 fall 2020 classmates. The summary is based on the paper "Pre-Training Tasks for Embedding-Based Large-Scale Retrieval" which was presented at ICLR 2020. The author's of this paper are Wei-Cheng Chang, Felix X. Yu, Yin-Wen Chang, Yiming Yang, Sanjiv Kumar. | |||

==Introduction== | ==Introduction== | ||

Let's begin. | |||

===Contributions of this paper=== | ===Contributions of this paper=== | ||

| Line 16: | Line 18: | ||

<br/> | <br/> | ||

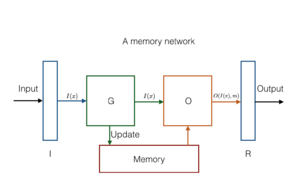

A memory network combines learning strategies from the machine learning literat | A memory network combines learning strategies from the machine learning literat | ||

===References==== | |||

[1] Wei-Cheng Chang, Felix X Yu, Yin-Wen Chang, Yiming Yang, and Sanjiv Kumar. Pre-training tasks for embedding-based large-scale retrieval. arXiv preprint arXiv:2002.03932, 2020. | |||

Revision as of 20:02, 17 November 2020

Main Contributors

Pierre McWhannel wrote this summary with editorial and technical contributions from STAT 946 fall 2020 classmates. The summary is based on the paper "Pre-Training Tasks for Embedding-Based Large-Scale Retrieval", which was presented at ICLR 2020. The authors of this paper are Wei-Cheng Chang, Felix X. Yu, Yin-Wen Chang, Yiming Yang, and Sanjiv Kumar.

Introduction

Let's begin.

Contributions of this paper

- Introduce a set of tasks that model natural feedback from a teacher and hence assess the feasibility of dialog-based language learning.

- Evaluate some baseline models on this data and compare them to standard supervised learning.

- Introduce a novel forward prediction model, whereby the learner tries to predict the teacher’s replies to its actions, which yields promising results even with no reward signal at all.

Code for this paper can be found on GitHub: https://github.com/facebook/MemNN/tree/master/DBLL

Background on Memory Networks

A memory network combines learning strategies from the machine learning literat

References

[1] Wei-Cheng Chang, Felix X Yu, Yin-Wen Chang, Yiming Yang, and Sanjiv Kumar. Pre-training tasks for embedding-based large-scale retrieval. arXiv preprint arXiv:2002.03932, 2020.