on the difficulty of training recurrent neural networks

Introduction

test

Training Recurrent Neural Network (RNN) is difficult and two of the most prominent problems have been vanishing and exploding gradients, <ref name="yoshua1993">Yoshua Bengio, Paolo Frasconi, and Patrice Simard. The problem of learning long-term dependencies in recurrent networks. In Neural Networks, 1993., IEEE International Conference on, pages 1183–1188. IEEE, 1993.</ref> which prevents neural networks from learning and fitting data with long-term dependencies. In this paper the authors propose a gradient norm clipping strategy to deal with exploding gradients and a soft constraint for the vanishing gradients problem.

Background

A generic recurrent neural network has the form:

[math]\displaystyle{ x_{t} = F(\mathbf{x}_{t -1}, \mathbf{u}_{t}, \theta) }[/math]

Where:

- [math]\displaystyle{ \mathbf{x}_{t} }[/math] is the state at time [math]\displaystyle{ t }[/math]

- [math]\displaystyle{ \mathbf{u}_{t} }[/math] is the input at time [math]\displaystyle{ t }[/math]

- [math]\displaystyle{ \theta\, }[/math] are the parameters

- [math]\displaystyle{ F()\, }[/math] is the function that represents a neuron

In the theoreical sections the authors made use of specific parameterization:

[math]\displaystyle{ \mathbf{x}_{t} = \mathbf{W}_{rec} \sigma(\mathbf{x}_{t - 1}) + \mathbf{W}_{in} \mathbf{u}_{t} + b }[/math]

Where:

- [math]\displaystyle{ \mathbf{W}_{rec} }[/math] is the RNN weight matrix

- [math]\displaystyle{ \sigma()\, }[/math] is an element wise function

- [math]\displaystyle{ b\, }[/math] is the bias

- [math]\displaystyle{ \mathbf{W}_{in} }[/math] is the input weights matrix

The following are gradient equations for using the Back Propagation Through Time (BPTT) algorithm. The authors rewrote the equations in order to highlight the exploding gradients problem:

[math]\displaystyle{ \frac{\partial \varepsilon}{\partial \theta} = \sum_{1 \leq t \leq T} \frac{\partial \varepsilon_t}{\partial \theta} }[/math]

[math]\displaystyle{ \frac{\partial \varepsilon_{t}}{\partial \theta} = \sum_{1 \leq k \leq T} \left( \frac{\partial \varepsilon_{t}}{\partial x_{t}} \frac{\partial x_{t}}{\partial x_{k}} \frac{\partial^{+} x_{k}}{\partial \theta} \right) }[/math]

[math]\displaystyle{ \frac{\partial x_{t}}{\partial x_{k}} = \prod_{t \leq i \leq k} \frac{\partial x_{i}}{\partial x_{i - 1}} = \prod_{t \leq i \leq k} \mathbf{W}^{T}_{rec} \textit{diag}(\sigma^{\prime}(x_{i - 1})) }[/math]

Where:

- [math]\displaystyle{ \varepsilon_{t} }[/math] is the error obtained from output at time [math]\displaystyle{ t }[/math]

- [math]\displaystyle{ \frac{\partial^{+} \mathbf{x}_{k}}{\partial \theta} }[/math] is the immediate partial derivative of state [math]\displaystyle{ \mathbf{x}_{k} }[/math]. For the parameterization above, [math]\displaystyle{ \frac{\partial^+ \mathbf{x}_k}{\partial \mathbf{W}_{rec}} = \sigma(\mathbf{x}_{k-1}) }[/math].

The authors of this paper also distinguish between long-term and short-term contributions to the gradient with respect to [math]\displaystyle{ \frac{\partial \mathbf{x}_t}{\partial \mathbf{x}_k} }[/math]. The contribution is long-term if [math]\displaystyle{ k \ll t }[/math], and short-term otherwise.

Exploding and Vanishing Gradients

The Mechanics

It's known that [math]\displaystyle{ |\sigma^'(x)| }[/math] is bounded. Let [math]\displaystyle{ \left|\left|diag(\sigma^'(x_k))\right|\right| \leq \gamma \in R }[/math].

The paper first proves that it is sufficient for [math]\displaystyle{ \lambda_1 \lt \frac{1}{\gamma} }[/math], where [math]\displaystyle{ \lambda_1 }[/math] is the largest singular value of [math]\displaystyle{ \bold{W}_{rec} }[/math], for the vanishing gradient problem to occur. The Jacobian matrix [math]\displaystyle{ \frac{\partial x_{k+1}}{\partial x_k} }[/math] is given by [math]\displaystyle{ \bold{ W}_{rec}^{T}diag(\sigma^'(x_k)) }[/math]. Then, the 2-norm of this Jacobian is bounded by the product of the norms of the two matrices. This leads to [math]\displaystyle{ \forall k, ||\frac{\partial{x_{k+1}}}{\partial x_k}|| \leq ||\bold{W}_{rec}^T||||diag(\sigma^'(x_k))|| \lt \frac{1}{\gamma}\gamma \lt 1 }[/math]

Let [math]\displaystyle{ \eta \in R }[/math] be such that [math]\displaystyle{ \forall k, ||\frac{\partial {x_{k+1}}}{\partial x_k}|| \leq \eta \lt 1 }[/math]. By induction over [math]\displaystyle{ i }[/math], we can show that [math]\displaystyle{ ||\frac{\partial \varepsilon_t}{\partial x_t}(\prod_{i=k}^{t-1}{\frac{\partial x_{i+1}}{\partial x_i}})|| \leq \eta^{t-k}||\frac{\partial \varepsilon_t}{\partial x_t}|| }[/math]. Since [math]\displaystyle{ \eta \lt 1 }[/math], as [math]\displaystyle{ t-k }[/math] goes larger, the gradient goes to 0.

By inverting this proof, it also shows that when the largest singular value [math]\displaystyle{ \lambda_1 }[/math] is larger than [math]\displaystyle{ \frac{1}{\gamma} }[/math], we will have exploding gradients.

From a dynamical systems perspective

Drawing from a dynamical systems perspective similiar to <ref name="yoshua1993"></ref><ref name="doya1993">Kenji Doya. Bifurcations of recurrent neural networks in gradient descent learning. IEEE Transactions on neural networks, 1:75–80, 1993.</ref>, dynamical systems theory states that as “[math]\displaystyle{ \theta }[/math] changes the asymptotic behaviour changes smoothly almost every where except for cetian crucial points where drasic changes occur” <ref name="pascanu"></ref>, this is because crossing these bifurcation has the potential to cause gradients to explode <ref name="doya1993"></ref>.

The authors of this paper argue, however, that crossing these bifurcation points does not guarantee a sudden change in gradients. Their idea is that a change to the model parameters can alter the attractor landscape in such a way that basin of attraction corresponding to the current model state is unaltered. For example, a change to the model parameters might eliminate a basic of attraction in a portion of the model's state space that is very far from its current state. In this case, the bifurcation will have no effect on the asymptotic behaviour of the model, and there will accordingly be no gradient explosion. On the other hand, if a change to the model parameters substantially alters the final basin of attraction given the current state, then there will a considerable effect on the asymptotic behaviour of the model, and the gradients will explode accordingly.

The figure above depicts a bifurcation diagram for a single-unit RNN, where the x-axis is the parameter [math]\displaystyle{ b }[/math] (bias) and the y-axis is the asymptotic state [math]\displaystyle{ x_{\infty} }[/math], (i.e. the equilibrium activation value of the unit), and the plot line is the movement of the final point attractor [math]\displaystyle{ x_{\infty} }[/math] as [math]\displaystyle{ b }[/math] changes. What this figure represents is the presence of two attractors, one emerging from [math]\displaystyle{ b_1 }[/math] and another disappearing at [math]\displaystyle{ b_2 }[/math], as the value of [math]\displaystyle{ b }[/math] is decreased. Note that only one attractor exists when the value of [math]\displaystyle{ b }[/math] is outside of the interval between [math]\displaystyle{ b_1 }[/math] and [math]\displaystyle{ b_2 }[/math], and that when two attractors exist, the attractor state towards which the unit ultimately gravitates is determined by its initial starting state. The boundary between the these two basins of attraction is denoted with the dashed line - starting states on opposite sides of this boundary will gravitate towards different attractor states. The blue filled circles indicate a bifurcation point at which a small change to the value of [math]\displaystyle{ b }[/math] can have a drastic effect on the attractor landscape over the unit's state space. In short, the landscape shifts to include a single attractor state for a low value of [math]\displaystyle{ x }[/math]. The unfilled green circles represents Pascanu’s (2013) extension of Doya’s hypothesis, where if the model is in the boundary range at time [math]\displaystyle{ 0 }[/math], a small change in [math]\displaystyle{ b }[/math] would result in a sudden large change in [math]\displaystyle{ x_{t} }[/math].

Overall, these remarks indicate that, when treated as dynamical system, the behaviour of a RNN can be analyzed with respect to both changes to the parameter values that determine an attractor landscape over its state space (assuming a fixed starting state), and with respect to changes to the starting state (assuming a fixed attractor landscape).

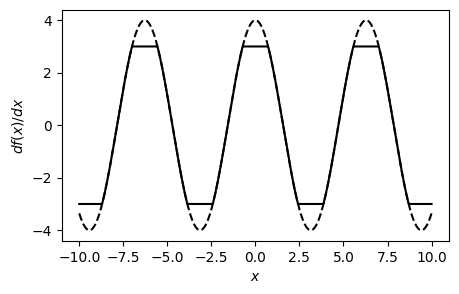

From a geometric perspective

Aside from a dynamical systems prospective to exploding and vanishing gradients, the authors also considered a geometric perspective, where a simple one hidden unit RNN was considered.

Reusing the RNN state equation the authors defined:

[math]\displaystyle{ x_{t} = W_{rec} \sigma(x_{t - 1}) + W_{in} u_{t} + b }[/math]

By assuming no input, with [math]\displaystyle{ b = 0 }[/math] and initial state [math]\displaystyle{ x_{0} }[/math], the equation simplifies to:

[math]\displaystyle{ x_{t} = W_{rec}^{t} x_{0} }[/math]

Differentiating the above equation to the first and second order would give:

[math]\displaystyle{ \frac{\delta x_{t}}{\delta \omega} = t W_{rec}^{t - 1} x_{0} }[/math]

[math]\displaystyle{ \frac{\delta^{2} x_{t}}{\delta \omega^{2}} = t (t - 1) W_{rec}^{t - 2} x_{0} }[/math]

Which implies if the first order derivative explodes then so will the second derivative. In the general case, when the gradients explode they do so along some directions v. If this bound is tight, it is hypothesized that when gradients explode so does the curvature along v, leading to a wall in the error surface, like the one seen above. If both the gradient and the leading eigenvector of the curvature are aligned with the exploding direction v, it follows that the error surface has a steep wall perpendicular to v (and consequently to the gradient). This means when the Stochastic Gradient decent (SGD) approaches the loss error surface and attempts to step into it, it will be deflected away and possibly hinder the learning process. (See figure above). Note that this solution assumes that the valley bordered by a steep cliff in the value of the loss function is wide enough with respect the clip being applied to the gradient, otherwise the deflection caused by an update of SGD would still hinder the learning process despite clipping being used. The practical effectiveness of clipping provides some evidence in support of this assumption.

Dealing with Exploding and Vanishing Gradient

Related Work

- L1/L2 Regularization: Helps with exploding gradients, but limits the RNN to a single point attractor at the origin, and prevents the model to learn generator models or exhibit long term memory traces.

- Teacher Forcing: Proposed by <ref name="doya1993"></ref>, performs a cross bifurcation boundary if the model does not exhibit asymptotic behavior towards a desired target. This assumes the user knows what the behaviour might look like or how to intialize the model to reduce exploding gradients.

- LTSM: The Long-Short Term Memory architecture by <ref name="graves2009">Alex Graves, Marcus Liwicki, Santiago Fern ́andez, Roman Bertolami, Horst Bunke, and Jurgen Schmidhuber. A novel connectionist system for unconstrained handwriting recognition. Pattern Analysis and Machine Intelligence, IEEE Transactions on, 31(5):855–868, 2009</ref><ref name="Hochreiter">Sepp Hochreiter and Jurgen Schmidhuber. 9(8):1735–1780, 1997. Long short-term memory.Neural computation,</ref> is an attempt to deal with the vanishing gradient problem by using a linear unit that feedbacks to itself with a weight of [math]\displaystyle{ 1 }[/math]. This solution however does not deal with the exploding gradient

- Hessian-Free optimizer with structural damping: Proposed by <ref>Ilya Sutskever, James Martens, and Geoffrey E Hinton. Generating text with recurrent neural networks. In Proceedings of the 28th International Conference on Machine Learning (ICML-11), pages 1017–1024, 2011</ref> addresses the vanishing and exploding gradient problem. <ref name="pascanu"></ref> reasons that this approach solves the vanishing gradient problem because of the high dimensionality of the spaces gives rise to a high probability for the long term components to be orthogonal to short term components. Additionally for exploding gradient the curvature of the gradient is taken into account.

- Echo State Networks: <ref>Herbert Jaeger and Harald Haas. Harnessing nonlinearity: Predicting chaotic systems and saving energy in wireless communication. Science, 304(5667):78–80, 2004.</ref> avoids the exploding and vanishing gradient problem by not learning the input and recurrent weights, they are instead hand crafted distributions that prevent information from getting loss, since a spectral radius for the recurrent weights matrix is usually smaller than 1.

Scaling Down the Gradients

The intuition of this gradient clipping algorithm is simple, obtain the norm of the gradients, if it is larger than the set threshold then scale the gradients by a constant defined as the treshold divided by the norm of gradients. <ref name="pascanu"></ref> suggests using a threshold value from half to ten times the norm.

Vanishing Gradient Regularization

The vanishing gradient regularizer is as follows:

[math]\displaystyle{ \Omega = \sum_{k} \Omega_{k} = \sum_{k} \left( \frac{ \| \frac{\delta \varepsilon}{\delta x_{k + 1}} \frac{\delta x_{k + 1}}{\delta x_{k}} \| } { \| \frac{\delta \varepsilon}{\delta x_{k + 1}} \| } - 1 \right)^{2} }[/math]

The vanishing gradient problem occurs when at time [math]\displaystyle{ t }[/math] the inputs [math]\displaystyle{ u }[/math] may be irrelevant and noisy and the network starts to learn to ignore them. However this is not desirable as the model will end up not learning anything. The authors found that the sensitivity to all inputs [math]\displaystyle{ u_{t} \dots u_{k} }[/math] could be increased by increasing the norm of [math]\displaystyle{ \frac{\delta x_t}{\delta x_{t}} }[/math]. This imples that in order to increses the [math]\displaystyle{ \frac{\delta x_t}{\delta x_{t}} }[/math] norm the error must remain large, this however would prevent the model from converging, thus the authors argue a regularizer is a more natural choice. The regularizer is a soft constraint that forces the Jacobian matrices [math]\displaystyle{ \frac{\delta x_{k + 1}}{\delta x_{k}} }[/math] to preserve norm in the direction of the error [math]\displaystyle{ \frac{\delta \varepsilon}{\delta x_{k + 1}} }[/math].

Experimental Results

The Temporal Order Problem

The authors repeated the temporal order problem as the prototypical pathological problem for validating the clipping and regularizer devised. The temporal order problem involves generating a long sequence of discrete symbols, and at the beginning an [math]\displaystyle{ A }[/math] or a [math]\displaystyle{ B }[/math] symbol is placed at the beginning and middle of the sequence. The task is to correctly classify the order of [math]\displaystyle{ A }[/math] and [math]\displaystyle{ B }[/math] at the end of the sequence.

Three different RNN intializations were performed for the experiment:

- sigmoid unit network: [math]\displaystyle{ W_{rec}, W_{in}, W_{out} \sim \mathcal{N}(0, 0.01) }[/math]

- basic tanh unit network: [math]\displaystyle{ W_{rec}, W_{in}, W_{out} \sim \mathcal{N}(0, 0.1) }[/math]

- smart tanh unit network: [math]\displaystyle{ W_{rec}, W_{in}, W_{out} \sim \mathcal{N}(0, 0.01) }[/math]

Of the three RNN networks three different optimizer configurations were used:

- MSGD: Mini-batch Stochastic Gradient Decent

- MSGD-C: MSGD with Gradient Clipping

- MSGD-CR: MSGD-C with Regularization

Additional model parameters include:

- Spectral Radius of 0.95

- [math]\displaystyle{ b = b_{out} = 0 }[/math]

- 50 hidden neurons

- constant learning rate of 0.01

- clipping threshold of 1 (only for MSGD-C and MSGD-CR)

- regularization weight of 4 (MSGD-CR)

The experiment was performed 5 times and from the figure below we can observe the importance of gradient clipping and the regularizer. In all cases, the combination of the two methods yielded the best results regardless of which unit network was used. Furthermore this experiment provided empirical evidence that exploding gradients correlates to tasks that require long memory traces, as can be seen as the sequence length of the problem increases clipping and regularization becomes more important. This is largely due to the fact that increased memory yields a larger spectral radius, which in turn leads to increased likelihood of gradient explosion.

Other Pathological Problems

The author repeated other pathological problems from <ref name="Hochreiter"></ref>. The results are listed below:

The authors did not discuss the experimental results in detail here.

Summary

The paper explores two different perspectives in explaining the exploding and vanishing gradient problems in training RNNs via the dynamical systems and geometric approach. The authors devised methods to mitigate the corresponding problems by introducing a gradient clipping and a gradient vanishing regularizer and their experimental results showed that in all cases except for the Penn Treebank dataset, clipping and regularizer has improved on the results for the RNNs in their respective experiments.