On The Convergence Of ADAM And Beyond

Introduction

Somewhat different from the presentation I gave in class, this paper focuses strictly on the pitfalls in the convergence of the ADAM training algorithm for neural networks from a theoretical standpoint, and proposes a novel improvement to ADAM called AMSGrad. The result essentially introduces the idea that ADAM can get itself "stuck" in its weighted average history, which must be prevented somehow.

Notation

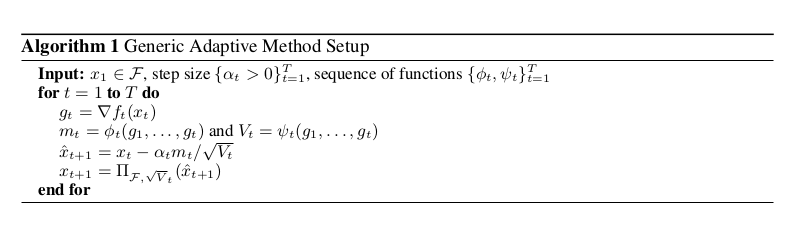

The paper presents the following framework that generalizes training algorithms to allow us to define a specific variant such as AMSGrad or SGD entirely within it:

Here [math]\displaystyle{ x_t }[/math] denotes our network parameters, which are constrained to a set [math]\displaystyle{ \mathcal{F} \subseteq \mathbb{R}^d }[/math], and [math]\displaystyle{ \Pi_{\mathcal{F}} (y) }[/math] is the projection of [math]\displaystyle{ y }[/math] onto the set [math]\displaystyle{ \mathcal{F} }[/math]. [math]\displaystyle{ \phi_t }[/math] and [math]\displaystyle{ \psi_t }[/math] are arbitrary functions that we will specify later: the former maps the history of gradients to [math]\displaystyle{ \mathbb{R}^d }[/math], and the latter maps the history of gradients to positive semi-definite matrices. Finally, [math]\displaystyle{ f_t }[/math] is our loss function at time [math]\displaystyle{ t }[/math]; the rest should be pretty self-explanatory. Using this framework and defining different [math]\displaystyle{ \phi_t }[/math] and [math]\displaystyle{ \psi_t }[/math] allows us to recover all different kinds of training algorithms under this one roof.

SGD As An Example

To recover SGD using this framework we simply select [math]\displaystyle{ \phi_t (g_1, \dotsc, g_t) = g_t }[/math] and [math]\displaystyle{ \psi_t (g_1, \dotsc, g_t) = I }[/math]. It's easy to see that the update then depends on no gradient history other than the most recent gradient, supplied by [math]\displaystyle{ \phi_t }[/math], and that [math]\displaystyle{ \psi_t }[/math] does not rescale any of the parameters, since [math]\displaystyle{ V_t = I }[/math] has no impact later on.
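The framework and the SGD choice above can be sketched in a few lines of NumPy. This is only an illustrative sketch under some simplifying assumptions that are not in the paper summary: [math]\displaystyle{ V_t }[/math] is stored as its diagonal (a vector), the projection defaults to the identity (the unconstrained case), and the names `generic_adaptive_step`, `sgd_phi`, `sgd_psi` and the quadratic loss are hypothetical.

```python
import numpy as np


def generic_adaptive_step(x, grads, alpha, phi, psi, project=lambda y: y):
    """One update of the generic framework, with V_t stored as its diagonal."""
    m = phi(grads)                       # m_t = phi_t(g_1, ..., g_t)
    v = psi(grads)                       # diagonal of V_t = psi_t(g_1, ..., g_t)
    x_hat = x - alpha * m / np.sqrt(v)   # unprojected step
    return project(x_hat)                # identity projection: unconstrained case (assumption)


def sgd_phi(grads):
    # SGD only looks at the most recent gradient.
    return grads[-1]


def sgd_psi(grads):
    # V_t = I, stored as a vector of ones, so no per-parameter rescaling happens.
    return np.ones_like(grads[-1])


# Hypothetical loss f(x) = ||x||^2, whose gradient is 2x.
x = np.array([1.0, -2.0])
grads = [2.0 * x]
alpha = 0.1
x_next = generic_adaptive_step(x, grads, alpha, sgd_phi, sgd_psi)
```

With these two choices the step collapses to the familiar `x - alpha * g`, which is the sense in which SGD lives "inside" the framework.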

ADAM As Another Example

Once you have convinced yourself that the SGD setup is correct, you should understand the framework well enough to see why the following setup for ADAM recovers the behavior we want. ADAM effectively defines a "learning rate" for every parameter based on how large that parameter's gradients have been over time (i.e. its second moment), supposedly to help with the learning process.

In order to do this we will choose [math]\displaystyle{ \phi_t (g_1, \dotsc, g_t) = (1 - \beta_1) \sum_{i=1}^{t} {\beta_1}^{t - i} g_i }[/math], and we will choose [math]\displaystyle{ \psi_t }[/math] to be [math]\displaystyle{ \psi_t (g_1, \dotsc, g_t) = (1 - \beta_2) \operatorname{diag} \left( \sum_{i=1}^{t} {\beta_2}^{t - i} {g_i}^2 \right) }[/math].

From this we can now see that [math]\displaystyle{ m_t }[/math] gets filled up with the exponentially weighted average of the gradients that we've come across so far in the algorithm. As we proceed to update, we scale each one of our parameters by dividing by [math]\displaystyle{ \sqrt{V_t} }[/math] (in the diagonal case this is just 1 over the square root of the diagonal entry), where [math]\displaystyle{ V_t }[/math] contains the exponentially weighted average of each parameter's squared gradient ([math]\displaystyle{ {g_t}^2 }[/math]) across our training so far. This gives each parameter its own unique scaling by its second moment. Intuitively, from a physical perspective, if each parameter is a ball rolling around in the optimization landscape, then instead of having the ball change position on the landscape at a fixed velocity (i.e. a momentum of 0), the ball now has the ability to accelerate, speeding up or slowing down depending on whether it's on a steep hill or in a flat trough of the landscape (i.e. a momentum that can change with time).
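As a quick sanity check on the ADAM choices, the weighted sums can be compared against the usual recursive moving-average updates. The sketch below assumes zero initial moments, a diagonal [math]\displaystyle{ V_t }[/math] stored as a vector, and no bias correction or epsilon term; the function names, the default values of `beta1` and `beta2`, and the random gradients are hypothetical and only serve the illustration.

```python
import numpy as np


def adam_phi(grads, beta1=0.9):
    """m_t = (1 - beta1) * sum_{i=1}^{t} beta1^(t - i) * g_i."""
    t = len(grads)
    weights = beta1 ** (t - np.arange(1, t + 1))
    return (1 - beta1) * (weights @ np.stack(grads))


def adam_psi(grads, beta2=0.999):
    """Diagonal of V_t = (1 - beta2) * sum_{i=1}^{t} beta2^(t - i) * g_i^2."""
    t = len(grads)
    weights = beta2 ** (t - np.arange(1, t + 1))
    return (1 - beta2) * (weights @ (np.stack(grads) ** 2))


def adam_recursive(grads, beta1=0.9, beta2=0.999):
    """The same two quantities, built up one gradient at a time from zero."""
    m = np.zeros_like(grads[0])
    v = np.zeros_like(grads[0])
    for g in grads:
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g ** 2
    return m, v


# Made-up gradient history: 5 steps, 3 parameters.
rng = np.random.default_rng(0)
grads = list(rng.normal(size=(5, 3)))

m_sum, v_sum = adam_phi(grads), adam_psi(grads)
m_rec, v_rec = adam_recursive(grads)
```

Both routes should agree up to floating-point error, which is why implementations keep only the running values rather than the whole gradient history.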

[math]\displaystyle{ \Gamma_t }[/math] An Interesting Quantity

Now that we have an idea of what ADAM looks like in this framework, let us now investigate the following:

[math]\displaystyle{ \Gamma_{t + 1} = \frac{\sqrt{V_{t+1}}}{\alpha_{t+1}} - \frac{\sqrt{V_t}}{\alpha_t} }[/math]

This essentially measures the change in the "inverse of the learning rate" across time (since we are using the [math]\displaystyle{ \alpha_t }[/math]'s as step sizes). Looking back at our SGD example, where [math]\displaystyle{ V_t = I }[/math], it's not hard to see that this quantity is never negative as long as the step sizes [math]\displaystyle{ \alpha_t }[/math] do not increase, which gives "non-increasing" learning rates, a desired property. However, that is not necessarily the case with ADAM, which can pose a problem in both theoretical and applied settings.
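To make the sign of this quantity concrete, here is a small numerical sketch for a single parameter. Everything specific in it is an assumption chosen for illustration and not taken from the paper summary: the step-size schedule `alpha / sqrt(t)`, the deliberately small `beta2 = 0.5`, the made-up gradient sequence (one large gradient followed by small ones), and the helper names.

```python
import numpy as np


def gamma_sequence(v_history, alphas):
    """Gamma_{t+1} = sqrt(v_{t+1}) / alpha_{t+1} - sqrt(v_t) / alpha_t, per coordinate."""
    inv_rate = np.sqrt(np.asarray(v_history)) / np.asarray(alphas)[:, None]
    return inv_rate[1:] - inv_rate[:-1]


def adam_v_history(grads, beta2):
    """Diagonal of V_t for ADAM at every step, starting from zero."""
    v = np.zeros_like(grads[0])
    history = []
    for g in grads:
        v = beta2 * v + (1 - beta2) * g ** 2
        history.append(v)
    return np.array(history)


# One parameter, five steps: a large gradient followed by small ones (made up).
grads = np.array([[10.0], [0.1], [0.1], [0.1], [0.1]])
steps = np.arange(1, len(grads) + 1)
alphas = 0.1 / np.sqrt(steps)   # assumed decaying step-size schedule

# SGD: V_t = I at every step, stored as a vector of ones.
gamma_sgd = gamma_sequence(np.ones_like(grads), alphas)

# ADAM with an illustratively small beta2, so old gradients are forgotten quickly.
gamma_adam = gamma_sequence(adam_v_history(grads, beta2=0.5), alphas)
```

In this setup every entry of `gamma_sgd` is positive, while `gamma_adam` contains negative entries: once the large gradient fades from the moving average, the effective learning rate grows again.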