Obfuscated Gradients Give a False Sense of Security: Circumventing Defenses to Adversarial Examples

Revision as of 20:19, 15 November 2018

Introduction

Over the past few years, neural network models have been the source of major breakthroughs in a variety of computer vision problems. However, these networks have been shown to be susceptible to adversarial attacks. In these attacks, small changes that are imperceptible to humans are made to correctly classified images, causing the model to misclassify them with high confidence. These attacks pose a major threat that needs to be addressed before such systems can be deployed on a large scale, especially in safety-critical scenarios.

The seriousness of this threat has generated major interest in both designing such attacks and defending against them. In this paper, the authors identify a common technique employed by several recently proposed defenses and design a set of attacks that can be used to overcome them. This technique, masking gradients, is so prevalent that 7 out of the 8 defenses proposed at the ICLR 2018 conference employed it. The authors were able to circumvent the proposed defenses and bring the accuracy of the defended models down to below 10%.

Methodology

The paper assumes considerable familiarity with the adversarial attack literature. The section below briefly explains some key concepts.

Background

Adversarial Images Mathematically

Given an image [math]\displaystyle{ x }[/math] and a classifier [math]\displaystyle{ f(x) }[/math], an adversarial image [math]\displaystyle{ x' }[/math] satisfies two properties:

- [math]\displaystyle{ D(x,x') \lt \epsilon }[/math]

- [math]\displaystyle{ c(x') \neq c^*(x) }[/math]

Where [math]\displaystyle{ D }[/math] is some distance metric, [math]\displaystyle{ \epsilon }[/math] is a small constant, [math]\displaystyle{ c(x') }[/math] is the output class predicted by the model, and [math]\displaystyle{ c^*(x) }[/math] is the true class for input x. In words, the adversarial image is a small distance from the original image, but the classifier classifies it incorrectly.

Adversarial Attacks Terminology

- Adversarial attacks can be either black-box or white-box. In black-box attacks, the attacker has access to the network output only, while white-box attackers have full access to the network, including its gradients, architecture and weights. This makes white-box attackers much more powerful. Given access to gradients, white-box attacks use backpropagation to compute the gradient of the loss function with respect to the input (as opposed to the weights) and modify the input accordingly.

- In untargeted attacks, the objective is to maximize the loss of the true class, [math]\displaystyle{ x'=x \mathbf{+} \lambda(sign(\nabla_xL(x,c^*(x)))) }[/math]. In targeted attacks, the objective is to minimize the loss for a target class [math]\displaystyle{ c^t(x) }[/math] that is different from the true class, [math]\displaystyle{ x'=x \mathbf{-} \lambda(sign(\nabla_xL(x,c^t(x)))) }[/math]. Here, [math]\displaystyle{ \nabla_xL() }[/math] is the gradient of the loss function with respect to the input, [math]\displaystyle{ \lambda }[/math] is a small gradient step and [math]\displaystyle{ sign() }[/math] is the sign of the gradient.

- An attacker may be allowed to use a single step of back-propagation (single step) or multiple (iterative) steps. Iterative attackers can generate more powerful adversarial images. Typically, to bound iterative attackers a distance measure is used.

In this paper, the authors focus on the strongest attack setting, which is the hardest to defend against: white-box, iterative attacks, both targeted and untargeted.
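The untargeted and targeted update rules above can be sketched on a toy model. This is only an illustration: the logistic-regression classifier, the cross-entropy loss and the helper names (`loss_grad_wrt_input`, `untargeted_step`, `targeted_step`) are assumptions made for this example and do not come from the paper.

```python
import math

def predict_prob(x, w, b):
    """Probability of class 1 under a toy logistic-regression model (assumed)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def loss_grad_wrt_input(x, label, w, b):
    """Gradient of the binary cross-entropy loss with respect to the input x."""
    p = predict_prob(x, w, b)
    return [(p - label) * wi for wi in w]

def sign(v):
    """Sign of a number as -1, 0 or 1."""
    return (v > 0) - (v < 0)

def untargeted_step(x, true_label, w, b, lam):
    """Single step that increases the loss of the true class."""
    g = loss_grad_wrt_input(x, true_label, w, b)
    return [xi + lam * sign(gi) for xi, gi in zip(x, g)]

def targeted_step(x, target_label, w, b, lam):
    """Single step that decreases the loss of the chosen target class."""
    g = loss_grad_wrt_input(x, target_label, w, b)
    return [xi - lam * sign(gi) for xi, gi in zip(x, g)]
```

An iterative attack would repeat one of these steps several times while keeping the result within the allowed distance of the original input. In a two-class problem the two steps coincide, since moving away from the true class is the same as moving toward the only other class.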

Obfuscated Gradients

As gradients are used in the generation of white-box adversarial images, many defense strategies have focused on methods that mask gradients. If gradients are masked, they cannot be followed to generate adversarial images. The authors argue against this general approach by showing that it can be easily circumvented. To emphasize their point, they looked at white-box defenses proposed in ICLR 2018. Three types of gradient masking techniques were found:

- Shattered gradients: Non-differentiable operations are introduced into the model.

- Stochastic gradients: A stochastic process is added into the model at test time.

- Vanishing/exploding gradients: Very deep neural networks or those with recurrent connections are used. Because of the vanishing or exploding gradient problem common in these deep networks, effective gradients at the input are small and not very useful.

The Attacks

To circumvent these gradient masking techniques, the authors propose:

- Backward Pass Differentiable Approximation (BPDA): For defenses that introduce non-differentiable components, the authors replace each such component with an approximate function that is differentiable on the backward pass, while the forward pass is left unchanged. In a white-box setting, the attacker has full access to any added non-differentiable transformation and can find an approximation of it.

- Expectation over Transformation (EOT): For defenses that add some form of test-time randomness, the authors propose using the expectation over transformation technique in the backward pass. Rather than following a single sampled gradient at every step, several gradients are sampled and the step is taken in their average direction. This counteracts the stochastic misdirection introduced by any individual gradient sample.

- Reparameterization of the exploration space: For very deep networks that rely on vanishing or exploding gradients, the authors propose to reparameterize the input and search over the range where the gradient does not explode or vanish.
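The first two attacks can be illustrated with a minimal sketch. Everything here is an assumption chosen for illustration rather than the paper's setup: a toy logistic-regression model, a quantization step standing in for a non-differentiable defense, additive Gaussian noise standing in for a randomized defense, and the function names themselves.

```python
import math
import random

def input_grad(x, label, w, b):
    """Cross-entropy gradient w.r.t. the input of a toy logistic model (assumed)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-z))
    return [(p - label) * wi for wi in w]

def quantize(x, levels=4):
    """Hypothetical shattering defense: snap values in [0, 1] to a coarse grid.
    Its true derivative is zero almost everywhere, so gradients are useless."""
    return [round(xi * (levels - 1)) / (levels - 1) for xi in x]

def bpda_grad(x, label, w, b):
    """BPDA sketch: run the real defense on the forward pass, but treat it as
    the identity function on the backward pass."""
    return input_grad(quantize(x), label, w, b)

def eot_grad(x, label, w, b, sigma, n_samples, rng):
    """EOT sketch: average the input gradient over samples of the randomness
    (here, Gaussian noise added to the input at test time)."""
    total = [0.0] * len(x)
    for _ in range(n_samples):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        g = input_grad(noisy, label, w, b)
        total = [t + gi for t, gi in zip(total, g)]
    return [t / n_samples for t in total]
```

Using the identity on the backward pass is reasonable here because the quantizer's output stays close to its input; for other defenses a different differentiable approximation may be needed.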

Main Results

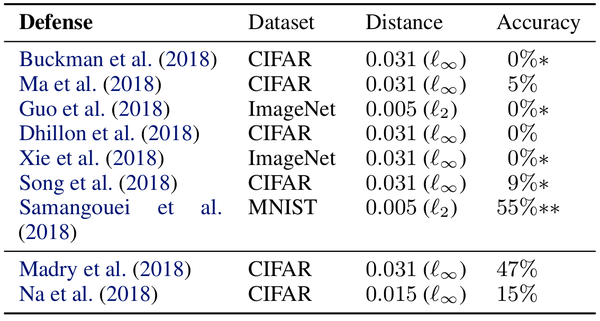

The table above summarizes the results of their attacks. Attacks are mounted on the same dataset each defense targeted. If multiple datasets were used, attacks were performed on the largest one. Two different distance metrics ([math]\displaystyle{ \ell_{\infty} }[/math] and [math]\displaystyle{ \ell_{2} }[/math]) were used in the construction of adversarial images. Distance metrics specify how much an adversarial image can vary from an original image. For [math]\displaystyle{ \ell_{\infty} }[/math] adversarial images, each pixel is allowed to vary by a maximum amount. For example, [math]\displaystyle{ \ell_{\infty}=0.031 }[/math] specifies that each pixel can vary by about [math]\displaystyle{ 255 \times 0.031 \approx 8 }[/math] intensity levels from its original value. [math]\displaystyle{ \ell_{2} }[/math] distances specify the magnitude of the total distortion allowed over all pixels. For MNIST and CIFAR-10, untargeted adversarial images were constructed using the entire test set, while for ImageNet, 1000 test images were randomly selected and used to generate targeted adversarial images.
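The two distance metrics, and the arithmetic behind the pixel bound, can be made concrete with a short sketch. It assumes images are flat lists of pixel values scaled to [0, 1] and that the original images use a 0-255 intensity scale; the function names are chosen for this example.

```python
import math

def linf_distance(x, x_adv):
    """Largest change made to any single pixel."""
    return max(abs(a - b) for a, b in zip(x, x_adv))

def l2_distance(x, x_adv):
    """Euclidean size of the total distortion over all pixels."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, x_adv)))

def project_linf(x, x_adv, eps):
    """Clip x_adv so every pixel stays within eps of x and inside [0, 1]."""
    out = []
    for a, b in zip(x, x_adv):
        b = min(max(b, a - eps), a + eps)
        out.append(min(max(b, 0.0), 1.0))
    return out

# A bound of 0.031 on the [0, 1] scale is about 8 levels on a 0-255 scale.
eps = 0.031
levels = round(eps * 255)
```

The projection function is the step an iterative attack would apply after each gradient update to stay inside the allowed [math]\displaystyle{ \ell_{\infty} }[/math] ball.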

Standard models were used to evaluate the accuracy of each defense strategy under attack:

- MNIST: 5-layer Convolutional Neural Network (99.3% top-1 accuracy)

- CIFAR-10: Wide-ResNet (95.0% top-1 accuracy)

- ImageNet: InceptionV3 (78.0% top-1 accuracy)

The last column shows the accuracy each defense method achieved on the adversarial test set. Except for [Madry, 2018], all defense methods could only achieve an accuracy of <10%. Furthermore, the accuracy of most methods was 0%. The result for [Samangouei, 2018] (double asterisk) shows that the authors' attack was not as successful against that defense. The authors attribute this to implementation imperfections and argue that, in theory, the defense can still be circumvented using their proposed method.

The defense that worked - Adversarial Training [Madry, 2018]

As a defense mechanism, [Madry, 2018] proposes training the neural networks with adversarial images. Although this approach was previously known [Szegedy, 2013], their formulation sets up the problem more systematically as a min-max optimization:

\begin{align} \theta^* = \arg\min_{\theta} \; \mathbb{E}_{x} \bigg[ \max_{\delta \in [-\epsilon,\epsilon]} L(x+\delta,y;\theta) \bigg] \end{align}

where [math]\displaystyle{ \theta }[/math] denotes the parameters of the model, [math]\displaystyle{ \theta^* }[/math] is the optimal set of parameters, [math]\displaystyle{ y }[/math] is the true label of the input image [math]\displaystyle{ x }[/math], and [math]\displaystyle{ \delta }[/math] is a small perturbation to [math]\displaystyle{ x }[/math] that is bounded by [math]\displaystyle{ [-\epsilon,\epsilon] }[/math].

Training proceeds in the following way. For each clean input image, a distorted version of the image is found by approximately solving the inner maximization problem for a fixed number of iterations. After each gradient step, the perturbation is projected back into the allowed range (projected gradient descent). Next, the model parameters are updated on the distorted image, which is one step of the outer minimization problem.
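The training loop described above can be sketched on a toy problem. This is a minimal illustration under stated assumptions, not the setup of [Madry, 2018]: the model is a logistic regression, the outer step is plain stochastic gradient descent, and the names and default hyperparameters (`pgd_perturb`, `adversarial_train`, `lr`, `epochs`, `pgd_steps`) are invented for this example.

```python
import math

def predict(x, w, b):
    """Probability of class 1 under a toy logistic-regression model (assumed)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def pgd_perturb(x, y, w, b, eps, step, n_steps):
    """Inner maximization: signed gradient ascent on the loss, projecting back
    into the eps-box around x after every step."""
    x_adv = list(x)
    for _ in range(n_steps):
        p = predict(x_adv, w, b)
        grad = [(p - y) * wi for wi in w]  # d(loss)/d(input)
        x_adv = [xa + step * ((g > 0) - (g < 0)) for xa, g in zip(x_adv, grad)]
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
    return x_adv

def adversarial_train(data, dim, eps, lr=0.5, epochs=200, pgd_steps=5):
    """Outer minimization: SGD on the loss evaluated at the perturbed inputs."""
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = pgd_perturb(x, y, w, b, eps, eps / 2, pgd_steps)
            p = predict(x_adv, w, b)
            w = [wi - lr * (p - y) * xa for wi, xa in zip(w, x_adv)]
            b -= lr * (p - y)
    return w, b

# Tiny two-class dataset, used only to exercise the loop.
data = [([0.0, 0.0], 0), ([1.0, 1.0], 1), ([0.1, 0.2], 0), ([0.9, 0.8], 1)]
w, b = adversarial_train(data, dim=2, eps=0.1)
```

The key design point is that the parameter update only ever sees the perturbed inputs, so the model is fitted to the worst case found by the inner loop rather than to the clean data.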

In the authors' evaluation, this was the defense that held up against their attacks, at least for perturbations within the bound used during training.

How to check for Obfuscated Gradients

For future defense proposals, it is recommended to avoid relying on masked gradients. To assist with this, the authors propose a set of warning signs that can help identify whether a defense is relying on masked gradients:

- Weaker one-step attacks perform better than iterative attacks.

- Black-box attacks find stronger adversarial images than white-box attacks.

- Unbounded iterative attacks do not reach 100% success.

- Random brute-force search is better than gradient-based methods at finding adversarial images.
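These checks lend themselves to a simple diagnostic. The sketch below assumes that attack success rates (fractions between 0 and 1) have already been measured for each attack type; the function name, its arguments and the message strings are hypothetical and only mirror the four conditions listed above.

```python
def obfuscation_warnings(one_step, iterative, black_box, white_box,
                         unbounded_iterative, random_search, gradient_based):
    """Return the warning signs triggered by the measured success rates."""
    warnings = []
    if one_step > iterative:
        warnings.append("one-step attack beats iterative attack")
    if black_box > white_box:
        warnings.append("black-box attack beats white-box attack")
    if unbounded_iterative < 1.0:
        warnings.append("unbounded iterative attack below 100% success")
    if random_search > gradient_based:
        warnings.append("random search beats gradient-based attack")
    return warnings
```

An empty result does not prove that a defense is sound; it only means that none of these particular symptoms was observed.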

Recommendations for future defense presentations to facilitate reproducibility

Detailed Results

Gradient Shattering

Thermometer Coding, [Buckman, 2018]

Input Transformation, [Guo, 2018]

Stochastic Gradients

Stochastic Activation Pruning, [Dhillon, 2018]

Mitigation Through Randomization, [Xie, 2018]

Vanishing and Exploding Gradients

Pixel Defend, [Song, 2018]

Defense-GAN, [Samangouei, 2018]

Conclusion

Critique

Other Sources

References

- [Madry, 2018] Madry, A., Makelov, A., Schmidt, L., Tsipras, D. and Vladu, A., 2017. Towards deep learning models resistant to adversarial attacks. arXiv preprint arXiv:1706.06083.

- [Buckman, 2018] Buckman, J., Roy, A., Raffel, C. and Goodfellow, I., 2018. Thermometer encoding: One hot way to resist adversarial examples.

- [Guo, 2018] Guo, C., Rana, M., Cisse, M. and van der Maaten, L., 2017. Countering adversarial images using input transformations. arXiv preprint arXiv:1711.00117.

- [Xie, 2018] Xie, C., Wang, J., Zhang, Z., Ren, Z. and Yuille, A., 2017. Mitigating adversarial effects through randomization. arXiv preprint arXiv:1711.01991.

- [Song, 2018] Song, Y., Kim, T., Nowozin, S., Ermon, S. and Kushman, N., 2017. PixelDefend: Leveraging generative models to understand and defend against adversarial examples. arXiv preprint arXiv:1710.10766.