Obfuscated Gradients Give a False Sense of Security: Circumventing Defenses to Adversarial Examples

Revision as of 12:10, 15 November 2018

Introduction

Over the past few years, neural network models have been the source of major breakthroughs in a variety of computer vision problems. However, these networks have been shown to be susceptible to adversarial attacks. In these attacks, small changes that are imperceptible to humans are made to correctly classified images, causing the models to misclassify them with high confidence. These attacks pose a major threat that needs to be addressed before these systems can be deployed on a large scale, especially in safety-critical scenarios.

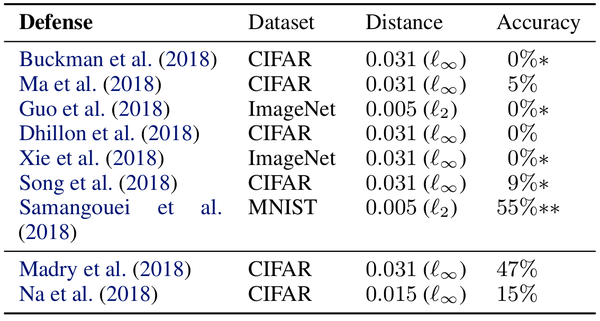

The seriousness of this threat has generated major interest in both designing such attacks and defending against them. In this paper, the authors identify a common technique employed by several recently proposed defenses and design a set of attacks that can be used to overcome them. This technique, gradient masking, is so prevalent that 7 out of the 8 defenses proposed at the ICLR 2018 conference employed it. The authors were able to circumvent the proposed defenses, bringing the accuracy of the defended models down to below 10%.

Methodology

The paper assumes a lot of familiarity with the adversarial attack literature. The section below briefly explains some key concepts.

Background

Adversarial Images Mathematically

Given an image [math]\displaystyle{ x }[/math] and a classifier [math]\displaystyle{ f(x) }[/math], an adversarial image [math]\displaystyle{ x' }[/math] satisfies two properties:

- [math]\displaystyle{ D(x,x') \lt \epsilon }[/math]

- [math]\displaystyle{ c(x') \neq c^*(x) }[/math]

Here, [math]\displaystyle{ D }[/math] is some distance metric, [math]\displaystyle{ \epsilon }[/math] is a small constant, [math]\displaystyle{ c(x') }[/math] is the output class predicted by the model, and [math]\displaystyle{ c^*(x) }[/math] is the true class for input [math]\displaystyle{ x }[/math]. In words, the adversarial image is a small distance from the original image, but the classifier classifies it incorrectly.
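The two conditions can be checked directly in code. The following is a minimal sketch, assuming the distance [math]\displaystyle{ D }[/math] is the L-infinity norm (one common choice; the definition above leaves the metric open). The names `linf_distance`, `is_adversarial` and the toy classifier `toy_predict` are hypothetical and used only for illustration.

```python
def linf_distance(x, x_adv):
    """L-infinity distance: the largest change made to any single pixel."""
    return max(abs(a - b) for a, b in zip(x, x_adv))


def is_adversarial(x, x_adv, true_class, predict, eps):
    """Check both conditions: D(x, x') < eps and c(x') != c*(x)."""
    close_enough = linf_distance(x, x_adv) < eps
    misclassified = predict(x_adv) != true_class
    return close_enough and misclassified


def toy_predict(x):
    """Hypothetical stand-in classifier: class 1 if the pixel sum exceeds 1."""
    return 1 if sum(x) > 1.0 else 0


x = [0.30, 0.30, 0.30]      # pixel sum 0.9, classified as class 0
x_adv = [0.35, 0.35, 0.35]  # every pixel moved by 0.05, pixel sum above 1

result = is_adversarial(x, x_adv, true_class=0, predict=toy_predict, eps=0.1)
```

With a tighter budget such as `eps=0.01`, the same `x_adv` no longer qualifies, because the perturbation is larger than the allowed distance.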

Adversarial Attacks Terminology

- Adversarial attacks can be either black or white-box. In black box attacks, the attacker has access to the network output only, while white-box attackers have full access to the network, including its gradients, architecture and weights. This makes white-box attackers much more powerful. Given access to gradients, white-box attacks use back propagation to modify inputs (as opposed to the weights) with respect to the loss function.

- In untargeted attacks, the objective is to maximize the loss of the true class, [math]\displaystyle{ x'=x \mathbf{+} \lambda(sign(\nabla_xL(x,c^*(x)))) }[/math]. In targeted attacks, the objective is to minimize the loss for a target class [math]\displaystyle{ c^t(x) }[/math] that is different from the true class, [math]\displaystyle{ x'=x \mathbf{-} \lambda(sign(\nabla_xL(x,c^t(x)))) }[/math]. Here, [math]\displaystyle{ \nabla_xL() }[/math] is the gradient of the loss function with respect to the input, [math]\displaystyle{ \lambda }[/math] is a small gradient step and [math]\displaystyle{ sign() }[/math] is the sign of the gradient.

- An attacker may be allowed to use a single step of back-propagation (single-step attacks) or multiple steps (iterative attacks). Iterative attackers can generate more powerful adversarial images. Iterative attacks are typically bounded by a distance measure, so that the accumulated perturbation stays within [math]\displaystyle{ \epsilon }[/math] of the original image.

In this paper, the authors focus on the more difficult setting: white-box iterative attacks, both targeted and untargeted.
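The update rules above can be sketched on a toy model. The example below assumes a two-class logistic-regression classifier with hand-picked weights `W` and bias `B` (hypothetical values, not from the paper), for which the gradient of the cross-entropy loss with respect to the input has the closed form [math]\displaystyle{ (p - y)w }[/math]. The iterative variant projects back into an L-infinity ball of radius `eps` after each step, which is one common way to bound the attack.

```python
import math

# Hypothetical toy classifier: p(class 1 | x) = sigmoid(W . x + B)
W = [3.0, -2.0, 1.0]
B = -0.5


def prob_class1(x):
    z = sum(w * xi for w, xi in zip(W, x)) + B
    return 1.0 / (1.0 + math.exp(-z))


def loss_grad_x(x, label):
    """Gradient of the cross-entropy loss w.r.t. the input: (p - y) * W."""
    p = prob_class1(x)
    return [(p - label) * w for w in W]


def sign(v):
    return (v > 0) - (v < 0)


def untargeted_step(x, true_label, lam):
    """x' = x + lam * sign(grad of loss for the true class)."""
    g = loss_grad_x(x, true_label)
    return [xi + lam * sign(gi) for xi, gi in zip(x, g)]


def targeted_step(x, target_label, lam):
    """x' = x - lam * sign(grad of loss for the target class)."""
    g = loss_grad_x(x, target_label)
    return [xi - lam * sign(gi) for xi, gi in zip(x, g)]


def iterative_untargeted(x, true_label, lam, eps, steps):
    """Repeat the single step, keeping x' within eps of x in L-infinity."""
    x_adv = list(x)
    for _ in range(steps):
        x_adv = untargeted_step(x_adv, true_label, lam)
        x_adv = [min(max(a, xi - eps), xi + eps) for a, xi in zip(x_adv, x)]
    return x_adv


x = [0.7, 0.25, 0.4]  # classified as class 1 by the toy model
x_adv = iterative_untargeted(x, true_label=1, lam=0.1, eps=0.3, steps=5)
```

In this two-class toy setting, a targeted step towards class 0 coincides with an untargeted step away from class 1. With more than two classes the two updates generally differ.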

Obfuscated Gradients

As gradients are used in the generation of white-box adversarial images, many defense strategies have focused on methods that mask gradients. If gradients are masked, they cannot be followed to generate adversarial images. The authors argue against this general approach by showing that it can be easily circumvented. To emphasize their point, they looked at white-box defenses proposed in ICLR 2018. Three types of gradient masking techniques were found:

- Shattered gradients: Non-differentiable operations are introduced into the model.

- Stochastic gradients: A stochastic process is added into the model at test time.

- Vanishing/exploding gradients: Very deep neural networks or those with recurrent connections are used. Because of the vanishing or exploding gradient problem common in these deep networks, the effective gradients at the input are either too small or too unstable to be useful.
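To make the first category concrete, the sketch below shows how a non-differentiable preprocessing step hides gradient information. It assumes a hypothetical defense that quantizes each pixel to a few discrete levels before a linear scoring function; `quantize`, `score` and the weights `W` are illustrative and not taken from any specific defense in the paper. Gradients are estimated with central finite differences.

```python
W = [3.0, -2.0, 1.0]  # hypothetical weights of a linear scoring function


def quantize(x, levels=4):
    """Round each pixel in [0, 1] to the nearest of `levels` evenly spaced values."""
    return [round(xi * (levels - 1)) / (levels - 1) for xi in x]


def score(x):
    return sum(w * xi for w, xi in zip(W, x))


def defended_score(x):
    """Same model, but the input passes through the quantizer first."""
    return score(quantize(x))


def finite_diff_grad(f, x, h=1e-4):
    """Central finite-difference estimate of the gradient of f at x."""
    grads = []
    for i in range(len(x)):
        x_plus = list(x)
        x_plus[i] += h
        x_minus = list(x)
        x_minus[i] -= h
        grads.append((f(x_plus) - f(x_minus)) / (2 * h))
    return grads


x = [0.40, 0.20, 0.90]
grad_plain = finite_diff_grad(score, x)              # close to W
grad_defended = finite_diff_grad(defended_score, x)  # zero in every coordinate
```

The quantizer is piecewise constant, so a small change in any pixel leaves the output unchanged and the estimated gradient is zero. The model is no less vulnerable; the attacker simply receives no useful signal from the gradient.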

To circumvent these gradient masking techniques, the authors propose:

- Backward Pass Differentiable Approximation (BPDA): For defenses that introduce non-differentiable components, the authors replace these components with a differentiable approximation on the backward pass, while the forward pass still uses the original components. In a white-box setting, the attacker has full access to any added non-differentiable transformation and can find such an approximation.

- Expectation over Transformation (EOT): For defenses that add some form of test-time randomness, the authors propose to use the Expectation over Transformation technique in the backward pass. Rather than following a single sampled gradient at every step, several gradients are sampled and the step is taken in their average direction. This counteracts the stochastic misdirection introduced by any individual gradient sample.

- Re-parameterize the exploration space: For very deep networks that rely on vanishing or exploding gradients, the authors propose to re-parameterize the input and search over a range where the gradient does not explode or vanish.
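The first two techniques can be sketched on a toy logistic-regression model. This is an illustration under stated assumptions, not the authors' implementation: the weights `W` and bias `B`, the quantizing defense `quantize`, the noise-adding defense `randomized_defense` and the helper `attack` are all hypothetical. For BPDA, the quantizer is approximated by the identity function on the backward pass, which is reasonable when the defense output stays close to its input. For EOT, the gradient is averaged over random draws of the defense.

```python
import math
import random

W = [3.0, -2.0, 1.0]  # hypothetical weights
B = -0.5              # hypothetical bias


def prob_class1(x):
    z = sum(w * xi for w, xi in zip(W, x)) + B
    return 1.0 / (1.0 + math.exp(-z))


def loss_grad(x, label):
    """Cross-entropy gradient w.r.t. the model input: (p - y) * W."""
    p = prob_class1(x)
    return [(p - label) * w for w in W]


def quantize(x, levels=4):
    """Non-differentiable defense: snap pixels to evenly spaced levels."""
    return [round(xi * (levels - 1)) / (levels - 1) for xi in x]


def bpda_grad(x, label):
    """Forward pass runs the real quantizer; backward pass treats it as identity."""
    return loss_grad(quantize(x), label)


def randomized_defense(x, rng, scale=0.5):
    """Stochastic defense: add fresh Gaussian noise at every query."""
    return [xi + rng.gauss(0.0, scale) for xi in x]


def eot_grad(x, label, rng, samples=200):
    """Average the gradient over many random draws of the defense."""
    total = [0.0] * len(x)
    for _ in range(samples):
        g = loss_grad(randomized_defense(x, rng), label)
        total = [t + gi for t, gi in zip(total, g)]
    return [t / samples for t in total]


def sign(v):
    return (v > 0) - (v < 0)


def attack(x, label, grad_fn, lam=0.05, eps=0.3, steps=10):
    """Iterative untargeted attack using whichever gradient estimate is supplied."""
    x_adv = list(x)
    for _ in range(steps):
        g = grad_fn(x_adv, label)
        x_adv = [xi + lam * sign(gi) for xi, gi in zip(x_adv, g)]
        # Stay within eps of the original image and inside the pixel range [0, 1]
        x_adv = [min(max(a, xi - eps), xi + eps) for a, xi in zip(x_adv, x)]
        x_adv = [min(max(a, 0.0), 1.0) for a in x_adv]
    return x_adv


x = [0.7, 0.25, 0.4]
x_bpda = attack(x, 1, bpda_grad)

rng = random.Random(0)
x_eot = attack(x, 1, lambda xi, y: eot_grad(xi, y, rng))
```

Because the toy model is linear, every sampled gradient already has the same sign, so averaging is not strictly needed here; the sketch only shows the mechanics. For a non-linear network, individual samples can point in misleading directions, which is the case EOT is meant to handle.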

Summary Results

How to check for Obfuscated Gradients

The technique that worked - [Madry, 2018]

Detailed Results

Conclusion

Critique

References

[Madry, 2018] Madry, A., Makelov, A., Schmidt, L., Tsipras, D. and Vladu, A., 2017. Towards deep learning models resistant to adversarial attacks. arXiv preprint arXiv:1706.06083.