Imagination-Augmented Agents for Deep Reinforcement Learning: Difference between revisions

Revision as of 21:32, 8 November 2017

Introduction

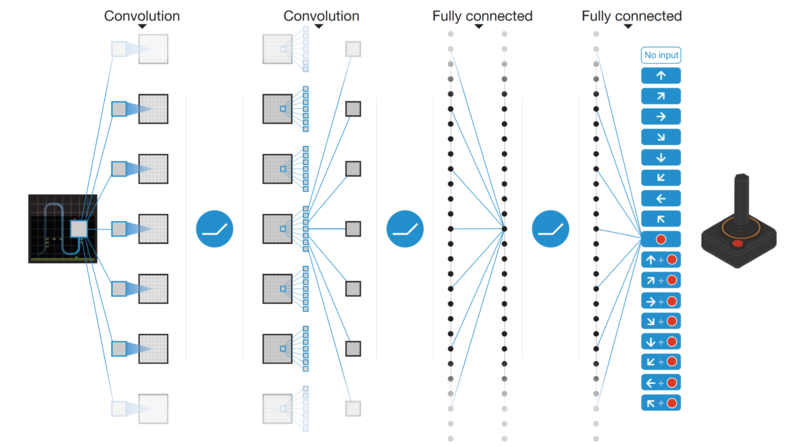

An interesting research area in Reinforcement Learning is developing AI for playing video games. Before Deep Learning, AI for video games was hand-coded, based on Monte-Carlo Tree Search and pre-set rules. In recent research, Deep Reinforcement Learning has shown success in playing video games such as Atari 2600 games. Specifically, the method (Figure 1) is called Deep Q-Network (DQN), a deep Q-learning method that learns the optimal actions from current observations (raw pixels). However, there are some complex games that DQN fails to learn: some games require solving a sub-problem without explicit reward, or contain irreversible states, where actions can be catastrophic. A typical example of these games is Sokoban (Wikipedia). Even human players need planning and inference to play it. This kind of game poses a challenge to RL.

In Reinforcement Learning, algorithms can be divided into two categories: model-free and model-based. DQN, mentioned above (Figure 1), is a model-free method. It takes raw pixels as input and maps them to values or actions. One drawback is that large amounts of training data are required. In addition, the learned policies do not generalize to new tasks in the same environment. A model-based method tries to build a model of the environment. By querying the model, agents can avoid irreversible, poor decisions. Because the model approximates the environment, it can enable better generalization across states. However, this approach has only shown success in limited settings, where an exact transition model is given or the domain is simple. In complex environments, model-based methods suffer from model errors introduced by function approximation. Currently, there is no model-based method that is robust against such imperfections.

In this paper, the authors introduce a novel deep reinforcement learning architecture called Imagination-Augmented Agents (I2As). As the name suggests, this method enables agents to learn to interpret predictions from a learned environment model in order to construct implicit plans. It combines model-free and model-based aspects. The advantage of this method is that it learns end-to-end to extract information from model simulations, without making any assumptions about the structure or the accuracy of the environment model. As shown in the results, this method outperforms the model-free baseline in the games Sokoban and MiniPacman. In addition, the experiments show that I2A is able to successfully use imperfect models.

Motivation

Although the structure of this method is complex, the motivation is intuitive: since the agent suffers from irreversible decisions, trying actions out in simulated states may be helpful. To reduce the expensive search of traditional MCTS methods, decisions from a policy network are used to cut down the number of search steps. To keep context information, rollout results are encoded by an LSTM encoder. The final output combines the results of the model-free network and the model-based network.

Related Work

Several works try to apply deep learning to model-based reinforcement learning. The popular approach is to learn a neural network model of the environment and use it in classical planning algorithms. These works cannot handle the mismatch between the learned model and the ground truth. Liu et al. (2017) use context information from trajectories, but in the setting of imitation learning.

To deal with imperfect models, Deisenroth and Rasmussen (2011) try to capture model uncertainty by applying computationally expensive Gaussian Process models.

Similar ideas can be found in a study by Hamrick et al. (2017): they present a neural network that queries expert models, but focus only on meta-control for continuous contextual bandit problems. Pascanu et al. (2017) extend this work by focusing on explicit planning in sequential environments.

Approach

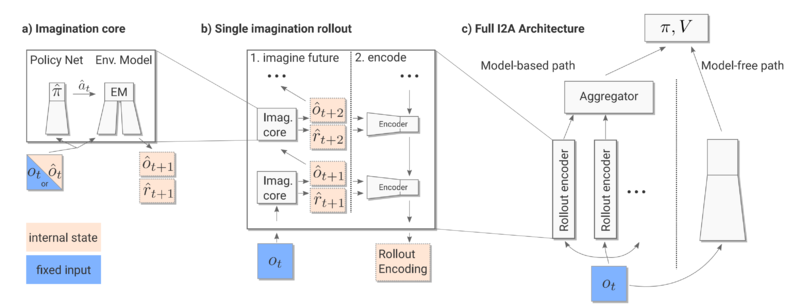

The architecture of I2A is summarized in Figure 2.

The observation $O_t$ (Figure 2 right) is fed into two paths. The model-free path is just a common DQN, which predicts the best action given $O_t$, whereas the model-based path performs rollouts. The aggregator combines the $n$ encoded rollout outputs ($n$ equals the number of actions in the action space) and forwards the result to the next layer. Together, the two paths are used to generate a policy function $\pi$ that outputs an action. In each rollout step, the imagination core is used to predict the future state and reward.

Imagination Core

The imagination core (Figure 2 left) is the key component of the model-based path. It consists of two parts: the environment model and the rollout policy. The former is an approximation of the environment, and the latter is used to simulate imagined trajectories, which are interpreted by a neural network and provided as additional context to a policy network.

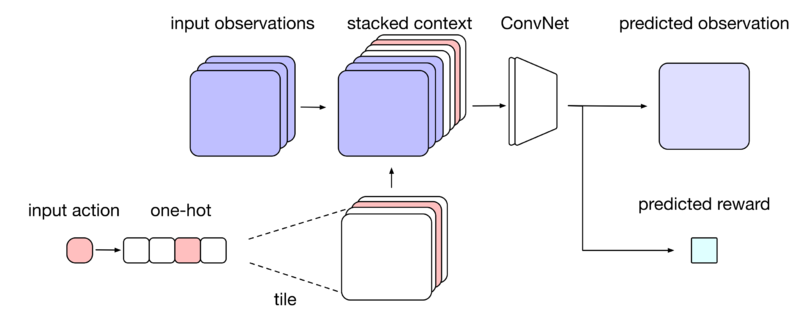

environment model

In order to augment agents with imagination, the method relies on environment models that, given current information, can be queried to make predictions about the future. In this work, the environment model is built from action-conditional next-step predictors, which take the current observation and the current action as input and predict the next observation and the next reward (Figure 3).
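The interface of such a next-step predictor can be sketched as follows. This is only an illustration: the class `ToyEnvModel`, the helper `one_hot_planes`, the random linear weights, and the choice to append the action as one-hot planes are all assumptions made for the example, not the network described in the paper.

```python
import numpy as np

def one_hot_planes(action, num_actions, height, width):
    """Broadcast a one-hot action into (num_actions, H, W) planes."""
    planes = np.zeros((num_actions, height, width), dtype=np.float32)
    planes[action] = 1.0
    return planes

class ToyEnvModel:
    """Hypothetical stand-in for a learned action-conditional predictor."""

    def __init__(self, channels, num_actions, seed=0):
        rng = np.random.default_rng(seed)
        in_channels = channels + num_actions
        self.num_actions = num_actions
        # Untrained weights; a real model would learn these from data.
        self.w_obs = rng.normal(scale=0.1, size=(channels, in_channels))
        self.w_rew = rng.normal(scale=0.1, size=(in_channels,))

    def predict(self, obs, action):
        """Map (observation, action) to (next observation, next reward)."""
        _, h, w = obs.shape
        x = np.concatenate(
            [obs, one_hot_planes(action, self.num_actions, h, w)], axis=0
        )
        # Per-pixel channel mixing, i.e. a 1x1 convolution.
        next_obs = np.einsum("oc,chw->ohw", self.w_obs, x)
        reward = float(self.w_rew @ x.mean(axis=(1, 2)))
        return next_obs, reward

model = ToyEnvModel(channels=3, num_actions=5)
next_obs, reward = model.predict(np.zeros((3, 4, 4)), action=2)
```

The point of the sketch is the signature: one observation and one action go in, one predicted observation and one predicted reward come out, so the model can be called repeatedly on its own output.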

rollout policy

The rollout process generates the simulated trajectories. In this work, a rollout is performed for each possible action in the environment.

A rollout policy $\hat \pi$ is a function that takes the current observation $O$ and outputs an action $a$ that potentially leads to maximal reward. In this architecture, the rollout policy can be a DQN network. In the experiments, the rollout policy $\hat \pi$ is broadcast and shared. After experimenting with different types of rollout policies (random, pre-trained), the authors found that an efficient strategy is to distill the agent's policy into a model-free policy: a small model-free network $\hat \pi(O_t)$ is created, and a distillation term is added to the total loss.
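The form of the distillation term is not spelled out here. A minimal sketch, assuming it is a cross-entropy between the agent's action distribution and the rollout policy's distribution at the same observation, could look like this. The function name `distillation_loss` and the `weight` parameter are hypothetical.

```python
import math

def distillation_loss(pi, pi_hat, weight=1.0, eps=1e-12):
    """Cross-entropy between the agent policy pi and rollout policy pi_hat.

    Both arguments are action-probability lists for one observation.
    Assumed form: -weight * sum_a pi(a) * log(pi_hat(a)).
    """
    return -weight * sum(p * math.log(q + eps) for p, q in zip(pi, pi_hat))

agent_policy = [0.7, 0.2, 0.1]
close_rollout_policy = [0.6, 0.3, 0.1]
far_rollout_policy = [0.1, 0.2, 0.7]

loss_close = distillation_loss(agent_policy, close_rollout_policy)
loss_far = distillation_loss(agent_policy, far_rollout_policy)
```

Under this assumption the loss is smaller when the rollout policy is close to the agent's policy, so minimizing it pulls the small network $\hat \pi$ toward the behaviour of the full agent.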

Together, as the imagination core, these two parts produce $n$ trajectories $\hat \tau_1,...,\hat \tau_n$. Each imagined trajectory $\hat \tau$ is a sequence of features $(\hat f_{t+1},...,\hat f_{t+\tau})$, where $t$ is the current time, $\tau$ is the length of the rollout, and $\hat f_{t+i}$ is the output of the environment model (the predicted observation and reward). To remain robust to model imperfections, the architecture does not assume that the learned model is perfect, so the output does not depend only on the predicted reward.
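The rollout procedure can be sketched in a few lines. The sketch assumes that rollout $i$ starts with action $i$ and then follows the rollout policy; `imagine_rollouts`, `toy_model`, and `toy_policy` are hypothetical names, and the one-dimensional chain is a toy stand-in for a real game.

```python
def imagine_rollouts(env_model, rollout_policy, obs, num_actions, length):
    """Produce one imagined trajectory per possible first action."""
    trajectories = []
    for first_action in range(num_actions):
        features = []
        current, action = obs, first_action
        for _ in range(length):
            # Query the model, store the predicted (observation, reward).
            current, reward = env_model(current, action)
            features.append((current, reward))
            # Later actions come from the rollout policy.
            action = rollout_policy(current)
        trajectories.append(features)
    return trajectories

def toy_model(position, action):
    """Toy chain: action 1 moves right, action 0 moves left; goal at 3."""
    next_position = position + (1 if action == 1 else -1)
    return next_position, (1.0 if next_position == 3 else 0.0)

def toy_policy(position):
    """Toy rollout policy that always moves right."""
    return 1

rollouts = imagine_rollouts(toy_model, toy_policy, obs=0, num_actions=2, length=3)
```

Here `rollouts[1]` reaches the goal state on its last step, while `rollouts[0]` loses a step by first moving left; the agent sees both sequences rather than only the summed reward.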

Trajectories Encoder

To keep the sequence information in the trajectories, the architecture uses a rollout encoder $\varepsilon$ that processes the imagined rollout as a whole and learns to interpret it (Figure 2 middle). Each trajectory is encoded as a rollout embedding $e_i=\varepsilon(\hat \tau_i)$. Then, the aggregator $A$ combines the rollout embeddings into a single imagination code $c_{ia}=A(e_1,...,e_n)$. In the experiments, the encoder is an LSTM that takes the predicted output of the environment model as input. The authors observe that the order in which the sequence $\hat f_{t+1}$ to $\hat f_{t+\tau}$ is fed to the encoder has relatively little impact on performance. The encoder mimics Bellman-type backup operations in DQN.
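The encoder and aggregator can be illustrated with a small NumPy sketch. It assumes that each feature $\hat f$ has already been flattened into a vector and that the aggregator simply concatenates the embeddings; `TinyLSTMEncoder` and `aggregate` are hypothetical names, and the weights are random rather than trained.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class TinyLSTMEncoder:
    """Minimal single-layer LSTM that encodes one trajectory."""

    def __init__(self, feature_dim, hidden_dim, seed=0):
        rng = np.random.default_rng(seed)
        self.hidden_dim = hidden_dim
        self.W = rng.normal(
            scale=0.1, size=(4 * hidden_dim, feature_dim + hidden_dim)
        )
        self.b = np.zeros(4 * hidden_dim)

    def encode(self, trajectory):
        """Return the final hidden state as the rollout embedding e_i."""
        h = np.zeros(self.hidden_dim)
        c = np.zeros(self.hidden_dim)
        for feature in trajectory:
            z = self.W @ np.concatenate([feature, h]) + self.b
            i_gate, f_gate, o_gate, g = np.split(z, 4)
            c = sigmoid(f_gate) * c + sigmoid(i_gate) * np.tanh(g)
            h = sigmoid(o_gate) * np.tanh(c)
        return h

def aggregate(embeddings):
    """Assumed aggregator A: concatenate all rollout embeddings."""
    return np.concatenate(embeddings)

rng = np.random.default_rng(1)
# 4 imagined trajectories, each with 3 steps of 6-dimensional features.
trajectories = [rng.normal(size=(3, 6)) for _ in range(4)]
encoder = TinyLSTMEncoder(feature_dim=6, hidden_dim=8)
imagination_code = aggregate([encoder.encode(t) for t in trajectories])
```

With four rollouts and an 8-dimensional hidden state, the imagination code has 32 entries. Reversing each trajectory before encoding would be a one-line change (`trajectory[::-1]`).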

Model-Free Path

The model-free path contains a network that takes only the current observation as input and generates the potentially optimal action. This network can be the same as the one in the imagination core.

In conclusion, I2A learns to combine information from the two paths, and without the model-based path, I2A simply reduces to a standard model-free network (such as A3C). The imperfect approximation results in a rollout policy with higher entropy, potentially striking a balance between exploration and exploitation.
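How the two paths might be combined can be sketched as below. The sketch assumes the imagination code and the model-free features are concatenated and passed through a single linear layer followed by a softmax; `i2a_policy` and all shapes are hypothetical, and the actual policy head may differ.

```python
import numpy as np

def softmax(logits):
    shifted = np.exp(logits - logits.max())
    return shifted / shifted.sum()

def i2a_policy(imagination_code, model_free_features, W, b):
    """Combine both paths and return action probabilities."""
    joint = np.concatenate([imagination_code, model_free_features])
    return softmax(W @ joint + b)

rng = np.random.default_rng(0)
num_actions = 5
imagination_code = rng.normal(size=32)     # from the model-based path
model_free_features = rng.normal(size=16)  # from the model-free path
W = rng.normal(scale=0.1, size=(num_actions, 32 + 16))
b = np.zeros(num_actions)

action_probs = i2a_policy(imagination_code, model_free_features, W, b)
```

If the imagination code is replaced by a constant, the output depends only on the model-free features, which matches the observation that the agent then behaves like a standard model-free network.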

Experiment

The following experiments were run on the games Sokoban and MiniPacman. All results are averages over the top three agents. As a pre-training strategy, the training data of I2A was pre-generated from trajectories of a partially trained standard model-free agent; this data is also counted towards the budget.

In Sokoban, to make sure the network does not simply "memorize" all states, the game procedurally generates a new level each episode. Out of 40 million levels generated, less than 0.7% were repeated. Therefore, a good agent should be able to solve unseen levels as well.

To show the advantage of I2A, the authors use a standard model-free architecture, consisting of several convolutional layers followed by two fully connected layers, as one of the baselines. In addition, to separate out the influence of the larger architecture in I2A, the authors use a copy-model agent that has the same architecture as I2A but whose environment model is replaced by an identity map. This agent is regarded as an I2A agent without imagination.