Generating Image Descriptions


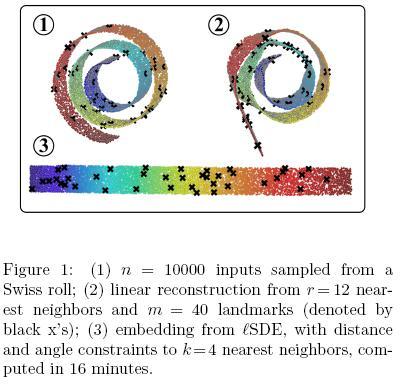

[[File:Fig1.jpg]]


Revision as of 17:06, 22 March 2018

This Page is Under Construction.

Introduction and Motivation

People often say that a picture is worth a thousand words, but with the tools currently available, NLP and image recognition algorithms cannot extract nearly that much meaningful text from an image. In contrast, a human who glances at an image can easily identify numerous details and relationships within a detailed visual scene. Despite many advances in image classification, object detection, and natural language processing, this task has proven challenging for existing visual recognition models. Most such models focus on labeling objects [CITATIONS] or on generating image descriptions from limited vocabularies and fixed sentence models [CITATIONS]. The authors of this paper believed that the assumptions behind these models were too restrictive, leaving them unable to generate the rich descriptions that the human mind is capable of.

In this paper, the restrictions of hard-coded word templates and sentence structures are removed. Working from this assumption-free ideal, Karpathy and Li create a model that can generate richer descriptions drawn from a wider vocabulary. Because directly relevant training data for this task was lacking, the authors leveraged the large quantity of images with detailed captions available online. Although these images carry no explicit information about image region labels or relationships between regions, each caption can be treated as a set of weak labels in which contiguous segments of words correspond to some particular, but unknown, location in the image. By inferring the alignment between these caption segments and image regions, the authors build a generative model that can produce detailed descriptions, as seen in Figure 1.
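
The weak-label idea can be illustrated with a toy sketch. It is not the authors' model: it assumes, purely for illustration, that words and image regions have already been embedded in a shared vector space, scores each word against each region with a dot product, and then merges neighbouring words that pick the same region into contiguous segments. The function names, vectors, and the two-region image are all hypothetical.

```python
from itertools import groupby


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def align_words_to_regions(word_vecs, region_vecs):
    """For each word vector, return the index of the best-scoring region."""
    return [
        max(range(len(region_vecs)), key=lambda r: dot(w, region_vecs[r]))
        for w in word_vecs
    ]


def contiguous_segments(words, assignment):
    """Merge neighbouring words that share a region into (region, phrase) pairs."""
    segments = []
    for region, group in groupby(zip(words, assignment), key=lambda p: p[1]):
        segments.append((region, " ".join(word for word, _ in group)))
    return segments


# Toy caption and hand-made embeddings (assumed values, not learned ones).
words = ["a", "dog", "on", "grass"]
word_vecs = [[1.0, 0.1], [0.9, 0.0], [0.1, 0.8], [0.0, 1.0]]
region_vecs = [[1.0, 0.0], [0.0, 1.0]]  # region 0 and region 1 of the image

assignment = align_words_to_regions(word_vecs, region_vecs)
segments = contiguous_segments(words, assignment)
print(segments)
```

In this toy setup the caption itself supplies the only supervision: no region is labeled in advance, and the segment-to-region correspondence is inferred from the scores alone.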