Dialog-based Language Learning


This page is a summary for the paper ''Dialog-based Language Learning''.

==Introduction==

One way humans learn language, especially when learning a second language or learning as students, is through communication and the feedback it elicits. However, most existing research in Natural Language Understanding has focused on supervised learning from fixed training sets of labeled data. This kind of supervision does not reflect how humans learn, since language is both learned by, and used for, communication. When humans act in dialogs (i.e., make speech utterances), the responses of other humans contain very rich feedback. This is perhaps most pronounced in a student/teacher scenario, where the teacher provides positive feedback for successful communication and corrections for unsuccessful communication.

This paper is about dialog-based language learning, where supervision is given naturally and implicitly in the response of the dialog partner during the conversation. It is a step towards the ultimate goal of developing an intelligent dialog agent that can learn while conducting conversations. Specifically, the paper explores whether machine learning models can be trained to learn from dialog.

===Contributions of this paper===

*Evaluated some baseline models on this data and compared them to standard supervised learning.

*Introduced a novel forward prediction model, in which the learner tries to predict the teacher’s replies to its actions; this yields promising results, even with no reward signal at all.

Code for this paper can be found on GitHub: https://github.com/facebook/MemNN/tree/master/DBLL

==Background on Memory Networks== | ==Background on Memory Networks== | ||

<br/> | <br/> | ||

[[File: | [[File:ershad_dialognetwork.png|center|center|thumb|Figure 2: end-to-end model]] | ||

<br/> | <br/> | ||

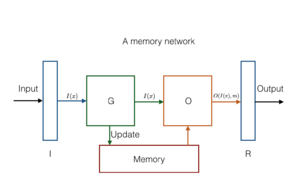

A memory network combines learning strategies from the machine learning literature with a memory component that can be read and written to.

$I$, $G$, $O$ and $R$ can all potentially be learned components and make use of any ideas from the existing machine learning literature.

In question answering systems, for example, the components may be instantiated as follows:

*$I$ can make use of standard pre-processing such as parsing, coreference, and entity resolution. It could also encode the input into an internal feature representation by converting the text to a sparse or dense feature vector.

*The simplest form of $G$ is to introduce a function $H$ that maps the internal feature representation produced by $I$ to an individual memory slot, and to update only the memory at $H(I(x))$.

*$O$ reads from memory and performs inference to deduce the set of relevant memories needed to produce a good response.

*$R$ produces the actual wording of the answer based on the memories found by $O$. For example, $R$ could be an RNN conditioned on the output of $O$.

When the components $I$, $G$, $O$, and $R$ are neural networks, the authors describe the resulting system as a <b>Memory Neural Network (MemNN)</b>. They build a MemNN for question answering (QA) problems, compare it to RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory RNNs), and find that it gives superior performance.
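To make the division of labour between the four components concrete, here is a deliberately tiny, hand-written sketch. Nothing in it is learned and it is not the MemNN implementation: the class name `ToyMemNN`, the word-overlap rule used for $O$, and the "last word of the supporting fact" rule used for $R$ are hypothetical stand-ins chosen only to show where each component sits.

```python
import re


class ToyMemNN:
    """Hypothetical, hand-written illustration of the I, G, O, R components.

    Nothing here is learned; each method is the simplest stand-in for the
    corresponding component (method names mirror the paper's notation).
    """

    def __init__(self):
        self.memory = []  # memory slots of (feature set, original sentence)

    def I(self, text):
        # Input feature map: lowercase bag-of-words as a set of tokens.
        return frozenset(re.findall(r"[a-z]+", text.lower()))

    def G(self, features, text):
        # Generalization: H(I(x)) simply picks the next free slot.
        self.memory.append((features, text))

    def O(self, query_features):
        # Output: return the stored sentence with the largest word overlap.
        return max(self.memory, key=lambda slot: len(slot[0] & query_features))[1]

    def R(self, supporting_fact):
        # Response: here, just the last word of the supporting fact.
        return re.findall(r"[a-z]+", supporting_fact.lower())[-1]

    def observe(self, text):
        self.G(self.I(text), text)

    def answer(self, question):
        return self.R(self.O(self.I(question)))


memnn = ToyMemNN()
memnn.observe("Mary went to the kitchen.")
memnn.observe("John moved to the garden.")
print(memnn.answer("Where is Mary?"))
```

In a real MemNN each of these hand-written rules would be replaced by a learned function, but the flow (featurize, store, retrieve, respond) stays the same.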


'''Usefulness of feedback in language learning:''' Social interaction and natural infant-directed conversations have been shown to be useful for language learning [2]. Several studies [3][4][5][6] have shown that feedback is especially useful in second language learning and learning by students.

'''Supervised learning from dialogs using neural models:''' Neural networks have been used for response generation and can be trained end to end on large quantities of unstructured Twitter conversations [7]. However, this does not incorporate feedback from the dialog partner during real-time conversation.

'''Reinforcement learning:''' Reinforcement learning work on dialogs [8][9] often considers reward as the feedback model rather than exploiting the dialog feedback per se. More specifically, reinforcement learning relies on a system of rewards, or what the authors of [8] call “trial-and-error”. The learning agent (in this case the language-learning agent) interacts with a dynamic environment (in this case through active dialog) and receives feedback in the form of positive or negative rewards. By setting the objective function to maximize the rewards, the model can be trained without explicit target responses. Such algorithms are not particularly efficient for training a dialog-based language learning model because there is no explicit, fixed criterion for what counts as a positive or negative reward. One possible way to define one is to specify what a successful completion of a dialog should be and use that as the objective function.

'''Forward prediction models:''' Forward models describe the causal relationship between actions and their consequences; their fundamental goal is to predict the consequences of an action. Although forward prediction models have been used in other applications, such as learning eye-tracking [10] and controlling robot arms [11] and vehicles [12], they had not previously been used for dialog.

==Dialog-based Supervision tasks== | ==Dialog-based Supervision tasks== | ||

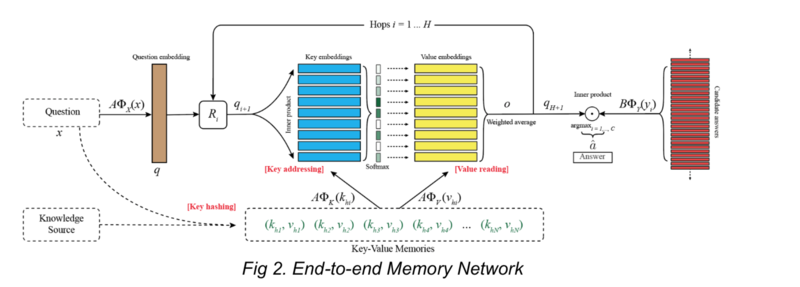

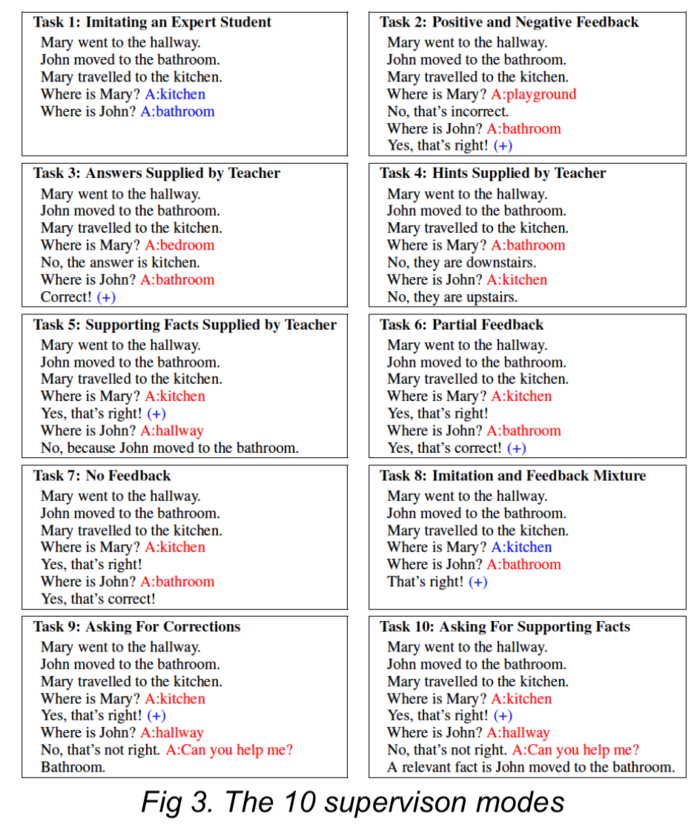

For testing their models, the authors chose two datasets (i) the single supporting fact problem from the bAbI datasets [13] which consists of short stories from a simulated world followed by questions; and (ii) the MovieQA dataset [14] which is a large-scale dataset (∼ 100k questions over ∼ 75k entities) based on questions with answers in the open movie database (OMDb) | For testing their models, the authors chose two datasets (i) the single supporting fact problem from the [http://fb.ai/babi bAbI] datasets [13] which consists of short stories from a simulated world followed by questions; and (ii) the MovieQA dataset [14] which is a large-scale dataset (∼ 100k questions over ∼ 75k entities) based on questions with answers in the open movie database (OMDb) | ||

However, since these datasets were not designed to model the supervision from dialogs, the authors modified them to create 10 supervision task types on these datasets(Fig 3). | However, since these datasets were not designed to model the supervision from dialogs, the authors modified them to create 10 supervision task types on these datasets(Fig 3). | ||


*'''Task 1: Imitating an Expert Student:''' The dialogs take place between a teacher and an expert student who gives semantically coherent answers. Hence, the task is for the learner to imitate that expert student and become an expert itself.

*'''Task 2: Positive and Negative Feedback:''' When the learner answers a question, the teacher replies with either positive or negative feedback. In the experiments, the subsequent responses are variants of “No, that’s incorrect” or “Yes, that’s right”. In the datasets, there are 6 templates for positive feedback and 6 templates for negative feedback, e.g. “Sorry, that’s not it.”, “Wrong”, etc. To distinguish the notion of positive from negative, an additional external reward signal that is not part of the text is also provided.

*'''Task 3: Answers Supplied by Teacher:''' The teacher gives positive and negative feedback as in Task 2; however, when the learner’s answer is incorrect, the teacher also responds with the correction. For example, if “where is Mary?” is answered with the incorrect answer “bedroom”, the teacher responds “No, the answer is kitchen”.

*'''Task 4: Hints Supplied by Teacher:''' The corrections provided by the teacher do not give the exact answer as in Task 3, but only a useful hint. This setting is meant to mimic the real-life occurrence of being given only partial information about what you did wrong.

*'''Task 5: Supporting Facts Supplied by Teacher:''' This explores another way of providing partial supervision for an incorrect answer. Here, the teacher gives a reason (explanation) why the answer is wrong by referring to a known fact that supports the true answer and that the incorrect answer may contradict.


<br/>

The authors constructed the ten supervision tasks for both datasets as follows: for each task, a fixed policy is used for answering questions, which gets questions correct with probability $π_{acc}$ (i.e. the chance of getting the red text correct in Figs. 3 and 4). Different learning algorithms can thus be compared for each task over different values of $π_{acc}$ (0.5, 0.1 and 0.01). In all cases, a training, validation and test set is provided. Note that because the policies are fixed, the experiments in this paper are not in a reinforcement learning setting.
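A minimal sketch of how such a fixed policy and teacher could generate Task 2 and Task 3 style dialogs is shown below. The function name `simulate_task`, the single feedback template per case, and the 0/1 reward encoding are assumptions for illustration only; the actual datasets use several templates per feedback type.

```python
import random


def simulate_task(questions, pi_acc, task=2, seed=0):
    """Simulate a fixed answering policy plus teacher feedback (hypothetical).

    questions: list of (question, correct_answer, candidate_answers).
    Returns a list of (question, learner_answer, teacher_reply, reward).
    """
    rng = random.Random(seed)
    dialogs = []
    for question, answer, candidates in questions:
        wrong = [c for c in candidates if c != answer]
        # The fixed policy answers correctly with probability pi_acc.
        if rng.random() < pi_acc or not wrong:
            reply = answer
        else:
            reply = rng.choice(wrong)
        if reply == answer:
            teacher, reward = "Yes, that's right.", 1
        elif task == 3:
            # Task 3: the teacher also supplies the correct answer.
            teacher, reward = f"No, the answer is {answer}.", 0
        else:
            # Task 2: plain negative feedback, no correction.
            teacher, reward = "No, that's incorrect.", 0
        dialogs.append((question, reply, teacher, reward))
    return dialogs


qs = [("Where is Mary?", "kitchen", ["kitchen", "bedroom", "garden"])] * 5
for turn in simulate_task(qs, pi_acc=0.5, task=3):
    print(turn)
```

Because the policy never changes during learning, the resulting logs are a fixed dataset, which is why the setup is not reinforcement learning.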

==Learning models== | ==Learning models== | ||

This work evaluates four possible learning strategies for each of the 10 tasks: imitation learning, reward-based imitation, forward prediction, and a combination of reward-based imitation and forward prediction | This work evaluates four possible learning strategies for each of the 10 tasks: imitation learning, reward-based imitation, forward prediction, and a combination of reward-based imitation and forward prediction | ||

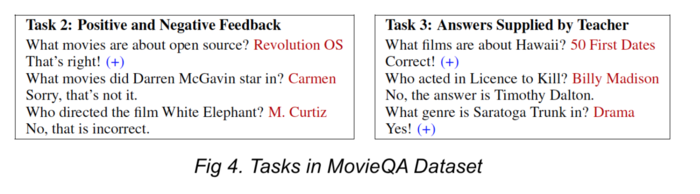

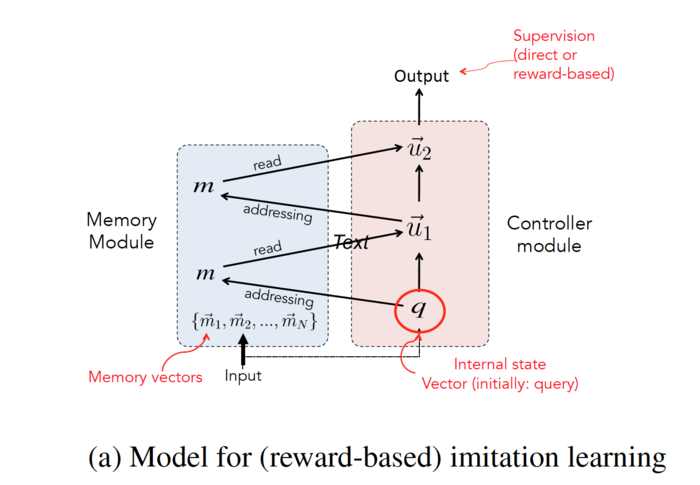

All of these approaches are evaluated with the same model architecture: an end-to-end memory network (MemN2N) [15], which has been used as a baseline model for exploring | All of these approaches are evaluated with the same model architecture: an end-to-end memory network (MemN2N) [15], which has been used as a baseline model for exploring different modes of learning. | ||

[[File:F5.png|center|700px]] | [[File:F5.png|center|700px]] | ||

<br/> | <br/> | ||

The input is the last utterance of the dialog, $x$, as well as a set of memories (context) (<math> c_1</math>, . . . , <math> c_n</math> ) which can encode both short-term memory, e.g. recent previous utterances and replies, and long-term memories, e.g. facts that could be useful for answering questions. The context inputs <math> c_i</math> are converted into vectors <math> m_i</math> via embeddings and are stored in the memory. The goal is to produce an output $\hat{a}$ by processing the input $x$ and using that to address and read from the memory, $m$, possibly multiple times, in order to form a coherent reply. In the figure the memory is read twice, which is termed multiple “hops” of attention. | The input is the last utterance of the dialog, $x$, as well as a set of memories (context) (<math> c_1</math>, . . . , <math> c_n</math> ) which can encode both short-term memory, e.g. recent previous utterances and replies, and long-term memories, e.g. facts that could be useful for answering questions. The context inputs <math> c_i</math> are converted into vectors <math> m_i</math> via embeddings and are stored in the memory. The goal is to produce an output $\hat{a}$ by processing the input $x$ and using that to address and read from the memory, $m$, possibly multiple times, in order to form a coherent reply. In the figure, the memory is read twice, which is termed multiple “hops” of attention. | ||

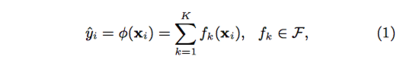

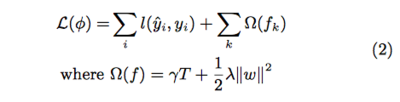

In the first step, the input $x$ is embedded using a matrix $A$ of size $d$ × $V$ where $d$ is the embedding dimension and $V$ is the size of the vocabulary, giving $q$ = $A$$x$, where the input $x$ is as a bag-of words vector. Each memory <math> c_i</math> is embedded using the same matrix, giving $m_i$ = $A$$c_i$ . The output of addressing and then reading from memory in the first hop is: | In the first step, the input $x$ is embedded using a matrix $A$ (typically using Word2Vec or GloVe) of size $d$ × $V$ where $d$ is the embedding dimension and $V$ is the size of the vocabulary, giving $q$ = $A$$x$, where the input $x$ is as a bag-of words vector. Each memory <math> c_i</math> is embedded using the same matrix, giving $m_i$ = $A$$c_i$ . The output of addressing and then reading from memory in the first hop is: | ||

[[File:eq1.png|center|400px]] | [[File:eq1.png|center|400px]] | ||
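The embedding and first-hop read can be sketched in plain Python as follows, assuming the standard MemN2N attention form ($p_i = \text{softmax}(q^\top m_i)$, $o = \sum_i p_i m_i$), which is what the equation image above is taken to show. The vocabulary and the $2 \times 4$ matrix $A$ are hand-set toy values; in the model, $A$ is learned during training.

```python
import math


def bag_of_words(tokens, vocab):
    # Count vector of length V over a fixed vocabulary.
    vec = [0.0] * len(vocab)
    for token in tokens:
        vec[vocab[token]] += 1.0
    return vec


def embed(A, bow):
    # A is a d x V matrix (list of d rows); returns the d-dim vector A @ bow.
    return [sum(a * b for a, b in zip(row, bow)) for row in A]


def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def memory_hop(q, memories):
    # p_i = softmax(q . m_i);  o = sum_i p_i * m_i
    p = softmax([sum(a * b for a, b in zip(q, m)) for m in memories])
    o = [sum(p_i * m[k] for p_i, m in zip(p, memories)) for k in range(len(q))]
    return p, o


vocab = {"mary": 0, "kitchen": 1, "john": 2, "garden": 3}
A = [[1.0, 1.0, 0.0, 0.0],   # toy d=2 embedding, hand-set for illustration
     [0.0, 0.0, 1.0, 1.0]]
q = embed(A, bag_of_words(["mary"], vocab))
memories = [embed(A, bag_of_words(s, vocab))
            for s in (["mary", "kitchen"], ["john", "garden"])]
p, o = memory_hop(q, memories)
print(p, o)
```

The memory about Mary receives most of the attention weight, so the read vector `o` is dominated by that memory's embedding.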


[[File:eq3.png|center|400px]]

where there are $C$ candidate answers in $y$. In the experiments, the candidate set $y$ consists of the actions that occur in the training set for the bAbI tasks, and for MovieQA it is the set of words retrieved from the KB (knowledge base).
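The final answer selection can be sketched as a softmax over dot products between the last controller state (called `q_final` here, a hypothetical name) and the embedding of each of the $C$ candidates. This assumes the usual MemN2N output layer; the intermediate hop-update equation is not reproduced in this summary.

```python
import math


def predict_answer(q_final, candidates):
    """Score each candidate embedding y_c by q_final . y_c, then softmax."""
    scores = [sum(a * b for a, b in zip(q_final, y)) for y in candidates]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    # The predicted answer a_hat is the highest-probability candidate.
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs


best, probs = predict_answer([1.0, 0.0], [[0.0, 1.0], [3.0, 0.0], [1.0, 0.0]])
print(best, probs)
```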

==Training strategies==

1. '''Imitation Learning'''

This approach involves simply imitating one of the speakers in observed dialogs. Examples arrive as $(x, c, a)$ triples, where $a$ is (assumed to be) a good response to the last utterance $x$ given context $c$. Here, the whole memory network model defined above is trained using stochastic gradient descent by minimizing a standard cross-entropy loss between $\hat{a}$ and the label $a$.
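As a minimal sketch of the imitation-learning objective, the functions below compute the softmax cross-entropy between the predicted distribution and the label $a$, and its gradient with respect to the candidate scores. Applying the update directly to a logit vector in `sgd_step` is a toy simplification; in the real model the gradient flows back into the memory network's parameters.

```python
import math


def cross_entropy(logits, label):
    # -log softmax(logits)[label], computed stably via log-sum-exp.
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[label]


def cross_entropy_grad(logits, label):
    # d loss / d logits = softmax(logits) - one_hot(label)
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total - (1.0 if i == label else 0.0) for i, e in enumerate(exps)]


def sgd_step(logits, label, lr=0.5):
    # One SGD update applied directly to the logits (toy stand-in for the
    # model parameters).
    g = cross_entropy_grad(logits, label)
    return [z - lr * gi for z, gi in zip(logits, g)]


logits = [0.0, 0.0, 0.0, 0.0]
print(cross_entropy(logits, 2), cross_entropy(sgd_step(logits, 2), 2))
```

The loss on the label drops after the update, which is all plain imitation learning asks for: it treats every observed response as correct.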

2. '''Reward-based Imitation'''

If some actions are poor choices, one does not want to repeat them; that is, they should not be treated as a supervised objective. Here, a positive reward is obtained only immediately after (some of) the correct actions, and is zero otherwise. Imitation learning is applied only to the rewarded actions; the rest of the actions are simply discarded from the training set. For more complex cases, such as actions leading to long-term changes and delayed rewards, reinforcement learning algorithms would be necessary; e.g., one could still use policy gradient to train the MemN2N, but applied to the model’s own policy.
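The data side of reward-based imitation is simply a filter. The sketch below assumes logged examples stored as hypothetical `(x, context, answer, reward)` tuples and keeps only those with positive reward as `(x, c, a)` training triples for the imitation objective.

```python
def reward_based_imitation_data(examples):
    """Keep only rewarded (x, c, a) triples; discard the rest.

    examples: iterable of (x, context, answer, reward) tuples.
    """
    return [(x, c, a) for x, c, a, reward in examples if reward > 0]


logged = [
    ("Where is Mary?", ["Mary went to the kitchen."], "kitchen", 1),
    ("Where is John?", ["John moved to the garden."], "bedroom", 0),
]
train_set = reward_based_imitation_data(logged)
print(train_set)
```

With a low $π_{acc}$ most logged answers are wrong and unrewarded, so this filter leaves very little training data, which is consistent with RBI degrading when rewards are sparse.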

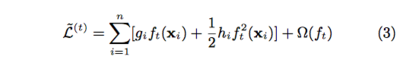

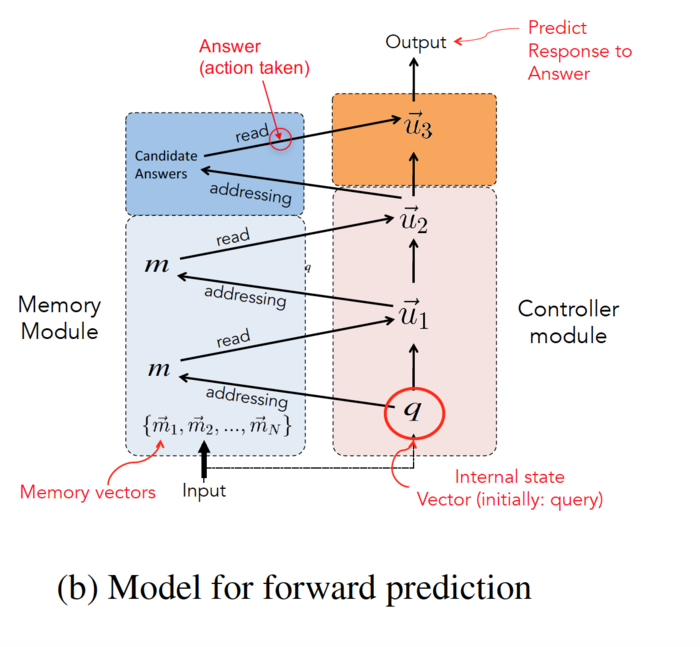

3. '''Forward Prediction''' | |||

The aim is, given an utterance $x$ from speaker 1 and an answer a by speaker 2 (i.e., the learner), to predict $x^{¯}$, the response to the answer from speaker 1. That is, in general to predict the changed state of the world after action $a$, which in this case involves the new utterance $x^{¯}$. | The aim is, given an utterance $x$ from speaker 1 and an answer a by speaker 2 (i.e., the learner), to predict $x^{¯}$, the response to the answer from speaker 1. That is, in general, to predict the changed state of the world after action $a$, which in this case involves the new utterance $x^{¯}$. | ||
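To see what forward-prediction training data looks like, the sketch below turns a dialog log into `(context, x, a, x_bar)` examples by finding every teacher question, learner answer, teacher reply triple. The speaker labels `"story"`, `"teacher"` and `"learner"` and the tuple layout are assumptions made for this example.

```python
def forward_prediction_examples(dialog):
    """Build forward-prediction examples from a dialog log (hypothetical format).

    dialog: list of (speaker, utterance) pairs in temporal order.
    Returns (context, x, a, x_bar) tuples, where x is a teacher utterance,
    a the learner's answer to it, and x_bar the teacher's reply to a.
    """
    examples = []
    for t in range(len(dialog) - 2):
        (s1, x), (s2, a), (s3, x_bar) = dialog[t:t + 3]
        if (s1, s2, s3) == ("teacher", "learner", "teacher"):
            # Everything said before x serves as the memory context.
            context = [utterance for _, utterance in dialog[:t]]
            examples.append((context, x, a, x_bar))
    return examples


dialog = [
    ("story", "Mary went to the kitchen."),
    ("teacher", "Where is Mary?"),
    ("learner", "bedroom"),
    ("teacher", "No, the answer is kitchen."),
]
print(forward_prediction_examples(dialog))
```

Note that no reward appears anywhere in these examples: the training target is the teacher's reply itself.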

[[File:F6.png|center|700px]]

[[File:eq4.png|center|550px]]

where $\beta^*$ is a $d$-dimensional vector, also learned, that represents in the output $o_3$ the action that was actually selected. The mechanism above gives the model a way to compare the most likely answers to $x$ with the given answer $a$. For example, if the given answer $a$ is incorrect and the model can assign a high $p_i$ to the correct answer, then the output $o_3$ will contain a small amount of $\beta^*$; conversely, $o_3$ has a large amount of $\beta^*$ if $a$ is correct. Thus, $o_3$ informs the model of the likely response $\bar{x}$ from the teacher. After obtaining $o_3$, the forward prediction is then computed as:
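The role of $\beta^*$ can be checked numerically. The sketch below assumes the equation image above has the form $o_3 = \sum_i p_i\,(y_i + \beta^* \cdot [a = y_i])$ with $p_i = \text{softmax}(q^\top y_i)$, which matches the description in the text; under that assumption, the amount of $\beta^*$ mixed into $o_3$ is exactly $p_a$, the probability the model itself assigns to the given answer. All vectors are toy values.

```python
import math


def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def forward_output(q, candidates, a_index, beta_star):
    """Assumed form: o_3 = sum_i p_i * (y_i + beta_star * [i == a_index]),
    with p_i = softmax(q . y_i)."""
    p = softmax([sum(a * b for a, b in zip(q, y)) for y in candidates])
    o3 = [0.0] * len(q)
    for i, (p_i, y) in enumerate(zip(p, candidates)):
        for k in range(len(q)):
            bonus = beta_star[k] if i == a_index else 0.0
            o3[k] += p_i * (y[k] + bonus)
    return p, o3


q = [5.0, 0.0]                 # model strongly prefers candidate 0
Y = [[1.0, 0.0], [0.0, 1.0]]
beta = [0.0, 1.0]
p, o_correct = forward_output(q, Y, 0, beta)   # learner gave candidate 0
_, o_wrong = forward_output(q, Y, 1, beta)     # learner gave candidate 1
_, o_none = forward_output(q, Y, 0, [0.0, 0.0])
print(o_correct[1] - o_none[1], o_wrong[1] - o_none[1])  # beta* share
```

When the given answer agrees with the model's own belief, $o_3$ carries almost all of $\beta^*$; when it disagrees, only a small amount, which is the signal the text describes.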


4. '''Reward-based Imitation + Forward Prediction'''

As reward-based imitation learning uses the architecture of Fig (a), and forward prediction uses the same architecture but with the additional layers of Fig (b), both strategies can be learned jointly. This is a powerful combination, as it makes use of the reward signal when available and of the dialog feedback when the reward signal is not available. In this approach, the authors share the weights across the two networks and perform gradient steps for both criteria. The motivation is that rewards should be used whenever they are available, so this approach combines the advantages of both the forward prediction and reward-based approaches.
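One simple way to express the joint objective is to sum the two criteria per example: the forward-prediction loss is always applied, while the reward-based imitation loss is applied only to rewarded actions. The summary states only that gradient steps are taken for both criteria with shared weights, so summing them (and the dictionary field names below) is an assumption of this sketch.

```python
import math


def nll(logits, target):
    # Softmax cross-entropy -log p(target), via a stable log-sum-exp.
    m = max(logits)
    return m + math.log(sum(math.exp(z - m) for z in logits)) - logits[target]


def joint_loss(batch):
    """Sum of FP and RBI losses over a batch of (hypothetical) example dicts."""
    total = 0.0
    for ex in batch:
        # Forward prediction: predict the teacher's reply; always available.
        total += nll(ex["reply_logits"], ex["reply_label"])
        # Reward-based imitation: only for actions that received a reward.
        if ex["reward"] > 0:
            total += nll(ex["answer_logits"], ex["answer_label"])
    return total


rewarded = {"reply_logits": [0.0, 0.0], "reply_label": 0,
            "answer_logits": [0.0, 0.0], "answer_label": 1, "reward": 1}
unrewarded = dict(rewarded, reward=0)
print(joint_loss([rewarded, unrewarded]))
```

An unrewarded example still contributes through the forward-prediction term, which is how the combination keeps learning when rewards are missing.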

==Experiments== | ==Experiments== | ||

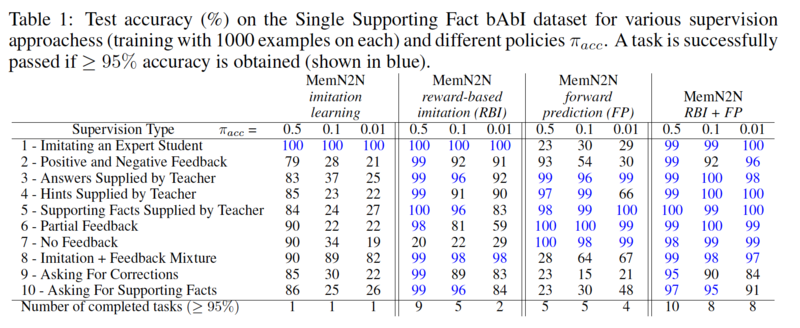

Experiments were conducted on the two test datasets - | Experiments were conducted on the two test datasets - bAbI and MovieQA. For each task, a fixed policy is considered for performing actions (answering questions) which gets questions correct with probability $π_{acc}$. This helps to compare the different training strategies described earlier over each task for different values of $π_{acc}$. Hyperparameters for all methods are optimized on the validation sets. | ||

<br/><br/> | <br/><br/> | ||

[[File:DB F7.png|center|800px]] | [[File:DB F7.png|center|800px]] | ||

<br/> | <br/> | ||

The following results are observed by the authors:

*Imitation learning, ignoring rewards, is a poor learning strategy when imitating inaccurate answers, e.g. for $π_{acc}$ < 0.5. When imitating an expert (Task 1), however, it is hard to beat.

*Reward-based imitation (RBI) performs better when rewards are available, particularly in Table 1, but also degrades when they are too sparse, e.g. for $π_{acc}$ = 0.01.

*Forward prediction (FP) is more robust and has stable performance at different levels of $π_{acc}$. However, as it only predicts answers implicitly and does not make use of rewards, it is outperformed by RBI on several tasks, notably Tasks 1 and 8 (because it cannot do supervised learning) and Task 2 (because it does not take advantage of positive rewards).

*FP makes use of dialog feedback in Tasks 3-5, whereas RBI does not. This explains why FP does better with useful feedback (Tasks 3-5) than without (Task 2), whereas RBI cannot.

*Supplying full answers (Task 3) is more useful than hints (Task 4), but hints still help FP more than plain yes/no answers without extra information (Task 2).

*When positive feedback is sometimes missing (Task 6), RBI suffers, especially in Table 1. FP does not, as it does not use this feedback.

*One of the most surprising results is that FP performs well overall even though it does not use rewards. This is particularly evident on Task 7 (no feedback), where RBI has no hope of succeeding as it has no positive examples. FP, on the other hand, learns adequately.

*Tasks 9 and 10 are harder for FP, as the question is not immediately before the feedback.

*Combining RBI and FP ameliorates the failings of each, yielding the best overall results.

One of the most interesting aspects of the results in this paper is that FP works at all without any rewards.

==Future work==

* Any reply in a dialog can be seen as feedback and should be useful for learning. Evaluate whether forward prediction, and the other approaches in this paper, also work in that more general setting.

* Develop further evaluation methodologies to test how the models presented here work in more complex settings, where actions lead to long-term changes in the environment and delayed rewards, i.e. extending to the reinforcement learning setting, and to full language generation.

* Investigate how dialog-based feedback could also be used as a medium to learn non-dialog-based skills, e.g. natural language dialog for completing visual or physical tasks.

* A paper under review for ICLR 2017, co-authored by this paper's author, further extends the forward prediction method [17]. It assigns a probability that the student will provide a random answer, which is claimed to allow the method to potentially discover correct answers. It also adds data balancing, where training is balanced across all the teacher responses, to ensure that one part of the distribution does not dominate during model learning.

==Critique==

The paper's abstract says there is no need for a reward, but feedback from the partner saying "yes" or "no" is itself a sort of reward. It is true that only a fixed policy is used during learning, but that arguably makes this type of learning a subset of reinforcement learning, which goes against the claim that this is not reinforcement learning.

Also, certain things are not clearly explained, particularly the details of the forward prediction model. It is not clear how the response to the answer is related to the learner's first input or to the answer. Hence it is not clear what the learner actually learns and encodes into the memory network. This also makes it impossible to say why this type of learning performs better than other approaches.

It seems difficult to extend this model to generate genuinely complex queries to the user (rather than predefined ones); how would this method handle multiple dialogue turns with complex language? Also, the forward prediction architecture seems interesting, but it can hardly be extended to multiple dialogue turns. On the other hand, reinforcement learning is appealing in dialogue: evaluating a value function implicitly learns a transition model and predicts future outcomes. Here, the authors say that this architecture avoids the need to define a reward because it can predict words such as "right" or "correct", but users do not always give feedback like that (especially in real-world dialogues). It would be worth comparing this method with reinforcement and imitation learning for dialogue management, which could eventually lead to more novel models.

The authors claim "Language Learning" but assess the model on question answering. The claims made are not supported by the results presented. While this method may serve as an approach to QA, it is certainly a stretch to call it language learning.

==References== | ==References== | ||

| Line 180: | Line 189: | ||

# J. Schatzmann, K. Weilhammer, M. Stuttle, and S. Young. A survey of statistical user simulation techniques for reinforcement-learning of dialogue management strategies. The knowledge engineering review, 21(02):97–126, 2006. | # J. Schatzmann, K. Weilhammer, M. Stuttle, and S. Young. A survey of statistical user simulation techniques for reinforcement-learning of dialogue management strategies. The knowledge engineering review, 21(02):97–126, 2006. | ||

# 9 memory networks for language understanding: https://www.youtube.com/watch?v=5ekMog_nhaQ | # 9 memory networks for language understanding: https://www.youtube.com/watch?v=5ekMog_nhaQ | ||

# Li, Jiwei; Miller, Alexander H.; Chopra, Sumit; Ranzato, Marc'Aurelio; Weston, Jason. "Dialogue Learning With Human-In-The-Loop". Review for ICLR 2017. | |||

Latest revision as of 13:59, 21 November 2021

This page is a summary of the paper Dialog-based Language Learning by Jason Weston (NIPS 2016) [1].

Introduction

One of the ways humans learn language, especially a second language or in a classroom setting, is through communication and the feedback it produces. However, most existing research in Natural Language Understanding has focused on supervised learning from fixed training sets of labeled data. This kind of supervision is not representative of how humans learn, where language is both learned by, and used for, communication. When humans act in dialogs (i.e., make speech utterances), the feedback contained in other humans’ responses is very rich in information. This is perhaps most pronounced in a student/teacher scenario, where the teacher provides positive feedback for successful communication and corrections for unsuccessful ones.

This paper is about dialog-based language learning, where supervision is given naturally and implicitly in the response of the dialog partner during the conversation. This paper is a step towards the ultimate goal of being able to develop an intelligent dialog agent that can learn while conducting conversations. Specifically, this paper explores whether we can train machine learning models to learn from dialog.

Contributions of this paper

- Introduces a set of tasks that model natural feedback from a teacher and hence assess the feasibility of dialog-based language learning.

- Evaluates some baseline models on this data and compares them to standard supervised learning.

- Introduces a novel forward prediction model, whereby the learner tries to predict the teacher’s replies to its actions, which yields promising results even with no reward signal at all.

Code for this paper can be found on GitHub: https://github.com/facebook/MemNN/tree/master/DBLL

Background on Memory Networks

A memory network combines learning strategies from the machine learning literature with a memory component that can be read and written to.

The high-level view of a memory network is as follows:

- There is a memory, $m$, an indexed array of objects (e.g. vectors or arrays of strings).

- An input feature map $I$, which converts the incoming input to the internal feature representation.

- A generalization component $G$ which updates old memories given the new input.

- An output feature map $O$, which produces a new output in the feature representation space given the new input and the current memory state.

- A response component $R$ which converts the output into the response format desired – for example, a textual response or an action.

$I$, $G$, $O$ and $R$ can all potentially be learned components and make use of any ideas from the existing machine learning literature.

In question answering systems, for example, the components may be instantiated as follows:

- $I$ can make use of standard pre-processing such as parsing, coreference, and entity resolution. It could also encode the input into an internal feature representation by converting from text to a sparse or dense feature vector.

- The simplest form of $G$ is to introduce a function $H$ which maps the internal feature representation produced by $I$ to an individual memory slot, and just updates the memory at $H(I(x))$.

- $O$ reads from memory and performs inference to deduce the set of relevant memories needed to produce a good response.

- $R$ would produce the actual wording of the answer based on the memories found by $O$. For example, $R$ could be an RNN conditioned on the output of $O$.
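To make the division of labour between the four components concrete, here is a deliberately tiny, non-learned Python sketch of the $I$, $G$, $O$, $R$ interface for question answering. Everything in it is hypothetical and chosen for illustration only: $I$ is a bag-of-words tokenizer, $H$ simply picks the next free slot, $O$ retrieves the single memory with the largest word overlap (ties go to the most recent memory), and $R$ returns the last word of that sentence. A real MemNN would learn these components instead.

```python
import re


class ToyMemoryNetwork:
    """Hypothetical, hand-written illustration of the I, G, O, R components."""

    def __init__(self):
        self.memory = []  # m: an indexed array of stored (features, text) pairs

    def I(self, text):
        # Input feature map: a lowercase bag of words (set of tokens).
        return set(re.findall(r"[a-z]+", text.lower()))

    def H(self, features):
        # Slot-selection function used by G: here, simply the next free slot.
        return len(self.memory)

    def G(self, features, raw_text):
        # Generalization: write the new input into memory slot H(I(x)).
        self.memory.insert(self.H(features), (features, raw_text))

    def O(self, question_features):
        # Output map: pick the memory with the largest word overlap with the
        # question, breaking ties in favour of the most recent memory.
        # Assumes at least one memory has been stored.
        best = max(
            range(len(self.memory)),
            key=lambda i: (len(self.memory[i][0] & question_features), i),
        )
        return self.memory[best][1]

    def R(self, supporting_sentence):
        # Response: the last word of the supporting sentence (a crude answer).
        return re.findall(r"[a-z]+", supporting_sentence.lower())[-1]

    def observe(self, sentence):
        self.G(self.I(sentence), sentence)

    def answer(self, question):
        return self.R(self.O(self.I(question)))
```

For example, after observing "Mary moved to the bathroom." and "Mary travelled to the kitchen.", asking "Where is Mary?" retrieves the more recent sentence because of the tie-break, which is the kind of choice a learned $O$ would have to make from data.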

When the components $I$, $G$, $O$ and $R$ are neural networks, the resulting system is called a Memory Neural Network (MemNN). In the original memory network work, a MemNN built for QA (question answering) problems was compared with RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory RNNs) and was found to give superior performance.

Related Work

Usefulness of feedback in language learning: Social interaction and natural infant-directed conversations have been shown to be useful for language learning [2]. Several studies [3][4][5][6] have shown that feedback is especially useful in second language learning and learning by students.

Supervised learning from dialogs using neural models: Neural networks have been used for response generation, trained end to end on large quantities of unstructured Twitter conversations [7]. However, this approach does not incorporate feedback from the dialog partner during a real-time conversation.

Reinforcement learning: Reinforcement learning work on dialog [8][9] often treats a reward as the feedback model rather than exploiting the dialog feedback per se. More specifically, reinforcement learning relies on a system of rewards, or what the authors of [8] call “trial-and-error”: the learning agent (here, the language-learning agent) interacts with a dynamic environment (here, through active dialog) and receives feedback in the form of positive or negative rewards. By setting the objective function to maximize the rewards, the model can be trained without explicit target responses $y$. Such algorithms are not particularly efficient for training a dialog-based language learning model because there is no explicit, fixed threshold for what counts as a positive or negative reward. One possible way to define the reward is to specify what a successful completion of a dialog should be and use that as the objective function.

Forward prediction models: Forward models describe the causal relationship between actions and their consequences, and the fundamental goal of such a model is to predict the consequences of an action. Although forward prediction models have been used in other applications, such as learning eye-tracking [10] and controlling robot arms [11] and vehicles [12], they had not previously been used for dialog.

Dialog-based Supervision tasks

To test their models, the authors chose two datasets: (i) the single supporting fact problem from the bAbI datasets [13], which consists of short stories from a simulated world followed by questions; and (ii) the MovieQA dataset [14], a large-scale dataset (∼100k questions over ∼75k entities) based on questions with answers in the open movie database (OMDb).

However, since these datasets were not designed to model supervision from dialogs, the authors modified them to create 10 supervision task types (Fig. 3).

- Task 1: Imitating an Expert Student: The dialogs take place between a teacher and an expert student who gives semantically coherent answers. Hence, the task is for the learner to imitate that expert student and become an expert itself.

- Task 2: Positive and Negative Feedback: When the learner answers a question, the teacher replies with either positive or negative feedback. In the experiments, these replies are variants of “No, that’s incorrect” or “Yes, that’s right”. In the datasets, there are 6 templates for positive feedback and 6 templates for negative feedback, e.g. “Sorry, that’s not it.”, “Wrong”, etc. To distinguish the notion of positive from negative, an additional external reward signal that is not part of the text is also given.

- Task 3: Answers Supplied by Teacher: The teacher gives positive and negative feedback as in Task 2; however, when the learner’s answer is incorrect, the teacher also responds with the correction. For example, if “where is Mary?” is answered with the incorrect answer “bedroom”, the teacher responds “No, the answer is kitchen”.

- Task 4: Hints Supplied by Teacher: The corrections provided by the teacher do not provide the exact answer as in Task 3, but only a useful hint. This setting is meant to mimic the real-life occurrence of being provided only partial information about what you did wrong.

- Task 5: Supporting Facts Supplied by Teacher: This task explores another way of providing partial supervision for an incorrect answer. Here, the teacher gives a reason (explanation) why the answer is wrong by referring to a known fact that supports the true answer and that the incorrect answer may contradict.

- Task 6: Partial Feedback: External rewards are given for only some (50%) of the correct answers; the setting is otherwise identical to Task 3. This attempts to mimic the realistic situation in which some learning is closely supervised (a teacher rewarding you for getting some answers right) whereas other dialogs have less supervision (no external rewards). The task assesses the impact of such partial supervision.

- Task 7: No Feedback: External rewards are not given at all, only text; the setting is otherwise identical to Tasks 3 and 6. This task explores whether it is possible to learn how to answer at all in such a setting.

- Task 8: Imitation and Feedback Mixture: Combines Tasks 1 and 2. The goal is to see if a learner can learn successfully from both forms of supervision at once. This mimics a child both observing pairs of experts talking (Task 1) while also trying to talk (Task 2).

- Task 9: Asking For Corrections: The learner asks the teacher about what it has done wrong. Task 9 tests one of the simplest instances: when the learner is wrong and asks “Can you help me?”, the teacher supplies the correct answer.

- Task 10: Asking for Supporting Facts: A less direct form of supervision for the learner after asking for help is to receive a hint rather than the correct answer, such as “A relevant fact is John moved to the bathroom” when asking “Can you help me?”. This is thus related to the supervision in Task 5, except that the learner must request help.

The authors constructed the ten supervision tasks for both datasets as follows: for each task, a fixed policy is used for answering questions, which gets questions correct with probability $\pi_{acc}$ (i.e. the chance of getting the red text correct in Figs. 3 and 4). Different learning algorithms can thus be compared for each task over different values of $\pi_{acc}$ (0.5, 0.1 and 0.01). In all cases, a training, validation and test set is provided. Note that because the policies are fixed, the experiments in this paper are not in a reinforcement learning setting.
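The data-generation protocol can be illustrated with a small Python sketch: a fixed policy that answers correctly with probability $\pi_{acc}$, and a simulated teacher covering Tasks 2, 3, 6 and 7. The feedback templates, function names and exact reply wording are hypothetical; only the overall behavior (a textual reply plus an optional external reward) follows the task descriptions above.

```python
import random

# Hypothetical templates; the datasets use 6 positive and 6 negative templates,
# only a few of which are quoted in this summary.
POSITIVE = ["Yes, that's right!", "Correct!"]
NEGATIVE = ["No, that's incorrect.", "Sorry, that's not it.", "Wrong."]


def fixed_policy_answer(correct, candidates, pi_acc, rng):
    """Fixed learner policy: right with probability pi_acc, else a random wrong answer."""
    if rng.random() < pi_acc:
        return correct
    return rng.choice([c for c in candidates if c != correct])


def teacher_reply(task, answer, correct, rng):
    """Return (textual feedback, external reward) for Tasks 2, 3, 6 and 7."""
    is_right = answer == correct
    if task == 2:
        # Yes/no style feedback plus an external reward outside the text.
        text = rng.choice(POSITIVE if is_right else NEGATIVE)
        return text, int(is_right)
    if task in (3, 6, 7):
        # The teacher supplies the correct answer whenever the learner is wrong.
        text = rng.choice(POSITIVE) if is_right else f"No, the answer is {correct}."
        if task == 3:
            reward = int(is_right)
        elif task == 6:
            # Reward given for only about 50% of the correct answers.
            reward = int(is_right and rng.random() < 0.5)
        else:
            # Task 7: text only, never an external reward.
            reward = 0
        return text, reward
    raise ValueError(f"task {task} is not covered by this sketch")
```

A usage example: `rng = random.Random(0)`, then `answer = fixed_policy_answer("kitchen", ["kitchen", "bedroom", "garden"], 0.1, rng)` followed by `teacher_reply(6, answer, "kitchen", rng)`. Logging many such (question, answer, reply, reward) tuples for a fixed $\pi_{acc}$ gives a training set in the spirit of the one described above.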

Learning models

This work evaluates four possible learning strategies for each of the 10 tasks: imitation learning, reward-based imitation, forward prediction, and a combination of reward-based imitation and forward prediction.

All of these approaches are evaluated with the same model architecture: an end-to-end memory network (MemN2N) [15], which has been used as a baseline model for exploring different modes of learning.

The input is the last utterance of the dialog, $x$, as well as a set of memories (context) $(c_1, \ldots, c_n)$, which can encode both short-term memory, e.g. recent previous utterances and replies, and long-term memories, e.g. facts that could be useful for answering questions. The context inputs $c_i$ are converted into vectors $m_i$ via embeddings and are stored in the memory. The goal is to produce an output $\hat{a}$ by processing the input $x$ and using it to address and read from the memory, $m$, possibly multiple times, in order to form a coherent reply. In the figure, the memory is read twice, which is termed multiple “hops” of attention.

In the first step, the input $x$, represented as a bag-of-words vector, is embedded using a matrix $A$ of size $d \times V$, where $d$ is the embedding dimension and $V$ is the size of the vocabulary, giving $q = Ax$. ($A$ is learned end to end; it could in principle be initialized from pretrained embeddings such as Word2Vec or GloVe.) Each memory $c_i$ is embedded using the same matrix, giving $m_i = Ac_i$. The output of addressing and then reading from memory in the first hop is:

$$o_1 = \sum_i p^1_i m_i, \qquad p^1_i = \mathrm{Softmax}(q^\top m_i)$$

Here, $p^1$ is a probability vector over the memories and measures how well the input matches each memory. The goal is to select memories relevant to the last utterance $x$, i.e. the most relevant have large values of $p^1_i$. The output memory representation $o_1$ is then constructed as the sum of the memories weighted by $p^1$. The memory output is then added to the original input, $u_1 = R_1(o_1 + q)$, to form the new state of the controller, where $R_1$ is a $d \times d$ rotation matrix. The attention over the memory can then be repeated using $u_1$ instead of $q$ as the addressing vector, yielding:

$$o_2 = \sum_i p^2_i m_i, \qquad p^2_i = \mathrm{Softmax}(u_1^\top m_i)$$

The controller state is updated again with $u_2 = R_2(o_2 + u_1)$, where $R_2$ is another $d \times d$ matrix to be learned. In a two-hop model the final output is then defined as:

$$\hat{a} = \mathrm{Softmax}(u_2^\top A y_1, \ldots, u_2^\top A y_C)$$

where there are $C$ candidate answers in $y$. In the paper's experiments, the candidates are the set of actions that occur in the training set for the bAbI tasks, and for MovieQA they are the set of words retrieved from the KB (knowledge base).
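The two-hop forward pass can be sketched in plain Python (no training, no autodiff) to show how the pieces fit together. Matrices are lists of rows and all inputs are bag-of-words vectors of length $V$; the function and helper names are illustrative and not taken from the authors' code. Each hop computes attention weights $p_i = \mathrm{Softmax}(u^\top m_i)$ and the weighted sum $o = \sum_i p_i m_i$.

```python
import math


def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(M, v):
    # M is a list of rows; returns the matrix-vector product M v.
    return [sum(r * x for r, x in zip(row, v)) for row in M]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def hop(u, memories):
    # One attention hop: p_i = Softmax(u^T m_i), o = sum_i p_i m_i.
    p = softmax([dot(u, m) for m in memories])
    o = [sum(p_i * m[k] for p_i, m in zip(p, memories)) for k in range(len(u))]
    return p, o


def memn2n_two_hop(A, R1, R2, x, contexts, candidates):
    """Return a probability for each candidate answer y_1..y_C."""
    q = matvec(A, x)                             # q = A x
    memories = [matvec(A, c) for c in contexts]  # m_i = A c_i
    _, o1 = hop(q, memories)                     # first hop
    u1 = matvec(R1, add(o1, q))                  # u_1 = R_1 (o_1 + q)
    _, o2 = hop(u1, memories)                    # second hop
    u2 = matvec(R2, add(o2, u1))                 # u_2 = R_2 (o_2 + u_1)
    # a_hat = Softmax(u_2^T A y_1, ..., u_2^T A y_C)
    return softmax([dot(u2, matvec(A, y)) for y in candidates])
```

With identity matrices for $A$, $R_1$ and $R_2$ and a toy three-word vocabulary, the candidate that shares a word with the question and its best-matching memory receives most of the probability mass; in the real model these matrices are learned.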

Training strategies

1. Imitation Learning: This approach involves simply imitating one of the speakers in observed dialogs. Examples arrive as $(x, c, a)$ triples, where $a$ is (assumed to be) a good response to the last utterance $x$ given context $c$. Here, the whole memory network model defined above is trained using stochastic gradient descent by minimizing a standard cross-entropy loss between $\hat{a}$ and the label $a$.
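For a single $(x, c, a)$ triple, the imitation-learning objective is the cross-entropy between the predicted candidate distribution $\hat{a}$ and the index of the label $a$. A small plain-Python sketch of that loss and of its gradient with respect to the candidate scores follows; the names are hypothetical, and backpropagating further into $A$, $R_1$ and $R_2$ would normally be left to an autodiff framework.

```python
import math


def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def cross_entropy(scores, label_index):
    """-log Softmax(scores)[label]: the loss for one (x, c, a) triple."""
    m = max(scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_z - scores[label_index]


def cross_entropy_grad(scores, label_index):
    """Gradient w.r.t. the candidate scores: Softmax(scores) - onehot(label)."""
    probs = softmax(scores)
    return [p - (1.0 if i == label_index else 0.0) for i, p in enumerate(probs)]
```

An SGD step then moves the scores (and, through them, the network weights) against this gradient, raising the score of the imitated answer $a$ and lowering the others.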

2. Reward-based Imitation: If some actions are poor choices, one does not want to repeat them; that is, they should not be treated as a supervised objective. Here, a positive reward is obtained only immediately after (some of) the correct actions, and is zero otherwise. Imitation learning is applied only to the rewarded actions; the remaining actions are simply discarded from the training set. For more complex cases, such as actions leading to long-term changes and delayed rewards, reinforcement learning algorithms would be necessary; e.g., one could still use policy gradient to train the MemN2N, but applied to the model’s own policy.
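Reward-based imitation differs from plain imitation only in which logged examples are kept for training. A minimal sketch, assuming a hypothetical `Example` record that stores the learner's logged answer together with the reward observed right after it:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    x: str               # last utterance, e.g. the teacher's question
    context: List[str]   # memories c_1..c_n
    a: str               # the learner's answer in the logged dialog
    reward: float        # external reward observed right after a (0 if none)


def imitation_set(examples):
    # Plain imitation learning: every logged answer is treated as a target.
    return list(examples)


def reward_based_imitation_set(examples):
    # RBI: keep only answers followed by a positive reward; all other
    # examples are simply discarded from the training set.
    return [e for e in examples if e.reward > 0]
```

The filtered list is then trained with the same cross-entropy objective as imitation learning, which is why RBI degrades when rewards become sparse: very few examples survive the filter.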

3. Forward Prediction: The aim is, given an utterance $x$ from speaker 1 and an answer $a$ by speaker 2 (i.e., the learner), to predict $\bar{x}$, the response to the answer from speaker 1. More generally, the aim is to predict the changed state of the world after action $a$, which in this case involves the new utterance $\bar{x}$.

As shown in Fig. (b), this is achieved by chopping off the final output from the original network of Fig. (a) and replacing it with some additional layers that compute the forward prediction. The first part of the network remains exactly the same and only has access to the input $x$ and the context $c$, just as before. The computation up to $u_2 = R_2(o_2 + u_1)$ is thus exactly the same as before.

The model then performs another “hop” of attention, but over the candidate answers rather than the memories. Crucially, it also incorporates which action (candidate) was actually selected in the dialog (i.e., which one is $a$). After this “hop”, the resulting state of the controller is used to make the forward prediction.

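Concretely, the model computes the following, where $[a = y_i]$ is 1 if candidate $y_i$ is the answer $a$ actually given in the dialog and 0 otherwise:

$$o_3 = \sum_i p^3_i \left(A y_i + \beta^* [a = y_i]\right), \qquad p^3_i = \mathrm{Softmax}(u_2^\top A y_i)$$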

where $\beta^*$ is a $d$-dimensional vector, also learned, that represents, in the output $o_3$, the action that was actually selected. This mechanism gives the model a way to compare the most likely answers to $x$ with the given answer $a$. For example, if the given answer $a$ is incorrect and the model can assign high $p^3_i$ to the correct answer, then the output $o_3$ will contain only a small amount of $\beta^*$; conversely, $o_3$ contains a large amount of $\beta^*$ if $a$ is correct. Thus, $o_3$ informs the model of the likely response $\bar{x}$ from the teacher. After obtaining $o_3$, the forward prediction is computed as:

$$\hat{x} = \mathrm{Softmax}(u_3^\top A \bar{x}_1, \ldots, u_3^\top A \bar{x}_{\bar{C}})$$

where $u_3 = R_3(o_3 + u_2)$. That is, the model scores the $\bar{C}$ possible responses to the answer $a$.

Training can then be performed using the cross-entropy loss between $\hat{x}$ and the label $\bar{x}$, similar to before. When the number of candidates $\bar{C}$ is large, the negatives are subsampled, always keeping $\bar{x}$ in the set. The set of answers $y$ can be sampled in the same way, making the method highly scalable. Note that after training with the forward prediction criterion, the top of the model can be “chopped off” again at test time to retrieve the original memory network model, which can then predict answers $\hat{a}$ given only $x$ and $c$.
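The extra hop used for forward prediction can be sketched in the same plain-Python style (hypothetical names, matrices as lists of rows). The function computes the attention $p^3$ over the candidate answers, the output $o_3$, in which the learned vector $\beta^*$ is added only to the candidate actually chosen (`chosen_index`), and the distribution $\hat{x}$ over possible teacher replies.

```python
import math


def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(M, v):
    # M is a list of rows; returns the matrix-vector product M v.
    return [sum(r * x for r, x in zip(row, v)) for row in M]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def forward_prediction(u2, A, R3, beta_star, candidates, chosen_index, replies):
    """Predict the teacher's reply from controller state u_2 and the chosen answer.

    p3_i  = Softmax(u_2^T A y_i)
    o_3   = sum_i p3_i (A y_i + beta* [a = y_i])
    u_3   = R_3 (o_3 + u_2)
    x_hat = Softmax(u_3^T A xbar_1, ..., u_3^T A xbar_Cbar)
    """
    cand_emb = [matvec(A, y) for y in candidates]
    p3 = softmax([dot(u2, e) for e in cand_emb])
    o3 = [0.0] * len(u2)
    for i, (p, e) in enumerate(zip(p3, cand_emb)):
        # beta* marks the candidate that was actually selected in the dialog.
        shifted = add(e, beta_star) if i == chosen_index else e
        o3 = add(o3, [p * v for v in shifted])
    u3 = matvec(R3, add(o3, u2))
    x_hat = softmax([dot(u3, matvec(A, xb)) for xb in replies])
    return p3, o3, x_hat
```

With toy vectors, $o_3$ carries more of $\beta^*$ when the chosen candidate is the one the model already ranks highest, so a reply embedding aligned with $\beta^*$ (a stand-in for “Yes, that’s right”) becomes more likely; this is the signal the text describes.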
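The negative subsampling described above might look like the following hypothetical helper; the number of negatives is a free parameter that the summary does not specify.

```python
import random


def sample_candidates(all_candidates, true_reply, num_negatives, rng):
    """Subsample negative replies while always keeping the true reply x_bar.

    Returns the shuffled candidate list and the index of the true reply,
    which serves as the label for the cross-entropy loss.
    """
    negatives = [c for c in all_candidates if c != true_reply]
    sampled = rng.sample(negatives, min(num_negatives, len(negatives)))
    candidates = sampled + [true_reply]
    rng.shuffle(candidates)
    return candidates, candidates.index(true_reply)
```

Because only the sampled subset is scored per example, the cost of the softmax no longer grows with $\bar{C}$; the same helper could be reused to subsample the answer candidates $y$.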

4. Reward-based Imitation + Forward Prediction

Because reward-based imitation uses the architecture of Fig. (a), and forward prediction uses the same architecture with the additional layers of Fig. (b), the two strategies can be learned jointly. The authors share the weights across the two networks and perform gradient steps for both criteria. The compelling reason for this combination is that it makes use of the reward signal when it is available and falls back on the dialog feedback when it is not, thereby taking advantage of both the forward prediction and the reward-based approaches.
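One simple way to realize “gradient steps for both criteria” is to sum the two losses per example and include the RBI term only when a positive reward was observed. This is an assumption for illustration (the summary does not say whether the losses are summed or alternated); with shared weights, both score vectors would come from the same network.

```python
import math


def neg_log_softmax(scores, index):
    # -log Softmax(scores)[index], computed stably via log-sum-exp.
    m = max(scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_z - scores[index]


def joint_loss(answer_scores, answer_index, reply_scores, reply_index, reward):
    """Per-example RBI + FP objective (illustrative sketch, not the paper's code).

    answer_scores: scores u_2^T A y_i from the answer head of Fig. (a).
    reply_scores:  scores u_3^T A xbar_j from the forward-prediction head of Fig. (b).
    """
    # FP term: the teacher's textual reply is always observed.
    fp = neg_log_softmax(reply_scores, reply_index)
    # RBI term: only rewarded answers are imitated.
    rbi = neg_log_softmax(answer_scores, answer_index) if reward > 0 else 0.0
    return rbi + fp
```

In Task 7, where no rewards are ever given, this objective reduces to pure forward prediction; in fully rewarded settings both terms contribute.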

Experiments

Experiments were conducted on the two datasets, bAbI and MovieQA. For each task, a fixed policy that gets questions correct with probability $\pi_{acc}$ is used for performing actions (answering questions). This allows the training strategies described earlier to be compared on each task for different values of $\pi_{acc}$. Hyperparameters for all methods are optimized on the validation sets.

The following results are observed by the authors:

- Imitation learning, ignoring rewards, is a poor learning strategy when imitating inaccurate answers, e.g. for $\pi_{acc} < 0.5$. When imitating an expert (Task 1), however, it is hard to beat.

- Reward-based imitation (RBI) performs better when rewards are available, particularly in Table 1, but also degrades when they are too sparse, e.g. for $\pi_{acc} = 0.01$.

- Forward prediction (FP) is more robust and has stable performance at different levels of $\pi_{acc}$. However, as it only predicts answers implicitly and does not make use of rewards, it is outperformed by RBI on several tasks, notably Tasks 1 and 8 (because it cannot do supervised learning) and Task 2 (because it does not take advantage of positive rewards).

- FP makes use of dialog feedback in Tasks 3-5, whereas RBI does not. This explains why FP does better with useful feedback (Tasks 3-5) than without it (Task 2), whereas RBI cannot exploit that feedback.

- Supplying full answers (Task 3) is more useful than hints (Task 4) but hints still help FP more than just yes/no answers without extra information (Task 2).

- When positive feedback is sometimes missing (Task 6), RBI suffers, especially in Table 1. FP does not, as it does not use this feedback.

- One of the most surprising results of the experiments is that FP performs well overall, given that it does not use rewards; the authors attempt to explain this in the paper. This is particularly evident on Task 7 (no feedback), where RBI has no hope of succeeding as it has no positive examples. FP, on the other hand, learns adequately.

- Tasks 9 and 10 are harder for FP as the question is not immediately before the feedback.

- Combining RBI and FP ameliorates the failings of each, yielding the best overall results.

One of the most interesting aspects of the results in this paper is that FP works at all without any rewards.

Future work

- Any reply in a dialog can be seen as feedback and should be useful for learning; evaluate whether forward prediction, and the other approaches in this paper, work in that setting too.

- Develop further evaluation methodologies to test how the models presented here work in more complex settings where actions that are made lead to long-term changes in the environment and delayed rewards, i.e. extending to the reinforcement learning setting, and to full language generation.

- Investigate how dialog-based feedback could also be used as a medium to learn non-dialog-based skills, e.g. using natural language dialog to complete visual or physical tasks, in environments where actions can lead to long-term changes and delayed rewards, i.e. extending to the reinforcement learning setting.

- A paper under review for ICLR 2017, co-authored by this paper's author, further extends the forward prediction method [17]. It assigns a probability that the student will provide a random answer, which is claimed to allow the method to potentially discover correct answers. It also adds data balancing, where training is balanced across all the teacher responses; this is intended to ensure that no single part of the distribution dominates during model learning.

Critique

The paper's abstract states that no reward is needed, but feedback from the partner such as "yes" or "no" is itself a kind of reward. It is true that only a fixed policy is used during learning, but that makes this type of learning a subset of reinforcement learning, which goes against the claim that it is not reinforcement learning.

Also, certain things are not clearly explained, particularly the details of the forward-prediction model. It is not clear how the response to the answer is related to the learner's first input or to the answer. Hence it is not clear what the learner actually learns and encodes into the memory network. This also makes it impossible to say why this type of learning performs better than other approaches.

It seems difficult to extend this model to generate genuinely complex queries to the user (rather than predefined ones); how would this method handle multiple dialogue turns with complex language? Also, the forward prediction architecture is interesting, but it can hardly be extended to multiple dialogue turns. On the other hand, reinforcement learning is appealing for dialogue: evaluating a value function implicitly learns a transition model and predicts future outcomes. Here, the authors say that this architecture avoids the need to define a reward because it can predict words such as "right" or "correct", but users do not always give feedback like this (especially in real-world dialogues). It would be worth comparing this method with reinforcement and imitation learning for dialogue management, which could eventually lead to more novel models.

The authors claim "Language Learning", but instead assess the model on question answering. The claims that are made are not supported by the results presented. While this method may serve as an approach to QA, it is certainly a stretch to call it language learning.

References

- Jason Weston. Dialog-based Language Learning. NIPS, 2016.

- P. K. Kuhl. Early language acquisition: cracking the speech code. Nature reviews neuroscience, 5(11): 831–843, 2004.

- M. A. Bassiri. Interactional feedback and the impact of attitude and motivation on noticing l2 form. English Language and Literature Studies, 1(2):61, 2011.

- R. Higgins, P. Hartley, and A. Skelton. The conscientious consumer: Reconsidering the role of assessment feedback in student learning. Studies in higher education, 27(1):53–64, 2002.

- A. S. Latham. Learning through feedback. Educational Leadership, 54(8):86–87, 1997.

- M. G. Werts, M. Wolery, A. Holcombe, and D. L. Gast. Instructive feedback: Review of parameters and effects. Journal of Behavioral Education, 5(1):55–75, 1995.

- A. Sordoni, M. Galley, M. Auli, C. Brockett, Y. Ji, M. Mitchell, J.-Y. Nie, J. Gao, and B. Dolan. A neural network approach to context-sensitive generation of conversational responses. Proceedings of NAACL, 2015.

- V. Rieser and O. Lemon. Reinforcement learning for adaptive dialogue systems: a data-driven methodology for dialogue management and natural language generation. Springer Science & Business Media, 2011.

- J. Schatzmann, K. Weilhammer, M. Stuttle, and S. Young. A survey of statistical user simulation techniques for reinforcement-learning of dialogue management strategies. The knowledge engineering review, 21(02):97–126, 2006.

- J. Schmidhuber and R. Huber. Learning to generate artificial fovea trajectories for target detection. International Journal of Neural Systems, 2(01n02):125–134, 1991.

- I. Lenz, R. Knepper, and A. Saxena. Deepmpc: Learning deep latent features for model predictive control. In Robotics Science and Systems (RSS), 2015.

- G. Wayne and L. Abbott. Hierarchical control using networks trained with higher-level forward models. Neural computation, 2014.

- B. C. Stadie, S. Levine, and P. Abbeel. Incentivizing exploration in reinforcement learning with deep predictive models. arXiv preprint arXiv:1507.00814, 2015.

- J. Clarke, D. Goldwasser, M.-W. Chang, and D. Roth. Driving semantic parsing from the world’s response. In Proceedings of the fourteenth conference on computational natural language learning, pages 18–27. Association for Computational Linguistics, 2010.

- J. Schatzmann, K. Weilhammer, M. Stuttle, and S. Young. A survey of statistical user simulation techniques for reinforcement-learning of dialogue management strategies. The knowledge engineering review, 21(02):97–126, 2006.

- 9 memory networks for language understanding: https://www.youtube.com/watch?v=5ekMog_nhaQ

- Li, Jiwei; Miller, Alexander H.; Chopra, Sumit; Ranzato, Marc'Aurelio; Weston, Jason. "Dialogue Learning With Human-In-The-Loop". Review for ICLR 2017.