Depthwise Convolution Is All You Need for Learning Multiple Visual Domains

Presented by

Yuwei Liu, Daniel Mao

Introduction

This paper proposes a multi-domain learning architecture based on depthwise separable convolution, built on the assumption that images from different domains share cross-channel correlations but have domain-specific spatial correlations. The proposed model is compact and adds minimal overhead when applied to new domains. The paper also introduces a gating mechanism to promote soft sharing between different domains. The approach was evaluated on the Visual Decathlon Challenge, where it achieved the highest score while requiring only 50% of the parameters of state-of-the-art approaches.

Motivation

Can we build a single neural network that can deal with images across different domains? This question motivates the concept of "multi-domain learning", which faces two challenges:

1. Identify a common structure among different domains.

2. Add new tasks to the model while introducing as few additional parameters as possible.

Previous Work

1. Multi-Domain Learning aims at creating a single neural network to perform image classification tasks in a variety of domains. (Bilen and Vedaldi 2017) showed that a single neural network can simultaneously learn several different visual domains by using an instance normalization layer. (Rebuffi, Bilen, and Vedaldi 2017; 2018) proposed universal parametric families of neural networks that contain specialized problem-specific models which differ only by a small number of parameters. (Rosenfeld and Tsotsos 2018) proposed a method called Deep Adaptation Networks (DAN) that constrains newly learned filters for new domains to be linear combinations of existing ones.

2. Multi-Task Learning (Doersch and Zisserman 2017; Kokkinos 2017) extracts different features from a single input in order to perform several tasks simultaneously, such as classification, object recognition, and edge detection.

3. Transfer Learning aims to improve the performance of a model on a target domain by leveraging information from a related source domain (Pan, Yang, and others 2010; Bengio 2012; Hu, Lu, and Tan 2015).

Model Architecture

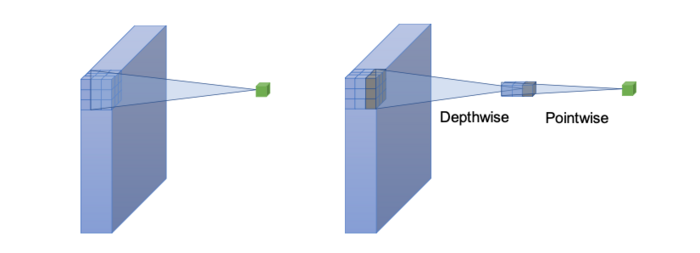

Depthwise Separable Convolution The proposed approach is based on depthwise separable convolution, which factorizes a standard 3 × 3 convolution into a 3 × 3 depthwise convolution followed by a 1 × 1 pointwise convolution. While a standard convolution performs the channel-wise and spatial-wise computation in one step, depthwise separable convolution splits the computation into two steps: the depthwise convolution applies a single convolutional filter to each input channel, and the pointwise convolution then forms linear combinations of the depthwise outputs across channels. The comparison of standard convolution and depthwise separable convolution is shown in the figure above.
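The two-step computation can be sketched in NumPy. This is a minimal illustration, not the authors' code: it assumes a single unbatched input of shape (channels, height, width), no padding, stride 1, no bias terms, and cross-correlation as used by deep learning frameworks; the helper names `depthwise_conv`, `pointwise_conv`, and `standard_conv` are hypothetical. It also checks that the two-step result equals a standard convolution whose kernel is the product of the pointwise weights and the depthwise filters.

```python
import numpy as np

def depthwise_conv(x, dw):
    """x: (C, H, W); dw: (C, k, k). One k x k filter per channel, 'valid' padding."""
    C, H, W = x.shape
    k = dw.shape[-1]
    out = np.zeros((C, H - k + 1, W - k + 1))
    for i in range(k):
        for j in range(k):
            # each channel is filtered only by its own spatial filter
            out += dw[:, i, j][:, None, None] * x[:, i:i + H - k + 1, j:j + W - k + 1]
    return out

def pointwise_conv(x, pw):
    """x: (C_in, H, W); pw: (C_out, C_in). A 1 x 1 conv is a per-pixel channel mix."""
    return np.einsum("oc,chw->ohw", pw, x)

def standard_conv(x, w):
    """x: (C_in, H, W); w: (C_out, C_in, k, k). Spatial and channel mixing in one step."""
    _, H, W = x.shape
    C_out, _, k, _ = w.shape
    out = np.zeros((C_out, H - k + 1, W - k + 1))
    for i in range(k):
        for j in range(k):
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], x[:, i:i + H - k + 1, j:j + W - k + 1])
    return out

rng = np.random.default_rng(0)
C_in, C_out, k = 4, 6, 3
x = rng.standard_normal((C_in, 8, 8))
dw = rng.standard_normal((C_in, k, k))   # depthwise filters
pw = rng.standard_normal((C_out, C_in))  # pointwise weights

y_sep = pointwise_conv(depthwise_conv(x, dw), pw)
# the equivalent standard kernel: W[o, c] = pw[o, c] * dw[c]
w_equiv = pw[:, :, None, None] * dw[None, :, :, :]
y_std = standard_conv(x, w_equiv)

print(np.allclose(y_sep, y_std))        # True
print(dw.size + pw.size, w_equiv.size)  # 60 216
```

The separable layer needs 60 weights where the equivalent standard layer stores 216, because it can only represent kernels of this factorized form.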

Depthwise convolution and pointwise convolution have different roles in generating new features: the former is used for capturing spatial correlations while the latter is used for capturing channel-wise correlations.
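The division of labour can be written out for a single layer. Writing $D \in \mathbb{R}^{C_{\text{in}} \times k \times k}$ for the depthwise filters and $P \in \mathbb{R}^{C_{\text{out}} \times C_{\text{in}}}$ for the pointwise weights (notation introduced here for illustration, biases ignored), output channel $o$ at position $p$ is:

```latex
y_o(p) = \sum_{c=1}^{C_{\text{in}}} P_{o,c} \sum_{(i,j)} D_{c,i,j}\, x_c\big(p + (i,j)\big)
       = \sum_{c=1}^{C_{\text{in}}} \sum_{(i,j)} W_{o,c,i,j}\, x_c\big(p + (i,j)\big),
\qquad W_{o,c,i,j} = P_{o,c}\, D_{c,i,j}

\text{params}_{\text{std}} = k^2 C_{\text{in}} C_{\text{out}}, \qquad
\text{params}_{\text{sep}} = k^2 C_{\text{in}} + C_{\text{in}} C_{\text{out}}, \qquad
\frac{\text{params}_{\text{sep}}}{\text{params}_{\text{std}}} = \frac{1}{C_{\text{out}}} + \frac{1}{k^2}
```

A depthwise separable layer is therefore a standard convolution restricted to kernels in which every spatial filter $W_{o,c,\cdot,\cdot}$ is a scaled copy of the single depthwise filter $D_c$: $D$ alone holds the spatial pattern and $P$ alone holds the mixing across channels. For $k = 3$ and $C_{\text{in}} = C_{\text{out}} = 256$, this is $67{,}840$ versus $589{,}824$ weights, about 11.5%.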

Network Architecture For the experiments, we use the same ResNet-26 architecture as in (Rebuffi, Bilen, and Vedaldi 2018). The original architecture has three macro residual blocks, which output 64, 128, and 256 feature channels respectively. Each macro block consists of 4 residual blocks, and each residual block has two convolutional layers with 3 × 3 convolutional filters. The network ends with a global average pooling layer and a softmax layer for classification. Each standard convolution in ResNet-26 is replaced with a depthwise separable convolution, and the channel size is increased. The modified network architecture is shown in the accompanying figure.
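To get a feel for the savings, the following sketch counts convolution weights in the three macro blocks described above, with and without the depthwise separable replacement. It is a rough estimate under stated assumptions: it counts only the 24 3 × 3 convolutions inside the residual blocks (no stem convolution, shortcut projections, batch normalization, or classifier), assumes the stem outputs 64 channels, and uses the original widths, since the widening factor applied in the modified network is not given here. The function names are hypothetical; passing wider `widths` models the channel increase.

```python
def conv_params(c_in, c_out, k=3, separable=False):
    """Weights in one k x k conv layer (biases ignored)."""
    if separable:
        return k * k * c_in + c_in * c_out  # depthwise filters + 1 x 1 pointwise weights
    return k * k * c_in * c_out

def resnet26_conv_params(widths=(64, 128, 256), blocks_per_stage=4, separable=False):
    """Sum over the 3 stages x 4 residual blocks x 2 convs (assumption: stem outputs widths[0])."""
    total = 0
    c_prev = widths[0]
    for w in widths:
        for _ in range(blocks_per_stage):
            total += conv_params(c_prev, w, separable=separable)  # may change width
            total += conv_params(w, w, separable=separable)
            c_prev = w
    return total

std = resnet26_conv_params()
sep = resnet26_conv_params(separable=True)
print(std, sep)  # 5824512 677696
```

Under these assumptions the separable version keeps about 11.6% of the block weights, which leaves room to widen the layers while still staying well below the original count.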

According to the paper, the reduction in parameters does not hurt the performance of the model, and the use of depthwise separable convolution allows us to model cross-channel correlations and spatial correlations separately. The idea behind our multi-domain learning method is to leverage the different roles of cross-channel correlations and spatial correlations in generating image features by sharing the pointwise convolution across different domains.

Learning Multiple Domains For multi-domain learning, it is essential to have a set of universally sharable parameters that can generalize to unseen domains. To obtain a good starting set of parameters, the modified ResNet-26 is first trained on ImageNet. Starting from this well-initialized network, each time a new domain arrives, a new output layer is added and the depthwise convolutional filters are finetuned, while the pointwise convolutional filters are shared across all domains. Since the statistics of images from different domains differ, domain-specific batch normalization parameters are also allowed. During inference, the trained depthwise convolutional filters for all domains are stacked as a 4D tensor. The adoption of depthwise separable convolution provides a natural separation for modeling cross-channel correlations and spatial correlations. Experimental evidence (Chollet 2017) suggests that decoupling cross-channel correlations and spatial correlations results in more useful features. The paper takes this one step further and develops a multi-domain method based on the assumption that different domains share cross-channel correlations but have domain-specific spatial correlations. The method is motivated by two observations, concerning model efficiency and the interpretability of hidden units in a deep neural network.
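The sharing scheme can be illustrated with a toy single-layer sketch in NumPy. It is an illustration under assumptions rather than the authors' implementation: the domain names, sizes, and the helper names `depthwise_conv` and `forward` are hypothetical, random arrays stand in for trained weights, and batch normalization is reduced to its domain-specific scale and shift (running statistics are omitted). The pointwise matrix is shared by every domain, while the depthwise filters and normalization parameters are looked up per domain, with the depthwise filters read from the stacked 4D tensor.

```python
import numpy as np

def depthwise_conv(x, dw):
    """x: (C, H, W); dw: (C, k, k). One k x k filter per channel, 'valid' padding."""
    C, H, W = x.shape
    k = dw.shape[-1]
    out = np.zeros((C, H - k + 1, W - k + 1))
    for i in range(k):
        for j in range(k):
            out += dw[:, i, j][:, None, None] * x[:, i:i + H - k + 1, j:j + W - k + 1]
    return out

rng = np.random.default_rng(0)
domains = ["imagenet", "svhn", "dtd"]  # hypothetical example domains
C_in, C_out, k = 8, 16, 3

shared_pw = rng.standard_normal((C_out, C_in))  # shared by all domains (trained on ImageNet)
dw_per_domain = {d: rng.standard_normal((C_in, k, k)) for d in domains}  # finetuned per domain
bn_per_domain = {d: (np.ones(C_out), np.zeros(C_out)) for d in domains}  # per-domain scale/shift

# at inference time, the depthwise filters of all domains form one 4D tensor
dw_stack = np.stack([dw_per_domain[d] for d in domains])  # (num_domains, C_in, k, k)

def forward(x, domain_idx):
    h = depthwise_conv(x, dw_stack[domain_idx])   # spatial correlations: domain-specific
    h = np.einsum("oc,chw->ohw", shared_pw, h)    # channel correlations: shared
    gamma, beta = bn_per_domain[domains[domain_idx]]
    return gamma[:, None, None] * h + beta[:, None, None]  # simplified BN (affine part only)

x = rng.standard_normal((C_in, 10, 10))
y = forward(x, domains.index("svhn"))
shared = shared_pw.size
per_domain = C_in * k * k + 2 * C_out
print(y.shape, dw_stack.shape)  # (16, 8, 8) (3, 8, 3, 3)
print(shared, per_domain)       # 128 104
```

In this toy layer each extra domain costs 104 parameters against 128 shared ones; at realistic widths the per-domain share is much smaller, e.g. with C_in = C_out = 256 it is 256 · 9 + 2 · 256 = 2,816 parameters against 65,536 shared pointwise weights.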

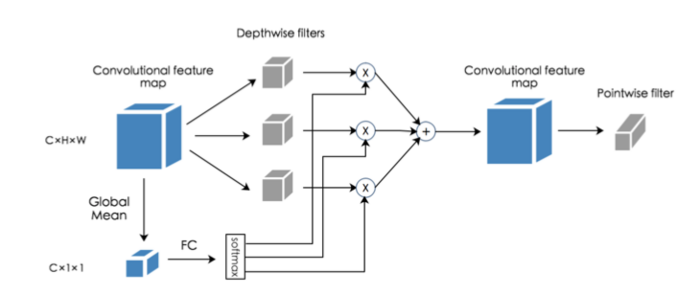

Soft Sharing of Trained Depthwise Filters In addition to the proposed sharing of pointwise filters, we also investigate whether the depthwise filters (spatial correlations) learned from other domains can be transferred to the target domain. To this end, a novel soft sharing approach is introduced in the multi-domain setting to allow the sharing of depthwise convolution. After the domain-specific depthwise filters are trained, all of them are stacked as illustrated in the figure.

During soft sharing, we train each domain one by one. All the domain-specific depthwise filters and the pointwise filters (trained on ImageNet) are fixed during soft sharing; only the feedforward network that controls the softmax gate is trained. For a specific target domain, the softmax gate allows a soft sharing of trained depthwise filters with other domains. It is widely believed that early layers in a convolutional neural network detect lower-level features such as textures, while later layers detect parts or objects. Based on this observation, we partition the network into three regions (early, middle, late) as shown and consider different placements of the softmax gate, which allows us to compare a variety of sharing strategies.
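A minimal sketch of one soft-sharing layer follows, written as one reading of the description above rather than the paper's exact design. The frozen bank `dw_bank` holds the trained depthwise filters of all domains; the gate is assumed (hypothetically) to be a single linear layer with a softmax over domains, fed by the global average of the input feature map, and its weights `gate_W` and `gate_b` are the only trainable parameters. The layer output is the gate-weighted sum of the per-domain depthwise outputs.

```python
import numpy as np

def depthwise_conv(x, dw):
    """x: (C, H, W); dw: (C, k, k). One k x k filter per channel, 'valid' padding."""
    C, H, W = x.shape
    k = dw.shape[-1]
    out = np.zeros((C, H - k + 1, W - k + 1))
    for i in range(k):
        for j in range(k):
            out += dw[:, i, j][:, None, None] * x[:, i:i + H - k + 1, j:j + W - k + 1]
    return out

def softmax(z):
    e = np.exp(z - z.max())  # shift for numerical stability
    return e / e.sum()

rng = np.random.default_rng(1)
num_domains, C, k = 4, 8, 3
dw_bank = rng.standard_normal((num_domains, C, k, k))  # trained depthwise filters, frozen
gate_W = np.zeros((num_domains, C))                    # hypothetical gate, the only trainable part
gate_b = np.zeros(num_domains)

def soft_shared_depthwise(x):
    g = softmax(gate_W @ x.mean(axis=(1, 2)) + gate_b)  # one weight per domain, sums to 1
    y = sum(g[d] * depthwise_conv(x, dw_bank[d]) for d in range(num_domains))
    return y, g

x = rng.standard_normal((C, 10, 10))
y, g = soft_shared_depthwise(x)
mixed = np.tensordot(g, dw_bank, axes=1)  # gate-weighted mixture of filters, (C, k, k)
print(np.allclose(y, depthwise_conv(x, mixed)))  # True
print(g)  # [0.25 0.25 0.25 0.25] with the zero-initialized gate
```

Because convolution is linear in its filters, weighting the outputs is the same as convolving once with the gate-weighted mixture of filters, which the final check confirms. Placing such gates only in the early, middle, or late region of the network gives the different sharing strategies compared in the experiments.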

Experiments

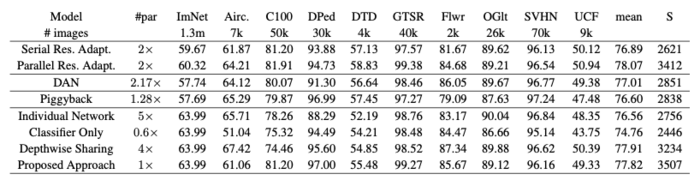

The approach achieves the highest score with the fewest parameters. In particular, it improves on the current state-of-the-art approaches by 100 points while using only 50% of the parameters.
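The "points" here are Visual Decathlon scores. As defined by Rebuffi, Bilen, and Vedaldi (2017), each domain d contributes α_d · max(0, E_d^max − E_d)^γ_d, where E_d is the test error on that domain, E_d^max is twice the test error of a baseline model, γ_d = 2, and α_d = 1000 · (E_d^max)^(−γ_d), so a perfect domain scores 1000 and the ten domains together at most 10,000. The sketch below implements that formula; the function names are hypothetical and the errors in the usage lines are made-up inputs, not results from the paper.

```python
def decathlon_domain_score(err, baseline_err, gamma=2.0):
    """Score for one domain; errors are fractions in [0, 1]."""
    e_max = 2.0 * baseline_err               # no points at or above twice the baseline error
    alpha = 1000.0 * e_max ** (-gamma)       # normalizes a perfect result to 1000
    return alpha * max(0.0, e_max - err) ** gamma

def decathlon_score(errors, baseline_errors):
    """Total score: sum of the per-domain scores."""
    return sum(decathlon_domain_score(e, b) for e, b in zip(errors, baseline_errors))

print(round(decathlon_domain_score(0.0, 0.3), 6))  # 1000.0 (perfect)
print(round(decathlon_domain_score(0.3, 0.3), 6))  # 250.0 (matches the baseline)
print(round(decathlon_domain_score(0.7, 0.3), 6))  # 0.0 (worse than twice the baseline)
```

Because of the squared term, each further reduction in error earns more points as the error approaches zero, so a 100-point gain reflects improvements concentrated where errors are already low or spread across several domains.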

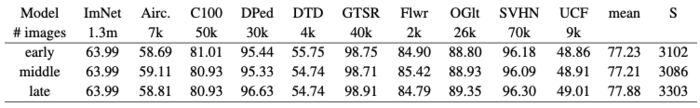

The table shows that soft sharing obtains slightly higher accuracy on DTD and SVHN when early layers are shared. Sharing later layers also leads to a higher score, implying that although images in different domains may not share similar low-level features, they can still benefit from transferring information in later layers.

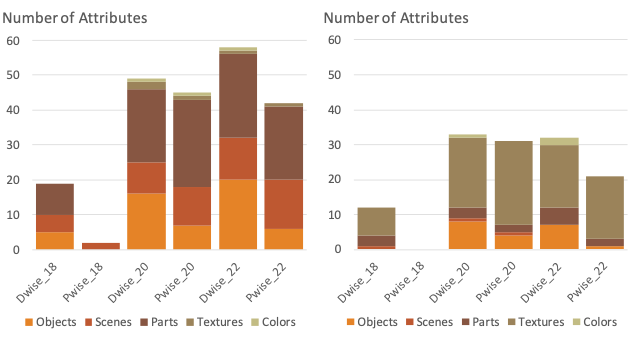

This figure demonstrates that depthwise convolution consistently detects more attributes than pointwise convolution.

Conclusion

A multi-domain learning approach is proposed based on depthwise separable convolution and on the assumption that images from different domains share the same channel-wise correlations but have domain-specific spatial correlations. Evaluated on the Visual Decathlon Challenge, the model achieves the highest score. The visualization results reveal that depthwise convolution captures more attributes and higher-level concepts than pointwise convolution.

References

Bengio, Y. 2012. Deep learning of representations for unsupervised and transfer learning. In Proceedings of ICML Workshop on Unsupervised and Transfer Learning, 17–36.

Bilen, H., and Vedaldi, A. 2017. Universal representations: The missing link between faces, text, planktons, and cat breeds. arXiv preprint arXiv:1701.07275.

Chollet, F. 2017. Xception: Deep learning with depthwise separable convolutions. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1800–1807. IEEE.

Doersch, C., and Zisserman, A. 2017. Multi-task self-supervised visual learning. In The IEEE International Conference on Computer Vision (ICCV).

Hu, J.; Lu, J.; and Tan, Y.-P. 2015. Deep transfer metric learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 325–333.

Kokkinos, I. 2017. Ubernet: Training a universal convolutional neural network for low-, mid-, and high-level vision using diverse datasets and limited memory. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 5454–5463. IEEE.

Pan, S. J.; Yang, Q.; et al. 2010. A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering 22(10):1345–1359.

Rebuffi, S.-A.; Bilen, H.; and Vedaldi, A. 2017. Learning multiple visual domains with residual adapters. In Advances in Neural Information Processing Systems, 506–516.

Rebuffi, S.-A.; Bilen, H.; and Vedaldi, A. 2018. Efficient parametrization of multi-domain deep neural networks. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

Rosenfeld, A., and Tsotsos, J. K. 2018. Incremental learning through deep adaptation. IEEE Transactions on Pattern Analysis and Machine Intelligence.