Countering Adversarial Images Using Input Transformations

Motivation

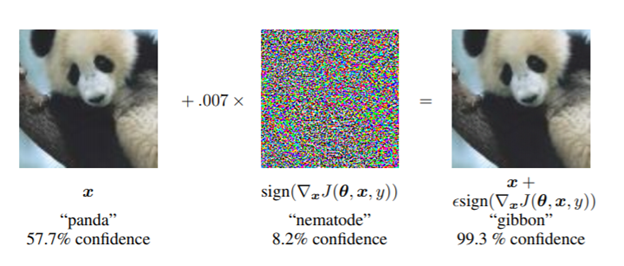

As the use of machine intelligence has increased, robustness has become a critical feature to guarantee the reliability of deployed machine-learning systems. However, recent research has shown that existing models are not robust to small, adversarially designed perturbations of the input. Adversarial examples are inputs to machine-learning models that an attacker has intentionally designed to cause the model to make a mistake. They are not specific to images; they also arise in malware detection, text understanding, and speech. In the example from Goodfellow et al., a small perturbation applied to an image of a panda changes the prediction to gibbon.

Hence there is an urgent need for defenses that increase the robustness of learning systems to such adversarial examples.

Introduction

The paper studies strategies that defend against adversarial-example attacks on image-classification systems by transforming the images before feeding them to a convolutional network classifier. Generally, defenses against adversarial examples fall into two main categories:

- Model-Specific – They enforce model properties such as smoothness and invariance via the learning algorithm.

- Model-Agnostic – They try to remove adversarial perturbations from the input.

This paper focuses on increasing the effectiveness of model-agnostic defense strategies. The following image transformation techniques are studied:

- Image Cropping and Re-scaling (Graese et al., 2016)

- Bit Depth Reduction (Xu et al., 2017)

- JPEG Compression (Dziugaite et al., 2016)

- Total Variance Minimization (Rudin et al., 1992)

- Image Quilting (Efros & Freeman, 2001)

These image transformations are evaluated against adversarial attacks such as the fast gradient sign method (Kurakin et al., 2016a), DeepFool (Moosavi-Dezfooli et al., 2016), and the Carlini & Wagner (2017) attack. The strongest defenses are based on Total Variance Minimization and Image Quilting: these defenses are non-differentiable and inherently random, which makes it difficult for an adversary to get around them.
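
To make the fast gradient sign method concrete, here is a minimal sketch of a single step on a toy logistic-regression classifier. The toy model, the weights, and the helper names `predict` and `fgsm` are assumptions made for illustration; the paper attacks convolutional networks, not this model. The step moves every input value by `eps` in the direction of the sign of the loss gradient.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(w, b, x):
    """Hard label (0 or 1) of a toy logistic-regression classifier."""
    return int(sigmoid(np.dot(w, x) + b) >= 0.5)

def fgsm(w, b, x, y, eps):
    """One FGSM step: x' = clip(x + eps * sign(dLoss/dx), 0, 1)."""
    p = sigmoid(np.dot(w, x) + b)
    # Gradient of the binary cross-entropy loss with respect to the input x.
    grad_x = (p - y) * w
    x_adv = x + eps * np.sign(grad_x)
    # Keep the perturbed input inside the valid pixel range [0, 1].
    return np.clip(x_adv, 0.0, 1.0)

# Illustrative weights and a two-"pixel" input with true label 1.
w = np.array([1.0, -1.0])
b = 0.0
x = np.array([0.6, 0.4])
y = 1

x_adv = fgsm(w, b, x, y, eps=0.2)
label_before = predict(w, b, x)
label_after = predict(w, b, x_adv)
```

With these illustrative numbers the predicted label flips even though no input value moves by more than `eps`.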

Problem Definition/Terminology

Gray-Box Attack: The model architecture and parameters are public, but the adversary is unaware of the defense strategy in use.

Black-Box Attack: The adversary does not have access to the model.

Non-Targeted Adversarial Attack: The goal of the attack is to modify the source image so that the machine-learning classifier classifies it incorrectly.

Targeted Adversarial Attack: The goal of the attack is to modify the source image so that the machine-learning classifier assigns it to a specific target class.

The paper considers non-targeted adversarial examples for image-recognition systems. Given an image space $X$, a classifier $h(\cdot)$, and a source image $x \in X$, a non-targeted adversarial example of $x$ is a perturbed image $x' \in X$ such that $h(x) \neq h(x')$. Given a set of $N$ images $\{x_1, \dots, x_N\}$ and a target classifier $h(\cdot)$, an adversarial attack aims to generate $\{x'_1, \dots, x'_N\}$ such that each $x'_n$ is an adversarial example of $x_n$.

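The success rate of an attack is the proportion of predictions that the attack changes: $\frac{1}{N}\sum_{n=1}^{N} \mathbb{I}[h(x_n) \neq h(x'_n)]$, where $\mathbb{I}[\cdot]$ is the indicator function.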
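
The success rate can be computed directly from the definition. The following is a minimal sketch, assuming the classifier is any Python callable that returns a label; the function name `attack_success_rate` and the threshold classifier used in the example are hypothetical.

```python
def attack_success_rate(classifier, originals, adversarials):
    """Fraction of inputs whose predicted label is changed by the attack."""
    if len(originals) != len(adversarials):
        raise ValueError("originals and adversarials must have the same length")
    if not originals:
        raise ValueError("at least one image is required")
    # Count the pairs for which h(x_n) != h(x'_n).
    changed = sum(
        classifier(x) != classifier(x_adv)
        for x, x_adv in zip(originals, adversarials)
    )
    return changed / len(originals)

# Toy stand-in for h: label 1 if the (scalar) input is at least 0.5, else 0.
def toy_classifier(x):
    return int(x >= 0.5)

originals = [0.2, 0.4, 0.7, 0.9]
adversarials = [0.6, 0.45, 0.4, 0.95]
rate = attack_success_rate(toy_classifier, originals, adversarials)
```

Here two of the four predictions change, so `rate` is 0.5.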