Conditional Image Synthesis with Auxiliary Classifier GANs

Abstract: "In this paper we introduce new methods for the improved training of generative adversarial networks (GANs) for image synthesis. We construct a variant of GANs employing label conditioning that results in 128×128 resolution image samples exhibiting global coherence. We expand on previous work for image quality assessment to provide two new analyses for assessing the discriminability and diversity of samples from class-conditional image synthesis models. These analyses demonstrate that high resolution samples provide class information not present in low resolution samples. Across 1000 ImageNet classes, 128×128 samples are more than twice as discriminable as artificially resized 32×32 samples. In addition, 84.7% of the classes have samples exhibiting diversity comparable to real ImageNet data." (Odena et al., 2016)

Introduction

Motivation

The authors introduce a GAN architecture for generating high resolution images from the ImageNet dataset. They show that this architecture makes it possible to split the generation process into many sub-models. They further suggest that GANs have trouble generating globally coherent images, and that this architecture is responsible for the coherence of their samples. They experimentally demonstrate that generating higher resolution images allows the model to encode more class-specific information, making the samples more visually discriminable than lower resolution images even after they have been resized to the same resolution.

The second half of the paper introduces metrics for assessing visual discriminability and diversity of synthesized images. The discussion of image diversity in particular is important due to the tendency for GANs to 'collapse' to only produce one image that best fools the discriminator (Goodfellow et al., 2014).

Previous Work

Of all image synthesis methods (e.g. variational autoencoders, autoregressive models, invertible density estimators), GANs have become one of the most popular and successful due to their flexibility and the ease with which they can be sampled from. A standard GAN framework pits a generative model $G$ against a discriminative adversary $D$. The goal of $G$ is to learn a mapping from a latent space $Z$ to a real space $X$ to produce examples (generally images) indistinguishable from training data. The goal of $D$ is to iteratively learn to predict whether a given input image comes from the training set or was synthesized by $G$. Jointly the models are trained to solve the game-theoretical minimax problem, as defined by Goodfellow et al. (2014): $$\underset{G}{\min}\,\underset{D}{\max}\,V(G,D)=\mathbb{E}_{X\sim p_{data}(x)}[\log(D(X))]+\mathbb{E}_{Z\sim p_{Z}(z)}[\log(1-D(G(Z)))]$$
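The value function above can be estimated from finite samples by replacing each expectation with a sample mean. The following is a minimal sketch under stated assumptions: the one-dimensional `toy_discriminator` and `toy_generator` are hypothetical stand-ins for the networks and are not taken from the paper.

```python
import math
import random


def value_estimate(d, g, real_samples, latent_samples):
    """Monte Carlo estimate of V(G, D): sample means replace the expectations."""
    real_term = sum(math.log(d(x)) for x in real_samples) / len(real_samples)
    fake_term = sum(math.log(1.0 - d(g(z))) for z in latent_samples) / len(latent_samples)
    return real_term + fake_term


# Hypothetical 1-D toy models, for illustration only.
def toy_discriminator(x):
    # Sigmoid centred at 1.0: larger inputs are judged more likely to be real.
    return 1.0 / (1.0 + math.exp(-(x - 1.0)))


def toy_generator(z):
    # Shifts the latent noise; its outputs sit around 0.5.
    return z + 0.5


rng = random.Random(0)
real = [rng.gauss(2.0, 0.1) for _ in range(1000)]    # "training data" near 2.0
latent = [rng.gauss(0.0, 0.1) for _ in range(1000)]  # latent noise near 0.0

v = value_estimate(toy_discriminator, toy_generator, real, latent)
# A discriminator that always answers 0.5 yields exactly -2 * log(2).
v_chance = value_estimate(lambda x: 0.5, toy_generator, real, latent)
```

In this toy setting the informative discriminator attains a higher value than the constant one, which is the direction $D$ pushes in; $G$ would be updated to push the same quantity down.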

While this initial framework has clearly demonstrated great potential, other authors have proposed changes to the method to improve it. Many such papers propose changes to the training process (Salimans et al., 2016; Karras et al., 2017), which is notoriously difficult for some problems. Others propose changes to the model itself. Mirza & Osindero (2014) augment the model by supplying the class of observations to both the generator and discriminator to produce class-conditional samples. According to van den Oord et al. (2016), conditioning image generation on classes can greatly improve the quality of the generated images. Other authors have explored using even richer side information in the generation process with good results (Reed et al., 2016).

Another model modification relevant to this paper is to force the discriminator network to reconstruct side information by adding an auxiliary network to classify generated (and real) images. The authors make the claim that forcing a model to perform additional tasks is known to improve performance on the original task (Szegedy et al., 2015; Sutskever et al., 2014; Ramsundar et al., 2015). They further suggest that using pre-trained image classifiers (rather than classifiers trained on both real and generated images) could improve results over and above what is shown in this paper.

Contributions

The contributions of this paper are in three main areas. First, the authors propose slight changes to previously existing GAN architectures, resulting in a model capable of generating samples of impressive quality. Second, the authors propose two metrics to assess the quality of samples generated from a GAN. Lastly, they present empirical results on GANs which are of some interest.

Model

The authors propose an auxiliary classifier GAN (AC-GAN), which is a slight variation on previous architectures. Like Mirza & Osindero (2014), the generator takes the image class to be generated as input in addition to the latent encoding $Z$. Like Odena (2016) and Salimans et al. (2016), the discriminator is trained not only to predict whether an observation is real or fake, but also to classify each observation. The marginal contribution of this paper is to combine these two ideas in one model.

Formally, let $C\sim p_c$ represent the target class label of each generated observation and $Z$ represent the usual noise vector from the latent space. Then the generator function takes both as arguments to produce image samples: $X_{fake}=G(c,z)$. The discriminator gives a probability distribution over the source $S$ (real or fake) of the image as well as over the class label $C$ being generated. $$D(X)=\langle P(S|X),P(C|X)\rangle$$
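One common way to obtain the two distributions in $D(X)$ is to attach two output heads to a shared network: a sigmoid for the source and a softmax for the class. The sketch below assumes this design and takes the raw head outputs (`source_logit`, `class_logits`, both hypothetical names) as given; the summary above does not specify how the paper implements the heads.

```python
import math


def discriminator_heads(source_logit, class_logits):
    """Turn raw head outputs into (P(S=real|X), P(C|X)).

    Assumes a sigmoid source head and a softmax class head.
    """
    p_real = 1.0 / (1.0 + math.exp(-source_logit))
    # Subtract the maximum logit before exponentiating for numerical stability.
    largest = max(class_logits)
    exps = [math.exp(v - largest) for v in class_logits]
    total = sum(exps)
    p_class = [e / total for e in exps]
    return p_real, p_class


# Example: an undecided source head and a class head that favours class 0.
p_real, p_class = discriminator_heads(0.0, [2.0, 0.5, -1.0])
```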

The objective function for the model thus has two parts, one corresponding to the source $L_S$ and the other to the class $L_C$. $D$ is trained to maximize $L_S + L_C$, while $G$ is trained to maximize $L_C-L_S$. Using the notation of Goodfellow et al. (2014), these are: $$L_S=\mathbb{E}_{X\sim p_{data}(x)}[\log(P(S=\text{real}|X))]+\mathbb{E}_{C,Z\sim p_{C,Z}(c,z)}[\log(P(S=\text{fake}|G(C,Z)))]$$ $$L_C=\mathbb{E}_{X\sim p_{data}(x)}[\log(P(C|X))]+\mathbb{E}_{C,Z\sim p_{C,Z}(c,z)}[\log(P(C|G(C,Z)))]$$
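To make the two objectives concrete, the sketch below evaluates $L_S$ and $L_C$ on a tiny batch of discriminator outputs, using batch means in place of the expectations. All probabilities and labels are made-up illustrative values, and the function names are hypothetical.

```python
import math


def source_log_likelihood(p_real_on_real, p_real_on_fake):
    """L_S: mean log P(S=real | real X) plus mean log P(S=fake | generated X)."""
    real_term = sum(math.log(p) for p in p_real_on_real) / len(p_real_on_real)
    fake_term = sum(math.log(1.0 - p) for p in p_real_on_fake) / len(p_real_on_fake)
    return real_term + fake_term


def class_log_likelihood(probs_real, labels_real, probs_fake, labels_fake):
    """L_C: mean log-probability of the correct class on real and generated batches."""
    real_term = sum(math.log(p[c]) for p, c in zip(probs_real, labels_real)) / len(labels_real)
    fake_term = sum(math.log(p[c]) for p, c in zip(probs_fake, labels_fake)) / len(labels_fake)
    return real_term + fake_term


# Made-up discriminator outputs for two real and two generated images.
p_real_on_real = [0.9, 0.8]   # P(S=real | X) on real images
p_real_on_fake = [0.2, 0.3]   # P(S=real | X) on generated images
probs_real = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
labels_real = [0, 1]
probs_fake = [[0.6, 0.3, 0.1], [0.2, 0.2, 0.6]]
labels_fake = [0, 2]          # classes the generator was asked to produce

l_s = source_log_likelihood(p_real_on_real, p_real_on_fake)
l_c = class_log_likelihood(probs_real, labels_real, probs_fake, labels_fake)

d_objective = l_s + l_c  # maximized by D
g_objective = l_c - l_s  # maximized by G
```

Both players benefit from a larger $L_C$, so the class term is cooperative; only the source term $L_S$ is adversarial.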

Because $G$ accepts both $C$ and $Z$ as arguments, it is able to learn a mapping $Z\rightarrow X$ that is independent of $C$. The authors argue that all class-specific information should be represented by $C$, allowing $Z$ to represent other factors such as pose, background, etc.

Lastly the authors split the generation process into many class-specific submodels. They point out that the structure of their model permits this split, though it should technically be possible for even the standard GAN framework by dividing the training data into groups according to their known class labels.

The changes above result in a model capable of generating (some) image samples with both high resolution and global coherence.

GAN Quality Metrics

A much larger part of the authors' contributions in this paper is in the area of measuring the quality of a GAN's output. As they say, evaluating a generative model's quality is difficult due to a large number of probabilistic measures (such as average log-likelihood, Parzen window estimates, and visual fidelity (Theis et al., 2015)) and "a lack of a perceptually meaningful image similarity metric".

Image Discriminability Metric

The authors develop two metrics in this paper to address these shortcomings. The first of these is a discriminability metric, the goal of which is to assess the degree to which generated images are identifiable as the class they are meant to represent. Ideally a team of non-expert humans could handle this, but the difficulty of such an approach makes the need for an automated metric apparent. The metric proposed by the authors is the accuracy, on generated images, of an image classifier pre-trained on the pristine training data. For this they select a modified version of Inception-v3 (Szegedy et al., 2015).

Other metrics already exist for assessing image quality, the most popular of which is probably the Inception Score (Salimans et al., 2016). The authors list two main advantages of their approach over the Inception Score. The first is that accuracy figures are easier to interpret than the Inception Score, which is fairly self-evident. The second advantage of using Inception accuracy instead of Inception Score is that Inception accuracy may be calculated for individual classes, giving a better picture of where the model is strong and where it is weak.
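The per-class breakdown is simple to compute once a classifier has labelled the generated samples. The sketch below assumes the predictions are already available as a list; the `discriminability` helper and the class names are hypothetical, and any pre-trained classifier could supply `predicted`.

```python
from collections import defaultdict


def discriminability(intended_labels, predicted_labels):
    """Return (overall accuracy, per-class accuracy) of classifier predictions.

    intended_labels: the class each sample was generated to represent.
    predicted_labels: the class a pre-trained classifier assigned to it.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for intended, predicted in zip(intended_labels, predicted_labels):
        total[intended] += 1
        if predicted == intended:
            correct[intended] += 1
    per_class = {label: correct[label] / total[label] for label in total}
    overall = sum(correct.values()) / sum(total.values())
    return overall, per_class


# Made-up example: the generator's goldfish are recognisable, its daisies less so.
intended = ["daisy", "daisy", "goldfish", "goldfish", "goldfish"]
predicted = ["daisy", "tulip", "goldfish", "goldfish", "goldfish"]
overall, per_class = discriminability(intended, predicted)
```

Here the overall accuracy hides the fact that one class is recognised only half of the time, which is the kind of weakness the per-class view exposes.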

Image Diversity Metric

The second metric proposed by the authors measures the diversity of the generated images. As mentioned above, image diversity is an important quality in a GAN. A common failure mode is 'collapsing', where the generator learns to output only one image that is good at fooling the discriminator (Goodfellow et al., 2014; Salimans et al., 2016). The metric proposed in this section is intended to be complementary to the Inception accuracy, as Inception accuracy would not detect generator collapse.

For their diversity metric, the authors co-opt a different metric used to measure the similarity between two images: multiscale structural similarity (MS-SSIM) (Wang et al., 2003). The authors do not go into detail about how the MS-SSIM measure is calculated, except to say that it is one of the more successful ways to predict human perceptual similarity judgements. It can take values on the interval $[0,1]$, and higher values indicate that the two images being compared are perceptually more similar. For images generated from a GAN, then, the metric should ideally be low.

The authors' contribution is to use this metric to assess the diversity of a GAN's output. MS-SSIM is a pairwise comparison between two images, so their solution is to compute it for 100 randomly chosen pairs of images from each class and take the mean MS-SSIM score.
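The averaging step can be sketched independently of how MS-SSIM itself is computed. In the sketch below, `toy_similarity` is a hypothetical stand-in for MS-SSIM (it is not MS-SSIM), images are flat lists of pixels in $[0,1]$, and whether every pair or only a random subset is scored is left as the `num_pairs` parameter.

```python
import itertools
import random


def mean_pairwise_score(images, score_fn, num_pairs=None, seed=0):
    """Average score_fn over pairs of distinct images from one class.

    If num_pairs is None every unordered pair is scored; otherwise a random
    subset of that many pairs is used.
    """
    if len(images) < 2:
        raise ValueError("need at least two images to form a pair")
    pairs = list(itertools.combinations(range(len(images)), 2))
    if num_pairs is not None and num_pairs < len(pairs):
        pairs = random.Random(seed).sample(pairs, num_pairs)
    scores = [score_fn(images[i], images[j]) for i, j in pairs]
    return sum(scores) / len(scores)


def toy_similarity(a, b):
    """Hypothetical stand-in for MS-SSIM: 1 minus the mean absolute pixel difference."""
    return 1.0 - sum(abs(p - q) for p, q in zip(a, b)) / len(a)


collapsed = [[0.5, 0.5]] * 4  # generator that always emits the same image
diverse = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0], [1.0, 0.0]]

collapsed_score = mean_pairwise_score(collapsed, toy_similarity)
diverse_score = mean_pairwise_score(diverse, toy_similarity)
```

A collapsed generator scores the maximum similarity of 1, while the varied set scores much lower, which matches the reading that a lower mean score indicates more diversity.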

The authors make two points about their use of this metric. First, the way they apply the metric is different from how it was originally intended to be used. It is possible that it will not behave as desired because of this. As evidence to the contrary, they state that:

- Visually the metric seems to work. Pairs with high MS-SSIM seem more similar.

- Comparisons are only made between images in the same class, keeping their application of the metric closer to its original use case of measuring the quality of compression algorithms.

- The metric is not saturated. Scores on their generated data vary across the unit interval. If scores were all very close to zero the metric would not be much use.

The second point they raise is that the mean MS-SSIM metric is not intended as a proxy for entropy of the generator distribution in pixel space. That measure is hard to compute, and in any case is sensitive to trivial changes in the pixels, whereas the true intention of this metric is to measure perceptual similarity.

Experimental Results on GAN Properties

The authors conduct several experiments on their model and proposed metrics. These are summarized in this section.

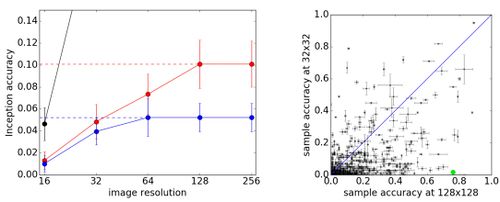

Higher Resolution Images are more Discriminable

As this is one of the paper's main attractions, the authors investigate how generating samples at different resolutions affects their discriminability. To this end, two models are trained, one that generates 64×64 resolution images and one that generates 128×128 resolution images. The generated images can then be rescaled using bilinear interpolation to make them directly comparable. The authors find that the 128×128 AC-GAN achieves, on average, higher discriminability (per the Inception accuracy metric introduced above) at all resized resolutions.
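For readers unfamiliar with the rescaling step, the following is a minimal sketch of bilinear interpolation on a single-channel image stored as a list of rows. It assumes the convention in which the corner pixels of input and output coincide; the paper's exact resizing settings are not given in this summary, and library implementations may use a different convention.

```python
def bilinear_resize(image, out_h, out_w):
    """Resize a 2-D grayscale image (list of rows) using bilinear interpolation.

    Assumes corner-aligned sampling: output corners map onto input corners.
    """
    in_h, in_w = len(image), len(image[0])
    resized = []
    for r in range(out_h):
        # Fractional source row for this output row.
        y = r * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        wy = y - y0
        row = []
        for c in range(out_w):
            # Fractional source column for this output column.
            x = c * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            wx = x - x0
            # Interpolate along the row direction first, then between rows.
            top = image[y0][x0] * (1.0 - wx) + image[y0][x1] * wx
            bottom = image[y1][x0] * (1.0 - wx) + image[y1][x1] * wx
            row.append(top * (1.0 - wy) + bottom * wy)
        resized.append(row)
    return resized


small = [[0.0, 1.0],
         [2.0, 3.0]]
up = bilinear_resize(small, 3, 3)
```

Note that shrinking an image this way performs no low-pass filtering beforehand, so a production pipeline would usually rely on a library routine instead.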

Generated Images are both Diverse and Discriminable

Effect of Class Splits on Image Sample Quality

Results

Critique

Model

The AC-GAN is not very different from other GANs. The paper makes some unsupported claims, for example about stabilizing training. The objective has no hyperparameter to balance classification against discrimination. The authors also never test or show whether their architecture is better than others.

Odena et al. (2016) argue that the class conditional generator allows $G$ to learn a representation of $Z$ independent of $C$ in section 3, and give evidence of the claim later in section 4.5 by showing that images generated with a fixed latent vector $z$ but different class labels $c$ have similar global structure (e.g. orientation of the subject) but the subjects (bird species) vary according to the label. Interestingly, the background (especially in the top row) also varies with the class label. This can possibly be attributed to the bird species coming from different areas, hence a seagull might be expected to have an ocean background. Clearly here the model benefits from the fact that the authors grouped similar classes together. A more interesting analysis might show the same comparison between different classes, such as birds and forklifts, to see how global structure is encoded across them.

GAN Quality Metrics

The Inception accuracy metric is only possible in a conditional GAN setting, since the standard GAN framework has no ground-truth labels for generated samples. However, the advantages the authors list do have merit.

Experiments

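In their discussion of overfitting, the authors argue that the model does not overfit because the nearest neighbours of generated samples in the training data, measured by L1 distance in pixel space, do not look similar to those samples.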
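The nearest-neighbour check itself is straightforward, which is part of why it is weak evidence: pixel-space L1 distance is sensitive to small shifts, so a memorized but slightly translated image could still look far away. A minimal sketch, assuming images are flattened to lists of pixel values and using hypothetical helper names:

```python
def l1_distance(a, b):
    """Sum of absolute pixel differences between two flattened images."""
    return sum(abs(p - q) for p, q in zip(a, b))


def nearest_training_neighbour(generated, training_set):
    """Return (index, distance) of the training image closest to `generated` under L1."""
    best_index = min(
        range(len(training_set)),
        key=lambda i: l1_distance(generated, training_set[i]),
    )
    return best_index, l1_distance(generated, training_set[best_index])


# Made-up three-pixel "images".
training = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [0.5, 0.5, 0.5]]
sample = [0.9, 1.0, 0.8]
index, distance = nearest_training_neighbour(sample, training)
```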

Conclusion

References

- Odena, A., Olah, C., & Shlens, J. (2016). Conditional image synthesis with auxiliary classifier GANs. arXiv preprint arXiv:1610.09585.

- Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., ... & Bengio, Y. (2014). Generative adversarial nets. In Advances in neural information processing systems (pp. 2672-2680).

- Salimans, T., Goodfellow, I., Zaremba, W., Cheung, V., Radford, A., & Chen, X. (2016). Improved techniques for training GANs. In Advances in Neural Information Processing Systems (pp. 2234-2242).

- Karras, T., Aila, T., Laine, S., & Lehtinen, J. (2017). Progressive Growing of GANs for Improved Quality, Stability, and Variation. arXiv preprint arXiv:1710.10196.

- Mirza, M., & Osindero, S. (2014). Conditional generative adversarial nets. arXiv preprint arXiv:1411.1784.

- van den Oord, A., Kalchbrenner, N., Espeholt, L., Vinyals, O., & Graves, A. (2016). Conditional image generation with PixelCNN decoders. In Advances in Neural Information Processing Systems (pp. 4790-4798).

- Reed, S. E., Akata, Z., Mohan, S., Tenka, S., Schiele, B., & Lee, H. (2016). Learning what and where to draw. In Advances in Neural Information Processing Systems (pp. 217-225).

- Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., ... & Rabinovich, A. (2015). Going deeper with convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1-9).

- Sutskever, I., Vinyals, O., & Le, Q. V. (2014). Sequence to sequence learning with neural networks. In Advances in neural information processing systems (pp. 3104-3112).

- Ramsundar, B., Kearnes, S., Riley, P., Webster, D., Konerding, D., & Pande, V. (2015). Massively multitask networks for drug discovery. arXiv preprint arXiv:1502.02072.

- Odena, A. (2016). Semi-supervised learning with generative adversarial networks. arXiv preprint arXiv:1606.01583.

- Theis, L., Oord, A. V. D., & Bethge, M. (2015). A note on the evaluation of generative models. arXiv preprint arXiv:1511.01844.

- Wang, Z., Simoncelli, E. P., & Bovik, A. C. (2003, November). Multiscale structural similarity for image quality assessment. In Signals, Systems and Computers, 2004. Conference Record of the Thirty-Seventh Asilomar Conference on (Vol. 2, pp. 1398-1402). IEEE.