Breaking Certified Defenses: Semantic Adversarial Examples With Spoofed Robustness Certificates

Presented By

Gaurav Sikri

Background

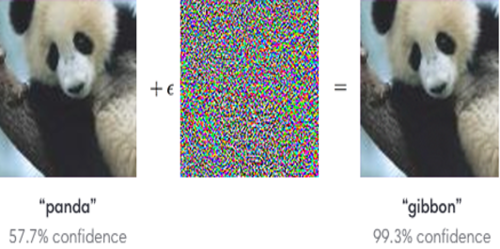

Adversarial examples are inputs to a machine learning model, such as a deep neural network, that an attacker intentionally designs to make the model produce a wrong prediction. A common way to craft one is to add a small, carefully chosen perturbation (noise) to an original image. The perturbed image looks essentially unchanged to a human, but the network no longer recognizes it correctly and misclassifies it.
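To make "adding a little noise" concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM). FGSM is a standard gradient-based attack and is not the semantic attack discussed in this presentation. The "model" is a hypothetical four-pixel logistic-regression classifier. The weights `w`, bias `b`, input `x`, and budget `eps = 0.1` are made-up values chosen only for illustration. Each pixel is shifted by at most `eps` in the direction that increases the loss for the true label, and the result is clipped to the valid pixel range [0, 1].

```python
import math


def predict_prob(w, b, x):
    """Probability of class 1 under a toy logistic-regression model (assumed)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def predict_label(w, b, x):
    """Hard label: 1 if the class-1 probability exceeds 0.5, else 0."""
    return 1 if predict_prob(w, b, x) > 0.5 else 0


def fgsm(w, b, x, y, eps):
    """FGSM: step each pixel by eps along the sign of the loss gradient.

    For logistic regression with binary cross-entropy loss, the gradient of
    the loss with respect to input pixel i is (p - y) * w_i.
    """
    p = predict_prob(w, b, x)
    grad = [(p - y) * wi for wi in w]
    sign = lambda g: (g > 0) - (g < 0)
    # Perturb, then clip so every value stays a valid pixel intensity in [0, 1].
    return [min(1.0, max(0.0, xi + eps * sign(gi))) for xi, gi in zip(x, grad)]


# Hypothetical model and "image" (four pixel intensities in [0, 1]).
w = [1.0, -2.0, 0.5, 3.0]
b = 0.0
x = [0.2, 0.1, 0.4, 0.05]
y = 1  # true label

x_adv = fgsm(w, b, x, y, eps=0.1)

print("clean label:", predict_label(w, b, x))            # clean label: 1
print("adversarial label:", predict_label(w, b, x_adv))  # adversarial label: 0
print("max pixel change:", max(abs(a - c) for a, c in zip(x_adv, x)))
```

No pixel moves by more than 0.1, yet the predicted label flips from 1 to 0. This small, bounded change is exactly the kind of perturbation that certified defenses try to rule out.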