Breaking Certified Defenses: Semantic Adversarial Examples With Spoofed Robustness Certificates

No edit summary |

No edit summary |

||

| Line 4: | Line 4: | ||

== Background == | == Background == | ||

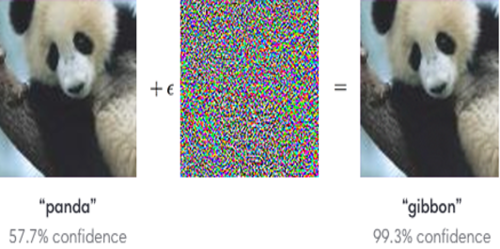

Adversarial examples are inputs to machine learning or deep neural network models that an attacker intentionally designs to deceive the model or to cause the model to make a wrong prediction. This is done by adding a little noise to the original image or perturbing an original image and creating an image that is not identified by the network and the model misclassifies the new image. | Adversarial examples are inputs to machine learning or deep neural network models that an attacker intentionally designs to deceive the model or to cause the model to make a wrong prediction. This is done by adding a little noise to the original image or perturbing an original image and creating an image that is not identified by the network and the model misclassifies the new image. The following image describes an adversarial attack where a model is deceived by an attacker by adding small noise to an input image and as a result, the prediction of the model changed. | ||

[[File:adversarial_example.png|500px|Image: 500 pixels]] | [[File:adversarial_example.png|500px|Image: 500 pixels]] | ||

The impacts of adversarial attacks can be life-threatening in the real world. Consider the case of driverless cars where the model installed in a car is trying to read a STOP sign on the road. However, if the STOP sign is replaced by an adversarial image of the original image, and if that new image is able to fool the model to not make a decision to stop, it can lead to an accident. Hence it becomes really important to design the classifiers such that these classifiers are immune to such adversarial attacks. | |||

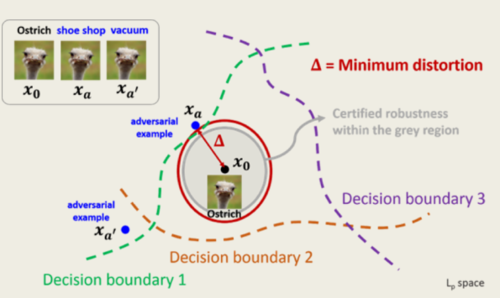

While training a deep network, the network is trained on a set of augmented images as well along with the original images. For any given image, there are multiple augmented images created and passed to the network to ensure that a model is able to learn from the augmented images as well. During the validation phase, after labeling an image, the defenses check whether there exists an image of a different label within a region of a certain unit radius of the input. If the classifier assigns all images within the specified region ball the same class label, then a certificate is issued. This certificate ensures that the model is protected from adversarial attacks and is called Certified Defense. The image below shows a certified region (in red) | |||

[[File:certified_defense.png|500px|Image: 500 pixels]] | |||

Revision as of 20:42, 8 November 2020

Presented By

Gaurav Sikri

Background

Adversarial examples are inputs to machine learning models, such as deep neural networks, that an attacker intentionally designs to make the model produce a wrong prediction. They are typically created by adding a small amount of noise to an original image, which yields a perturbed image that the model misclassifies. The following image shows such an attack: an attacker adds small noise to an input image and, as a result, the model's prediction changes.

[Figure: adversarial_example.png]
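The perturbation described above can be sketched on a toy model. The snippet below is a minimal illustration, not the attack studied in the paper: it assumes a hypothetical binary linear classifier (`w`, `b`) and uses a sign-of-gradient step in the style of the fast gradient sign method, a technique the text above does not name. All names and numbers are made up for illustration.

```python
import numpy as np

def predict(w, b, x):
    """Hypothetical binary linear classifier: 1 if w.x + b > 0, else 0."""
    return int(np.dot(w, x) + b > 0)

def sign_perturbation(w, x, label, eps):
    """Shift every coordinate of x by eps in the direction that lowers the score of `label`."""
    # For a linear score w.x + b, the gradient with respect to x is simply w.
    direction = -np.sign(w) if label == 1 else np.sign(w)
    return x + eps * direction

# Illustrative weights and input (assumptions, not values from the paper).
w = np.array([1.0, -2.0, 0.5])
b = 0.0
x = np.array([0.3, 0.1, 0.2])

original_label = predict(w, b, x)
x_adv = sign_perturbation(w, x, original_label, eps=0.1)

print("original prediction:   ", original_label)
print("adversarial prediction:", predict(w, b, x_adv))
print("largest coordinate change:", np.max(np.abs(x_adv - x)))
```

Each coordinate moves by only `eps`, yet the predicted class flips, which is the behaviour the paragraph above describes for images.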

Adversarial attacks can be life-threatening in the real world. Consider a driverless car whose model is trying to read a STOP sign on the road. If the STOP sign is replaced by an adversarial version of it, and that image fools the model into not deciding to stop, an accident can result. It is therefore important to design classifiers that are robust to such attacks.

A deep network is usually trained on augmented images in addition to the originals: for each image, multiple augmented copies are created and passed to the network so that the model learns from them as well. At validation time, after labeling an input image, a certified defense checks whether any image with a different label exists within a ball of a given radius around the input. If the classifier assigns the same class label to every image inside that ball, a certificate is issued. The certificate guarantees that no perturbation within that radius changes the prediction, and a defense that issues such certificates is called a certified defense. The image below shows a certified region (in red).

[Figure: certified_defense.png]
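The certificate check can be made concrete in the one setting where it is exact and easy to compute. The sketch below assumes a hypothetical binary linear classifier and an L2 ball. For such a model, the distance from an input to the decision boundary is |w.x + b| / ||w||, so a certificate for a given radius can be issued exactly when the radius is smaller than that distance. Certified defenses for deep networks rely on other techniques that the text above does not specify. The function names and numbers here are illustrative assumptions.

```python
import numpy as np

def certified_radius(w, b, x):
    """L2 distance from x to the decision boundary w.x + b = 0 of a linear classifier."""
    return abs(np.dot(w, x) + b) / np.linalg.norm(w)

def issue_certificate(w, b, x, radius):
    """True if every point within `radius` (L2 norm) of x receives the same label as x."""
    return radius < certified_radius(w, b, x)

# Illustrative classifier and input (assumptions, not values from the paper).
w = np.array([3.0, 4.0])   # L2 norm of w is 5
b = 0.0
x = np.array([1.0, 1.0])   # score is 7, so the boundary is at distance 7 / 5

print("distance to boundary:", certified_radius(w, b, x))
print("certificate at radius 1.0:", issue_certificate(w, b, x, 1.0))
print("certificate at radius 2.0:", issue_certificate(w, b, x, 2.0))
```

A certificate of this kind only covers perturbations inside the chosen norm ball. It says nothing about inputs that lie outside that ball.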