Adversarial Attacks on Copyright Detection Systems

Presented by

Luwen Chang, Qingyang Yu, Tao Kong, Tianrong Sun

1. Introduction

Copyright detection systems are among the most widely used machine learning systems; however, the robustness of copyright detection and content control systems to adversarial attacks (inputs intentionally designed to cause a model to make a mistake) has not been widely addressed publicly. Copyright detection systems are vulnerable to attacks for three reasons.

1. Unlike physical-world attacks, where adversarial samples must survive varying conditions such as resolution and viewing angle, digital files can be uploaded directly to the web without passing through a camera or microphone.

2. The detection problem is open-set: an uploaded file may not correspond to any existing class. Most files uploaded today are not protected, so the system must avoid preventing people from uploading unprotected audio/video while still flagging protected content.

3. The detection system needs to handle a vast amount of content with different labels but similar features. For example, in the ImageNet classification task, a system is easily attacked when two cats/dogs/birds are highly similar but belong to different classes.

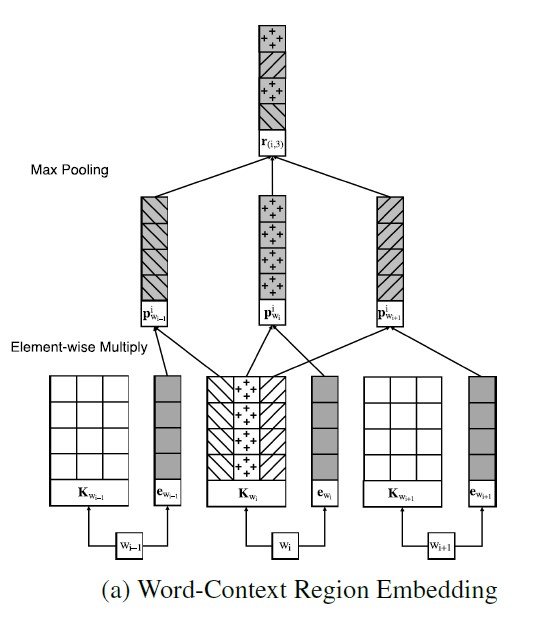

3.2. Interpreting the fingerprint extractor as a CNN

The generic neural network model consists of two convolutional layers and a max-pooling layer, depicted in the figure below. As mentioned above, convolutional neural networks are well known for their temporal locality and translation invariance. The purpose of this network is to generate audio fingerprints: features that uniquely identify a signal regardless of the starting and ending times of the input.

3.3. Formulating the adversarial loss function

In the previous section, the CNN used local maxima of the spectrogram to generate fingerprints, but no loss has yet been defined to quantify how similar two fingerprints are. Once such a loss is defined, standard gradient methods can be used to find a perturbation $\delta$ that, when added to a signal, tricks the copyright detection system. In addition, a bound is imposed so that the adversarial example stays close to the original audio signal. $$\text{bound:}\ ||\delta||_p\le\epsilon$$

where $||\delta||_p$ is the $l_p$-norm of the perturbation and $\epsilon$ bounds the difference between the original file and the adversarial example.

To compare how similar two binary fingerprints are, the Hamming distance is employed. The Hamming distance between two equal-length strings is the number of positions at which their digits differ. For example, the Hamming distance between 101100 and 100110 is 2.
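The Hamming distance example above can be checked with a few lines of Python. This is a minimal sketch; the function name `hamming_distance` is chosen here for illustration and does not come from the paper.

```python
def hamming_distance(a: str, b: str) -> int:
    """Count the positions at which two equal-length strings differ."""
    if len(a) != len(b):
        raise ValueError("inputs must have equal length")
    return sum(ca != cb for ca, cb in zip(a, b))


# The two strings differ in the third and fifth positions.
print(hamming_distance("101100", "100110"))  # 2
```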

Let $\psi(x)$ and $\psi(y)$ be two binary fingerprints output by the model. The number of peaks shared by $x$ and $y$ is then $|\psi(x)\cdot\psi(y)|$. This count is not differentiable, so to obtain a differentiable loss function the binary map is weighted by $\phi(x)$, the real-valued spectrogram representation from which the peaks are extracted: $$J(x,y)=|\phi(x)\cdot\psi(x)\cdot\psi(y)|$$
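As a rough illustration of these two quantities, the sketch below works on one-dimensional toy arrays rather than real spectrograms. It assumes that $\phi$ is a real-valued array, that $\psi$ marks positions that are the maximum of a small window, and that $|\cdot|$ is read as the sum of absolute entries of the elementwise product. The helper `peak_map` and the toy values are hypothetical and not taken from the paper.

```python
import numpy as np


def peak_map(phi, radius=1):
    """Binary map: 1 where phi is the maximum of its (2*radius+1)-wide window."""
    phi = np.asarray(phi, dtype=float)
    n = phi.shape[0]
    # Pad with -inf so that border positions only compare against real values.
    padded = np.pad(phi, radius, constant_values=-np.inf)
    windows = np.stack([padded[k:k + n] for k in range(2 * radius + 1)])
    return (phi >= windows.max(axis=0)).astype(float)


# Toy stand-ins for phi(x) and phi(y).
phi_x = np.array([0.1, 0.9, 0.2, 0.3, 0.8, 0.1])
phi_y = np.array([0.2, 0.7, 0.1, 0.6, 0.2, 0.1])

psi_x = peak_map(phi_x)  # peaks at indices 1 and 4
psi_y = peak_map(phi_y)  # peaks at indices 1 and 3

shared_peaks = int(np.sum(psi_x * psi_y))                      # |psi(x) . psi(y)|
surrogate_loss = float(np.sum(np.abs(phi_x * psi_x * psi_y)))  # |phi(x) . psi(x) . psi(y)|

print(shared_peaks, surrogate_loss)
```

Only index 1 is a peak in both arrays, so the count is 1 and the surrogate loss equals the value of `phi_x` at that shared peak.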

This loss is effective for white-box attacks, where the fingerprinting system is known. However, it can be minimized simply by shifting the peak locations by one pixel, and such minimal changes are unlikely to transfer reliably to black-box industrial systems. To make the attack more transferable, a new loss function is proposed that forces larger movements of the local maxima of the spectrogram. The idea is to move the peaks of $\phi(x)$ outside the neighborhoods of the peaks of $\phi(y)$. To implement this efficiently, two max-pooling layers are used: one with a larger width $w_1$ and one with a smaller width $w_2$. At any location, if the output of the $w_1$ pooling is strictly greater than the output of the $w_2$ pooling, then there is no peak within radius $w_2$ of that location.

The loss function is as follows:

$$J(x,y) = \sum_i\bigg(ReLU\bigg(c-\bigg(\underset{|j| \leq w_1}{\max}\phi(i+j;x)-\underset{|j| \leq w_2}{\max}\phi(i+j;x)\bigg)\bigg)\cdot\psi(i;y)\bigg)$$ This loss penalizes peaks of $x$ that lie within radius $w_2$ of a peak of $y$. $ReLU$ is the activation function, so a location stops contributing once the difference between the two pooled outputs reaches $c$; $c$ is the margin required on the difference between the outputs of the two max-pooling layers.
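The following sketch evaluates this loss on one-dimensional toy arrays, using hard max-pooling. The function names, the array values, and the settings $w_1=2$, $w_2=1$, $c=0.1$ are assumptions made for illustration only.

```python
import numpy as np


def max_pool_1d(v, radius):
    """Max over the window |j| <= radius around every index (stride 1)."""
    v = np.asarray(v, dtype=float)
    n = v.shape[0]
    padded = np.pad(v, radius, constant_values=-np.inf)
    return np.stack([padded[k:k + n] for k in range(2 * radius + 1)]).max(axis=0)


def transfer_loss(phi_x, psi_y, w1, w2, c):
    """Sum over i of ReLU(c - (wide max - narrow max)) * psi(i; y)."""
    gap = max_pool_1d(phi_x, w1) - max_pool_1d(phi_x, w2)
    return float(np.sum(np.maximum(c - gap, 0.0) * psi_y))


psi_y = np.array([0, 0, 0, 1, 0, 0, 0], dtype=float)      # y has a peak at index 3
phi_same = np.array([0.1, 0.2, 0.1, 0.9, 0.1, 0.2, 0.1])  # x peaks at index 3 too
phi_moved = np.array([0.1, 0.2, 0.1, 0.2, 0.1, 0.9, 0.1]) # x peak moved to index 5

loss_same = transfer_loss(phi_same, psi_y, w1=2, w2=1, c=0.1)
loss_moved = transfer_loss(phi_moved, psi_y, w1=2, w2=1, c=0.1)
print(loss_same, loss_moved)
```

When the peak of `phi_same` coincides with the peak of $y$, both pooled outputs agree and the full margin $c$ is paid. Once the peak sits outside the radius-$w_2$ window but inside the radius-$w_1$ window, the gap exceeds $c$ and the loss drops to zero.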

Lastly, the maximum operator is replaced by a smoothed max function: $$S_\alpha(x_1,x_2,...,x_n) = \frac{\sum_{i=1}^{n}x_ie^{\alpha x_i}}{\sum_{i=1}^{n}e^{\alpha x_i}}$$ where $\alpha$ is a smoothing hyperparameter. As $\alpha$ approaches positive infinity, $S_\alpha$ approaches the actual max function.
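A small numerical sketch of $S_\alpha$ shows this limiting behavior. The function name `smooth_max` and the sample values are illustrative; subtracting the largest exponent before exponentiating is a standard stability trick that leaves the ratio unchanged.

```python
import numpy as np


def smooth_max(x, alpha):
    """Weighted average of x with weights proportional to exp(alpha * x)."""
    x = np.asarray(x, dtype=float)
    z = alpha * x
    w = np.exp(z - z.max())  # shift exponents; numerator and denominator scale equally
    return float(np.sum(x * w) / np.sum(w))


values = [0.1, 0.5, 0.9]
for alpha in (0.0, 1.0, 10.0, 100.0):
    print(alpha, smooth_max(values, alpha))
```

With $\alpha = 0$ the function returns the plain mean of the inputs, and the output moves toward the largest input, 0.9, as $\alpha$ grows.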

To summarize, the optimization problem can be formulated as follows:

$$\underset{\delta}{\min}\ J(x+\delta,x) \quad \text{s.t.}\quad ||\delta||_{\infty}\le\epsilon$$ where $x$ is the input signal, $\delta$ is the perturbation, and $J$ is the loss function with the smoothed max function.
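One common way to handle an $l_\infty$ constraint of this kind is projected signed-gradient descent, in which each step is followed by clipping $\delta$ back into $[-\epsilon, \epsilon]$. The sketch below is an assumption about how such a loop could look, not the procedure used in the paper. A simple quadratic loss with a known gradient stands in for $J$, and all names and values are hypothetical.

```python
import numpy as np


def projected_sign_descent(x, grad_fn, eps, step, n_iter):
    """Minimize a loss over delta subject to ||delta||_inf <= eps."""
    delta = np.zeros_like(x)
    for _ in range(n_iter):
        g = grad_fn(x + delta)
        delta = delta - step * np.sign(g)  # signed gradient step
        delta = np.clip(delta, -eps, eps)  # projection onto the l_inf ball
    return delta


# Toy stand-in for J: 0.5 * ||z - target||^2, whose gradient is z - target.
target = np.array([1.0, 1.0, 0.0])


def toy_loss(z):
    return 0.5 * float(np.sum((z - target) ** 2))


def toy_grad(z):
    return z - target


x = np.array([0.0, 1.0, 2.0])
eps = 0.3
delta = projected_sign_descent(x, toy_grad, eps=eps, step=0.1, n_iter=10)
print(delta, toy_loss(x), toy_loss(x + delta))
```

In this toy setting the perturbation saturates at $\pm\epsilon$ in the coordinates where the gradient is nonzero, and the loss decreases while the constraint is respected.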

5. Conclusion

In conclusion, many industrial copyright detection systems used by popular video and music websites, such as YouTube and AudioTag, are significantly vulnerable to adversarial attacks established in the existing literature. By building a simple music identification system resembling Shazam's with a neural network and attacking it with a well-known gradient method, the paper demonstrates the lack of robustness of current online detectors. The intention of the paper is to raise awareness of the vulnerability of current online systems to adversarial attacks and to emphasize the importance of strengthening copyright detection systems. Further approaches, such as adversarial training, need to be developed and examined in order to protect against the threat of adversarial copyright attacks.