ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

Presented by

Maziar Dadbin

Introduction

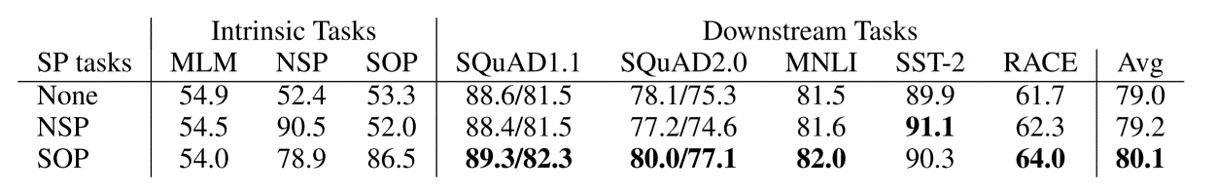

In this paper, the authors make several changes to the BERT model; the result is ALBERT, a model that outperforms BERT on the GLUE, SQuAD, and RACE benchmarks. Notably, ALBERT has fewer parameters than BERT-large yet achieves better results. Two of the changes are parameter-reduction techniques: factorized embedding parameterization and cross-layer parameter sharing. The authors also introduce a new loss function, sentence-order prediction (SOP), which replaces one of the loss functions used in BERT, next sentence prediction (NSP). The final change is the removal of dropout from the model.
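To give a rough sense of why the two parameter-reduction techniques help, the following is a minimal back-of-the-envelope sketch, not the authors' implementation. The function names are hypothetical, and the sizes used (a vocabulary of 30,000 tokens, hidden size 1024, embedding size 128, 24 layers) are illustrative assumptions. The per-layer count of `12 * hidden_size ** 2` is also an approximation: it counts only the four attention projection matrices and a feed-forward block with an intermediate size of four times the hidden size, ignoring biases and layer normalization.

```python
def embedding_params(vocab_size: int, hidden_size: int) -> int:
    """Parameters in a single V x H embedding matrix (BERT-style)."""
    return vocab_size * hidden_size


def factorized_embedding_params(
    vocab_size: int, embedding_size: int, hidden_size: int
) -> int:
    """Parameters when the embedding is split into V x E and E x H matrices."""
    return vocab_size * embedding_size + embedding_size * hidden_size


def encoder_params(hidden_size: int, num_layers: int, shared: bool) -> int:
    """Approximate encoder weight count (assumption: 12 * H^2 per layer).

    4 * H^2 for the query, key, value, and output projections, plus
    8 * H^2 for a feed-forward block with intermediate size 4 * H.
    With cross-layer sharing, one set of layer weights is reused by
    every layer, so the count does not grow with num_layers.
    """
    per_layer = 12 * hidden_size ** 2
    return per_layer if shared else per_layer * num_layers


if __name__ == "__main__":
    # Illustrative sizes only (assumptions, see the paragraph above).
    V, H, E, L = 30_000, 1024, 128, 24

    print("embedding, unfactorized:", embedding_params(V, H))
    print("embedding, factorized:  ", factorized_embedding_params(V, E, H))
    print("encoder, no sharing:    ", encoder_params(H, L, shared=False))
    print("encoder, shared:        ", encoder_params(H, L, shared=True))
```

Under these assumed sizes, factorization shrinks the embedding from about 30.7 million to about 4.0 million parameters, and sharing shrinks the approximate encoder weight count by a factor equal to the number of layers. Note that sharing reduces the number of stored parameters, not the amount of computation, since every layer is still executed.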