ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

Presented by

Maziar Dadbin

Introduction

In this paper, the authors modify BERT to obtain ALBERT, a model that outperforms BERT on the GLUE, SQuAD, and RACE benchmarks. Notably, ALBERT has fewer parameters than BERT-large yet still produces better results. Two of the changes are parameter-reduction techniques: factorized embedding parameterization and cross-layer parameter sharing. The authors also introduce a new loss function that replaces one of BERT's losses, next-sentence prediction (NSP). The last change is removing dropout from the model.

Motivation

In natural language representation learning, larger models often perform better. For example, BERT_large performs better than BERT_base: in the BERT paper, the two models improve average accuracy over the prior state of the art by 7.0% and 4.5%, respectively. However, at some point, GPU/TPU memory and training time constraints limit our ability to increase the model size any further. There have been attempts to reduce memory consumption, but at the cost of speed. For example, Chen et al. (2016)[1] use gradient checkpointing, which reduces memory requirements at the cost of an extra forward pass. Gomez et al. (2017)[2] reconstruct each layer's activations from those of the next layer, so the activations do not need to be stored, which frees up memory. In addition, Raffel et al. (2019)[3] use model parallelism to train a massive model. The authors of this paper claim that their parameter-reduction techniques both reduce memory consumption and increase training speed.

Model details

The fundamental structure of ALBERT is the same as that of BERT, i.e., it uses a transformer encoder with GELU nonlinearities. The authors set the feed-forward/filter size to 4*H and the number of attention heads to H/64 (where H is the size of the hidden layer). Next, we explain the changes that have been applied to BERT.
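As a quick illustration of the two sizing rules above, the following sketch derives the feed-forward size and the number of attention heads from H. The function name `derive_config` and the sample values of H are illustrative assumptions, not taken from the official code.

```python
def derive_config(hidden_size: int) -> dict:
    """Derive the feed-forward size and head count from the hidden size H.

    Applies the rules stated in the text: feed-forward size = 4 * H and
    number of attention heads = H / 64.
    """
    if hidden_size % 64 != 0:
        raise ValueError("hidden_size must be a multiple of 64")
    return {
        "hidden_size": hidden_size,
        "feed_forward_size": 4 * hidden_size,
        "num_attention_heads": hidden_size // 64,
    }


# Sample hidden sizes (chosen for illustration only).
for h in (768, 1024, 4096):
    print(derive_config(h))
```

With H = 1024, for instance, this gives a feed-forward size of 4096 and 16 attention heads.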

Factorized embedding parameterization

In BERT (as well as subsequent models like XLNet and RoBERTa) we have [math]\displaystyle{ E }[/math] = [math]\displaystyle{ H }[/math], i.e., the size of the vocabulary embedding ([math]\displaystyle{ E }[/math]) and the size of the hidden layer ([math]\displaystyle{ H }[/math]) are tied together. This choice is inefficient because we may need a large hidden layer without needing a large vocabulary embedding. This is the case in many applications, because the vocabulary embedding is meant to learn context-independent representations, while the hidden layers are meant to learn context-dependent representations, which is usually harder. With the two sizes tied, increasing [math]\displaystyle{ H }[/math] also increases [math]\displaystyle{ E }[/math], which results in a huge increase in the number of parameters, because the size of the vocabulary embedding matrix is [math]\displaystyle{ V \cdot E }[/math], where [math]\displaystyle{ V }[/math] is the size of the vocabulary and is usually quite large. For example, [math]\displaystyle{ V }[/math] equals 30000 in both BERT and ALBERT. The authors propose the following solution: instead of projecting one-hot vectors directly into the hidden space, first project them into a lower-dimensional space of size [math]\displaystyle{ E }[/math] and then project the result into the hidden space. This reduces the embedding parameters from [math]\displaystyle{ O(V \cdot H) }[/math] to [math]\displaystyle{ O(V \cdot E+E \cdot H) }[/math], which is significant when [math]\displaystyle{ H }[/math] is much larger than [math]\displaystyle{ E }[/math].
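To make the saving concrete, the sketch below counts embedding parameters for the direct and the factorized parameterization. The vocabulary size V = 30000 comes from the text; the values H = 4096 and E = 128 and both function names are assumptions chosen for illustration.

```python
def embedding_params(vocab_size: int, hidden_size: int) -> int:
    """Parameters of a direct V x H embedding matrix (the tied case, E = H)."""
    return vocab_size * hidden_size


def factorized_embedding_params(vocab_size: int, embed_size: int, hidden_size: int) -> int:
    """Parameters of a V x E lookup table followed by an E x H projection."""
    return vocab_size * embed_size + embed_size * hidden_size


V = 30000  # vocabulary size quoted in the text
H = 4096   # hidden size (illustrative assumption)
E = 128    # embedding size (illustrative assumption)

direct = embedding_params(V, H)
factorized = factorized_embedding_params(V, E, H)

print(f"direct     V*H       = {direct:,}")      # 122,880,000
print(f"factorized V*E + E*H = {factorized:,}")  # 4,364,288
print(f"reduction factor     = {direct / factorized:.1f}x")
```

Under these assumed sizes the E x H projection adds only about half a million parameters, so almost all of the saving comes from shrinking the V x E lookup table.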

Cross-layer parameter sharing

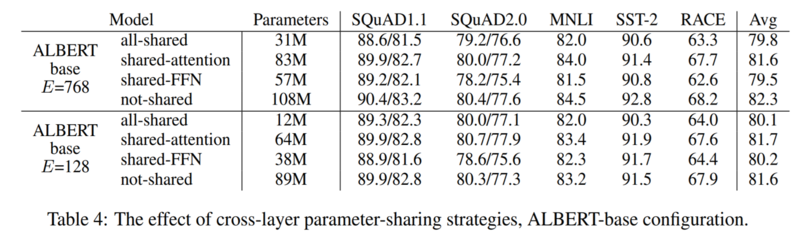

Another method the authors use to reduce the number of parameters is to share parameters across layers. There are different strategies for parameter sharing. For example, one may share only the feed-forward network (FFN) parameters or only the attention parameters. However, the default choice for ALBERT is simply to share all parameters across layers. The accompanying table shows the effect of different parameter-sharing strategies in two settings for the vocabulary embedding size. In both cases, sharing all the parameters hurts accuracy, and most of the drop comes from sharing the FFN parameters rather than the attention parameters. Despite this, the authors chose to share all parameters across layers: the resulting model has far fewer parameters, which allows them to use larger hidden layers, and this compensates for the accuracy lost to parameter sharing.
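The following sketch gives a rough sense of how much each sharing strategy shrinks the encoder. It is an approximation under stated assumptions: each layer is counted as four H x H attention matrices plus two feed-forward matrices of size H x 4H and 4H x H, with biases and layer normalization ignored. The function names and the strategy labels are hypothetical.

```python
def attention_params(hidden_size: int) -> int:
    """Query, key, value and output projections: four H x H matrices."""
    return 4 * hidden_size * hidden_size


def ffn_params(hidden_size: int) -> int:
    """Two feed-forward matrices, H x 4H and 4H x H."""
    return 2 * hidden_size * (4 * hidden_size)


def encoder_params(hidden_size: int, num_layers: int, share: str = "none") -> int:
    """Approximate encoder weight count under a sharing strategy.

    share is one of "none", "attention", "ffn", "all". A shared block is
    counted once; an unshared block is counted once per layer.
    """
    if share not in {"none", "attention", "ffn", "all"}:
        raise ValueError(f"unknown sharing strategy: {share}")
    attn_copies = 1 if share in {"attention", "all"} else num_layers
    ffn_copies = 1 if share in {"ffn", "all"} else num_layers
    return attn_copies * attention_params(hidden_size) + ffn_copies * ffn_params(hidden_size)


# Illustrative setting: H = 1024 with 24 layers.
for strategy in ("none", "attention", "ffn", "all"):
    print(f"{strategy:>9}: {encoder_params(1024, 24, strategy):,}")
```

In this approximation, sharing everything divides the encoder weight count by the number of layers, and the feed-forward block accounts for two thirds of each layer's weights.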

Why does cross-layer parameter sharing work?

The experimental results show that cross-layer parameter sharing dramatically reduces the model size without hurting accuracy too much. While it is obvious that sharing parameters reduces the model size, it is worth asking why parameters can be shared across BERT layers at all. Two of the authors briefly explained the reason in a blog post: they noticed that the network often learned to perform similar operations at various layers (Soricut, Lan, 2019). Previous research also showed that attention heads in BERT behave similarly (Clark et al., 2019). These observations suggest that the same weights can be used at different layers.

Inter-sentence coherence loss

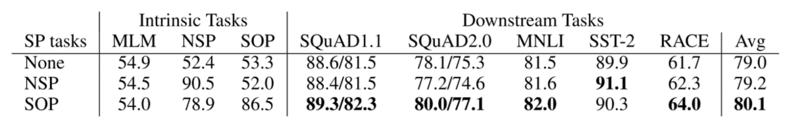

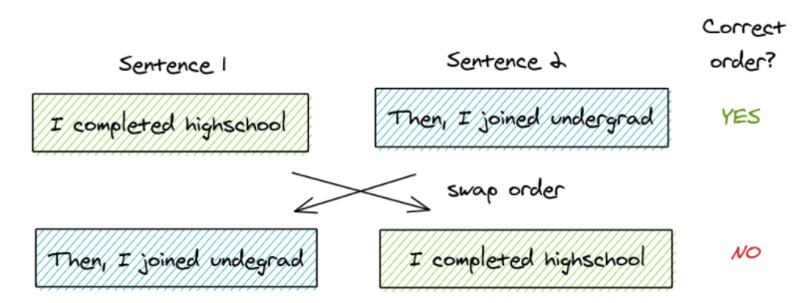

BERT uses two loss functions: the masked language modeling (MLM) loss and the next-sentence prediction (NSP) loss. NSP is a binary classification loss in which positive examples are two consecutive segments from the training corpus and negative examples pair segments from different documents. Positive and negative examples are sampled with equal probability. However, experiments show that NSP is not effective, and the NSP loss also overlaps with the MLM loss in that both capture topic prediction. In fact, the necessity of the NSP loss has been questioned in the literature (Lample and Conneau, 2019; Joshi et al., 2019).

The authors explain this as follows. A negative example in NSP is misaligned in terms of both topic and coherence. Since topic prediction is easier to learn than coherence prediction, the model ends up learning just the easier topic-prediction signal. For example, the model can easily learn that "I love cats" and "I had sushi for lunch" do not belong together because their topics are very different, but it might not be able to tell that "I love cats" and "my mom owned a dog" are not next to each other.

To address this, the authors introduce a new loss, sentence order prediction (SOP), which is again a binary classification loss. Positive examples are the same as in NSP (two consecutive segments), but negative examples are the same two consecutive segments with their order swapped. SOP forces the model to learn the harder coherence-prediction task. The table compares NSP with SOP. A model trained with NSP cannot solve the SOP task (52% accuracy, close to chance), whereas a model trained with SOP solves the NSP task to an acceptable degree (78.9%). On average, SOP also improves results on downstream tasks by almost 1%. Therefore, the authors use MLM and SOP as the loss functions.
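The difference between the two objectives lies only in how negative examples are built, which the sketch below illustrates. It is a minimal illustration rather than the authors' data pipeline: the function names, the toy documents, and the convention that label 1 means a positive example are all assumptions.

```python
import random


def make_sop_example(segments, rng):
    """Build one SOP example from a single document.

    Picks two consecutive segments. Label 1 keeps their order (positive),
    label 0 swaps them (negative). Both outcomes are equally likely.
    """
    i = rng.randrange(len(segments) - 1)
    first, second = segments[i], segments[i + 1]
    if rng.random() < 0.5:
        return (first, second), 1
    return (second, first), 0


def make_nsp_example(segments, other_doc_segments, rng):
    """Build one NSP example.

    Positive (label 1): two consecutive segments from the same document.
    Negative (label 0): the second segment comes from a different document.
    """
    i = rng.randrange(len(segments) - 1)
    first = segments[i]
    if rng.random() < 0.5:
        return (first, segments[i + 1]), 1
    return (first, rng.choice(other_doc_segments)), 0


# Toy documents, invented for illustration.
doc_a = ["The storm hit at noon.", "Power went out across town.", "Crews restored it by evening."]
doc_b = ["The recipe needs two eggs.", "Whisk them with the sugar."]

rng = random.Random(0)
print(make_sop_example(doc_a, rng))
print(make_nsp_example(doc_a, doc_b, rng))
```

An SOP negative always stays within one document and one topic, so the only usable signal is the order of the segments, whereas an NSP negative can usually be detected from the topic change alone.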

What does sentence order prediction (SOP) look like?

Through a mathematical lens:

First we introduce some notation. [math]\displaystyle{ \vec{s_{j}} }[/math] is the [math]\displaystyle{ j^{th} }[/math] textual segment in a document [math]\displaystyle{ D }[/math]. Here [math]\displaystyle{ \vec{s_{j}} \in span \{ \vec{w^{j}_1}, ... , \vec{w^{j}_n} \} }[/math], where [math]\displaystyle{ \vec{w^{j}_i} }[/math] is the [math]\displaystyle{ i^{th} }[/math] word in [math]\displaystyle{ \vec{s_{j}} }[/math]. The SOP task is, given a segment [math]\displaystyle{ \vec{s_{k}} }[/math], to predict whether the segment presented after it, [math]\displaystyle{ \vec{s_{k+1}} }[/math], truly follows it in the document. Here the subscripts [math]\displaystyle{ k }[/math] and [math]\displaystyle{ k+1 }[/math] denote the order in which the two segments are presented. In other words, for a pair built from the consecutive segments [math]\displaystyle{ \vec{s_{j}} }[/math] and [math]\displaystyle{ \vec{s_{j+1}} }[/math], the task is to predict whether the second presented segment [math]\displaystyle{ \vec{s_{k+1}} }[/math] is actually [math]\displaystyle{ \vec{s_{j+1}} }[/math] (original order) or [math]\displaystyle{ \vec{s_{j}} }[/math] (swapped order).

Through a visual lens:

Removing dropout

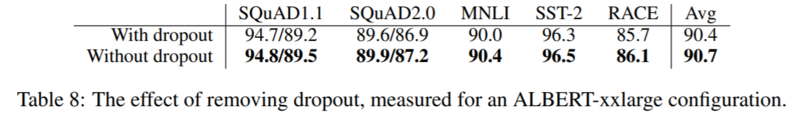

The last change the authors apply to BERT is removing dropout. Table 8 below shows the effect of removing dropout. They also observe that the model does not overfit the data even after 1M steps of training. The authors point out that empirical [8] and theoretical [9] evidence suggests that combining batch normalization with dropout may be harmful, particularly in convolutional neural networks. They speculate that dropout may be having a similar effect here.

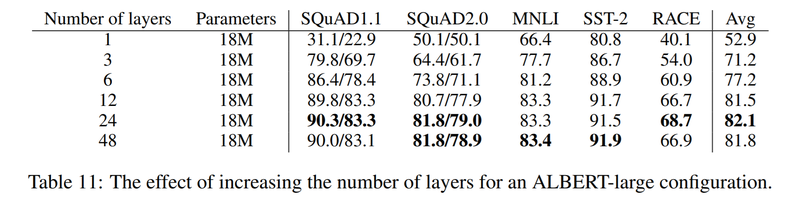

Effect of Network Depth and Width

Table 11 shows the effect of increasing the number of layers. In all these settings the size of the hidden layers is 1024. Results improve as the depth of the model increases until the number of layers reaches 24. However, increasing the depth from 24 to 48 appears to degrade the performance of the model.

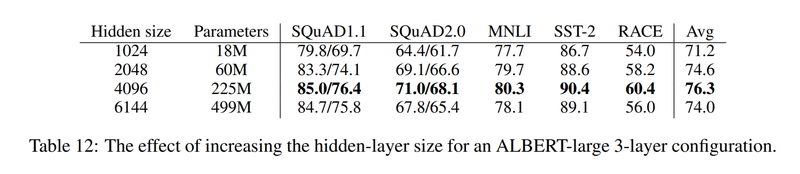

Table 12 shows the effect of the width of the model. Accuracy improves as the width of the network grows up to 4096; beyond that, any further increase in width appears to reduce the accuracy of the model.

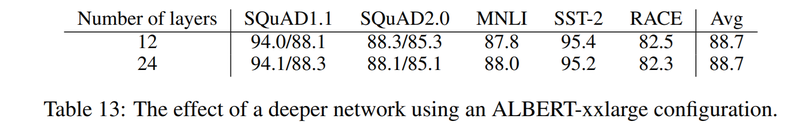

Table 13 investigates if we need a very deep model when the model is very wide. It seems that when we have H=4096, the difference between the performance of models with 12 or 24 layers is negligible.

These three tables illustrate the logic behind the authors' decisions about the width and depth of the model.

Source Code

The official source code is available at: https://github.com/google-research/ALBERT

Conclusion

The following table shows that ALBERT-xxlarge outperforms BERT-large on all the downstream tasks. Note that ALBERT-xxlarge uses a larger configuration (yet has fewer parameters) than BERT-large, and as a result it is about 3 times slower.

Critiques

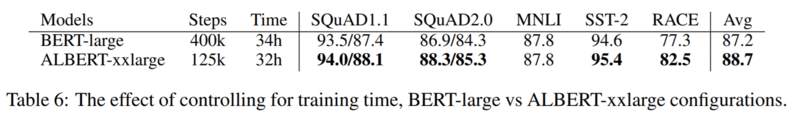

The authors mentioned that we usually get better results if we train our model for a longer time. Therefore, they present a comparison in which they trained both ALBERT-xxlarge and BERT-large for the same amount of time instead of the same number of steps. Here are the results:

However, in my opinion, it is not a fair comparison to train ALBERT-xxlarge for 125K steps and BERT-large for the 400K steps that fit in the same amount of time, because beyond some number of training steps, additional steps improve the result only marginally. It would be better to train BERT-large for 125K steps and ALBERT-xxlarge for the same amount of time. I suspect that in that case the result would favour BERT-large. It would also be helpful to have a plot with time on the horizontal axis and accuracy on the vertical axis. We would probably see that BERT-large is better at first, but that after some point ALBERT-xxlarge starts to give the higher accuracy.

This paper proposes an embedding factorization to reduce the number of embedding parameters, but the authors do not cite or compare against related approaches. This kind of dimensionality reduction has been explored with other techniques, for example knowledge distillation, quantization, or adaptive input/softmax.

Reference

[1]: Tianqi Chen, Bing Xu, Chiyuan Zhang, and Carlos Guestrin. Training deep nets with sublinear memory cost. arXiv preprint arXiv:1604.06174, 2016.

[2]: Aidan N Gomez, Mengye Ren, Raquel Urtasun, and Roger B Grosse. The reversible residual network: Backpropagation without storing activations. In Advances in neural information processing systems, pp. 2214–2224, 2017.

[3]: Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J Liu. Exploring the limits of transfer learning with a unified text-to-text transformer. arXiv preprint arXiv:1910.10683, 2019.

[4]: Radu Soricut and Zhenzhong Lan. ALBERT: A Lite BERT for Self-Supervised Learning of Language Representations. 2019. URL https://ai.googleblog.com/2019/12/albert-lite-bert-for-self-supervised.html

[5]: Kevin Clark, Urvashi Khandelwal, Omer Levy, Christopher D. Manning. What Does BERT Look At? An Analysis of BERT's Attention. 2019. URL https://arxiv.org/abs/1906.04341

[6]: Mandar Joshi, Danqi Chen, Yinhan Liu, Daniel S. Weld, Luke Zettlemoyer, and Omer Levy. SpanBERT: Improving pre-training by representing and predicting spans. 2019. URL https://arxiv.org/abs/1907.10529

[7]: Guillaume Lample and Alexis Conneau. Cross-lingual language model pretraining. 2019. URL https://arxiv.org/abs/1901.07291

[8]: Christian Szegedy, Sergey Ioffe, Vincent Vanhoucke, and Alexander A Alemi. Inception-v4, inception-resnet and the impact of residual connections on learning. In Thirty-First AAAI Conference on Artificial Intelligence, 2017.

[9]: Xiang Li, Shuo Chen, Xiaolin Hu, and Jian Yang. Understanding the disharmony between dropout and batch normalization by variance shift. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2682–2690, 2019.