BERTScore: Evaluating Text Generation with BERT

Presented by

Gursimran Singh

Introduction

In recent years, a variety of machine learning approaches for text generation have gained popularity. This paper proposes an automatic metric for judging the quality of generated text. Commonly used metrics rely either on n-gram matching or on word embeddings to compute the similarity between a reference and a candidate sentence. BERTScore, in contrast, computes this similarity using contextual embeddings. The authors run experiments on machine translation and image captioning to show that BERTScore is more reliable and robust than previous approaches.

Previous Work

Previous approaches for evaluating text generation can be broadly divided into several categories. The most commonly used techniques are based on n-gram matching, which compares the n-grams of the reference and candidate sentences and thereby accounts for local word order.

The most popular n-gram matching metric is BLEU. It follows the general principle of n-gram matching but differs from plain exact matching in three main ways:

• Each n-gram in the reference can be matched at most once.

• The number of exact matches is accumulated over all reference-candidate pairs in the corpus.

• Very short candidates are penalized with a brevity penalty.
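The three properties above can be seen in a minimal corpus-level BLEU sketch. It assumes pre-tokenized input, a single reference per candidate, uniform weights over 1- to 4-grams and no smoothing; the helper names `ngrams` and `corpus_bleu` are illustrative, and real BLEU implementations (which also handle multiple references) differ in these details.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(pairs, max_n=4):
    """Minimal corpus-level BLEU sketch (one reference per candidate, no smoothing).

    pairs: list of (reference_tokens, candidate_tokens)
    """
    matches = [0] * max_n  # clipped matches per n-gram order, summed over the corpus
    totals = [0] * max_n   # candidate n-gram counts per order, summed over the corpus
    ref_len = cand_len = 0
    for ref, cand in pairs:
        ref_len += len(ref)
        cand_len += len(cand)
        for n in range(1, max_n + 1):
            ref_counts = ngrams(ref, n)
            cand_counts = ngrams(cand, n)
            # Clipping: a candidate n-gram is credited at most as often as it occurs in the reference
            matches[n - 1] += sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
            totals[n - 1] += sum(cand_counts.values())
    if min(matches) == 0:
        return 0.0  # log(0) is undefined; practical implementations apply smoothing here
    # Geometric mean of the corpus-level n-gram precisions
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: discourage candidates shorter than the references
    bp = 1.0 if cand_len > ref_len else math.exp(1 - ref_len / cand_len)
    return bp * math.exp(log_precision)


reference = "the cat sat on the mat".split()
print(corpus_bleu([(reference, reference)]))  # 1.0
```

Because this unsmoothed version returns 0 whenever some n-gram order has no match at all, short sentences are usually scored with a smoothed variant in practice.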

Other n-gram-based metrics include METEOR, NIST, and ΔBLEU.

Other categories include edit-distance-based metrics, embedding-based metrics, and learned metrics. Most of these techniques do not capture the context of a word within the sentence, and learned metrics additionally require costly human judgments as supervision for each dataset.

Motivation

N-gram approaches such as BLEU rely on exact surface-form matching, so they fail to recognize paraphrases and capture word order only within a small n-gram window. The following example shows how this can lead BLEU to an incorrect judgment.

Reference: people like foreign cars

Candidate 1: people like visiting places abroad

Candidate 2: consumers prefer imported cars

BLEU gives Candidate 1 a higher score than Candidate 2, even though Candidate 2 is the paraphrase that preserves the meaning of the reference. Such metrics therefore misjudge text generation models: semantically correct paraphrases are penalized, while semantically different sentences score higher simply because they are closer to the surface form of the reference.
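The surface-overlap problem can be checked directly with clipped n-gram precision, the quantity that BLEU aggregates. This sketch assumes whitespace tokenization and uses an illustrative helper `clipped_precision`; it computes only unigram and bigram precision, not a full BLEU score.

```python
from collections import Counter


def clipped_precision(reference, candidate, n):
    """Fraction of candidate n-grams that also occur in the reference (counts clipped)."""
    ref = Counter(tuple(reference[i:i + n]) for i in range(len(reference) - n + 1))
    cand = Counter(tuple(candidate[i:i + n]) for i in range(len(candidate) - n + 1))
    total = sum(cand.values())
    return sum(min(c, ref[g]) for g, c in cand.items()) / total if total else 0.0


reference = "people like foreign cars".split()
candidates = {
    "Candidate 1": "people like visiting places abroad".split(),
    "Candidate 2": "consumers prefer imported cars".split(),
}
for name, cand in candidates.items():
    # Unigram and bigram precision against the single reference
    print(name, [clipped_precision(reference, cand, n) for n in (1, 2)])
# Candidate 1 [0.4, 0.25]
# Candidate 2 [0.25, 0.0]
```

Candidate 1 shares "people like" with the reference, whereas Candidate 2 shares only "cars", so Candidate 1 wins on both n-gram orders despite changing the meaning.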

BERTScore, on the other hand, computes similarity using contextual token embeddings, which helps it recognize semantically correct paraphrases. It is also more sensitive to semantically critical reordering, such as swapping cause and effect clauses ("A because B" instead of "B because A"), which BLEU penalizes only mildly.

BERTScore Architecture

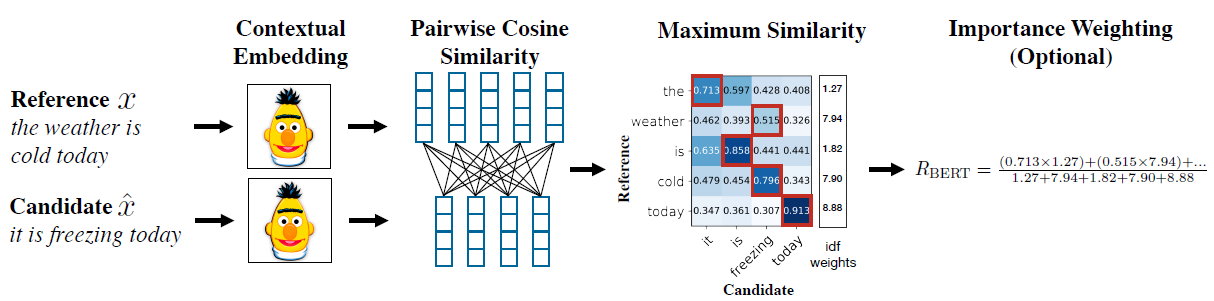

Fig 1 summarizes the steps for calculating BERTScore; each step is described below. Here, the reference sentence is [math]\displaystyle{ x = \langle x_1, \ldots, x_k \rangle }[/math] and the candidate sentence is [math]\displaystyle{ \hat{x} = \langle \hat{x}_1, \ldots, \hat{x}_l \rangle }[/math].

Token Representation

The reference and candidate sentences are represented using contextual embeddings. The idea is inspired by word embeddings, but unlike a word embedding, the contextual embedding of a word depends on the surrounding words in the sentence. These contextual embeddings are computed with BERT, which uses self-attention and nonlinear transformations.
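To illustrate how a token's representation can depend on its neighbors, here is a toy single self-attention layer in pure Python. It is not BERT: the word vectors come from a hypothetical `static_embedding` helper that produces pseudo-random vectors, there are no learned query/key/value projections, and there is only one layer with no feed-forward nonlinearity. It only demonstrates the mechanism by which the same word ends up with different vectors in different sentences.

```python
import math
import random

DIM = 8


def static_embedding(word: str) -> list[float]:
    """Hypothetical context-free word vector: deterministic pseudo-random values per word."""
    rng = random.Random(word)  # seeding with the word itself makes the vector ignore context
    return [rng.uniform(-1.0, 1.0) for _ in range(DIM)]


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def contextual_embeddings(tokens: list[str]) -> list[list[float]]:
    """Toy self-attention with identity Q/K/V projections (scaled dot-product attention)."""
    vecs = [static_embedding(t) for t in tokens]
    out = []
    for q in vecs:
        # Attention weights of this token over every token in the sentence
        weights = softmax([dot(q, k) / math.sqrt(DIM) for k in vecs])
        # Output is the attention-weighted mix of all token vectors
        out.append([sum(w * v[d] for w, v in zip(weights, vecs)) for d in range(DIM)])
    return out


bank_river = contextual_embeddings(["the", "river", "bank", "flooded"])[2]
bank_loan = contextual_embeddings(["the", "bank", "approved", "the", "loan"])[1]
print(static_embedding("bank") == static_embedding("bank"))  # static vector is identical everywhere
print(bank_river == bank_loan)  # contextual vectors differ because the neighbors differ
```

In BERTScore itself these vectors come from a pretrained BERT model, whose many layers of learned attention and nonlinear transformations produce far richer context-dependent representations than this single toy layer.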

Cosine Similarity

Pairwise cosine similarity is computed between each token [math]\displaystyle{ x_{i} }[/math] in the reference sentence and each token [math]\displaystyle{ \hat{x}_{j} }[/math] in the candidate sentence. Because the embedding vectors are pre-normalized, the cosine similarity [math]\displaystyle{ \frac{x_{i}^T \hat{x}_{j}}{\|x_{i}\| \, \|\hat{x}_{j}\|} }[/math] reduces to the inner product [math]\displaystyle{ x_{i}^T \hat{x}_{j} }[/math].
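A small sketch of the pairwise similarity step, using hypothetical 3-dimensional vectors in place of BERT embeddings. After every vector is scaled to unit length, a plain inner product gives the same value as the full cosine formula, which is why pre-normalization lets the whole matrix be computed with dot products. The helper names `normalize`, `cosine` and `similarity_matrix` are illustrative.

```python
import math


def normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def cosine(u, v):
    """Cosine similarity computed directly from its definition."""
    return sum(a * b for a, b in zip(u, v)) / (
        math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    )


def similarity_matrix(ref_vecs, cand_vecs):
    """sim[i][j] = x_i^T x_hat_j after pre-normalizing every token vector."""
    ref_unit = [normalize(v) for v in ref_vecs]
    cand_unit = [normalize(v) for v in cand_vecs]
    return [[sum(a * b for a, b in zip(x, y)) for y in cand_unit] for x in ref_unit]


# Hypothetical embeddings: 2 reference tokens, 3 candidate tokens
ref_vecs = [[1.0, 2.0, 2.0], [0.0, 3.0, 4.0]]
cand_vecs = [[2.0, 0.0, 0.0], [1.0, 2.0, 2.0], [0.0, -3.0, 4.0]]
sim = similarity_matrix(ref_vecs, cand_vecs)
for row in sim:
    print([round(s, 3) for s in row])
```

Rows correspond to reference tokens and columns to candidate tokens, the layout the greedy matching step works on.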

BERTScore

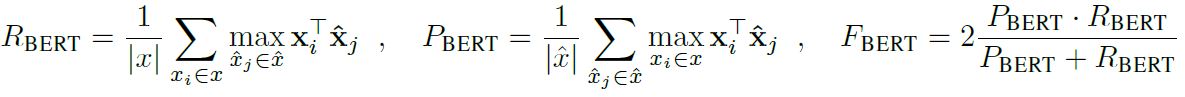

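Recall is computed by matching each token in [math]\displaystyle{ x }[/math] to the most similar token in [math]\displaystyle{ \hat{x} }[/math], and precision by matching each token in [math]\displaystyle{ \hat{x} }[/math] to the most similar token in [math]\displaystyle{ x }[/math]. The matching is greedy and isolated: each token is matched independently to its most similar counterpart, so several tokens may be matched to the same token. Precision and recall are then combined into an F1 score. Fig 2 shows the equations for calculating precision, recall and F1 score.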
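With pre-normalized vectors, the greedy-matching formulas from the paper are [math]\displaystyle{ R_{\mathrm{BERT}} = \frac{1}{|x|} \sum_{x_i \in x} \max_{\hat{x}_j \in \hat{x}} x_i^T \hat{x}_j }[/math], [math]\displaystyle{ P_{\mathrm{BERT}} = \frac{1}{|\hat{x}|} \sum_{\hat{x}_j \in \hat{x}} \max_{x_i \in x} x_i^T \hat{x}_j }[/math] and [math]\displaystyle{ F_{\mathrm{BERT}} = 2 \frac{P_{\mathrm{BERT}} \cdot R_{\mathrm{BERT}}}{P_{\mathrm{BERT}} + R_{\mathrm{BERT}}} }[/math]. The sketch below applies these unweighted formulas to a precomputed similarity matrix with hypothetical values (rows are reference tokens, columns are candidate tokens); the function name `bertscore_from_similarity` is illustrative and not the API of any released package.

```python
def bertscore_from_similarity(sim):
    """Greedy matching on a precomputed similarity matrix.

    sim[i][j] is the similarity x_i^T x_hat_j between reference token i and
    candidate token j (vectors assumed pre-normalized).
    Returns (precision, recall, f1).
    """
    n_ref, n_cand = len(sim), len(sim[0])
    # Recall: each reference token takes its best-matching candidate token (row maximum)
    recall = sum(max(row) for row in sim) / n_ref
    # Precision: each candidate token takes its best-matching reference token (column maximum)
    precision = sum(max(sim[i][j] for i in range(n_ref)) for j in range(n_cand)) / n_cand
    # Harmonic mean; guard against a zero denominator
    f1 = 0.0 if precision + recall == 0 else 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical similarities: 2 reference tokens x 3 candidate tokens
sim = [
    [0.9, 0.2, 0.1],
    [0.3, 0.8, 0.4],
]
p, r, f = bertscore_from_similarity(sim)
print(round(p, 4), round(r, 4), round(f, 4))
```

Here recall averages the row maxima (0.9 and 0.8), while precision averages the column maxima (0.9, 0.8 and 0.4); the third candidate token has no close match, which lowers precision but not recall.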