AdaCompress: Adaptive Compression for Online Computer Vision Services

Presented by

Ahmed Salamah

Introduction

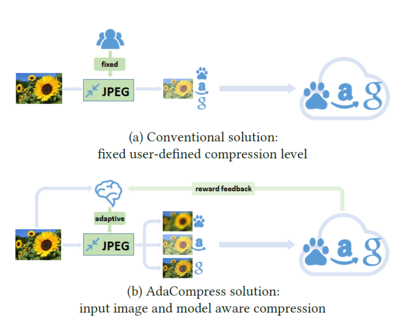

Big data and deep learning have together driven much of the recent success of artificial intelligence, but in many applications this success increases the burden on network bandwidth, computation, and storage. Image classification is one of the most important computer vision tasks, and it depends heavily on Deep Neural Networks (DNNs) for its performance. Recently, image classification models have increasingly been hosted in the cloud so that computational power can be shared among users; the paper mentions services such as SenseTime, Baidu Vision, and Google Vision. Most researchers in the literature work on improving the structure and increasing the depth of DNNs, aiming for better performance through how features are represented and extracted by Convolutional Neural Networks (CNNs). The most well-known image classification datasets (e.g., ImageNet) are compressed with JPEG, a compression technique optimized for the Human Visual System (HVS) rather than for machines (i.e., DNNs). To align the compression with DNNs instead of the HVS, the authors reconfigure JPEG while maintaining the same classification accuracy.

JPEG

One of the major parameters that can be changed in the JPEG pipeline is the quantization table, which is the main source of the artifacts introduced into the image and is what makes the compression lossy. The authors were motivated to change the JPEG configuration to optimize the upload size for different cloud computer vision services without requiring prior knowledge of the original model or dataset. They use Deep Reinforcement Learning (DRL) in an online manner to choose the quantization level at which an image is uploaded to the cloud computer vision model, and they describe this as the only approach that designs an adaptive JPEG configuration with an RL mechanism.
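To make the role of the quantization table concrete, here is a minimal sketch of how a JPEG quality factor is commonly turned into a quantization table. It assumes the scaling convention used by the libjpeg reference encoder and the example luminance table from Annex K of the JPEG standard; the function names `scale_table` and `quantize_roundtrip` are hypothetical and not taken from the paper.

```python
# Example luminance quantization table from Annex K of the JPEG standard.
BASE_LUMA = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def scale_table(quality):
    """Scale the base table for a quality factor in 1..100 (libjpeg convention)."""
    quality = max(1, min(100, quality))
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality
    # Each step is rescaled, rounded, and clamped to the baseline range 1..255.
    return [[max(1, min(255, (q * scale + 50) // 100)) for q in row]
            for row in BASE_LUMA]


def quantize_roundtrip(coeff, step):
    """Quantize then dequantize one DCT coefficient.

    The gap between the input and the result is the information lost.
    """
    return round(coeff / step) * step


if __name__ == "__main__":
    for qf in (10, 50, 90):
        step = scale_table(qf)[0][0]
        print(qf, step, quantize_roundtrip(37, step))
```

A lower quality factor yields larger quantization steps, so more coefficients collapse to coarse values or to zero. This rounding is why the compression is lossy, and it is the knob the agent effectively turns when it picks a quality level.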

The approach is built on an interactive training environment that can represent any cloud computer vision service. The authors then needed a tool to evaluate and predict how a given quantization level performs on an uploaded image, so they used a deep Q-network agent. The agent is fed a reward function that considers two optimization targets, accuracy and image size, and it learns iteratively by interacting with the environment. The environment is exposed to images with varying amounts of visual redundancy, so an adaptive solution is needed to select a suitable compression level for each image and model.
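The interaction between the agent, the reward, and the environment can be illustrated with a deliberately simplified sketch. It swaps the deep Q-network for a tabular epsilon-greedy learner with a single state, and it replaces the cloud service with a toy environment. The reward form (`alpha * accuracy - size_ratio`), the quality grid, and every function name here are assumptions for illustration, not the paper's exact formulation.

```python
import random

# Candidate JPEG quality factors (an assumed grid, not the paper's).
QUALITY_LEVELS = list(range(5, 100, 10))


def reward(correct, size_ratio, alpha=1.0):
    """Trade accuracy against upload size: higher is better."""
    return alpha * (1.0 if correct else 0.0) - size_ratio


def toy_environment(qf):
    """Hypothetical stand-in for a JPEG encoder plus a cloud vision API."""
    correct = qf >= 35          # pretend the model fails below QF 35
    size_ratio = qf / 100.0     # pretend file size grows linearly with QF
    return correct, size_ratio


def train(steps=200, epsilon=0.1, seed=0):
    """Epsilon-greedy learning of one value estimate per quality level."""
    rng = random.Random(seed)
    q_value = {qf: 0.0 for qf in QUALITY_LEVELS}
    visits = {qf: 0 for qf in QUALITY_LEVELS}
    for _ in range(steps):
        if rng.random() < epsilon:
            qf = rng.choice(QUALITY_LEVELS)               # explore
        else:
            qf = max(QUALITY_LEVELS, key=q_value.get)     # exploit
        r = reward(*toy_environment(qf))
        visits[qf] += 1
        q_value[qf] += (r - q_value[qf]) / visits[qf]     # running mean
    best = max(QUALITY_LEVELS, key=q_value.get)
    return best, q_value


if __name__ == "__main__":
    best, _ = train()
    print("preferred quality factor:", best)
```

In this toy setting the learner settles on the lowest quality level that still keeps the prediction correct, which is the trade-off the reward is meant to encode. The real agent conditions its choice on image features, so the preferred level differs from image to image.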

Thus, they designed an explore-exploit mechanism to train the agent on different scenery. In the deep Q agent this is realized as an inference-estimate-retrain mechanism that controls when the training procedure is restarted. The authors verify their approach with analysis and insight from Grad-CAM, showing patterns in each image alongside its corresponding quality factor. Different images draw different responses from the deep model: images with large smooth areas are more sensitive to compression, while images with complex textures are more robust to it.
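The switching logic behind such a mechanism might look like the sketch below. It is a hypothetical controller, not the authors' implementation: the window size, the accuracy threshold, and the class and method names are all assumptions. The idea is that occasional estimate checks compare the label obtained from the compressed image with the label from a reference-quality upload, and a drop in agreement triggers retraining.

```python
from collections import deque


class PhaseController:
    """Hypothetical controller switching between 'inference' and 'retrain'."""

    def __init__(self, window=5, threshold=0.8):
        self.phase = "inference"
        self.window = window
        self.threshold = threshold
        self.recent = deque(maxlen=window)  # outcomes of recent estimate checks

    def record_check(self, agreed):
        """Record one estimate check and return the resulting phase.

        `agreed` says whether the compressed image received the same label
        as the reference-quality upload.
        """
        self.recent.append(1.0 if agreed else 0.0)
        if len(self.recent) < self.window:
            return self.phase               # not enough evidence yet
        accuracy = sum(self.recent) / len(self.recent)
        if self.phase == "inference" and accuracy < self.threshold:
            self.phase = "retrain"          # scenery changed: explore again
            self.recent.clear()
        elif self.phase == "retrain" and accuracy >= self.threshold:
            self.phase = "inference"        # agent recovered: exploit again
            self.recent.clear()
        return self.phase


if __name__ == "__main__":
    controller = PhaseController(window=3, threshold=0.8)
    for outcome in (True, True, True, False, True, True, True):
        print(outcome, controller.record_check(outcome))
```

Clearing the window after each switch means the next decision is based only on evidence gathered in the new phase, which avoids flipping back and forth on stale checks.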

Critiques

The authors used a pre-trained model as a feature extractor to select a Quality Factor (QF) for JPEG. What I think is missing is a report of the distribution of the selected QFs across their range, which is important for understanding which QFs are expected to contribute most. In my video, I ran one experiment with Inception-V3 to see whether better accuracy is possible. I found that using the Inception model as the pre-trained model makes it possible to choose a lower QF; however, mobile models are well known to be shallower than Inception models, which makes them less complex to run on edge devices. I think it is possible to achieve at least the same accuracy, or even better, if the mobile model is replaced with Inception. Another point is that the authors did not run their approach on a complete database such as ImageNet; they only included parts of two different datasets. I understand that they may have been limited in the datasets available for testing: datasets like CIFAR are not really comparable in resolution, since real online computer vision services work with higher resolutions.