Pre-Training Tasks For Embedding-Based Large-Scale Retrieval

Contributors

Pierre McWhannel wrote this summary with editorial and technical contributions from STAT 946 Fall 2020 classmates. The summary is based on the paper "Pre-Training Tasks for Embedding-Based Large-Scale Retrieval", which was presented at ICLR 2020. The authors of the paper are Wei-Cheng Chang, Felix X. Yu, Yin-Wen Chang, Yiming Yang, and Sanjiv Kumar [1].

Introduction

The paper is positioned within the problem domain of large-scale query-document retrieval: given a query or question, collect the relevant documents that contain the answer(s) and identify the span of words that contains the answer(s). This problem is often separated into two steps: 1) a retrieval phase, which reduces a large corpus to a much smaller subset, and 2) a reader, which is applied to the shortened list of documents, scores them on their ability to answer the query, and identifies the spans containing the answer. These spans can then be ranked according to some scoring function. In the setting of open-domain question answering, the query [math]\displaystyle{ q }[/math] is a question and each document [math]\displaystyle{ d }[/math] is a passage that may contain the answer(s). The focus of this paper is the retriever in open-domain question answering. In this setting, a scoring function [math]\displaystyle{ f: \mathcal{X} \times \mathcal{Y} \rightarrow \mathbb{R} }[/math] maps [math]\displaystyle{ (q,d) \in \mathcal{X} \times \mathcal{Y} }[/math] to a real value that represents how relevant the passage is to the query. The retriever's job is to assign high scores to relevant passages and low scores to all others.

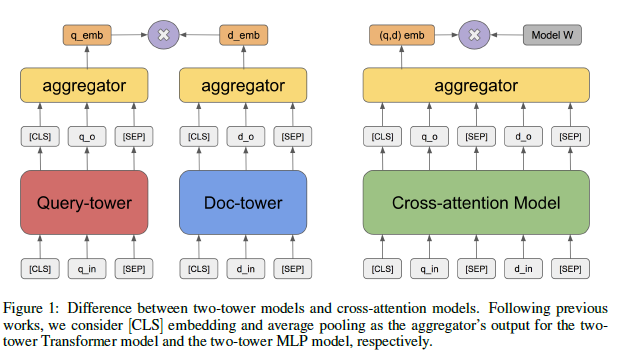

Two characteristics are desired of the retriever. The first is high recall: since the reader is applied only to the retrieved subset, it has an opportunity to filter out false positives but cannot recover false negatives. The second is low latency. The popular approach for meeting these requirements has been BM-25 [2], which relies on token-based matching between two high-dimensional, sparse vectors representing [math]\displaystyle{ q }[/math] and [math]\displaystyle{ d }[/math]. This approach performs well, and with an inverted index retrieval can be performed in time sublinear in the number of passages. The downside of this type of algorithm is that it cannot be optimized for a specific task. An alternative is a BERT or other transformer-based model with cross-attention between query and passage pairs, which can be optimized for a specific task. However, these models suffer from high latency, because the retriever needs to process every pairwise combination of the query with all passages.

The third type of algorithm is an embedding-based model that jointly embeds the query and the passage in the same embedding space and then uses a measure such as cosine similarity, inner product, or Euclidean distance in this space to compute a score. This model is referred to as the "two-tower retrieval model", since it has two transformer-based models, one embedding the query, [math]\displaystyle{ \phi{(\cdot)} }[/math], and the other embedding the passage, [math]\displaystyle{ \psi{(\cdot)} }[/math]. The embeddings are then scored by [math]\displaystyle{ f(q,d) = \langle \phi{(q)},\psi{(d)} \rangle \in \mathbb{R} }[/math]. The authors suggest that two-tower models can capture deeper semantic relationships in addition to being optimizable for specific tasks. This model avoids the latency problems of a cross-attention model by pre-computing [math]\displaystyle{ \psi{(d)} }[/math] beforehand and then using efficient approximate nearest neighbor search algorithms in the embedding space to find the nearest documents.
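The pre-compute-then-search pattern described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the paper: `toy_encoder` is a hypothetical stand-in for a transformer tower (it simply averages token embeddings), the vocabulary size and embedding dimension are arbitrary, and the exact `argsort` over all scores replaces the approximate nearest neighbor search that a real system would use.

```python
import numpy as np

rng = np.random.default_rng(0)
VOCAB_SIZE = 50   # toy vocabulary size (assumption)
EMBED_DIM = 8     # embedding dimension k (assumption)

# Separate parameters for the query tower (phi) and the document tower (psi).
query_table = rng.normal(size=(VOCAB_SIZE, EMBED_DIM))
doc_table = rng.normal(size=(VOCAB_SIZE, EMBED_DIM))


def toy_encoder(token_ids, table):
    """Hypothetical stand-in for a transformer tower: mean of token embeddings."""
    return table[token_ids].mean(axis=0)


# Toy corpus: 100 documents of 12 random token ids each.
docs = [rng.integers(0, VOCAB_SIZE, size=12) for _ in range(100)]

# Offline step: embed every document once, independently of any query.
doc_matrix = np.stack([toy_encoder(d, doc_table) for d in docs])  # shape (100, 8)


def retrieve(query_tokens, top_k=5):
    """Score all documents by inner product f(q, d) = <phi(q), psi(d)>."""
    q = toy_encoder(query_tokens, query_table)
    scores = doc_matrix @ q
    # Exact search for clarity; an ANN index would replace this step at scale.
    top = np.argsort(-scores)[:top_k]
    return top, scores[top]


query = rng.integers(0, VOCAB_SIZE, size=6)
top_ids, top_scores = retrieve(query)
print(top_ids, top_scores)
```

At query time only the query is encoded; the document embeddings are reused unchanged for every query, which is where the latency advantage comes from.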

These two-tower retrieval models often use BERT with pre-trained weights. In BERT, the pre-training tasks are masked-LM (MLM) and next sentence prediction (NSP). The authors note that finding pre-training tasks that improve the performance of two-tower retrieval models is an open research problem. This is exactly what the paper addresses: the authors develop pre-training tasks for the two-tower model based on heuristics and then validate them with experimental results. The suggested pre-training tasks are Inverse Cloze Task (ICT), Body First Search (BFS), Wiki Link Prediction (WLP), and the combination of all three. The authors used the Retrieval Question-Answering (ReQA) benchmark with two datasets, SQuAD and Natural Questions, for training and evaluating their models.

Contributions of this paper

- The two-tower transformer model with proper pre-training can significantly outperform the widely used BM-25 algorithm.

- Paragraph-level pre-training tasks such as ICT, BFS, and WLP significantly improve the retrieval quality, whereas the most widely used pre-training task (the token-level masked-LM) gives only marginal gains.

- Two-tower models with deep transformer encoders benefit more from paragraph-level pre-training than their shallow bag-of-words counterpart (BoW-MLP).

Background on Two-Tower Retrieval Models

This section presents the two-tower model in more detail. As seen previously, [math]\displaystyle{ q \in \mathcal{X} }[/math] and [math]\displaystyle{ d \in \mathcal{Y} }[/math] are the query and the document (or passage), respectively. The two-tower retrieval model consists of two encoders: [math]\displaystyle{ \phi: \mathcal{X} \rightarrow \mathbb{R}^k }[/math] and [math]\displaystyle{ \psi: \mathcal{Y} \rightarrow \mathbb{R}^k }[/math]. The elements of [math]\displaystyle{ \mathcal{X} }[/math] and [math]\displaystyle{ \mathcal{Y} }[/math] are sequences of tokens representing the query and the document, respectively. The scoring function is [math]\displaystyle{ f(q,d) = \langle \phi{(q)},\psi{(d)} \rangle \in \mathbb{R} }[/math]. The cross-attention BERT-style model instead uses [math]\displaystyle{ f_{\theta,w}(q,d) = \psi_{\theta}{(q \oplus d)^{T}}w }[/math] as its scoring function, where [math]\displaystyle{ \oplus }[/math] denotes the concatenation of the sequences [math]\displaystyle{ q }[/math] and [math]\displaystyle{ d }[/math], and [math]\displaystyle{ \theta }[/math] are the parameters of the cross-attention model. The architectures of these two models are shown in Figure 1.
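To make the difference between the two scoring functions concrete, the following sketch counts encoder calls under each scheme. It is a toy under stated assumptions rather than the models from the paper: `encode` is a hypothetical mean-pooling encoder with no real attention, the two towers share one embedding table for brevity, and `w`, the corpus, and the queries are random.

```python
import numpy as np

rng = np.random.default_rng(0)
VOCAB_SIZE, K = 30, 4                      # toy sizes (assumptions)
table = rng.normal(size=(VOCAB_SIZE, K))   # shared toy encoder parameters
w = rng.normal(size=K)                     # projection vector of the cross model
calls = {"cross": 0, "tower": 0}           # encoder forward passes per scheme


def encode(tokens):
    """Hypothetical encoder: mean-pooled token embeddings passed through tanh."""
    return np.tanh(table[tokens].mean(axis=0))


def cross_score(q, d):
    """f(q, d) = psi(q (+) d)^T w: the pair is concatenated, then encoded."""
    calls["cross"] += 1
    return float(encode(np.concatenate([q, d])) @ w)


def tower_embed(tokens):
    """One tower forward pass; used for both phi and psi in this toy."""
    calls["tower"] += 1
    return encode(tokens)


docs = [rng.integers(0, VOCAB_SIZE, size=10) for _ in range(20)]
queries = [rng.integers(0, VOCAB_SIZE, size=5) for _ in range(3)]

# Two-tower: documents are embedded once, then each query needs one pass.
doc_embs = np.stack([tower_embed(d) for d in docs])
for q in queries:
    tower_scores = doc_embs @ tower_embed(q)           # f(q, d) = <phi(q), psi(d)>
    cross_scores = [cross_score(q, d) for d in docs]   # one pass per (q, d) pair

print(calls)
```

With 20 documents and 3 queries, the two-tower scheme needs one pass per document plus one per query, while the cross-attention scheme needs one pass per query-document pair, so its cost grows with the product of the two counts.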

Comparisons

Pre-Training Tasks

Experiments and Results

Conclusion

Critique

References

[1] Wei-Cheng Chang, Felix X. Yu, Yin-Wen Chang, Yiming Yang, and Sanjiv Kumar. Pre-training tasks for embedding-based large-scale retrieval. arXiv preprint arXiv:2002.03932, 2020.

[2] Stephen Robertson, Hugo Zaragoza, et al. The probabilistic relevance framework: BM25 and beyond. Foundations and Trends in Information Retrieval, 3(4):333–389, 2009.