Learning to Navigate in Cities Without a Map

Paper: Learning to Navigate in Cities Without a Map

A video of the paper is available here.

Introduction

Navigation is an attractive topic in many research disciplines and technology related domains such as neuroscience and robotics. The majority of algorithms are based on the following steps.

1. Building an explicit map

2. Planning and acting using that map.

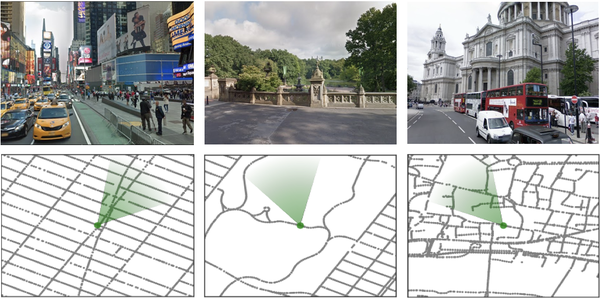

In this article, based on this fact that human can learn to navigate through cities without using any special tool such as maps or GPS, authors propose new methods to show that a neural network agent can do the same thing by using visual observations. To do so, an interactive environment using Google StreetView Images and a dual pathway agent architecture is designed. As shown in figure 1, some parts of the environment are built using Google StreetView images of New York City (Times Square, Central Park) and London (St. Paul’s Cathedral). The green cone represents the agent’s location and orientation. Although learning to navigate using visual aids is shown to be successful in some domains such as games and simulated environments using deep reinforcement learning (RL), it suffers from data inefficiency and sensitivity to changes in the environment. Thus, it is unclear whether this method could be used for large-scale navigation. That’s why it became the subject of investigation in this paper.

Contribution

This paper has made the following contributions:

1. Designing a dual pathway agent architecture. This agent can navigate through a real city and is trained with end-to-end reinforcement learning to handle real-world navigations.

2. Using Goal-dependent learning. This means that the policy and value functions must adapt themselves to a sequence of goals that are provided as input.

3. Leveraging a recurrent neural architecture. Using that, not only could navigation through a city be possible, but also the model is scalable for navigation in new cities. This architecture supports both locale-specific learnings and general transferable navigations. The authors achieved these by separating a recurrent neural pathway. This pathway receives and interprets the current goal as well as encapsulates and memorizes features of a single region.

4. Using a new environment which is built on top of Google StreetView images. This provides real-world images for agent’s observation. Using this environment, the agent can navigate from an arbitrary starting point to a goal and then to another goal etc. Also, London, Paris, and New York City are chosen for navigation.

The authors demonstrate that their proposed method can provide a mechanism for transferring knowledge to new cities. As with humans, when the agent visits a new city, the expectation is it to have it learn a new set of landmarks, but not to have to re-learn its visual representations or its behaviours (e.g., zooming forward along streets or turning at intersections). Therefore, using the MultiCity architecture, the paper trains first on a number of cities, then freezes both the policy network and the visual convolutional network and only a new locale-specific pathway on a new city. This approach enables the agent to acquire new knowledge without forgetting what it has already learned, similarly to the progressive neural networks architecture.

Related Work

1. Localization from real-world imagery. For example, (Weyand et al., 2016), a CNN was able to achieve excellent results on geolocation task. This paper provides novel work by not including supervised training with ground-truth labels, and by including planning as a goal. Some other works also improve by exploiting spatiotemporal continuity or estimating camera pose or depth estimation from pixels. These methods rely on supervised training with ground truth labels, which is not possible in every environment.

2. Deep RL methods for navigation. For instance, (Mirowski et al., 2016; Jaderberg et al., 2016) used self-supervised auxiliary tasks to produce visual navigation in several created mazes. Some other researches used text descriptions to incorporate goal instructions. Researchers developed realistic, higher-fidelity environment simulations to make the experiment more realistic, but that still came with lack of diversities. This paper makes use of real-world data, in contrast to many related papers in this area. It's diverse and visually realistic but still, it does not contain dynamic elements, and the street topology cannot be regenerated or altered.

3. Deep RL for path planning and mapping. For example, (Zhang et al., 2017) created an agent that represented a global map via an RL agent with external memory; some other work uses a hierarchical control strategy to propose a structured memory and Memory Augmented Control Maps. Explicit neural mapper and navigation planner with joint training was also used. Among all these works, the target-driven visual navigation with a goal-conditional policy approach was most related to our method.

4. To make simulations resemble reality, researchers have developed higher-fidelity simulated environments (Dosovitskiy et al., 2017; Kolve et al., 2017; Shah et al., 2018; Wu et al., 2018). However, in spite of the photo-realism, the inherent problems of simulated environments pertain to the limited diversity of the environments and the idealistic cleanliness of the observations.

Environment

Google StreetView consists of both high-resolution 360-degree imagery and graph connectivity. Also, it provides a public API. These features make it a valuable resource. In this work, large areas of New York, Paris, and London that contain between 7,000 and 65,500 nodes (and between 7,200 and 128,600 edges, respectively), have a mean node spacing of 10m and cover a range of up to 5km chosen (Figure 2), without simplifying the underlying connections. This means that there are many areas 'congested' with nodes, occlusions, available footpaths, etc. The agent only sees RGB images that are visible in StreetView images (Figure 1) and is not aware of the underlying graph.

Agent Interface and the Courier Task

In an RL environment, we need to define observations and actions in addition to tasks. The inputs to the agent are the image [math]\displaystyle{ x_t }[/math] and the goal [math]\displaystyle{ g_t }[/math]. Also, a first-person view of the 3D environment is simulated by cropping [math]\displaystyle{ x_t }[/math] to a 60-degree square RGB image that is scaled to 84*84 pixels. Furthermore, the action space consists of 5 movements: “slow” rotate left or right (±22:5), “fast” rotate left or right (±67.5), or move forward (implemented as a noop in the case where this is not a viable action). The most central edge is chosen if there are multiple edges in the agents viewing cone.

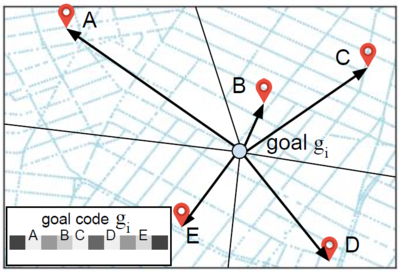

There are lots of ways to specify the goal to the agent. In this paper, the current goal is chosen to be represented in terms of its proximity to a set L of fixed landmarks [math]\displaystyle{ L={(Lat_k, Long_k)} }[/math] which are specified using Latitude and Longitude coordinate system. For distance to the [math]\displaystyle{ k_{th} }[/math] landmark [math]\displaystyle{ {(d_{(t,k)}^g})_k }[/math] the goal vector contains [math]\displaystyle{ g_{(t,i)}=\tfrac{exp(-αd_{(t,i)}^g)}{∑_k exp(-αd_{(t,k)}^g)} }[/math]for [math]\displaystyle{ i_{th} }[/math] landmark with [math]\displaystyle{ α=0.002 }[/math] (Figure 3).

This form of representation has several advantages:

1. It could easily be extended to new environments.

2. It is intuitive. Even humans and animals use landmarks to be able to move from one place to another.

3. It does not rely on arbitrary map coordinates, and provides an absolute (as opposed to relative) goal.

In this work, 644 landmarks for New York, Paris, and London are manually defined. The courier task is the problem of navigating to a list of random locations within a city. In each episode, which consists of 1000 steps, the agent starts from a random place with random orientation. when an agent gets within 100 meters of goal, the next goal is randomly chosen. An episode ends after 1000 agent steps. Finally, the reward is proportional to the shortest path between agent and goal when the goal is first assigned (providing more reward for longer journeys). Thus the agent needs to learn the mapping between the images observed at the goal location and the goal vector in order to solve the courier task problem. Furthermore, the agent must learn the association between the images observed at its current location and the policy to reach the goal destination.

Methods

Goal-dependent Actor-Critic Reinforcement Learning

In this paper, the learning problem is based on Markov Decision Process, with state space [math]\displaystyle{ \mathcal{S} }[/math], action space [math]\displaystyle{ \mathcal{A} }[/math], environment [math]\displaystyle{ \mathcal{E} }[/math], and a set of possible goals [math]\displaystyle{ \mathcal{G} }[/math]. The reward function depends on the current goal and state: [math]\displaystyle{ \mathcal{R}: \mathcal{S} \times \mathcal{G} \times \mathcal{A} → \mathbb{R} }[/math]. Typically, in reinforcement learning the main goal is to find the policy which maximizes the expected return. Expected return is defined as the sum of discounted rewards starting from state [math]\displaystyle{ s_0 }[/math] with discount [math]\displaystyle{ \gamma }[/math]. Also, the expected return from a state [math]\displaystyle{ s_t }[/math] depends on the goals that are sampled. The policy is defined as a distribution over the actions, given the current state [math]\displaystyle{ s_t }[/math] and the goal [math]\displaystyle{ g_t }[/math]:

\begin{align} \pi(\alpha|s,g)=Pr(\alpha_t=\alpha|s_t=s, g_t=g) \end{align}

Value function is defined as the expected return obtained by sampling actions from policy [math]\displaystyle{ \pi }[/math] from state [math]\displaystyle{ s_t }[/math] with goal [math]\displaystyle{ g_t }[/math]:

\begin{align} V^{\pi}(s,g)=E[R_t]=E[Σ_{k=0}^{\infty}\gamma^kr_{t+k}|s_t=s, g_t=g] \end{align}

Also, an architecture with multiple pathways is designed to support two types of learning that is required for this problem. First, an agent needs an internal representation which is general and gives an understanding of a scene. Second, to better understand a scene the agent needs to remember unique features of the scene which then help the agent to organize and remember the scenes.

Architectures

The authors use neural networks to parameterize policy and value functions. These neural networks share weights in all layers except the final linear layer. The agent takes image pixels as input. These pixels are passed through a convolutional network. The output of the Convolution network is fed to a Long Short-Term Memory (LSTM) as well as the past reward [math]\displaystyle{ r_{t-1} }[/math] and previous action [math]\displaystyle{ \alpha_{t-1} }[/math].

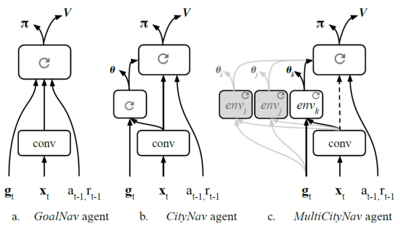

Three different architectures are described below.

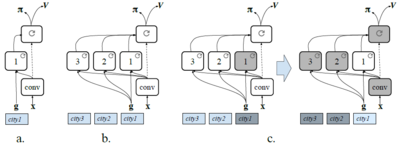

The GoalNav architecture (Fig. 4a) which consists of a convolutional architecture and policy LSTM. Goal description [math]\displaystyle{ g_t }[/math], previous action, and reward are the inputs of this LSTM.

The CityNav architecture (Fig. 4b) consists of the previous architecture alongside an additional LSTM, called the goal LSTM. Inputs of this LSTM are visual features and the goal description. The CityNav agent also adds an auxiliary heading (θ) prediction task which is defined as an angle between the north direction and the agent’s pose. This auxiliary task can speed up learning and provides relevant information.

The MultiCityNav architecture (Fig. 4c) is an extension of CityNav for learning in different cities. This is done using the parallel connection of goal LSTMs for encapsulating locale-specific features, for each city. Moreover, the convolutional architecture and the policy LSTM become general after training on a number of cities. So, new goal LSTMs are required to be trained in new cities.

The auxiliary tasks can speed up learning by providing extra gradients, as well as relevant information. A very natural auxiliary task is employed - The prediction of the agents heading, defined as an angle between the north direction and the agent's pose using a multinomial classification loss on binned angles.

In this paper, the authors use IMPALA [1] to train the agents because IMPALA can get similar performance to A3C [2].

Prior on agent training: IMPALA and A3C

IMPALA (Importance Weighted Actor-Learner Architecture) is an actor-critic implementation of deep reinforcement learning that decouples actions from learning. IMPALA results in a comparable performance to A3C (Google DeepMind's previous algorithm: Asynchronous Actor-Critic Agents) on a single city task, but it has been shown to handle better multi-task learning than A3C. The authors use 256 actors for CityNav and 512 actors for MultiCityNav, with batch sizes of 256 or 512 respectively, and sequences are unrolled to length 50.

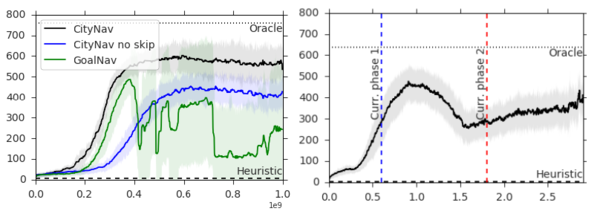

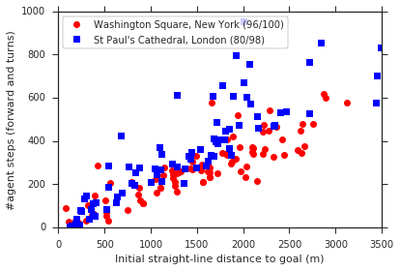

Curriculum Learning

In curriculum learning, the model is trained using simple examples in first steps. As soon as the model learns those examples, more complex and difficult examples would be fed to the model. In this paper, this approach is used to teach agent to navigate to further destinations. This courier task suffers from a common problem of RL tasks which is sparse rewards (similar to Montezuma’s Revenge) . To overcome this problem, a natural curriculum scheme is defined, in which sampling each new goal would be within 500m of the agent’s position. This is called phase 1. In phase 2, the maximum range is gradually increased to cover the full graph (3.5km in the smaller New York areas, or 5km for central London or Downtown Manhattan)

Curriculum learning was first introduced by Bengio et. al in 2009. It serves as a continuation method for non-convex optimization, and improves training time by injecting noisy data. One example outside this paper for curriculum learning is outlined below:

1. We aim to classify shapes within the following three classes: triangles, ellipses, and rectangles. We can create a curriculum by first starting with a simplified dataset that consists of only special cases of these three classes: equilateral triangles, circles, and squares. By first training on these special cases, and then introducing the full model, we can allow the algorithm to converge more quickly towards a local minima before providing "harder" examples. Feeding only these specialized examples also serves as a method to make the classes fall on more distinct manifold locations; with less overlap, these networks will perform better when noise is later added as well.

Results

In this section, the performance of the proposed architectures on the courier task is shown.

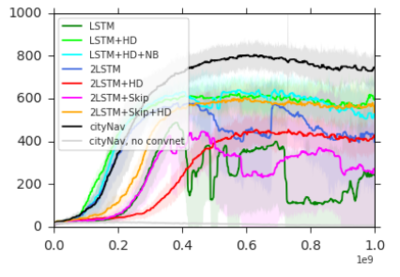

It is first shown that the CityNav agent, trained with curriculum learning, succeeds in learning the courier task in New York, London and Paris. Figure 5 compares the following agents:

1. Goal Navigation agent.

2. City Navigation Agent.

3. A City Navigation agent without the skip connection from the vision layers to the policy LSTM. This is needed to regularise the interface between the goal LSTM and the policy LSTM in multi-city transfer scenario.

Also, a lower bound (Heuristic) and an upper bound(Oracle) on the performance is considered. As it is said in the paper: "Heuristic is a random walk on the street graph, where the agent turns in a random direction if it cannot move forward; if at an intersection it will turn with a probability [math]\displaystyle{ P=0.95 }[/math]. Oracle uses the full graph to compute the optimal path using breadth-first search.". As it is clear in Figure 5, CityNav architecture with the previously mentioned architecture attains a higher performance and is more stable than the simpler GoalNav agent.

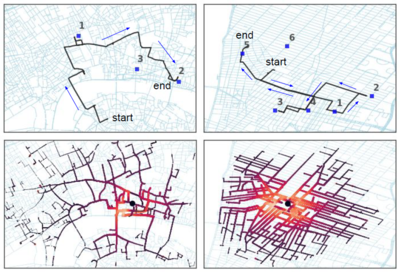

The trajectories of the trained agent over two 1000 step episodes and the value function of the agent during navigation to a destination is shown in Figure 6.

Figure 7 shows that navigation policy is learned by agent successfully in St Paul’s Cathedral in London and Washington Square in New York.

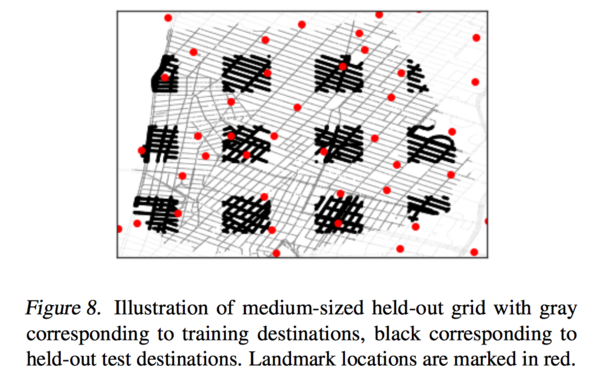

The authors mask 25% of the possible goals and train on the remaining ones in order to investigate the generalisation capability of a trained agent. At test time the agent is evaluated only on its ability to reach goals in the held-out areas. The agent is still able to traverse through the areas, however, it just never samples a goal there. The CityNav agent is trained for 1B steps and then the weights of the agent are frozen and performance evaluated on held-out areas for 100M steps. Experiments showed decreasing performance of the agents as the held-out area size increased. It was observed that while the agent misses more goal destinations on larger held-out grids it still manages to travel half the distance to the goal withing a similar time. This suggests that the agent has an approximate held-out goal representation that enables it to head towards it until it gets close to the goal and the representation is no longer useful for the final approach.

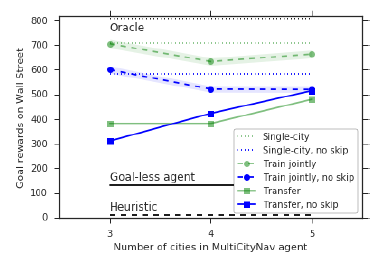

A critical test for this article is to transfer model to new cities by learning a new set of landmarks, but without re-learning visual representation, behaviors, etc. Therefore, the MultiCityNav agent is trained on a number of cities besides freezing both the policy LSTM and the convolutional encoder. Then a new locale-specific goal LSTM is trained. The performance is compared using three different training regimes, illustrated in Fig. 9: Training on only the target city (single training); training on multiple cities, including the target city, together (joint training); and joint training on all but the target city, followed by training on the target city with the rest of the architecture frozen (pre-train and transfer). Figure 10 shows that transferring to other cities is possible. Also, training the model on more cities would increase its effectiveness. According to the paper: "Remarkably, the agent that is pre-trained on 4 regions and then transferred to Wall Street achieves comparable performance to an agent trained jointly on all the regions, and only slightly worse than single-city training on Wall Street alone". Training the model in a single city using skip connection is useful. However, it is not useful in multi-city transferring.

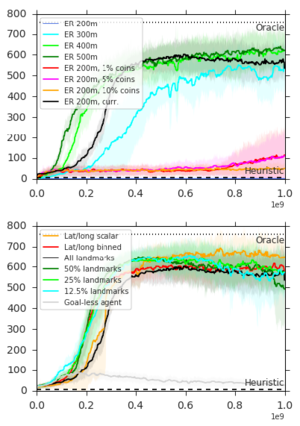

Giving early rewards before agent reaches the goal or adding random rewards (coins) to encourage exploration is investigated in this article. Figure 11a suggests that coins by themselves are ineffective as our task does not benefit from wide explorations. Also, as it is clear from Figure 11b, reducing the density of the landmarks does not seem to reduce the performance. Based on the results, authors chose to start sampling the goal within a radius of 500m from the agent’s location, and then progressively extend it to the maximum distance an agent could travel within the environment. In addition, to asses the importance of the goal-conditioned agents, a Goal-less CityNav agent is trained by removing inputs gt. The poor performance of this agent is clear in Figure 11b. Furthermore, reducing the density of the landmarks by the ratio of 50%, 25%, and 12:5% does not reduce the performance that much. Finally, some alternative for goal representation is investigated:

a) Latitude and longitude scalar coordinates normalized to be between 0 and 1. This is based on the region which the agent navigates.

b) Binned representation.

The latitude and longitude scalar goal representations perform the best. However, since the all landmarks representation performs well while remaining independent of the coordinate system, we use this representation as the canonical one.

Conclusion

In this paper, a deep reinforcement learning approach that enables navigation in cities is presented through the use of Google StreetView for its photographic content and worldwide coverage. Furthermore, the authors discussed a new courier task and a multi-city neural network agent architecture that is transferable to new cities. A successful navigation architecture is presented which relies on integration of general policies with locale-specific knowledge.

Future Works

The paper uses staic Google Street View images. However, this means that there are some more information that we can get from the images beyond the route. Even though it is not the central focus of the paper, it would be extremely useful if we can incorporate such information for effective route-building or planning.

Critique

1. It is not clear how this model is applicable to the real world. A real-world navigation problem needs to detect objects, people, and cars. However, it is not clear whether they are modeling them or not. From what I understood, they did not care about the collision, which is against their claim that it is a real-world problem.

2. This paper is only using static Google Street View images as its primary source of data. But the authors must at least complement this with other dynamic data like traffic and road blockage information for a realistic model of navigation in the world. Also, this is quite understandable not to use maps but is not clear why have they not used GPS to know their position and maybe even made up with a map. This can be something useful in an emergency or even for investigating places that are not known or there is no access to them. The resulting map could be easily compared with the real one and could also be used in training to achieve higher performance. The availability should not be a serious problem because if they are simulating a real city and the google images are available, why should not GPS be? What is the intuition? At least, a complementary description on this could be helpful.

3. The 'Transfer in Multi-City Experiments' results could be strengthened significantly via cross-validation (only Wall Street, which covers the smallest area of the four regions, is used as the test case). Additionally, the results do not show true 'multi-city' transfer learning, since all regions are within New York City. It is stated in the paper that not having to re-learn visual representations when transferring between cities is one of the outcomes, but the tests do not actually check for this. There are likely significant differences in the features that would be learned in NYC vs. Waterloo, for example, and this type of transfer has not been evaluated.

4. The proposed navigation model could be limited by its reliance on pre-defined landmarks, which appears to be strategically placed evenly spreading across each city. This could limit the agent's deployability to new cities.

Reference

[1] Espeholt, Lasse, Soyer, Hubert, Munos, Remi, Simonyan, Karen, Mnih, Volodymir, Ward, Tom, Doron, Yotam, Firoiu, Vlad, Harley, Tim, Dunning, Iain, Legg, Shane, and Kavukcuoglu, Koray. Impala: Scalable distributed deep-rl with importance weighted actor-learner architec- tures. arXiv preprint arXiv:1802.01561, 2018.

[2] Mnih, Volodymyr, Badia, Adria Puigdomenech, Mirza, Mehdi, Graves, Alex, Lillicrap, Timothy, Harley, Tim, Silver, David, and Kavukcuoglu, Koray. Asynchronous methods for deep reinforcement learning. In Interna- tional Conference on Machine Learning, pp. 1928–1937, 2016.