Distributed Representations of Words and Phrases and their Compositionality

Distributed Representations of Words and Phrases and their Compositionality [1] is a widely cited paper published in 2013 by a Google team led by Tomas Mikolov. It is known for its impact on the field of Natural Language Processing (NLP), and the techniques described below are still in use today.

Presented by

- F. Jiang

- J. Hu

- Y. Zhang

Introduction

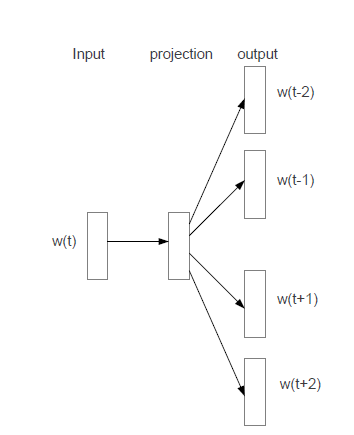

The Skip-gram model is an NLP method in which a given word (the "target") is fed into the model, which outputs a vector giving the probability of finding each vocabulary word in the immediate context (the "surroundings") of the target word. Words or phrases that appear together more regularly in the training sample are thus deemed to have similar contexts, and the trained model assigns a higher output probability to one when the other is the input. For example, inputting the word "light" will probably result in an output vector with high values for the words "show" and "bulb".
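The input-to-output mapping described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the five-word vocabulary, the embedding size, the random initialisation, and the name `context_probabilities` are all assumptions made for the example. It uses a plain softmax over dot products between a target vector and every context vector, and because the vectors are untrained, the printed probabilities carry no meaning beyond showing the shape of the output.

```python
import math
import random

# Hypothetical toy vocabulary; a real model would use a far larger one.
vocab = ["light", "show", "bulb", "river", "bank"]
word_to_index = {word: i for i, word in enumerate(vocab)}

embedding_dim = 3  # arbitrary size chosen for illustration
rng = random.Random(0)

# One "input" vector per target word and one "output" vector per context word.
# They are randomly initialised here; training would adjust them.
input_vectors = [
    [rng.uniform(-0.5, 0.5) for _ in range(embedding_dim)] for _ in vocab
]
output_vectors = [
    [rng.uniform(-0.5, 0.5) for _ in range(embedding_dim)] for _ in vocab
]


def context_probabilities(target):
    """Return a softmax distribution over the vocabulary for one target word."""
    v_target = input_vectors[word_to_index[target]]
    # Score every candidate context word by its dot product with the target.
    scores = [
        sum(a * b for a, b in zip(v_target, v_out)) for v_out in output_vectors
    ]
    max_score = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = context_probabilities("light")
for word, p in zip(vocab, probs):
    print(f"{word}: {p:.3f}")
```

The output has one entry per vocabulary word and sums to 1. After training on a suitable corpus, the entries for words such as "show" and "bulb" would be expected to dominate when the input is "light".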

To work, Skip-gram requires a pre-specified vocabulary of words or phrases that contains every possible target and context word.
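As a rough sketch of what fixing the vocabulary in advance might look like, the following Python builds a word-to-index table from a toy corpus and then lists the (target, context) pairs that fall inside a context window. The corpus, the `min_count` and `window` parameters, and the helper names `build_vocab` and `skipgram_pairs` are hypothetical choices for illustration, not details taken from the paper.

```python
from collections import Counter

# Hypothetical toy corpus, already split into tokens.
corpus = [
    ["the", "light", "bulb", "is", "bright"],
    ["the", "light", "show", "is", "bright"],
]


def build_vocab(sentences, min_count=1):
    """Map every word seen at least min_count times to an integer index."""
    counts = Counter(word for sentence in sentences for word in sentence)
    words = sorted(w for w, c in counts.items() if c >= min_count)
    return {word: i for i, word in enumerate(words)}


def skipgram_pairs(sentences, vocab, window=1):
    """List (target_index, context_index) pairs within the given window."""
    pairs = []
    for sentence in sentences:
        # Words outside the vocabulary cannot be targets or contexts.
        ids = [vocab[w] for w in sentence if w in vocab]
        for pos, target in enumerate(ids):
            start = max(0, pos - window)
            stop = min(len(ids), pos + window + 1)
            for ctx_pos in range(start, stop):
                if ctx_pos != pos:
                    pairs.append((target, ids[ctx_pos]))
    return pairs


vocab = build_vocab(corpus)
pairs = skipgram_pairs(corpus, vocab, window=1)
print(len(vocab), "vocabulary entries,", len(pairs), "training pairs")
```

Any word dropped from the vocabulary (for example by raising `min_count`) is simply skipped when pairs are generated, which is one way to see why the vocabulary must cover every target and context word the model is expected to handle.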

Skip-gram Model

Hierarchical Softmax

Negative Sampling

Subsampling of Frequent Words

Empirical Results

References

[1] Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg Corrado, Jeffrey Dean (2013). Distributed Representations of Words and Phrases and their Compositionality. arXiv:1310.4546.

[2] McCormick, C. (2017, January 11). Word2Vec Tutorial Part 2 - Negative Sampling. Retrieved from http://www.mccormickml.com