Multi-scale Dense Networks for Resource Efficient Image Classification

Introduction

Multi-Scale Dense Networks (MSDNets) are designed to address the growing demand for efficient object recognition. Existing recognition networks tend to fall into one of two groups: small, efficient networks that perform poorly on hard examples, or large networks that perform well on all examples but require substantial computational resources.

To be efficient across all levels of difficulty, MSDNets propose a single network that can output accurate classifications under varying computational budgets. The network is evaluated in two settings:

- Anytime prediction: the network can be interrupted at any point and must return the best prediction it has produced so far.

- Budgeted batch classification: a fixed computational budget is shared across a batch of test examples, and the goal is the highest accuracy on the batch within that budget.
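A minimal sketch of the anytime setting, assuming each intermediate classifier has already produced a label and has a known cumulative cost (the cost of running the network up to that classifier). The function name `anytime_predict`, the labels and the cost values are hypothetical and only illustrate the idea of returning the most recent prediction when interrupted.

```python
from typing import List, Optional, Tuple


def anytime_predict(exits: List[Tuple[float, str]], budget: float) -> Optional[str]:
    """Return the prediction of the deepest classifier reachable within `budget`.

    `exits` lists (cumulative cost to reach classifier k, label predicted by
    classifier k) in network order. When the budget runs out, the most recent
    prediction is reported; None means no classifier was reached in time.
    """
    latest: Optional[str] = None
    for cumulative_cost, label in exits:
        if cumulative_cost > budget:
            break  # interrupted before this classifier produces an output
        latest = label
    return latest


# Hypothetical costs (arbitrary units) and predictions for a single image.
exits = [(1.0, "cat"), (2.5, "dog"), (4.0, "dog")]
print(anytime_predict(exits, budget=3.0))  # dog
print(anytime_predict(exits, budget=0.5))  # None
```

In the budgeted batch setting the same early exits are used, but the decision to stop is driven by confidence rather than by an external interruption.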

Related Networks

Computationally Efficient Networks

Existing methods for making an accurate network more efficient include weight pruning, quantization of weights (during or after training), and knowledge distillation, which trains a smaller student network to match a larger teacher network.

Resource Efficient Networks

Unlike the methods above, resource-efficient approaches treat limited computational resources as part of the architecture or the loss. Examples of work in this area include:

- Efficient variants of existing state-of-the-art networks

- Gradient boosted decision trees, which incorporate computational limitations into the training

- FractalNets

- Adaptive computation time method

Related architectures

MSDNets pull on concepts from a number of existing networks:

- Neural fabrics and related multi-scale architectures quickly establish low-resolution feature maps, which are integral to classification.

- Deeply supervised nets introduced the use of multiple classifiers throughout the network.

- The feature concatenation method from DenseNets keeps later classifiers from being disrupted by weight updates driven by earlier classifiers.

Multi-Scale Dense Networks

Integral Contributions

MSDNets aim to provide efficient classification at varying computational costs by building a single network that outputs predictions at multiple depths. This may seem straightforward, since intermediate classifiers can be attached to any existing network, but doing so naively raises two major problems.

Coarse Level Features Needed For Classification

Coarse-level features are needed to capture the context of a scene. In typical CNNs, features progress from fine to coarse as depth increases, so classifiers attached to early layers, which hold only fine-scale features, lack this context and produce inaccurate predictions.

To address this issue, MSDNets use an architecture with multi-scale feature maps. Early in the network, a fixed number of scales, ranging from fine to coarse, is established. These scales are maintained throughout the network, so that at every depth there are coarse-level features available for classification and fine-level features for learning more difficult representations.

Training of Early Classifiers Interferes with Later Classifiers

When training a network with intermediate classifiers, the early classifiers push the early layers to learn features tailored to those classifiers. These features may be less useful to the later classifiers, degrading their accuracy.

MSDNets use dense connectivity to avoid this issue. Because each layer receives the concatenated outputs of all preceding layers, gradients are spread across all available features rather than funneled through a single path. Later layers therefore do not depend on any single preceding layer and can learn new features that earlier layers have ignored.
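To make the concatenation concrete, the sketch below tracks how many input channels each layer sees in a densely connected stack, assuming DenseNet-style layers that each add a fixed number of channels. The names `dense_input_channels` and `growth_rate`, and the values used, are hypothetical; the point is only that layer l reads the outputs of every earlier layer directly.

```python
from typing import List


def dense_input_channels(initial_channels: int, growth_rate: int, num_layers: int) -> List[int]:
    """Number of input channels seen by each layer of a densely connected stack.

    Layer l reads the concatenation of the stack input and the outputs of
    layers 1..l-1, each of which contributes `growth_rate` channels.
    """
    channels: List[int] = []
    current = initial_channels
    for _ in range(num_layers):
        channels.append(current)  # this layer reads everything produced so far
        current += growth_rate    # its output is concatenated onto the stack
    return channels


print(dense_input_channels(initial_channels=16, growth_rate=6, num_layers=4))
# [16, 22, 28, 34]
```

Because every earlier output stays in the concatenated input, a later layer can draw on features that an intermediate classifier did not rely on.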

Architecture

(Figure: MSDNet architecture)

The architecture of an MSDNet can be thought of as a grid of convolutions with a set number of layers (depth) and a set number of scales. Layers allow the network to build on previous information to generate more accurate predictions, while the scales allow it to maintain coarse-level features throughout.

The first layer is a special mini-CNN that quickly fills every scale with features. Each subsequent layer is computed from the outputs of previous layers: its output at scale s is the concatenation of a regular convolution over all prior outputs at scale s and a strided convolution over all prior outputs at the previous (next-finer) scale. At the finest scale, only the regular convolution is used.
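The connectivity rule above can be written as follows, using notation assumed here: $x_\ell^s$ is the output of layer $\ell$ at scale $s$ (with $s = 1$ the finest), $h$ is a regular convolutional layer, $\tilde{h}$ a strided convolutional layer, $x_0$ the input image, and $[\cdot]$ concatenation along the channel dimension.

```latex
\begin{aligned}
x_1^1 &= h_1^1(x_0), \qquad x_1^s = \tilde{h}_1^s\big(x_1^{s-1}\big) \quad (s > 1),\\
x_\ell^1 &= h_\ell^1\big([x_1^1, \dots, x_{\ell-1}^1]\big) \quad (\ell > 1),\\
x_\ell^s &= \Big[\tilde{h}_\ell^s\big([x_1^{s-1}, \dots, x_{\ell-1}^{s-1}]\big),\;
             h_\ell^s\big([x_1^{s}, \dots, x_{\ell-1}^{s}]\big)\Big] \quad (\ell > 1,\ s > 1).
\end{aligned}
```

The first row describes the initial mini-CNN, which fills the coarser scales by repeatedly downsampling; the last row is the general case combining both convolution paths.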

The classifiers are run on the concatenation of all of the coarsest outputs from the preceding layers.
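A small sketch of the same connectivity at the level of indices only, with no tensors. `msdnet_inputs` and `classifier_inputs` are hypothetical helpers that list which earlier feature maps (layer, scale) each feature map and each classifier reads, assuming scale 1 is the finest and the last scale is the coarsest.

```python
from typing import Dict, List, Tuple

Node = Tuple[int, int]  # (layer index, scale index); scale 1 is the finest


def msdnet_inputs(num_layers: int, num_scales: int) -> Dict[Node, List[Node]]:
    """Map each feature map (layer, scale) to the earlier feature maps it is computed from."""
    inputs: Dict[Node, List[Node]] = {}
    for s in range(1, num_scales + 1):
        # First layer: (1, 1) reads the input image (empty list here);
        # coarser scales downsample the next-finer scale of the same layer.
        inputs[(1, s)] = [] if s == 1 else [(1, s - 1)]
    for layer in range(2, num_layers + 1):
        for s in range(1, num_scales + 1):
            same_scale = [(j, s) for j in range(1, layer)]  # regular convolution path
            finer_scale = [(j, s - 1) for j in range(1, layer)] if s > 1 else []  # strided path
            inputs[(layer, s)] = finer_scale + same_scale
    return inputs


def classifier_inputs(layer: int, num_scales: int) -> List[Node]:
    """Coarsest-scale feature maps read by a classifier attached after `layer`."""
    return [(j, num_scales) for j in range(1, layer + 1)]


conn = msdnet_inputs(num_layers=3, num_scales=3)
print(conn[(3, 2)])             # [(1, 1), (2, 1), (1, 2), (2, 2)]
print(classifier_inputs(3, 3))  # [(1, 3), (2, 3), (3, 3)]
```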

Loss Function

The loss is a weighted sum of each classifier's cross-entropy (logistic) loss, averaged over the training samples. The weights can be chosen to reflect prior knowledge of the computational budget, but the results show that setting all weights to 1 also works well.
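A minimal sketch of this loss in plain Python, assuming each classifier outputs raw logits. It computes $\frac{1}{|D|}\sum_{(x,y)\in D}\sum_k w_k\,L(f_k(x), y)$, where $f_k$ is the kth classifier, $w_k$ its weight and $L$ the cross-entropy loss; the function names are hypothetical.

```python
import math
from typing import List, Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross-entropy (multiclass logistic loss) for a single example."""
    m = max(logits)  # shift by the max logit for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def weighted_multi_exit_loss(
    logits_per_classifier: List[List[List[float]]],  # [k][i] -> logits of classifier k on sample i
    targets: List[int],
    weights: List[float],  # w_k, one per classifier
) -> float:
    """Average over samples of sum_k w_k * cross_entropy(f_k(x), y)."""
    total = 0.0
    for w_k, logits_k in zip(weights, logits_per_classifier):
        total += w_k * sum(cross_entropy(z, y) for z, y in zip(logits_k, targets))
    return total / len(targets)


# Two classifiers, two samples; the deeper classifier is more confident.
logits = [
    [[2.0, 0.0], [0.0, 2.0]],  # classifier 1
    [[4.0, 0.0], [0.0, 4.0]],  # classifier 2
]
loss = weighted_multi_exit_loss(logits, targets=[0, 1], weights=[1.0, 1.0])
print(loss)
```

Setting every `w_k` to 1 corresponds to the uniform weighting mentioned above.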

Computational Limit Inclusion

In the budgeted batch setting, the network aims for the best overall accuracy on a batch of test samples under a fixed computational budget. It does so by spending less computation on easy examples so that more is available for hard ones: a sample exits at an intermediate classifier as soon as that classifier's confidence exceeds a preset threshold. The thresholds must satisfy the budget constraint $|D_{\text{test}}| \sum_k q_k C_k \le B$, where $|D_{\text{test}}|$ is the number of test samples, $B$ is the total budget, $C_k$ is the computational cost of obtaining an output from the kth classifier, and $q_k$ is the probability that a sample exits at the kth classifier. Assuming every classifier has the same probability $q$ of exiting a sample that reaches it, $q_k = z(1-q)^{k-1}q$, where $z$ normalizes the $q_k$ to sum to 1. Solving the constraint for $q$ gives the target exit probabilities $q_k$, from which the thresholds are set.
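A sketch of solving the budget constraint for $q$, assuming the cumulative costs $C_k$ are known and increase with $k$. The expected per-sample cost falls as $q$ grows (samples exit earlier), so bisection finds the smallest feasible $q$, which spends as much of the budget as possible. The function names and the example numbers are hypothetical.

```python
from typing import List


def exit_probabilities(q: float, num_classifiers: int) -> List[float]:
    """q_k = z * (1 - q)**(k - 1) * q, with z chosen so the q_k sum to 1."""
    unnormalized = [(1.0 - q) ** (k - 1) * q for k in range(1, num_classifiers + 1)]
    z = 1.0 / sum(unnormalized)
    return [z * p for p in unnormalized]


def expected_cost(q: float, costs: List[float]) -> float:
    """Expected per-sample cost sum_k q_k * C_k."""
    return sum(p * c for p, c in zip(exit_probabilities(q, len(costs)), costs))


def solve_exit_rate(costs: List[float], budget: float, num_test: int, iters: int = 60) -> float:
    """Smallest q in (0, 1] with num_test * sum_k q_k C_k <= budget, found by bisection."""
    if num_test * expected_cost(1.0, costs) > budget:
        raise ValueError("budget is below the cost of exiting every sample at the first classifier")
    lo, hi = 1e-9, 1.0  # hi is always feasible
    if num_test * expected_cost(lo, costs) <= budget:
        return lo  # loose budget: the near-uniform exit distribution already fits
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if num_test * expected_cost(mid, costs) <= budget:
            hi = mid
        else:
            lo = mid
    return hi


costs = [1.0, 2.0, 4.0]  # hypothetical cumulative cost C_k of reaching classifier k
q = solve_exit_rate(costs, budget=200.0, num_test=100)
print(round(q, 4))  # 0.2929
print([round(p, 3) for p in exit_probabilities(q, len(costs))])
```

Note that under this parameterization the exit distribution never puts more weight on later classifiers than a uniform split, which is why a very loose budget simply returns the smallest $q$.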

Experiments

On CIFAR-10 and CIFAR-100, MSDNets are compared with ensembles and multi-classifier versions of ResNets and DenseNets, as well as with FractalNet.

On ImageNet, MSDNets are compared with ensembles and individual versions of ResNets and DenseNets.

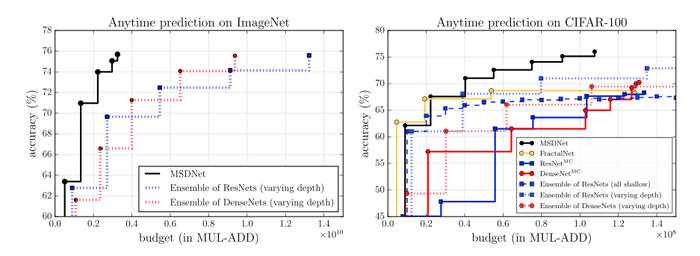

Anytime Prediction

In anytime prediction, MSDNets achieve high accuracy with very little budget and remain above the alternative methods as the budget increases.

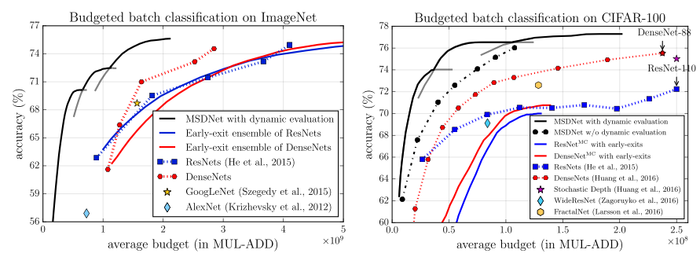

Budget Batch

For budgeted batch classification, three MSDNets are designed, each with classifiers set up for a different range of budget constraints. On both datasets, MSDNets outperform all alternative methods while using only a fraction of their computational budget.

Critique

The problem formulation and evaluation scenarios were well designed, and according to independent reviews, the results were reproducible. Where the paper could improve is in explaining how to implement the thresholds: it is not clear how the validation set is used to set each threshold value.
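One way to fill that gap, as a sketch under our own assumptions rather than the authors' code: given target exit probabilities $q_k$ from the budget constraint, walk through the classifiers in order and set each threshold so that roughly $|D_{\text{val}}| \, q_k$ of the remaining validation samples exit there. Confidence is assumed here to be the maximum softmax probability, and `fit_thresholds` and `exit_index` are hypothetical names.

```python
import math
from typing import List, Sequence


def fit_thresholds(val_confidences: Sequence[Sequence[float]], exit_probs: Sequence[float]) -> List[float]:
    """Choose one confidence threshold per classifier from validation data.

    val_confidences[i][k] is classifier k's confidence on validation sample i.
    Thresholds are set greedily so that about n * exit_probs[k] samples exit at
    classifier k (ties in confidence can make the counts approximate).
    """
    n = len(val_confidences)
    remaining = list(range(n))
    thresholds: List[float] = []
    for k in range(len(exit_probs) - 1):
        quota = round(n * exit_probs[k])
        ranked = sorted(remaining, key=lambda i: val_confidences[i][k], reverse=True)
        if quota == 0 or not ranked:
            thresholds.append(math.inf)  # no sample exits at this classifier
            continue
        chosen = ranked[:quota]
        thresholds.append(val_confidences[chosen[-1]][k])  # lowest confidence that still exits
        exited = set(chosen)
        remaining = [i for i in remaining if i not in exited]
    thresholds.append(-math.inf)  # the final classifier always answers
    return thresholds


def exit_index(confidences: Sequence[float], thresholds: Sequence[float]) -> int:
    """Index of the first classifier whose confidence reaches its threshold."""
    for k, (c, t) in enumerate(zip(confidences, thresholds)):
        if c >= t:
            return k
    return len(thresholds) - 1


val = [
    [0.9, 0.95, 0.99],
    [0.6, 0.7, 0.8],
    [0.8, 0.9, 0.95],
    [0.4, 0.85, 0.9],
]
thresholds = fit_thresholds(val, exit_probs=[0.5, 0.25, 0.25])
print(thresholds)                                # [0.8, 0.85, -inf]
print([exit_index(c, thresholds) for c in val])  # [0, 2, 0, 1]
```

At test time, `exit_index` applies the fitted thresholds to each sample, so the budget holds in expectation if the test data resembles the validation data.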