stat441w18/e-gan

Presented by

1. Yufeng Yue

2. Shanzi Wang

3. Yumeng Li

4. Yuyang Bao

5. Yun Shi

6. Wan Feng Cai

7. Ki Beom Lee

8. Qian Xiang

Introduction

In recent years, Generative Adversarial Networks (GAN) have emerged as a powerful method for learning generative models with real-world data. However, existing GANs (GAN and its variants) is experiencing training problems such as instability and mode collapse.

In this paper, authors proposed a novel GAN framework called Evolutionary Generative Adversarial Networks (E-GAN) for stable GAN training and improved generative performance. Compared with existing GANs, which employ a pre-defined adversarial objective function alternately training a generator and a discriminator, E-GAN uses different adversarial training objectives as mutation operations and evolves a population of generators to adapt to the environment (i.e., the discriminator). E-GAN also uses an evaluation mechanism to measure the quality and diversity of generated samples, such that only well-performing generator(s) are preserved and used for further training so that E-GAN would be able to overcome the limitations of an individual adversarial training objective and always preserves the best offspring, contributing to progress in and the success of GANs.

In a later section of the paper, experiment results on several datasets show that E-GAN achieves convincing generative performance and reduces the training problems inherent in existing GANs.

Related Works

Generative Adversarial Networks (GAN)

Evolutionary Algorithms

Method

Generative Adversarial Networks (GAN)

Evolutionary Algorithms

Mutations

Many mutation techniques can be used when we are producing the children nodes (or the next generation of data points). These mutation techniques each has their own training objectives, but they all attempt to get the generated distribution as close to the data distribution as possible. In this section, we will introduce 3 mutation techniques that we used inside this paper.

Minimax Mutation

A minimax mutation is defined as the following.

[math]\displaystyle{ M^{minimax}_G = {1/2}*E[log(1-D(G(z))] }[/math]

This definition of minimax is identical as the one in the original GAN. Given the optimal discriminator "D", the minimax mutation aims to minimize the Jensen-Shannon divergence (JSD) between the data distribution and the generated distribution. Although the minimax mutation is easy to understand and analyze, its practical performance isn't so great due to the generator's vanishing gradient. "If the support of two distributions lies in two manifolds, the JSD will be a constant, leading to the vanishing gradient." However, if the generated distribution overlaps with the data distribution, which means the discriminator cannot completely distinguish the two, the minimax mutation provides effective gradients and continually narrows the JSD between the data distribution and the generated distribution

Heuristic Mutation

Least-squares Mutation

Evaluation

First, we simply feed generator produced images into the discriminator D and observe the average value of the output, which we name the quality fitness score:

Fq = Ez[D(G(z))]

Then, we employ a similar principle to evaluate generator optimization stability and generative diversity as:

Fd = -logr||delta(D) - Ex[logD(x)] - Ez[log(1 - D(G(z)))]||:

Based on the aforementioned two fitness scores, the final evaluation (or fitness) function is:

F = Fq + gamma*Fd

where gamma >= 0. Overall, a relatively high fitness score F, leads to higher training efficiency and better generative performance.

E-GAN

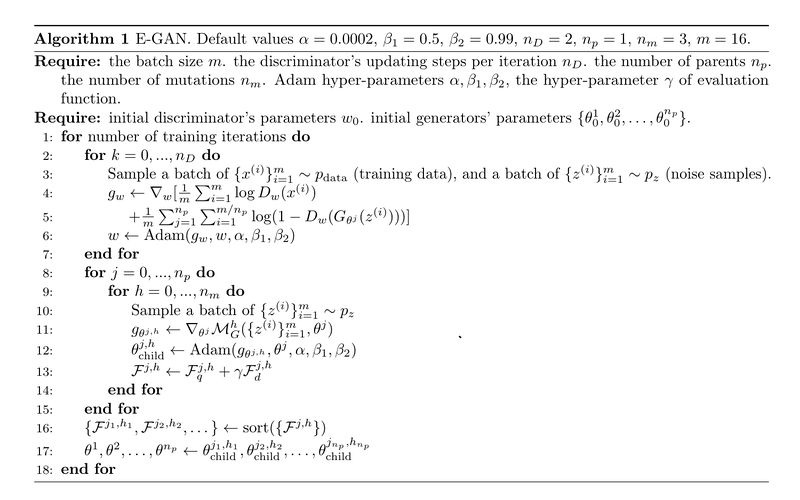

In this section, we are going to focus on the training algorithm of E-GAN. Before introducing the algorithm, let's briefly cover how E-GAN is different from GAN. The core concept of GAN, which is using adversial two-player game to train generator and discrimintor, G and D, still applies here. The only difference is how we update G and D. Ordinary GAN updates G and D alternatively, and for each updates, we are using stochastic gradients. However, as we discussed in the previous sections, alternatively updating G and D requires significant attention. We modify the way we update the generator G to improve stability and generative powers, using the concept of evolutionary algorithms. Below pseudo algorithm will explain what is really happening in the training algorithm. \begin{algorithm}

For each iterations,

1. Train D

- for number of steps you want,

- sample datas and noises with given minibatch size

- calculate sample gradient of total objective function

- use the calculated gredient to update the parameters for D ( with Adam )

2. Evolve G

- for each parents,

- for number of children you want,

- sample noises

- calculate sample gradient of mutation objective funtion

- use the calculated gradient to mutate parameters of G ( with Adam )

[ note that mutated parameters are now the parameters of the child ]

- evaluate fitness

3. Select G

- select according to fitness

\end{algorithm} As you can see, the first part ( updating D ) of the iteration is identical to the ordinary GAN. But for the second part, we adapt the evolutionary process. Note that the mutation process is in fact, we generate several identical copies of the previous known generator ( a parent ) and mutate the parameters. Mutation of the parameter is nothing more than calculate the gradient of mutation objective function and apply adam operation to generate new parameters for the child. And you repeat this process until we obtain predetermined number of children ( mutated generators ). Furthermore, we repeat this process for all parents available at each iterations. It is very important to know that the mutation objective function is a function of D(G(z)). Therefore, the evolutionary process takes account of newly updated discriminator D. Now after we generate all mutated G's, we evaluate survival fitness of each mutations. Finally, we sort the generators with their survial fitness values and use top corresponding mutated generators as parents for the next iteration. Here is the rigourous version of the algorithm, which is provided from the paper.

Experiments

Conclusions

In this paper, authors present E-GAN for effectively training deep generative models. They invent an evolutionary algorithm to evolve a population of generators to adapt to the dynamic environment. Experiments support that E-GAN improves the training stability of GAN models and achieves convincing performance in several image generation tasks. In the future, it will be interesting to focus on further exploring the relationship between the environment and evolutionary population and further improving generative performance.